Author Notes:

The following is a very long piece of writing. It grew out of a conversation I had with a young woman who showed interest in learning how to program and possibly entering the IT profession. It is also an attempt to put the realities of the Information Technology profession as it is today into perspective, so that a young woman interested in this field can make informed choices about how she may enter it, either professionally or out of personal interest.

Those who read this piece and would like to pursue further study are more than welcome to contact me with their questions and requests for assistance at support@blackfalconsoftware.com.

I will do everything I can to help you on this long but potentially exciting journey while also offering advice on how to avoid the most serious pitfalls you may encounter.

In addition, since this is such a long piece, it is also available in downloadable PDF form at the following address… https://1drv.ms/b/s!AnW5gyh0E3V-g2bQ4UCq4Df-V2tf

Opening Notes… And there are many…

In the past several years there has been a noticeable effort to recruit young, capable women into the Information Technology profession. From the articles I have come across on this subject, it appears that there is little understanding of why so many women left the field during the 1990s and early 2000s. As a result, many such efforts focus on technical training, with the underlying idea that women are as good as men in this field of endeavor. However, historically that has never been the point.

The profession used to have a female workforce of around 35% to 40%. That figure has since dwindled to approximately 17%. This reduction in female technical professionals did not come about from a lack of self-esteem but rather from a better understanding of how the field has evolved in recent years.

The real question is why any sane human being would want to engage in a profession that has few if any real professional standards; ever-changing technologies that prevent anyone from finely honing their preferred skill sets; increasingly thin knowledge bases about which technologies and processes actually get the job done; onerous working hours; and, most often, bad working conditions resulting from the band-aids being touted as the new software engineering standards? Only really crazy people would enter such an activity. And only really crazy people would rationalize that somehow this is all so much fun.

To be fair, there is a lot of fun to be had in this profession, but the enjoyment of creating a personal piece of quality work for another has been terribly overshadowed by sociological changes in US society that have found their way into other societies as well, though perhaps not as disastrously.

Women in human societies have often experienced, and continue to experience, a form of second-class citizenship due to their lack of masculine attributes. Even when they have adopted masculine psychological attributes, their acceptance is still in many respects viewed with suspicion simply because they have the underlying desire to create, nurture, and build; something most males of the species don’t seem to comprehend, let alone possess.

With the exception of such aberrations as the Hillary Clintons, Madeleine Albrights, and Margaret Thatchers of the world, along with their “protégés”, when can anyone remember the last time an intelligent woman demanded that a country go to war?

Surprising as this may sound, it is this sociology and its offshoots that have reduced the number of women in a profession that currently appears to cater only to those who don’t mind floundering around in the growing technological quagmire that is the US Information Technology profession.

I doubt any amount of technical education or esteem building seminars directed at young ladies will increase the dismal percentage of female workers in this activity. So far there have been no noticeable results to crow about.

A young woman I met while she was working at a discount pharmacy my wife and I patronize told me she was interested in learning about our profession, having seemingly come to the view that it shouldn’t be a “boys only” field. She was of course right, and my guess is that the substantial loss of our female counterparts has contributed significantly to the erratic nature of working in this field. Women often bring a soothing influence to the environments they inhabit, and there is really nothing soothing about having your life upended by the constant deployment cycles that Agile promoters have brought to the daily lives of professional developers. Notably, quite a few pieces have been written about the constant stresses of such development styles, which often lead to burnout.

The hype about how all this is so much fun, since we all get to learn continuously, is just that: “hype”, a marketing fantasy to get everyone to adopt the new tool, standard, or design pattern being bandied about on all the technical sites in order to keep them all relevant.

Never is there any discussion about building something of quality with tools that we already have at our disposal. That just isn’t “cool” anymore.

Too often, our profession is viewed through a male-geek interpretation of the environments we work in. However, that is Hollywood pushing its image management again, as our actual work is far different from its popular portrayals. And the realities of my field, as I have just described them, are directly responsible both for many young women shying away from it as a potential career path and for the many women professionals who are currently leaving it or have already done so.

Nonetheless, these realities, like all realities, have two sides to the coin: they carry many negatives as well as many positives, depending on how you approach your interest in learning to become a developer, whether as a hobbyist or a professional, each of which requires the same type of training and study. However, if you keep an open mind and study the field through the reports of actual analysts whose job is to be impartial about it, you will be able to navigate its glaring negative aspects and carve your own path to a successful and satisfying endeavor.

However, as a senior software engineer with 44 years in the profession, 42 of them in corporate environments, I find it very difficult to relate to a young woman who may be interested in entering the field either out of personal interest or as a possible source of income.

My perspectives on the field are far different from those of someone with even half of my experience, let alone less. I have worked through three major eras of the Information Technology profession, and I can hardly imagine the perspectives a young woman approaching the field today may have.

As a result, I have prepared a lot of material for you to read. There is a great deal of history to my profession that will give you an understanding of how the field developed to where it is today. It will also note where the female proportion of the profession began to decrease over the years.

You may question the reason for providing you with such a history, but as you read it you will see the major eras of hardware and software development that senior professionals such as myself have experienced, and why we hold the perspectives we do. This history will also demonstrate that the field is no longer as easily approachable for young people today, simply because of the massive increase in complexity involved in learning even a single aspect of it, such as a programming language.

Do not try to learn or understand it all in one reading since much of it will come by simply studying and tinkering with a programming language and its supporting technologies.

Beyond the historical notes, I provide a technical overview of programming languages and how they work from a very simple viewpoint. I also recommend what I believe would be the best language for you to begin with, and interestingly enough quite a few young professionals make the same recommendation. As you will note, there is some bias in this recommendation given my expertise in the Microsoft development environments, but I also provide sound reasoning for the selection.

You should also know that if you are seriously interested in learning the basics of this profession, I would be more than happy to correspond with you to answer any questions you may have, including discussing the various software tools you will need, as well as to help with the development environments you will begin to study.

I will also provide you with the two recommended texts you will find later in this paper, both of which were selected with the new programming student in mind. Both are available at Amazon.com.

As to why two texts, the reason is quite simple once you understand how such manuals are written. No manual, no matter the technical subject, ever covers all of the possible questions a student may have, unless of course you are taking a formal course for which a specific manual is used. Manuals are most often written from one of two perspectives: either from the vendor’s own detailed documentation of the technology, or from the experience of an author who has actually worked with it. The former type is somewhat more prevalent and has always been a sore point with professionals, since so much of that information is freely available on the vendors’ sites. However, for a new student, finding such information can be a difficult task in itself, since you need some understanding of the technology to find your way around such massive knowledge bases. So to begin such studies, printed manuals are almost always the best starting point, since they are clearly arranged by the most important topics, in the sequence in which they must be learned to make you a successful developer.

The first manual you will find in the list is “C# For Beginners: The tactical guidebook – Learn CSharp by coding”. This is the one you should start with, as it was designed completely for the beginning programmer. The other manual, from Microsoft Press, is also excellent and can be used for additional study and reference, which you will most likely need. Both of these manuals should answer many of the questions you may have during your studies. For any you have difficulty resolving, just send me an email describing what you are trying to accomplish and I will send you an answer you should be able to incorporate into your work.

Though I have never taught in a university setting, I have given technical classes in the corporate environments where I have been employed, as well as quite a number of technical seminars, all of which the attendees enjoyed. I have also trained several technical professionals in the technologies required for their positions.

I am also an ardent supporter of women technical professionals, simply because I believe that more of them would bring a sense of professional calm to an industry that has grown increasingly erratic, especially in the Microsoft environments in which I specialize.

So let’s begin…

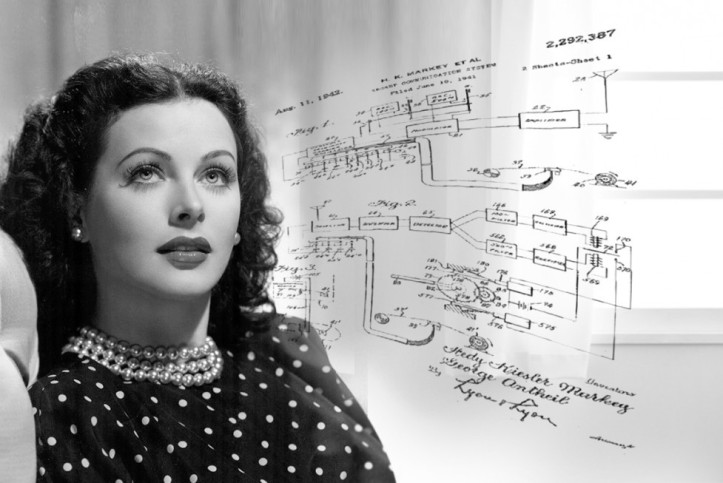

Actress Hedy Lamarr was also an inventor who, in the 1940s, co-invented a frequency-hopping technique often cited as a basis for today’s modern WiFi


The History…

The Beginnings

Commercial computers, and what is now known as the Information Technology profession, got their start in the 1950s when Remington Rand (which became Sperry Rand in 1955) introduced the first UNIVAC machines for the actual computerization of organizations. This introduction was a very limited one, and few organizations appeared interested in such an investment, as so much of the work remained within the purview of government and military/scientific organizations.

There is some dispute as to whether UNIVAC saw this lack of interest (through a study it initiated) as an omen of the future and decided not to invest further in commercializing such equipment, or whether its developments were somehow secreted away and adopted by the International Business Machines Corporation (IBM) through a somewhat questionable rights contract between the two organizations. In either case IBM, which came to fame with its punch-card and typewriter equipment, went on to dominate commercial computing, most notably with the IBM System/360 line of machines introduced in the 1960s. IBM also had a smaller line of scientific equipment which, interestingly enough, carried the same series number as the much larger UNIVAC mainframes: the 1100s. The low-end IBM business machine was the IBM 360-20, which I actually worked with as a UNIVAC systems engineer on a customer account around 1977, when I was engaged in a conversion project from the 360-20 to UNIVAC’s brand-new 9000 series of equipment, in this case the 9030 model.

These years were marked by the development of formal technology and development infrastructures for corporations, and by many advances in both UNIVAC and IBM equipment, with IBM eventually winning the largest share of the market.

Though working conditions in the profession at the time were quite arduous, the female contingent of professionals was well established and reached an all-time high of between 35% and 40% of the US technical workforce in the 1980s. Even during a time of greater misogyny (prejudice against and mistreatment of women) in the US workforce, women seemingly found a career in which they could feel more on an equal footing with the male workforce.

Into the 1970s

In the late 1970s, microprocessor technology was developing at an increasing rate, producing a fledgling hobbyist market in which the Z80 was one of the most popular processors. However, programming such units was rather difficult, since the software hadn’t caught up with the physical equipment in terms of easy-to-use commercial products. It was Commodore, a North American company, that produced one of the first viable programmable personal computers in the PET (1977) and, later, the Commodore 64 (1982). The latter had 64 kilobytes (a kilobyte being 1,024 bytes) of RAM, of which roughly 38 kilobytes were available to BASIC programs, while its operating system (OS) and BASIC interpreter were held in ROM chips on the unit’s motherboard (the actual, physical board in a personal computer where all the circuitry and the microprocessor reside).

As limited as the Commodore 64’s capabilities were, it became one of the most popular products in computer history and is still often cited as the best-selling single computer model of all time. Commodore later released a more capable model, the Commodore 128, in 1985, but the original 64 retained its popularity and continued to sell well. This equipment saw many software advances, including the beginnings of a fledgling gaming industry that is now worth billions.

Along with the rise of Commodore, two other companies entered the fray and produced similar equipment: Atari and Apple. By the early 1980s these three companies had become the standards in the emerging personal computer market.

Entering the technology profession is a difficult endeavor for anyone, male or female, but the effort, if one has the interest, should keep young women looking forward to helping change the landscape so that more young ladies from all backgrounds in life have the opportunity to not only enter the profession but have the courage to change it to the standards of a nurturing profession for the pursuit of excellence instead of the morass that currently exists for the pursuit of profit…


On to the 1980s

In 1981 IBM, seeing the proverbial “writing on the wall”, released the first commercially viable “business” personal computer, the IBM Personal Computer (model 5150), followed in 1983 by the IBM PC XT. There was little internal innovation in this introduction, as IBM used many off-the-shelf parts to assemble the machine. However, its contribution to this developing field was the way it made the machine work as a full-fledged business personal computer despite its limitations.

While Commodore, Atari, and Apple all relied on 8-bit chips such as the MOS Technology 6502 (a chip, or chipset, being the actual microprocessor hardware), with Motorola’s 68000 appearing in their later models, IBM built its machine around the Intel 8088, a variant of Intel’s 16-bit 8086. Intel was then still a young company, founded in 1968 by engineers who had left Fairchild Semiconductor.

Once the IBM PC was introduced, the commercial personal computer market took off, as the development of applications that both consumers and business organizations could use began in earnest.

Women were still a substantial percentage of the technical workforce as the 1990s emerged but discouragement to their entry into the profession would set in as the decade moved on.


And the 1990s

By the early 1990s my profession had literally leap-frogged from primitive personal computers to networked machines with advancing operating systems and a growing number of software development tools, to the point that microprocessor work developed into its own separate area of development for software engineers.

At this time the percentage of women professionals in the field still remained fairly steady at the original 35% to 40% of the technical workforce. However, this would soon change, as businesses expected developers working with these new personal computers to deliver results increasingly faster, given how easy such development was compared with similar work on the mainframes.

Around 1990, Microsoft Windows began gaining interest with the release of Windows 3.0, which was basically an operating system shell. In other words, it provided a graphical interface to the user while still relying on the underlying DOS operating system to manage the machine’s internal processes (e.g., writing to a hard drive). In 1991 Microsoft released the first developer-friendly language that could take advantage of the Windows graphical user interface, Visual Basic 1.0, and Windows 3.1 followed in 1992.

In that same year Sun Microsystems began work on what has become today’s popular Java language. Originally designed in a research project as a language that could support software in consumer appliances (such as television set-top boxes), it was not publicly released until it was re-oriented toward the Internet and broader application development in 1995. This language would become the foremost threat to the increasing dominance of the Microsoft Corporation, as Sun Microsystems wisely let Microsoft and its top competitor among language vendors at the time, Borland International, fight it out for dominance in software language development.

Borland International was the vendor of the critically acclaimed and popular Turbo Pascal and C++ compilers of the time, which Microsoft’s own C++ and BASIC products could not easily compete with. In the latter part of the 1990s Borland made critical tactical mistakes in its marketing by trying to match Microsoft’s scale of software offerings. Although Borland International was primarily a superb technical organization, it squandered its strengths on foolish corporate acquisitions, weakening its technical acumen and allowing Microsoft to slowly but steadily steamroll it out of the market.

Nonetheless, the modern era of software application development as you know it today was born with Visual Basic 1.0 in 1991, the first easy-to-use development environment for the graphical Windows framework.

Surprisingly, much of what many people today believe to be innovations of the Microsoft and Apple corporations was actually developed at the Xerox PARC research laboratory in the 1970s. The graphical display, windowed user interfaces, and Ethernet networking are just some examples of the inventions of this once powerful American research center, and the mouse, invented in the 1960s by Douglas Engelbart’s group at the Stanford Research Institute, was refined there as well. The story is that its decline came as a result of its greatest strength: research. However, the company was highly politicized, which prevented it from getting its inventions to market in a timely and cost-effective way.

Borland’s language offerings nonetheless remained quite popular for a time, since they targeted the DOS operating system directly, which is what Windows would sit atop until 1995, when Windows 95, Microsoft’s first mainstream 32-bit consumer operating system, was released. And with Turbo Pascal 4.0 in 1987, which laid the groundwork for the modern IDE (Integrated Development Environment), Borland secured its prominence in software languages until later versions of Visual Basic unseated it as Windows became the dominant OS for personal computers.

There were also several other languages at the time that had become very popular and were still in tremendous use for DOS-only applications, which could be run within the new Windows environment in what was called a DOS command shell.

These were the previously mentioned BASIC languages, Borland International’s Pascal compilers, and the excellent TopSpeed compilers from Europe’s Jensen & Partners International. The DBase database language systems were also being increasingly developed and refined, and were being released by new companies beyond DBase’s original publisher, Ashton-Tate.

Though it was Ashton-Tate that won fame for DBase, the language itself was originally developed at NASA’s Jet Propulsion Laboratory (JPL) by a single software engineer. At least, that is how we understood this history at the time. Years later I came across a different account of it, but that account appeared suspect to me. As a result, please do not quote me on this piece of historical information.

Nonetheless, an outgrowth of DBase development was the remarkable networked DBase system Vulcan, from Emerald Bay, in the early 1990s. Vulcan was the premier DBase system of its time, as it was not only capable of networking among peer-to-peer workstations (in this type of setup a workstation acts as both server and workstation, allowing other permitted workstations to access a centralized database store) but also provided a very powerful and rich DBase-like language.

With the exception of Borland’s Pascal compilers, no other language development ever came close to the Emerald Bay offering in uniqueness, simplicity, power, and capability. It was so easy to implement that an associate and I had a networked demonstration system up and running within 15 minutes (it took a few days to actually build the demo software).

Emerald Bay would meet a demise similar to Borland’s by making a critical marketing mistake. At the time, IBM’s famed SQL database language (Structured Query Language) was moving from the IBM mainframes into database application development on personal computers. SQL is a language that everyone eventually becomes familiar with if they pursue a career as a software developer.

Up to this point the majority of database systems relied on what were known as ISAM systems (Indexed Sequential Access Method), which provided both key-based and sequential data access. Key-based access lets an application find a record using a key defined for that record type, such as a Social Security number. Sequential access simply lets an application read a file of records from the first to the last.
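To make the two access styles concrete, here is a minimal in-memory sketch. It is only a toy model, not how any real ISAM product stored data on disk: the class `IsamFile`, the `Record` type, and the use of a Social Security number as the key are all hypothetical names chosen for illustration. Records are kept in key order for sequential reads, and a separate index maps each key to its position for keyed reads.

```python
from dataclasses import dataclass


@dataclass
class Record:
    ssn: str   # hypothetical key field, as in the text's example
    name: str


class IsamFile:
    """Toy model of indexed sequential access (hypothetical, in memory).

    Real ISAM systems kept both the ordered records and the index on disk;
    here a sorted list stands in for the data file and a dict for the index.
    """

    def __init__(self, records):
        # Keep records in key order so a sequential scan returns them sorted.
        self._records = sorted(records, key=lambda r: r.ssn)
        # Build the index: key -> position of that record in the "file".
        self._index = {r.ssn: pos for pos, r in enumerate(self._records)}

    def find(self, key):
        """Keyed access: jump straight to the record for this key, or None."""
        pos = self._index.get(key)
        return None if pos is None else self._records[pos]

    def scan(self):
        """Sequential access: read every record from the first to the last."""
        yield from self._records


people = IsamFile([
    Record("222-22-2222", "Grace"),
    Record("111-11-1111", "Ada"),
    Record("333-33-3333", "Hedy"),
])
print(people.find("111-11-1111").name)
print([r.name for r in people.scan()])
```

The keyed lookup goes straight to one record, while the scan walks the whole file in key order. Those are the two capabilities the text attributes to ISAM.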

Another form of data access at the time was known as DRAM, or Direct Record Address Method, which allows an application to retrieve a record based upon its internally stored physical address on a hard disk. To date, I believe this is still considered the fastest type of record access available. However, due to its complexity it was never formally carried over to database systems that would run on personal computers and on what would later be called servers (essentially powerful personal computers designed for many simultaneous users and heavy usage).
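The following is a rough illustration of why direct addressing is so fast. It uses a simplified stand-in for the method described above: rather than a stored physical disk address, each record's byte offset is computed from its record number and a fixed record size. No index lookup or scan is needed, just a single seek. The 16-byte record layout and the function names `write_record`/`read_record` are assumptions made for this sketch, and an in-memory buffer stands in for the disk file.

```python
import io
import struct

# Assumed fixed-length layout for this sketch: a 4-byte little-endian
# integer id followed by a 12-byte, space-padded ASCII name.
RECORD_FORMAT = "<i12s"
RECORD_SIZE = struct.calcsize(RECORD_FORMAT)  # 16 bytes per record


def write_record(f, record_number, rec_id, name):
    """Write a record at the byte offset computed from its record number."""
    f.seek(record_number * RECORD_SIZE)
    f.write(struct.pack(RECORD_FORMAT, rec_id, name.encode("ascii").ljust(12)))


def read_record(f, record_number):
    """Read one record directly: seek to its offset, with no index or scan."""
    f.seek(record_number * RECORD_SIZE)
    rec_id, raw_name = struct.unpack(RECORD_FORMAT, f.read(RECORD_SIZE))
    return rec_id, raw_name.decode("ascii").rstrip()


# An in-memory buffer stands in for a file on a hard disk.
disk = io.BytesIO()
write_record(disk, 0, 100, "Ada")
write_record(disk, 1, 101, "Grace")
write_record(disk, 2, 102, "Hedy")
print(read_record(disk, 2))
```

The speed comes from the arithmetic: record 2 lives at byte 32, so the program goes straight there. The same rigidity hints at the complexity the text mentions, since records must stay at known, fixed positions.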

These forms of data access became overly arduous when working with groups of records, since so much code had to be written to perform such retrievals. IBM’s SQL language, however, allowed for a far easier way to work with such record groups, which are called sets or record sets (sets of records).
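The contrast between record-at-a-time code and set-based SQL can be sketched with Python's built-in `sqlite3` module and a small in-memory table. The `employees` table and its data are invented for illustration. The first half mimics the older style, where the program reads every row and does the filtering and totaling itself. The second half hands the whole job to one SQL statement that describes the set of records wanted.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # throwaway in-memory database
conn.execute(
    "CREATE TABLE employees (ssn TEXT PRIMARY KEY, name TEXT, dept TEXT, salary INTEGER)"
)
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?, ?)",
    [
        ("111-11-1111", "Ada", "Engineering", 90000),
        ("222-22-2222", "Grace", "Engineering", 95000),
        ("333-33-3333", "Hedy", "Research", 88000),
    ],
)

# Record-at-a-time: read every row and filter/total in application code,
# much as ISAM-era programs had to.
total = 0
for dept, salary in conn.execute("SELECT dept, salary FROM employees"):
    if dept == "Engineering":
        total += salary

# Set-based: a single SQL statement describes the whole group of records.
(set_total,) = conn.execute(
    "SELECT SUM(salary) FROM employees WHERE dept = ?", ("Engineering",)
).fetchone()

print(total, set_total)
conn.close()
```

Both approaches arrive at the same total. With three rows the difference is trivial, but with many record groups and conditions the hand-written loops are what made the older methods so arduous.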

As a result, SQL became increasingly popular for database systems running on personal computers. Though all of the DBase languages, as well as the Vulcan language, had fourth-generation techniques for reading and writing record sets in their own database systems, the popularity of a generic database language grew to the point that DBase and DBase-like systems began to see a dramatic shift in how developers wanted to work with their data.

The people at Emerald Bay, like those at Borland International, were all superior professional internals developers, and they saw no need to move their product to the SQL language. They believed their product was far more efficient than any database predicated on SQL.

The people at Emerald Bay were correct in their technical preference, since most SQL systems rely on what are known as B-tree and B+tree structures for data access. Both are refinements of the ideas behind the original mainframe ISAM systems, with the SQL language acting, in effect, as a translation layer: it takes the SQL code, converts it into index-based access operations during a request to the database, and returns the data in a form that SQL, and therefore developers, can work with.

Such layers ALWAYS make an application less efficient, but with the increasing power of the physical hardware and microprocessors, such inefficiencies had less and less real-world impact on application performance.

Emerald Bay refused to understand this trend and their excellent product quickly found its way into the dustbins of technology history. Had they not made this mistake, the Vulcan language would probably be quite alive and well today due to its inherent power.

PowerBuilder, a product of Powersoft (acquired by the database company Sybase in 1995), offered a similar SQL-based development environment. However, due to the complexity of this product, it eventually lost out to the more popular Windows-based languages such as Visual Basic and the new Visual C++ compilers.

In the 1990s Microsoft and Borland International would also refine and develop new C++ compilers, and C++, together with its predecessor C, became the de facto standard for internals (i.e., operating systems) and game programming; C++ remains the standard for such work today. However, almost any modern language can now be used to develop games, thanks to the many interface libraries that have been built to interact with the underlying C++ libraries.

The 1990s also saw continued interest in the Modula languages (Modula-2 and Modula-3), which were essentially descendants of Pascal. Ada, the language developed for the US Department of Defense, was seeing increased interest as well. Decades earlier, COBOL, an English-like mainframe language, had been created in 1959 by a committee sponsored by the Department of Defense, drawing heavily on the earlier work of Grace Hopper (later a US Navy Rear Admiral). COBOL is still in heavy use around the world on the mainframes and has been refined into a modern microprocessor language as well. In an attempt to mirror COBOL’s success, the Department of Defense promoted Ada as a standard language of its own. However, since the language was very Pascal-like and primarily used for weapon systems, business software developers saw little need for it and largely ignored its refinements.

From the mid-1980s into the early 1990s, Microsoft and IBM partnered to create a universal operating system that could take advantage of the increasing graphics power of the developing personal computers. This new operating system was called OS/2.

Technically, OS/2 would become the best-designed operating system ever for the personal computer, with tremendous stability. Over time, however, Microsoft and IBM came to a major disagreement about how many aspects of their joint effort should work. As a result, Microsoft left the partnership to pursue its own advancements with its Windows operating system. IBM continued to refine OS/2 but marketed it so poorly that few organizations ever adopted it as a standard, though there was no question that it was far superior to what Microsoft was developing.

There were two other drawbacks to OS/2 that sank its ability to compete with the evolving Windows environments. First, it was far too complex to configure on a personal computer, with developers literally spending hours at a time tinkering with its huge number of settings to get it to work as desired. This was eventually corrected in its later versions, known as OS/2 Warp, but by then the perception of OS/2 had been so severely damaged by its complexity that these versions failed to attract much interest.

The other drawback was that IBM was primarily a mainframe company and wanted to maintain its dominance in the large-scale equipment market. As a result, it hobbled OS/2 by initially designing it to work well only on 286 machines (such as the IBM PC AT), the next advancement in PC hardware after the IBM XT. The problem was that by the time OS/2 had been refined for Intel 286 machinery, Intel had already released the far more advanced 386 chip, encouraging vendors to develop 386 machines immediately.

The Intel 386 chip initiated the age of the modern personal computer you are now familiar with, and at the time it presented a serious threat to IBM’s dominance in computer hardware, which was predicated on mainframe technologies. Though IBM also sold personal computers, they were not nearly as popular as comparable equipment from the growing industry competition.

The Intel 386 was Intel’s first fully 32-bit x86 microprocessor, and it provided a unique platform for advanced software development and new hardware design. Before it, the Commodore equipment and its immediate competition relied on 8-bit chips. When the IBM PC and PC XT were released, microprocessor technology moved into 16-bit chips (the 8088 processed 16 bits internally over an 8-bit external bus). The 286 used in the IBM PC AT was also a 16-bit processor, but it had 24 address lines, allowing it to reach 16 megabytes of memory.

With the release of the 386, 32-bit chips became the norm, and they remained so even after 64-bit chips were developed in the years that followed. It would take many years before 64-bit technology superseded 32-bit technology as it has today.

Each generation of chip technology brought not only more processing power but also more accessible memory. DOS, however, was limited to 640 kilobytes (KB) of memory for programs, the so-called conventional memory; in other words, the largest program that could run was 640 KB minus the memory used by the operating system itself. In most cases this left approximately 512 KB or more for a program. DOS was also a single-tasking system: it could run only one program at a time.
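The 640 KB figure falls out of the original IBM PC memory layout: the 8088 could address 1 MB (2 to the 20th power bytes), and the top 384 KB of that space was reserved for video memory and ROM, leaving everything below address 0xA0000 for DOS and its programs. The short Python sketch below walks through that arithmetic. The DOS footprint it subtracts is a hypothetical figure chosen only to illustrate the "640 KB minus the operating system" calculation; real footprints varied with the DOS version and the drivers loaded.

```python
# Illustrative arithmetic for the DOS "conventional memory" limit.
KB = 1024

address_bits = 20                      # the 8088 in the IBM PC/XT had a 20-bit address bus
total_addressable = 2 ** address_bits  # 1,048,576 bytes = 1 MB
reserved_start = 0xA0000               # video memory and ROM occupied addresses from here up

conventional = reserved_start          # bytes below the reserved area, usable by DOS and programs
reserved = total_addressable - conventional

# Hypothetical DOS + driver footprint (an assumption for illustration only).
dos_footprint = 110 * KB
largest_program = conventional - dos_footprint

print(f"Addressable:  {total_addressable // KB} KB")
print(f"Conventional: {conventional // KB} KB")
print(f"Reserved:     {reserved // KB} KB")
print(f"Left for one program (assumed {dos_footprint // KB} KB DOS): {largest_program // KB} KB")
```

With the assumed 110 KB footprint, about 530 KB remains for a single program, in line with the "512 KB or more" that was typical in practice.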

To understand the differences in speed, it helps to look at how processors handle data at the level of individual bits.

You have most likely already heard the term “byte”, which is the smallest addressable unit of memory in most computers. A byte typically holds a single character of data, for example the letter “A”. Each byte is made up of 8 bits, based on the binary number system (IBM mainframe memory also stored a ninth bit alongside each byte, which the machine used for parity checks). Each bit is either a “0” or a “1”, and together the 8 bits form a binary number that represents either a character of text or a numeric value.
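To make this concrete, the Python sketch below shows the eight bits that make up the letter “A” in ASCII, the character code used on PCs, and the different code the same letter has in EBCDIC, the character set used on IBM mainframes (Python's `cp037` codec is one EBCDIC code page). It also computes a parity bit for the byte. The choice of odd parity here is an assumption made for illustration; it shows how a parity check works in general, not the exact scheme any particular mainframe used.

```python
# Show how a single character is stored as one 8-bit byte.
char = "A"

ascii_byte = char.encode("ascii")[0]   # 65 in ASCII, the code used on PCs
bits = format(ascii_byte, "08b")       # the 8 bits, most significant first

# IBM mainframes used EBCDIC, where the same letter has a different code.
ebcdic_byte = char.encode("cp037")[0]  # 0xC1 (193) in EBCDIC code page 037

# A parity bit records whether the count of 1 bits is odd or even, so a single
# flipped bit can be detected. Odd parity is assumed here for illustration:
# the parity bit is chosen so the total number of 1 bits (including it) is odd.
ones = bits.count("1")
odd_parity_bit = 0 if ones % 2 == 1 else 1

print(f"{char!r}: ASCII {ascii_byte} = {bits}")
print(f"{char!r}: EBCDIC {ebcdic_byte:#04x} = {ebcdic_byte:08b}")
print(f"ones in byte: {ones}, odd-parity bit: {odd_parity_bit}")
```

If any single bit of the stored byte later flipped, the count of 1 bits would no longer match the parity bit, which is how the hardware could detect the error.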

It follows that an 8-bit chip processes a single byte (8 bits) at a time, a 16-bit chip processes 16 bits or two bytes at a time, and a 32-bit chip processes 32 bits or four bytes at a time. How much memory a chip can reach, however, depends on the width of its address bus rather than on its data width: 8-bit chips typically used 16-bit addresses, giving them up to 64 KB; the 8088 in the PC-XT used 20-bit addresses (1 MB); the 286 used 24-bit addresses (16 MB); and the 386 used 32-bit addresses, giving it up to 4 gigabytes (GB). You can see the extreme differences.
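The distinction between how many bits a chip handles per operation and how many memory locations it can address is easier to see side by side. The Python sketch below pairs each processor generation with its data width and its address bus width, using the commonly cited figures for each chip, and computes 2 raised to the address width to get the maximum memory the chip could address. The helper name `human` is simply an illustrative choice.

```python
# Relate data width (bits handled per operation) to address width (bits in a memory address).
chips = [
    # (example chip, data width in bits, address bus width in bits)
    ("8-bit micro (MOS 6502)", 8, 16),
    ("Intel 8088 (IBM PC-XT)", 16, 20),   # 16-bit internally, 8-bit external data bus
    ("Intel 80286 (IBM PC/AT)", 16, 24),
    ("Intel 80386", 32, 32),
]


def human(n_bytes: int) -> str:
    """Format a power-of-two byte count using binary units (1 KB = 1024 bytes)."""
    for unit in ("bytes", "KB", "MB", "GB"):
        if n_bytes < 1024:
            return f"{n_bytes} {unit}"
        n_bytes //= 1024
    return f"{n_bytes} TB"


for name, data_bits, addr_bits in chips:
    bytes_per_op = data_bits // 8          # how many bytes one operation handles
    max_memory = 2 ** addr_bits            # each extra address bit doubles the reachable memory
    print(f"{name:<26} {bytes_per_op} byte(s) per operation, up to {human(max_memory)}")
```

The 286 line is the telling one: it handled the same two bytes per operation as the 8088, yet could address sixteen times as much memory because of its wider address bus.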

In 1995, Microsoft released Windows 95, whose user interface set the pattern for the company's later desktop operating systems (although modern Windows versions are technically descended from Windows NT, first released in 1993). Apart from IBM's 32-bit OS/2 releases and Microsoft's own Windows NT, Windows 95 was the first mainstream PC operating system built to exploit the new 32-bit hardware. However, it was hobbled by its continued support for 16-bit software and DOS programs, which restricted how fully it could take advantage of the 32-bit architecture. Nonetheless, that support extended the life of DOS-based application development.

Windows 95 also heralded the beginning of a new era of software development, as businesses grew used to the faster development capabilities of software developers and engineers. At the same time, the Internet (which grew out of the ARPANET, funded by the US Department of Defense's Advanced Research Projects Agency, later known as DARPA, in the late 1960s and early 1970s) was beginning to make inroads into commercial organizations as a development platform for web applications.

As a result, pressure began to mount on development teams to produce new applications at ever faster rates. In turn, software engineers and software engineering analysts began applying to PC software development the same software engineering standards whose value had been established through research and success in mainframe development environments.

Outsourcing and insourcing of technical work also began around this time, to a limited extent, as the first cadre of technical professionals from India and elsewhere in Asia began to appear in technical organizations. Prior to 2000, these foreign technicians were some of the finest people their countries had to offer. They were highly professional, very friendly, and always willing to help anyone in need. You couldn’t have asked to meet a nicer, more professional group of people anywhere.

Stay tuned for the next part in this series…