The Full Series

- Part 1: We create the whole NeuralNetwork class from scratch.
- Part 2: We create an environment in Unity in order to test the neural network within that environment.
- Part 3: We make a great improvement to the neural network already created by adding a new type of mutation to the code.

Introduction

Before you start, I want you to know that I'm switching to YouTube.

Over the past two decades, machine learning has become one of the mainstays of information technology and, with that, a rather central, albeit usually hidden, part of our lives. With ever-increasing amounts of data becoming available, there is good reason to believe that smart data analysis will become even more pervasive as a necessary ingredient for technological progress. Neural networks in particular have recently taken over much of machine learning, yet I noticed a lack of tutorials explaining how to implement one from scratch, so I decided to write one!

Background

In this article, you're going to learn the core fundamentals of neural networks, how to implement one in pure C#, and how to train it using genetic mutation. Before going through this article, you need basic knowledge of C# programming and of object-oriented programming. Note that the neural network learns through mutation (random changes to its weights, keeping the changes that lower the error), not through backpropagation, which is not covered in this article. Implementing backpropagation is, however, one of my top priorities.

Understanding Neural Networks

If you have no idea how neural networks work, I suggest you watch this video made by 3Blue1Brown:

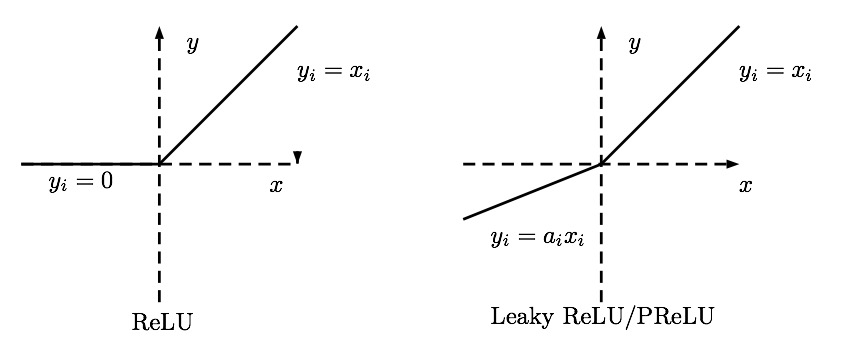

I did not make that video, and I don't think I'll ever be able to explain neural networks more intuitively. Note that the video introduces the sigmoid activation function, but I'm going to use Leaky ReLU instead, because it tends to train faster and, unlike plain ReLU, it does not suffer from dead neurons (neurons that output 0 for every input). Furthermore, the bias explained in the video is represented here as an extra neuron whose value is always 1.

You can also watch the video posted at the beginning of the article. I made that one, and it uses Leaky ReLU as its activation function.
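Representing the bias as an extra neuron that always outputs 1 works because a weighted sum plus a bias, w·x + b, equals the weighted sum of the inputs extended with a constant 1, using b as the weight of that extra neuron. The sketch below (BiasAsNeuron and its methods are hypothetical names, not part of the article's code) computes both forms so you can check that they agree:

```csharp
using System;

// Hypothetical helper, not part of the NeuralNetwork class: two equivalent ways of applying a bias.
public static class BiasAsNeuron
{
    // Classic form: sum of weights[i] * inputs[i], plus a separate bias term.
    public static double WithSeparateBias(double[] weights, double bias, double[] inputs)
    {
        double sum = bias;
        for (int i = 0; i < inputs.Length; i++)
            sum += weights[i] * inputs[i];
        return sum;
    }

    // Bias-neuron form: the inputs get one extra neuron fixed at 1,
    // and the bias is stored as the weight of that neuron (the last weight).
    public static double WithBiasNeuron(double[] weightsAndBias, double[] inputs)
    {
        if (weightsAndBias.Length != inputs.Length + 1)
            throw new ArgumentException("Expected one weight per input plus one bias weight.");

        double sum = 0;
        for (int i = 0; i < weightsAndBias.Length; i++)
        {
            double value = i < inputs.Length ? inputs[i] : 1.0; // the bias neuron always outputs 1
            sum += weightsAndBias[i] * value;
        }
        return sum;
    }
}
```

For example, with weights 0.5 and -1, bias 0.25 and inputs 2 and 3, both methods return 1 - 3 + 0.25 = -1.75. The NeuralSection class introduced later uses the second form: the last row of its Weights array holds the bias weights.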

Using the Code

Let's assume we want to make a neural network like this one:

It is good practice to split the network into sections, where each section holds the weights connecting one layer to the next.

This way, a neural network is just an array of NeuralSections. In the NeuralNetwork.cs script, we should first import the needed namespaces:

```csharp
using System;
using System.Collections.Generic;
using System.Collections.ObjectModel;
```

Then, let's declare the NeuralNetwork class:

```csharp
public class NeuralNetwork
{
    ...
}
```

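The NeuralNetwork class should start with a Topology property that returns a copy of the layer sizes, followed by the topology itself, the array of sections and the random number generator: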

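```csharp
// Returns a copy of the layer sizes, so callers cannot modify the network's topology.
public UInt32[] Topology
{
    get
    {
        UInt32[] Result = new UInt32[TheTopology.Count];
        TheTopology.CopyTo(Result, 0);
        return Result;
    }
}

// Number of neurons in each layer, from the input layer to the output layer.
ReadOnlyCollection<UInt32> TheTopology;

// One section per pair of consecutive layers.
NeuralSection[] Sections;

Random TheRandomizer;
```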
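The Topology property hands out a fresh array on every call, and TheTopology is a ReadOnlyCollection wrapping a copy of the array passed to the constructor (`new List<uint>(Topology).AsReadOnly()`), so neither the caller's original array nor the returned copies can change the network's layers afterwards. The hypothetical TopologyHolder class below isolates that pattern so it can be tried without the rest of the network:

```csharp
using System;
using System.Collections.Generic;
using System.Collections.ObjectModel;

// Hypothetical stand-in that isolates the Topology pattern of NeuralNetwork.
public class TopologyHolder
{
    private readonly ReadOnlyCollection<uint> TheTopology;

    public TopologyHolder(uint[] topology)
    {
        // Copy into a new List first, so later changes to the caller's array are not seen here.
        TheTopology = new List<uint>(topology).AsReadOnly();
    }

    // Same pattern as NeuralNetwork.Topology: a new array is returned on every call.
    public uint[] Topology
    {
        get
        {
            uint[] result = new uint[TheTopology.Count];
            TheTopology.CopyTo(result, 0);
            return result;
        }
    }
}
```

Writing to the source array or to an array returned by Topology leaves the holder's layer sizes unchanged.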

Then, the constructor should ensure that the inputs are valid, initialize TheRandomizer, set the topology of the network, initialize all the sections of the network and construct each of these sections:

```csharp
public NeuralNetwork (UInt32[] Topology, Int32? Seed = 0)
{
    // A network needs at least an input layer and an output layer.
    if (Topology.Length < 2)
        throw new ArgumentException("A Neural Network cannot contain less than 2 Layers.", "Topology");

    for (int i = 0; i < Topology.Length; i++)
    {
        if (Topology[i] < 1)
            throw new ArgumentException("A single layer of neurons must contain, at least, one neuron.", "Topology");
    }

    // Seed defaults to 0, so every network is seeded with 0 unless null is passed explicitly.
    if (Seed.HasValue)
        TheRandomizer = new Random(Seed.Value);
    else
        TheRandomizer = new Random();

    TheTopology = new List<uint>(Topology).AsReadOnly();

    // N layers give N - 1 sections; section i connects layer i to layer i + 1.
    Sections = new NeuralSection[TheTopology.Count - 1];

    for (int i = 0; i < Sections.Length; i++)
    {
        Sections[i] = new NeuralSection(TheTopology[i], TheTopology[i + 1], TheRandomizer);
    }
}
```
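Since section i connects layer i to layer i + 1, a topology with N layers produces N - 1 sections, and each section stores (inputs + 1) × outputs weights, the extra row being the bias neuron (see the NeuralSection constructor). A small hypothetical helper makes the count explicit; for the 2-2-1 network used in the XOR example, it gives 2 sections and (2 + 1) × 2 + (2 + 1) × 1 = 9 weights:

```csharp
using System;

// Hypothetical helper: how many sections and weights a given topology produces.
public static class TopologyInfo
{
    // Section i connects layer i to layer i + 1, so N layers give N - 1 sections.
    public static int SectionCount(uint[] topology) => topology.Length - 1;

    // Each section stores (inputs + 1) x outputs weights; the extra row is the bias neuron.
    public static long WeightCount(uint[] topology)
    {
        long total = 0;
        for (int i = 0; i < topology.Length - 1; i++)
            total += (long)(topology[i] + 1) * topology[i + 1];
        return total;
    }
}
```

This is also the number of weights that a call to Mutate can touch.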

A second constructor clones an existing NeuralNetwork, which is what makes it possible to create and train offspring:

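```csharp
public NeuralNetwork (NeuralNetwork Main)
{
    // Seed the clone's generator from the parent's generator.
    TheRandomizer = new Random(Main.TheRandomizer.Next());

    // The topology is read-only, so it can safely be shared with the parent.
    TheTopology = Main.TheTopology;

    // Copy every section, so that mutating the clone leaves the parent's weights untouched.
    Sections = new NeuralSection[TheTopology.Count - 1];

    for (int i = 0; i < Sections.Length; i++)
    {
        Sections[i] = new NeuralSection (Main.Sections[i]);
    }
}
```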

Then, there is the FeedForward function. It takes the input array, makes sure it is valid, passes it through all the sections and returns the output of the final section:

```csharp
public double[] FeedForward(double[] Input)
{
    if (Input == null)
        throw new ArgumentException("The input array cannot be set to null.", "Input");
    else if (Input.Length != TheTopology[0])
        throw new ArgumentException("The input array's length does not match the number of neurons in the input layer.", "Input");

    // The output of each section becomes the input of the next one.
    double[] Output = Input;

    for (int i = 0; i < Sections.Length; i++)
    {
        Output = Sections[i].FeedForward(Output);
    }

    return Output;
}
```

Now, we need to give the developer the ability to mutate the NeuralNetworks. The Mutate function just mutates each section independently:

```csharp
public void Mutate (double MutationProbability = 0.3, double MutationAmount = 2.0)
{
    // Each section mutates its own weights independently.
    for (int i = 0; i < Sections.Length; i++)
    {
        Sections[i].Mutate(MutationProbability, MutationAmount);
    }
}
```

This is how the NeuralNetwork class should look right now:

```csharp
using System;
using System.Collections.Generic;
using System.Collections.ObjectModel;

public class NeuralNetwork
{
    public UInt32[] Topology
    {
        get
        {
            UInt32[] Result = new UInt32[TheTopology.Count];
            TheTopology.CopyTo(Result, 0);
            return Result;
        }
    }

    ReadOnlyCollection<UInt32> TheTopology;
    NeuralSection[] Sections;
    Random TheRandomizer;

    public NeuralNetwork (UInt32[] Topology, Int32? Seed = 0)
    {
        if (Topology.Length < 2)
            throw new ArgumentException("A Neural Network cannot contain less than 2 Layers.", "Topology");

        for (int i = 0; i < Topology.Length; i++)
        {
            if (Topology[i] < 1)
                throw new ArgumentException("A single layer of neurons must contain, at least, one neuron.", "Topology");
        }

        if (Seed.HasValue)
            TheRandomizer = new Random(Seed.Value);
        else
            TheRandomizer = new Random();

        TheTopology = new List<uint>(Topology).AsReadOnly();

        Sections = new NeuralSection[TheTopology.Count - 1];

        for (int i = 0; i < Sections.Length; i++)
        {
            Sections[i] = new NeuralSection(TheTopology[i], TheTopology[i + 1], TheRandomizer);
        }
    }

    public NeuralNetwork (NeuralNetwork Main)
    {
        TheRandomizer = new Random(Main.TheRandomizer.Next());
        TheTopology = Main.TheTopology;

        Sections = new NeuralSection[TheTopology.Count - 1];

        for (int i = 0; i < Sections.Length; i++)
        {
            Sections[i] = new NeuralSection (Main.Sections[i]);
        }
    }

    public double[] FeedForward(double[] Input)
    {
        if (Input == null)
            throw new ArgumentException("The input array cannot be set to null.", "Input");
        else if (Input.Length != TheTopology[0])
            throw new ArgumentException("The input array's length does not match the number of neurons in the input layer.", "Input");

        double[] Output = Input;

        for (int i = 0; i < Sections.Length; i++)
        {
            Output = Sections[i].FeedForward(Output);
        }

        return Output;
    }

    public void Mutate (double MutationProbability = 0.3, double MutationAmount = 2.0)
    {
        for (int i = 0; i < Sections.Length; i++)
        {
            Sections[i].Mutate(MutationProbability, MutationAmount);
        }
    }
}
```

Now that we have implemented the NeuralNetwork class, it's time to implement the NeuralSection class:

```csharp
public class NeuralNetwork
{
    ...

    private class NeuralSection
    {
        ...
    }

    ...
}
```

Each NeuralSection should contain these fields:

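```csharp
// Weights[i][j] connects input neuron i to output neuron j; the last row holds the bias weights.
private double[][] Weights;
private Random TheRandomizer;
```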

The NeuralSection class should also contain two constructors: one that creates a section with random weights, and one that copies an existing section:

```csharp
public NeuralSection(UInt32 InputCount, UInt32 OutputCount, Random Randomizer)
{
    if (InputCount == 0)
        throw new ArgumentException("You cannot create a Neural Layer with no input neurons.", "InputCount");
    else if (OutputCount == 0)
        throw new ArgumentException("You cannot create a Neural Layer with no output neurons.", "OutputCount");
    else if (Randomizer == null)
        throw new ArgumentException("The randomizer cannot be set to null.", "Randomizer");

    TheRandomizer = Randomizer;

    // One extra row for the bias neuron, whose value is always 1.
    Weights = new double[InputCount + 1][];

    for (int i = 0; i < Weights.Length; i++)
        Weights[i] = new double[OutputCount];

    // Initialize every weight uniformly in [-0.5, 0.5).
    for (int i = 0; i < Weights.Length; i++)
        for (int j = 0; j < Weights[i].Length; j++)
            Weights[i][j] = TheRandomizer.NextDouble() - 0.5;
}

public NeuralSection(NeuralSection Main)
{
    // The copy shares the parent's random number generator.
    TheRandomizer = Main.TheRandomizer;

    // Copy the weights element by element, so the two sections do not share any rows.
    Weights = new double[Main.Weights.Length][];

    for (int i = 0; i < Weights.Length; i++)
        Weights[i] = new double[Main.Weights[0].Length];

    for (int i = 0; i < Weights.Length; i++)
    {
        for (int j = 0; j < Weights[i].Length; j++)
        {
            Weights[i][j] = Main.Weights[i][j];
        }
    }
}
```
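The copy constructor copies the weights one by one instead of reusing Main.Weights. This matters because a jagged array is an array of references to rows: copying only the outer array would leave parent and offspring sharing the same rows, so mutating the offspring would silently change the parent too. The sketch below (WeightCopy is a hypothetical helper) contrasts a shallow copy made with Array.Clone with a deep, row-by-row copy:

```csharp
using System;

// Hypothetical helpers contrasting a shallow and a deep copy of a jagged weight array.
public static class WeightCopy
{
    // Shallow: a new outer array, but every row is the same object as in the source.
    public static double[][] Shallow(double[][] source)
    {
        return (double[][])source.Clone();
    }

    // Deep: every row is copied too, like the element-by-element loop in the NeuralSection copy constructor.
    public static double[][] Deep(double[][] source)
    {
        double[][] copy = new double[source.Length][];
        for (int i = 0; i < source.Length; i++)
            copy[i] = (double[])source[i].Clone();
        return copy;
    }
}
```

With the shallow copy, writing to copy[0][0] changes the parent's weight as well; with the deep copy, the parent keeps its original value.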

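Now comes the FeedForward function, which does the actual propagation: each output is the weighted sum of the inputs plus its bias weight (taken from the last row of Weights), passed through the activation function: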

```csharp
public double[] FeedForward(double[] Input)
{
    if (Input == null)
        throw new ArgumentException("The input array cannot be set to null.", "Input");
    else if (Input.Length != Weights.Length - 1)
        throw new ArgumentException("The input array's length does not match the number of neurons in the input layer.", "Input");

    double[] Output = new double[Weights[0].Length];

    // Accumulate the weighted sum for every output neuron.
    for (int i = 0; i < Weights.Length; i++)
    {
        for (int j = 0; j < Weights[i].Length; j++)
        {
            if (i == Weights.Length - 1)
                Output[j] += Weights[i][j];            // bias neuron, value 1
            else
                Output[j] += Weights[i][j] * Input[i];
        }
    }

    // Apply the activation function to every output.
    for (int i = 0; i < Output.Length; i++)
        Output[i] = ReLU(Output[i]);

    return Output;
}
```
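To make the arithmetic concrete, here is a standalone sketch that repeats the same computation outside the class (SectionForward is a hypothetical name) along with a worked case. With inputs (1, 0), weight rows {0.5, -1} (from the first input) and {2, 0.25} (from the second input), and bias weights {0.25, -0.5}, the first output is 0.5 * 1 + 2 * 0 + 0.25 = 0.75 and the second is -1 * 1 + 0.25 * 0 - 0.5 = -1.5, which Leaky ReLU turns into -1.5 / 20 = -0.075:

```csharp
using System;

// Hypothetical standalone version of NeuralSection.FeedForward, used for a worked example.
public static class SectionForward
{
    // Same activation as the article: x for x >= 0, x / 20 otherwise.
    public static double LeakyReLU(double x) => x >= 0 ? x : x / 20;

    // weights has inputs.Length + 1 rows; the last row holds the bias weights.
    public static double[] FeedForward(double[][] weights, double[] inputs)
    {
        if (inputs.Length != weights.Length - 1)
            throw new ArgumentException("Input length must equal the number of weight rows minus one.", nameof(inputs));

        double[] output = new double[weights[0].Length];
        for (int i = 0; i < weights.Length; i++)
        {
            // The bias neuron (last row) always has the value 1.
            double value = i == weights.Length - 1 ? 1.0 : inputs[i];
            for (int j = 0; j < output.Length; j++)
                output[j] += weights[i][j] * value;
        }

        for (int j = 0; j < output.Length; j++)
            output[j] = LeakyReLU(output[j]);
        return output;
    }
}
```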

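As we have done in the NeuralNetwork class, there should be a Mutate function in the NeuralSection class too. Each weight is, with the given mutation probability, replaced by a random value between -MutationAmount and MutationAmount: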

```csharp
public void Mutate (double MutationProbability, double MutationAmount)
{
    for (int i = 0; i < Weights.Length; i++)
    {
        for (int j = 0; j < Weights[i].Length; j++)
        {
            // With probability MutationProbability, replace the weight
            // with a random value in [-MutationAmount, MutationAmount).
            if (TheRandomizer.NextDouble() < MutationProbability)
                Weights[i][j] = TheRandomizer.NextDouble() * (MutationAmount * 2) - MutationAmount;
        }
    }
}
```
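Note that this is a replacement mutation: a selected weight is not nudged from its current value but overwritten with a fresh uniform random value in [-MutationAmount, MutationAmount). With the defaults of NeuralNetwork.Mutate (0.3 and 2.0), about 30% of the weights are replaced per call, roughly 2.7 of the 9 weights of a 2-2-1 network on average. The hypothetical WeightMutation helper below applies the same rule to a plain jagged array and also counts the replaced weights, which makes the behavior easy to check:

```csharp
using System;

// Hypothetical standalone version of NeuralSection.Mutate, working on a plain jagged array.
public static class WeightMutation
{
    // Each weight is, with probability mutationProbability, overwritten by a uniform
    // random value in [-mutationAmount, mutationAmount). Returns how many weights were replaced.
    public static int Mutate(double[][] weights, Random random, double mutationProbability, double mutationAmount)
    {
        int replaced = 0;
        for (int i = 0; i < weights.Length; i++)
        {
            for (int j = 0; j < weights[i].Length; j++)
            {
                if (random.NextDouble() < mutationProbability)
                {
                    weights[i][j] = random.NextDouble() * (mutationAmount * 2) - mutationAmount;
                    replaced++;
                }
            }
        }
        return replaced;
    }
}
```

On a 100 × 100 array with probability 0.3, the returned count lands close to 3,000, and every weight stays within [-2, 2) for an amount of 2.0.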

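Finally, we need to add the activation function to the NeuralSection class. Despite its name, the ReLU method implements Leaky ReLU: it returns x for non-negative inputs and x / 20 otherwise: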

```csharp
// Leaky ReLU: identity for non-negative inputs, slope 1/20 for negative inputs.
private double ReLU(double x)
{
    if (x >= 0)
        return x;
    else
        return x / 20;
}
```
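For comparison with the activations mentioned at the beginning of the article, the hypothetical Activations class below implements the sigmoid, plain ReLU and the Leaky ReLU used here. For negative inputs, plain ReLU always returns 0, while this Leaky ReLU keeps a slope of 1/20 = 0.05: for example, -2 maps to -0.1 instead of 0, so the output still depends on the input:

```csharp
using System;

// Hypothetical comparison of the activation functions discussed in the article.
public static class Activations
{
    // Sigmoid, as introduced in the 3Blue1Brown video: squashes any input into (0, 1).
    public static double Sigmoid(double x) => 1.0 / (1.0 + Math.Exp(-x));

    // Plain ReLU: every negative input becomes 0.
    public static double ReLU(double x) => x >= 0 ? x : 0.0;

    // The article's activation: Leaky ReLU with slope 1/20 for negative inputs.
    public static double LeakyReLU(double x) => x >= 0 ? x : x / 20;
}
```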

With all of that in place, the complete script should look like this:

```csharp
using System;
using System.Collections.Generic;
using System.Collections.ObjectModel;

public class NeuralNetwork
{
    public UInt32[] Topology
    {
        get
        {
            UInt32[] Result = new UInt32[TheTopology.Count];
            TheTopology.CopyTo(Result, 0);
            return Result;
        }
    }

    ReadOnlyCollection<UInt32> TheTopology;
    NeuralSection[] Sections;
    Random TheRandomizer;

    private class NeuralSection
    {
        // Weights[i][j] connects input neuron i to output neuron j; the last row holds the bias weights.
        private double[][] Weights;
        private Random TheRandomizer;

        public NeuralSection(UInt32 InputCount, UInt32 OutputCount, Random Randomizer)
        {
            if (InputCount == 0)
                throw new ArgumentException("You cannot create a Neural Layer with no input neurons.", "InputCount");
            else if (OutputCount == 0)
                throw new ArgumentException("You cannot create a Neural Layer with no output neurons.", "OutputCount");
            else if (Randomizer == null)
                throw new ArgumentException("The randomizer cannot be set to null.", "Randomizer");

            TheRandomizer = Randomizer;

            Weights = new double[InputCount + 1][];

            for (int i = 0; i < Weights.Length; i++)
                Weights[i] = new double[OutputCount];

            for (int i = 0; i < Weights.Length; i++)
                for (int j = 0; j < Weights[i].Length; j++)
                    Weights[i][j] = TheRandomizer.NextDouble() - 0.5;
        }

        public NeuralSection(NeuralSection Main)
        {
            TheRandomizer = Main.TheRandomizer;

            Weights = new double[Main.Weights.Length][];

            for (int i = 0; i < Weights.Length; i++)
                Weights[i] = new double[Main.Weights[0].Length];

            for (int i = 0; i < Weights.Length; i++)
            {
                for (int j = 0; j < Weights[i].Length; j++)
                {
                    Weights[i][j] = Main.Weights[i][j];
                }
            }
        }

        public double[] FeedForward(double[] Input)
        {
            if (Input == null)
                throw new ArgumentException("The input array cannot be set to null.", "Input");
            else if (Input.Length != Weights.Length - 1)
                throw new ArgumentException("The input array's length does not match the number of neurons in the input layer.", "Input");

            double[] Output = new double[Weights[0].Length];

            for (int i = 0; i < Weights.Length; i++)
            {
                for (int j = 0; j < Weights[i].Length; j++)
                {
                    if (i == Weights.Length - 1)
                        Output[j] += Weights[i][j];            // bias neuron, value 1
                    else
                        Output[j] += Weights[i][j] * Input[i];
                }
            }

            for (int i = 0; i < Output.Length; i++)
                Output[i] = ReLU(Output[i]);

            return Output;
        }

        public void Mutate (double MutationProbability, double MutationAmount)
        {
            for (int i = 0; i < Weights.Length; i++)
            {
                for (int j = 0; j < Weights[i].Length; j++)
                {
                    if (TheRandomizer.NextDouble() < MutationProbability)
                        Weights[i][j] = TheRandomizer.NextDouble() * (MutationAmount * 2) - MutationAmount;
                }
            }
        }

        // Leaky ReLU: identity for non-negative inputs, slope 1/20 for negative inputs.
        private double ReLU(double x)
        {
            if (x >= 0)
                return x;
            else
                return x / 20;
        }
    }

    public NeuralNetwork (UInt32[] Topology, Int32? Seed = 0)
    {
        if (Topology.Length < 2)
            throw new ArgumentException("A Neural Network cannot contain less than 2 Layers.", "Topology");

        for (int i = 0; i < Topology.Length; i++)
        {
            if (Topology[i] < 1)
                throw new ArgumentException("A single layer of neurons must contain, at least, one neuron.", "Topology");
        }

        if (Seed.HasValue)
            TheRandomizer = new Random(Seed.Value);
        else
            TheRandomizer = new Random();

        TheTopology = new List<uint>(Topology).AsReadOnly();

        Sections = new NeuralSection[TheTopology.Count - 1];

        for (int i = 0; i < Sections.Length; i++)
        {
            Sections[i] = new NeuralSection(TheTopology[i], TheTopology[i + 1], TheRandomizer);
        }
    }

    public NeuralNetwork (NeuralNetwork Main)
    {
        TheRandomizer = new Random(Main.TheRandomizer.Next());
        TheTopology = Main.TheTopology;

        Sections = new NeuralSection[TheTopology.Count - 1];

        for (int i = 0; i < Sections.Length; i++)
        {
            Sections[i] = new NeuralSection (Main.Sections[i]);
        }
    }

    public double[] FeedForward(double[] Input)
    {
        if (Input == null)
            throw new ArgumentException("The input array cannot be set to null.", "Input");
        else if (Input.Length != TheTopology[0])
            throw new ArgumentException("The input array's length does not match the number of neurons in the input layer.", "Input");

        double[] Output = Input;

        for (int i = 0; i < Sections.Length; i++)
        {
            Output = Sections[i].FeedForward(Output);
        }

        return Output;
    }

    public void Mutate (double MutationProbability = 0.3, double MutationAmount = 2.0)
    {
        for (int i = 0; i < Sections.Length; i++)
        {
            Sections[i].Mutate(MutationProbability, MutationAmount);
        }
    }
}
```

Now that our implementation is ready, we have to try it on something simple. The XOR function will do as a proof of concept. If you don't know what the XOR function is: it outputs 1 when exactly one of its two inputs is 1, and 0 otherwise.
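The XOR truth table, which the program below uses as its training set, can be generated with C#'s `^` operator. A minimal sketch, with XorData as a hypothetical helper name, that produces the inputs in the same order as the program, (0,0), (0,1), (1,0), (1,1), with expected outputs 0, 1, 1, 0. It assumes C# 7 or later for the tuple return type:

```csharp
using System;

// Hypothetical helper that builds the four XOR cases used for training.
public static class XorData
{
    // Returns every pair of input bits and, for each, a ^ b (1 when exactly one input is 1).
    public static (double[][] Inputs, double[] Expected) Build()
    {
        var inputs = new double[4][];
        var expected = new double[4];
        int k = 0;
        for (int a = 0; a <= 1; a++)
        {
            for (int b = 0; b <= 1; b++)
            {
                inputs[k] = new double[] { a, b };
                expected[k] = a ^ b;
                k++;
            }
        }
        return (inputs, expected);
    }
}
```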

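The training process works like this: start from a randomly initialized network, then repeatedly clone the best network found so far, mutate the clone, compute the clone's cost on all four XOR cases, and keep the clone as the new best network whenever its cost is lower.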
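The cost used to compare networks in the program below is the sum of absolute errors over the four XOR cases, and a mutated network only replaces the best one when its cost is strictly lower. A hypothetical helper isolating that cost, with a worked case: outputs of 0.1, 0.9, 0.8 and 0.2 against the targets 0, 1, 1 and 0 give 0.1 + 0.1 + 0.2 + 0.2 = 0.6. A cost under 0.03 therefore means the four outputs are, in total, within 0.03 of their targets:

```csharp
using System;

// Hypothetical helper: the cost used by the XOR program, i.e. the sum of absolute errors.
public static class XorCost
{
    public static double SumOfAbsoluteErrors(double[] outputs, double[] expected)
    {
        if (outputs.Length != expected.Length)
            throw new ArgumentException("Both arrays must have the same length.");

        double cost = 0;
        for (int i = 0; i < outputs.Length; i++)
            cost += Math.Abs(outputs[i] - expected[i]);
        return cost;
    }
}
```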

If we turn that process into code, this is what we should end up with:

```csharp
using System;

namespace NeuralXOR
{
    class Program
    {
        static void Main(string[] args)
        {
            int Iteration = 0;

            // Start from a randomly initialized 2-2-1 network.
            NeuralNetwork BestNetwork = new NeuralNetwork(new uint[] { 2, 2, 1 });
            double BestCost = double.MaxValue;
            double[] BestNetworkResults = new double[4];

            // The four XOR cases and their expected outputs.
            double[][] Inputs = new double[][]
            {
                new double[] { 0, 0 },
                new double[] { 0, 1 },
                new double[] { 1, 0 },
                new double[] { 1, 1 }
            };
            double[] ExpectedOutputs = new double[] { 0, 1, 1, 0 };

            // Runs until the program is stopped.
            while (true)
            {
                // Clone the best network so far and mutate the clone.
                NeuralNetwork MutatedNetwork = new NeuralNetwork(BestNetwork);
                MutatedNetwork.Mutate();

                // Cost = sum of absolute errors over the four cases.
                double MutatedNetworkCost = 0;
                double[] CurrentNetworkResults = new double[4];

                for (int i = 0; i < Inputs.Length; i++)
                {
                    double[] Result = MutatedNetwork.FeedForward(Inputs[i]);
                    MutatedNetworkCost += Math.Abs(Result[0] - ExpectedOutputs[i]);
                    CurrentNetworkResults[i] = Result[0];
                }

                // Keep the mutated network only if it beats the current best.
                if (MutatedNetworkCost < BestCost)
                {
                    BestNetwork = MutatedNetwork;
                    BestCost = MutatedNetworkCost;
                    BestNetworkResults = CurrentNetworkResults;
                }

                // Print the progress every 20,000 iterations.
                if (Iteration % 20000 == 0)
                {
                    Console.Clear();

                    for (int i = 0; i < BestNetworkResults.Length; i++)
                    {
                        Console.WriteLine(Inputs[i][0] + "," + Inputs[i][1] + " | " + BestNetworkResults[i].ToString("N17"));
                    }

                    Console.WriteLine("Cost: " + BestCost);
                    Console.WriteLine("Iteration: " + Iteration);
                }

                Iteration++;
            }
        }
    }
}
```

Run it, and you'll get that:

Points of Interest

I was really surprised to find that only 20,000 iterations were enough to reduce the cost on the XOR function to under 0.03; I had expected it to take longer. If you have any questions or just want to talk about anything, leave a comment below. Look out for a future article explaining how to use this implementation to make cars learn to drive all by themselves in Unity using reinforcement learning. I'm working on something similar to the one made by Samuel Arzt.

Update on 11th December 2017

I finished the second article and it is currently waiting for submission. You can have a look at the demo video. It looks a bit creepy, but... Here you go:

Another Update on 11th December 2017

Looks like Part 2 is submitted, guys. Have fun! And never think for a moment that we're done here. My current target is to implement three crossover operators to make evolution a bit more efficient and offer the developer more diversity. After that, backpropagation is the target.

Update on 20th February, 2018

Part 3 is up and running! It shows a substantial improvement over the system discussed in Parts 1 and 2. Tell me what you think!