This article is a short walk-through of how to expose ports over the Internet so you can test the Kubernetes pods externally.

Introduction

In previous articles in this DevOps series, we looked at deploying a production-ready Kubernetes cluster on Azure using the Kubespray project, and then set up a data storage volume with Kubernetes on Azure. This article is a short walk-through of how to expose ports over the Internet so you can test the pods externally. Note that this setup is neither production-ready nor secure; we will cover that in a future article!

Background

When we develop services in containers on the cloud, we often want to expose them over the Internet for production or development. To do this, we generally use some kind of firewall or port-forwarding rule manager. In Azure, we do this by creating inbound security rules, which control what network traffic from the open Internet is allowed into your virtual network in the cloud.
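To make the idea of inbound rules more concrete, here is a simplified Python model of how Azure evaluates Network Security Group rules: rules are checked in priority order (lowest number first), and the first matching rule decides whether traffic is allowed. This is only a sketch; real rules also match on source, destination address and protocol, and the `Rule` class and the single stand-in default deny rule are simplifications made up for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Rule:
    """Hypothetical, simplified inbound rule that matches on destination port only."""
    name: str
    priority: int          # lower number = evaluated first
    port: Optional[int]    # None means "any port"
    allow: bool


# Stand-in for Azure's built-in DenyAllInBound rule (priority 65500).
DEFAULT_DENY = Rule("DenyAllInBound", 65500, None, False)


def is_allowed(port: int, rules: Iterable[Rule]) -> bool:
    """Return the decision of the first rule (by priority) that matches `port`."""
    for rule in sorted([*rules, DEFAULT_DENY], key=lambda r: r.priority):
        if rule.port is None or rule.port == port:
            return rule.allow
    return False  # not reached, because DEFAULT_DENY matches every port


rules = [Rule("dashboard", 1000, 9000, True)]
print(is_allowed(9000, rules))  # True
print(is_allowed(9001, rules))  # False
```

Because evaluation stops at the first match, a deny rule with a lower priority number will block a port even if a later rule allows it.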

Locate Ports to Open

In Kubernetes, we can identify the ports we want to map using kubectl:

kubectl get svc -n kube-system

This prints a list of the running services and their ports on the internal Kubernetes network, similar to the following. The list shows each service's name, type, cluster IP, external IP (if any), port(s), and how long ago it was created (age). For NodePort services, an entry such as 80:31719/TCP means the service listens on port 80 on its cluster IP and on port 31719 on every node.

| NAME | TYPE | CLUSTER-IP | EXTERNAL-IP | PORT(S) | AGE |
|---|---|---|---|---|---|
| heapster | ClusterIP | 10.233.54.27 | `<none>` | 80/TCP | 16m |
| kube-dns | ClusterIP | 10.233.0.3 | `<none>` | 53/UDP,53/TCP | 16m |
| kubernetes-dashboard | ClusterIP | 10.233.46.183 | `<none>` | 80/TCP | 16m |
| monitoring-grafana | NodePort | 10.233.28.73 | `<none>` | 80:31719/TCP | 16m |
| monitoring-influxdb | ClusterIP | 10.233.17.79 | `<none>` | 8086/TCP | 16m |
| weave-scope-app | NodePort | 10.233.46.240 | `<none>` | 80:30243/TCP | 16m |
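If you would rather collect these ports from a script than read them off the table, kubectl can emit the same data as JSON with `-o json`. The Python sketch below assumes kubectl is installed and configured for your cluster; the function and type names are our own, and it only reads the standard `metadata.name`, `spec.type`, `spec.clusterIP` and `spec.ports` fields of each Service.

```python
import json
import subprocess
from typing import List, NamedTuple, Optional


class ServicePort(NamedTuple):
    name: str
    svc_type: str
    cluster_ip: str
    port: int
    protocol: str
    node_port: Optional[int]  # only present for NodePort/LoadBalancer services


def parse_services(raw_json: str) -> List[ServicePort]:
    """Flatten `kubectl get svc -o json` output into one row per service port."""
    rows = []
    for item in json.loads(raw_json).get("items", []):
        spec = item.get("spec", {})
        for p in spec.get("ports", []):
            rows.append(ServicePort(
                name=item["metadata"]["name"],
                svc_type=spec.get("type", "ClusterIP"),
                cluster_ip=spec.get("clusterIP", ""),
                port=p["port"],
                protocol=p.get("protocol", "TCP"),  # Kubernetes defaults to TCP
                node_port=p.get("nodePort"),
            ))
    return rows


def get_services(namespace: str = "kube-system") -> List[ServicePort]:
    """Run kubectl (must be on PATH and configured) and parse its JSON output."""
    out = subprocess.run(
        ["kubectl", "get", "svc", "-n", namespace, "-o", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return parse_services(out)
```

For example, `[s for s in get_services() if s.node_port]` should return only the NodePort services, which in the listing above are Grafana and Weave Scope.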

To expose these services, we need to map ports on our external IP, using the firewall to redirect traffic to the internal IP/port combination.

Because each port on our single external IP address can only forward to one destination, we need to choose a unique external port for each internal IP/port service we want to map.

For this example, we will map as follows:

- Grafana monitoring service - internal: 10.233.28.73:80, external: 9001

- Kubernetes dashboard - internal: 10.233.46.183:80, external: 9000

- Weave Scope - internal: NodePort 30243 (service 10.233.46.240:80), external: 9002
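The key constraint in this plan is that no external port is used twice. A small Python check like the following (our own helper, not part of any Kubernetes or Azure tooling) can catch a clash before you start creating rules; the service names are taken from the kubectl output, and the ports are the example values chosen above.

```python
from collections import Counter
from typing import List, Tuple

# (service name, external port) pairs from the mapping above.
PLAN: List[Tuple[str, int]] = [
    ("monitoring-grafana", 9001),
    ("kubernetes-dashboard", 9000),
    ("weave-scope-app", 9002),
]


def duplicate_ports(plan: List[Tuple[str, int]]) -> List[int]:
    """Return the external ports assigned to more than one service."""
    counts = Counter(port for _, port in plan)
    return sorted(port for port, n in counts.items() if n > 1)


def check_plan(plan: List[Tuple[str, int]]) -> None:
    """Raise ValueError if an external port is reused or is not a valid TCP port."""
    dupes = duplicate_ports(plan)
    if dupes:
        raise ValueError(f"external ports used more than once: {dupes}")
    for name, port in plan:
        if not 1 <= port <= 65535:
            raise ValueError(f"{name}: port {port} is not a valid TCP port")


check_plan(PLAN)  # passes: 9000, 9001 and 9002 are all distinct
```

A list of pairs is used rather than a dict keyed by port, because a dict literal would silently keep only the last entry for a repeated port and hide the clash.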

Open Ports

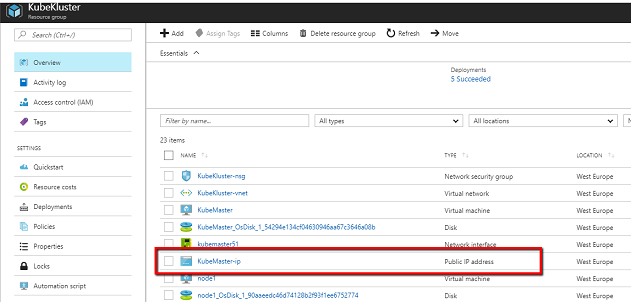

When we set up our cluster, we created one public Internet IP address and attached it to the Kubernetes master. You can find this IP in the Azure portal by examining the public IP resource attached to the KubeMaster VM.

When you open this page, you will see an overview of the existing inbound and outbound rules.

To add our new rules, select the 'Inbound security rules' setting.

Once the inbound rules list opens, click the New button to add a rule.

Enter the new external port we chose earlier and select 'VirtualNetwork'. The rule may take a few minutes to take effect, but that's it. Open a browser window at your KubeMaster public IP (discussed in our previous article) on the external port, for example http://PUBLIC-IP:9000 for the dashboard, and you should see the service!
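The same rule can also be scripted with the Azure CLI instead of the portal. The Python sketch below only builds an `az network nsg rule create` command for one external port (it does not run it) and adds a simple TCP check you can use once the rule is active. It assumes the Azure CLI is installed and logged in; the resource group, NSG and rule names are placeholders you supply, and setting the destination to the 'VirtualNetwork' service tag is our assumption about how the portal step above maps to the CLI.

```python
import socket
from typing import List


def nsg_rule_command(resource_group: str, nsg_name: str, rule_name: str,
                     port: int, priority: int) -> List[str]:
    """Build (but do not run) an az CLI call allowing inbound TCP on `port`."""
    if not 100 <= priority <= 4096:
        raise ValueError("custom NSG rule priorities must be between 100 and 4096")
    return [
        "az", "network", "nsg", "rule", "create",
        "--resource-group", resource_group,
        "--nsg-name", nsg_name,
        "--name", rule_name,
        "--priority", str(priority),   # must also be unique within the NSG
        "--direction", "Inbound",
        "--access", "Allow",
        "--protocol", "Tcp",
        "--destination-port-ranges", str(port),
        # Assumption: mirrors the 'VirtualNetwork' choice made in the portal.
        "--destination-address-prefixes", "VirtualNetwork",
    ]


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout`."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

To apply a rule you could pass the list to `subprocess.run(..., check=True)`, giving each of the ports 9000, 9001 and 9002 its own rule name and priority, and then call `port_is_open("PUBLIC-IP", 9000)` with your master's public IP to confirm the port answers.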

History

- 31st December, 2017: Version 1