Introduction

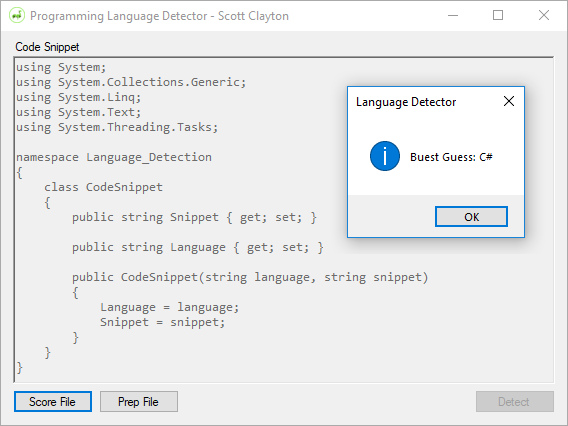

In this article, I explain how I trained a neural network classifier using Azure Machine Learning Studio to achieve 90% accuracy in identifying the programming language of a code snippet.

This is targeted towards individuals with existing ML Studio experience. For a more thorough walkthrough on the basics of ML Studio, please read my other article.

Running the Code

Here is what you need to do to run the sample application.

- Sign up for a free Azure ML Studio account

- Copy my Azure web service.

- Click "Open in Studio"

- Click "Run"

- Click "Set up Web Service"

- Copy your private API Key

- Click "Request/Response"

- Copy your Request URI (endpoint)

- Clone my GitHub repository

- Compile and run

- Enter your service endpoint when prompted

- Enter your API key when prompted

- Enter a code snippet into the text field and click "Detect"
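
For readers who would rather call the service from a script than from the sample application, here is a rough Python sketch of building the Request/Response call. The body layout and the `input1` name are assumptions based on the sample code that classic ML Studio generates, and the endpoint, key, and column names are placeholders, so check them against the sample code shown on your own web service page.

```python
import json
import urllib.request

def build_scoring_request(endpoint, api_key, column_names, row):
    """Build (but do not send) a Request/Response call to the web service."""
    # Body layout assumed from classic ML Studio sample code; "input1" is the
    # usual default input name and may differ in your experiment.
    body = {
        "Inputs": {"input1": {"ColumnNames": column_names, "Values": [row]}},
        "GlobalParameters": {},
    }
    return urllib.request.Request(
        endpoint,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": "Bearer " + api_key,
        },
        method="POST",
    )

# Placeholder endpoint, key, and column names: substitute your own values.
request = build_scoring_request(
    "https://example.invalid/execute?api-version=2.0",
    "YOUR_API_KEY",
    ["Language", "Features"],
    ["", "<b> b>b >bo"],
)
# To actually send it: urllib.request.urlopen(request).read()
```

Nothing is sent until `urlopen` is called, so the request can be inspected first.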

Preprocessing the Data

The data we will be using contains 677 labelled code snippets from 25 different programming languages. Each snippet is wrapped in a <pre> tag and labelled using the lang attribute.

    <pre lang="ObjectiveC">
    var protoModel : ProtoModel?

    override func viewDidLoad() {
        super.viewDidLoad()

        // create and setup the model
        self.protoModel = ProtoModel()
        self.protoModel?.delegate = self

        // setup the view
        labelWorkStatus.text = "Ready for Work"
    }
    </pre>

The first thing I did was parse these code snippets out of their <pre> tags and into a manageable list of labelled snippets. A bit of Regex did the trick.

    using System.Collections.Generic;
    using System.Text.RegularExpressions;
    using System.Web;

    public List<CodeSnippet> ExtractLabeledCodeSnippets(string html)
    {
        List<CodeSnippet> snippets = new List<CodeSnippet>();

        // Group 1 captures the lang attribute, group 2 the body of the <pre> tag.
        MatchCollection matches =
            Regex.Matches(html, "<pre.*?lang\\=\\\"(.*?)\\\".*?>(.*?)<\\/pre>",
                RegexOptions.Singleline | RegexOptions.IgnoreCase);

        foreach (Match m in matches)
        {
            snippets.Add(new CodeSnippet(m.Groups[1].Value, HttpUtility.HtmlDecode(m.Groups[2].Value)));
        }

        return snippets;
    }
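
To make the extraction step easier to try out in isolation, here is a small Python sketch of the same idea: a lazy regex that captures the `lang` attribute and the tag body, followed by HTML decoding. The function name and the sample page are made up for illustration.

```python
import html
import re

# Same pattern idea as the C# version: group 1 is the lang attribute,
# group 2 is everything between the opening and closing <pre> tags.
PRE_PATTERN = re.compile(
    r'<pre.*?lang="(.*?)".*?>(.*?)</pre>',
    re.DOTALL | re.IGNORECASE,
)

def extract_labelled_snippets(page):
    """Return a list of (language, code) pairs found in an HTML page."""
    return [(lang, html.unescape(body)) for lang, body in PRE_PATTERN.findall(page)]

# Hypothetical input with two labelled snippets.
sample_page = (
    '<pre lang="C#">if (a &lt; b) { }</pre>\n'
    '<pre lang="Python">\nprint("hi")\n</pre>'
)
snippets = extract_labelled_snippets(sample_page)
```

`re.DOTALL` plays the role of `RegexOptions.Singleline`, letting `.` match across the line breaks inside a snippet.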

These snippets cannot be fed to a classifier as a big block of text, so I needed to find a way to measure distinct features within the snippets. This was done using a method called feature extraction, where each snippet is broken down into small chunks, hashed, and then counted.

My first attempt was to simply split the code on spaces and new lines so that each chunk of code became its own feature.

    class CleanFeatureExtractor : IFeatureExtractor
    {
        public List<string> ExtractFeatures(string input)
        {
            return input.Replace("\r", " ").Replace("\n", " ")
                .Split(new string[] { " " }, StringSplitOptions.RemoveEmptyEntries).ToList();
        }
    }

The problem here is that some languages can go a long time without a space, so an extremely high number of features appeared only once in the entire data set.
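
The sparsity problem is easy to reproduce. The Python sketch below splits two toy snippets (invented for this example) on whitespace and measures what fraction of the distinct features occur only once.

```python
from collections import Counter

def whitespace_features(snippet):
    # str.split() with no arguments splits on any run of whitespace
    # (spaces and new lines alike) and drops empty entries.
    return snippet.split()

def singleton_ratio(snippets):
    """Fraction of distinct features that occur exactly once overall."""
    counts = Counter(f for s in snippets for f in whitespace_features(s))
    singletons = sum(1 for count in counts.values() if count == 1)
    return singletons / len(counts)

# The same loop written with and without spaces.
toy_snippets = [
    "for(int i=0;i<n;i++){ total+=i; }",
    "for (int i = 0; i < n; i++) { total += i; }",
]
ratio = singleton_ratio(toy_snippets)
```

Even though both snippets express the same loop, almost none of their whitespace-delimited chunks match, which is the behaviour described above.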

My next attempt expanded on my previous attempt by also splitting on symbols in addition to spaces and new lines. The symbols seemed critical to the identity of a language, so I kept them in as their own features too.

    class NGramFeatureExtractor : IFeatureExtractor
    {
        public List<string> ExtractFeatures(string input)
        {
            List<string> features = new List<string>();

            input = input.ToLower().Replace("\r", " ").Replace("\n", " ");

            features.AddRange(Regex.Replace(input, "[^a-z]", " ")
                .Split(new string[] { " " }, StringSplitOptions.RemoveEmptyEntries));

            features.AddRange(Regex.Replace(input, "[a-zA-Z0-9]", " ")
                .Split(new string[] { " " }, StringSplitOptions.RemoveEmptyEntries));

            return features;
        }
    }

Unfortunately, I was not able to get any decent results here either.

My final attempt, and the attempt I ended up using, was to split the entire snippet into groups of sequential letters. This produced a lot of features for each snippet, but that does not matter since we will remove useless features later before training.

    class LetterGroupFeatureExtractor : IFeatureExtractor
    {
        public int LetterGroupLength { get; set; }

        public LetterGroupFeatureExtractor(int length)
        {
            LetterGroupLength = length;
        }

        public List<string> ExtractFeatures(string input)
        {
            List<string> features = new List<string>();

            input = input.ToLower().Replace("\r", "")
                .Replace("\n", "").Replace(" ", "");

            // <= so that the final letter group is included
            for (int i = 0; i <= input.Length - LetterGroupLength; i++)
            {
                features.Add(input.Substring(i, LetterGroupLength));
            }

            return features;
        }
    }

This will take a code snippet like <b>bold</b> and convert it into a lot of N-character long strings. For N=3, the above code would be split into 9 features: "<b>", "b>b", ">bo"... You get the picture.
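
As a quick check of that count, here is a Python sketch of the same sliding-window idea. It is an illustration rather than the code the application runs, and the function name is arbitrary.

```python
def letter_groups(snippet, length=3):
    """Slide a window of `length` characters over the whitespace-free snippet."""
    text = snippet.lower().replace("\r", "").replace("\n", "").replace(" ", "")
    # A string of n characters contains n - length + 1 windows (none if too short).
    return [text[i:i + length] for i in range(len(text) - length + 1)]

groups = letter_groups("<b>bold</b>")
```

For this 11-character input, `groups` holds 9 entries, starting with `<b>` and ending with `/b>`.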

I experimented with various lengths of these letter group features, and 3 seemed to be the magic number. Accuracy gradually dropped the larger I made my letter group sizes.

These extracted features were then saved to a CSV file with two columns—one for the known language and one for the list of extracted features (which basically reads like a really long stutter). This is the file that I uploaded to Azure for training.
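
A minimal Python sketch of writing such a file follows. The column names `Language` and `Features` and the space-separated feature list are assumptions made for illustration; the article does not state the exact layout.

```python
import csv
import io

def write_training_csv(rows, out):
    """Write (language, feature list) pairs as a two-column CSV."""
    writer = csv.writer(out)
    writer.writerow(["Language", "Features"])  # hypothetical header names
    for language, features in rows:
        # Letter groups contain no spaces, so a space is a safe separator.
        writer.writerow([language, " ".join(features)])

buffer = io.StringIO()
write_training_csv([("HTML", ["<b>", "b>b", ">bo"]), ("C#", ['"a,', 'a,b'])], buffer)
csv_text = buffer.getvalue()
```

The `csv` module quotes fields that contain commas or quotation marks, which matters here because code symbols are kept as part of the features.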

Before we can train, we need to hash our arbitrarily long list of features so that we get a fixed number of columns to feed the classifier. This is how we reduce a complex set of input data into something we can train on.
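
The idea can be sketched in a few lines of Python. CRC32 is only a stand-in here, since the hash used by the Studio module is not specified in this article, and `bits` is a parameter of the sketch rather than a Studio setting name.

```python
import zlib

def hash_features(features, bits=15):
    """Map a variable-length feature list to counts over 2**bits columns."""
    num_columns = 1 << bits  # 15 bits -> 32,768 columns
    counts = {}
    for feature in features:
        # CRC32 is an assumption for illustration; any stable hash would do.
        column = zlib.crc32(feature.encode("utf-8")) % num_columns
        counts[column] = counts.get(column, 0) + 1
    return counts

hashed = hash_features(["<b>", "b>b", "<b>"])
```

Every snippet ends up described by the same fixed set of columns no matter how many features it produced, at the cost of occasional collisions between unrelated features.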

I chose to use a 15-bit hash (to give me 32,768 feature columns) and then to throw out all but the 2,000 most useful columns. For this, I chose the Mutual Information scoring method because it resulted in the highest accuracy (followed by Spearman Correlation and Chi Squared).
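
To give a feel for what mutual information scoring does, here is a simplified Python sketch that scores each hashed column by the mutual information between "column is present in the snippet" and the language label, then keeps the top few. Treating columns as present or absent is an assumption of this sketch, and the Studio module may compute its score differently.

```python
import math
from collections import Counter

def mutual_information(present, labels):
    """Mutual information (in nats) between a presence flag and the label."""
    n = len(labels)
    joint = Counter(zip(present, labels))
    flag_counts = Counter(present)
    label_counts = Counter(labels)
    score = 0.0
    for (flag, label), count in joint.items():
        # p(x, y) * log(p(x, y) / (p(x) * p(y))), written with raw counts.
        score += (count / n) * math.log(count * n / (flag_counts[flag] * label_counts[label]))
    return score

def top_columns(rows, labels, keep):
    """rows holds one {column: count} dict per snippet; return the best columns."""
    columns = set().union(*rows)
    scored = [
        (mutual_information([column in row for row in rows], labels), column)
        for column in columns
    ]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [column for _, column in scored[:keep]]

# Toy data: columns 1 and 2 separate the languages, column 7 appears everywhere.
toy_rows = [{1: 2, 7: 1}, {1: 1, 7: 3}, {2: 1, 7: 1}, {2: 4, 7: 2}]
toy_labels = ["C#", "C#", "Python", "Python"]
best = top_columns(toy_rows, toy_labels, keep=2)
```

A column that shows up in every snippet scores zero because it says nothing about the language, which is why such columns are the first to be discarded.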

The very last thing I did before training was to split the data set 80%-20% between training and testing so that later I could calculate an accuracy on a set of data that I did not train on.
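
Outside of Studio, a split like this can be sketched in Python as a seeded shuffle followed by a cut. The seed and function name are arbitrary choices for the example.

```python
import random

def split_dataset(rows, train_fraction=0.8, seed=0):
    """Shuffle a copy of the rows and cut it into training and testing parts."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split repeatable
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]

train_rows, test_rows = split_dataset(range(677))
```

Shuffling before cutting matters when the source file is grouped by language, because otherwise whole languages could end up only in the testing part.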

Training the Model

For training, I tried all four available multiclass classifiers and found the neural network to give the best results (followed closely by logistic regression). The decision tree and decision forest classifiers never got above 78% accuracy. After playing around with the number of hidden layer neurons, I eventually landed on 100.

After training the model, I was happy to see an overall accuracy of 90.54% on the unseen testing data. The model misclassified every ObjectiveC snippet as C++ and seemed to struggle with JavaScript, but overall it did a great job.

From here, I exposed my model as a web service in Azure (read this to see how), wired it up to my application, and then ran the entire set of snippets through. The results were quite satisfying. I managed to get an overall accuracy of 96% across the training and testing sets.

Total: 677

Correct: 653

Incorrect: 24

Accuracy: 96.455%
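
The accuracy figure is simply the number of correct predictions divided by the total. The Python sketch below shows that calculation, plus a simple way to tally which language pairs get confused; the three-item example data is invented.

```python
from collections import Counter

def accuracy(actual, predicted):
    """Share of predictions that match the known label."""
    correct = sum(1 for a, p in zip(actual, predicted) if a == p)
    return correct / len(actual)

def confusion_counts(actual, predicted):
    """Count every (actual, predicted) pair, i.e. a sparse confusion matrix."""
    return Counter(zip(actual, predicted))

# Reproducing the figure above from the reported counts.
reported = f"{653 / 677:.3%}"  # "96.455%"

# Invented mini example.
actual = ["C++", "ObjectiveC", "C#"]
predicted = ["C++", "C++", "C#"]
mistakes = confusion_counts(actual, predicted)
```

Looking at the off-diagonal pairs, such as `("ObjectiveC", "C++")`, is the quickest way to see which languages the model mixes up.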

Improving the Results

My model has a pretty decent accuracy at this point, but it is based on only a few hundred code samples. To remedy this, I downloaded dozens of large projects from GitHub for 24 different languages and extracted 46,459 labelled code samples.

The majority of the snippets were from just 3 languages, so I decided to limit each language to 2,000 samples. I also excluded duplicates and really small snippets, and tried to remove as many comments as possible. That brought me down to 17,359 total snippets.
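
The filtering described here can be sketched in Python as a single pass over the snippets. The `min_length` threshold is a hypothetical value, since the article does not say how small "really small" is, and comment removal is left out.

```python
from collections import defaultdict

def balance_snippets(snippets, cap=2000, min_length=50):
    """Drop tiny and duplicate snippets, then keep at most `cap` per language."""
    seen = set()
    per_language = defaultdict(int)
    kept = []
    for language, code in snippets:
        body = code.strip()
        if len(body) < min_length or body in seen:
            continue  # too small, or an exact duplicate of a kept snippet
        if per_language[language] >= cap:
            continue  # this language already has its full quota
        seen.add(body)
        per_language[language] += 1
        kept.append((language, body))
    return kept

# Tiny invented data set with a low cap so the effect is visible.
raw = [
    ("C#", "int a = 1;"), ("C#", "int a = 1;"), ("C#", "x"),
    ("C#", "int b = 2;"), ("C#", "int c = 3;"), ("Python", "print(1) # ok"),
]
balanced = balance_snippets(raw, cap=2, min_length=5)
```

Capping per language keeps the classifier from simply learning to predict whichever language dominates the download.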

These snippets were then divided up so that 90% could be used for training and 10% could be used for testing.

Training took a lot longer (since it had to process 160MB of data), but eventually got me an overall accuracy of 99% on the 1,735 unseen testing snippets.

Finally, I ran the original set of 677 snippets (which were not used for training) through the new, much larger model. Unfortunately, as you can see in the confusion matrix below, it was only able to classify 53% of the snippets.

I believe the varied results are due to just how different the format of my snippets is from that of the provided snippets. Given a few hundred hours, I might be able to manually clean up the data and get better results, but forget that. I also considered writing a scraper to download a million labelled snippets off Code Project articles, but figured I would rather not get banned.

I tried to find a way to remove comments and string literals and give extra weight to known keywords, but that will only work if you first know what language you are dealing with. The preprocessing step needs to be exactly the same for both the training and real-life data sets, so using the label is off limits (since real-life data is not labelled).

Summary

I managed to train a model capable of classifying 90% of the 67 unseen snippets and 96% of all 677 snippets.

The initial data set was small, so I created my own set for training which got me 99% accuracy on 1,735 unseen snippets, but only 53% accuracy on the original 677 snippets (due to differences in format).

This was a very rewarding project, and I learned a lot while working on it.

Thanks!

History

- 2/16/2018 - Initial release