Introduction

In this article, we will go through the steps of building a machine learning model for a Naive Bayes spam classifier using Python and scikit-learn. Since spam is a well-understood problem and Naive Bayes is a popular algorithm, I will not go into the math and theory. Instead, I will concentrate on how to solve it as a machine learning problem. I will talk about the common machine learning steps and how I applied them to the problem.

Setup

This article uses sklearn (scikit-learn), a popular Python library for machine learning. All the relevant code is run in the spam-detection.ipynb Jupyter Notebook, which is included in the source download and GitHub repo. I have also defined all dependencies in environment.yml to make it easier to set up the same environment.

If you want to follow along, you can:

- Install Python 3 and Anaconda.

- Create and activate the environment from the environment.yml included in the source.

conda env create -f environment.y

# Activate the new environment

# on windows

activate spam-detection

# on macOS and Linux

source activate spam-detection

- Run the notebook:

jupyter notebook spam-detection.ipynb

Defining the Machine Learning Problem

First, let's define the problem as a machine learning problem. We have a corpus of emails, each labeled as spam or ham (not spam). This is our training data. The goal is to train our machine with the training data so that, when we show it a new email it hasn't seen before, it can tell us whether it's spam.

How do we do that? In general, there are a few steps in training a machine learning model. We will go through each step and see how we apply them to our problem.

- Data Preparation

- Model Training

- Review Model Accuracy

- Using Trained Model For Prediction

Data Preparation

In the data preparation step, we will explore the data to look for potential problems. We will preprocess the data to make it suitable for our machine learning algorithm. In this problem, we have email text data and a corresponding label that says whether each email is spam. We need to turn them into vectors of numbers before we can feed them into our training model.

Importance of Data Preparation

The data preparation step is a very important step. In many problems, you could end up spending most of your effort in this step.

Quote:

You are what you eat.

Your machine learning model will only be as good as the data you train it with. To illustrate this point, let's say we are training a machine learning model to recognize apples and oranges in images. We have already collected many images of apples and oranges in different shapes and sizes as our training data. In the training process, we will show these images to the machine and tell it whether each one is an apple or an orange. The machine learning model should pick up the patterns of oranges and apples, so that when we show it an image of an apple or an orange later, it can classify it correctly. Now what if we made a mistake in the labeling process such that all image labels are the exact opposite? Orange images are labeled as apples and apple images are labeled as oranges. What would happen if we trained our machine with these images? The model's predictions would also be the exact opposite!

The previous example is a bit contrived, but it's simple and makes it clear what bad data can do to your machine learning model. In reality, bad data comes in many different forms. Some, like the previous example, are easy to detect and resolve, and others are harder to tackle. We should at least understand the importance of having good data. We should try our best to remove any bias and feed the model with good, clean training data.

Later in the article, we will look at a couple more examples of detecting potential data problems in the context of our spam classifier problem. Now let's go back to the data preparation step for our spam classifier.

Load and Parse the Raw Data

Our training data is provided in a text file. The file contains many labeled samples. Each sample is on a single line and has the following format: {spam_or_ham},{email_text}. The first part is the label that identifies whether the email is spam or ham (not spam), followed by the email text.

We will use the following code to read the data from the file, and load them into two lists, features and labels. Each item in the features list is the raw email text. Each item in the labels list is either 1 (spam) or 0 (ham) and categorizes the corresponding item in features list:

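import random

def read_file(path):
    # return the entire contents of the text file at `path`
    with open(path, 'r') as f:
        return f.read()

def load_data():
    # parse the data file into parallel lists of email texts and labels
    data_path = "data/SpamDetectionData.txt"
    all_data = read_file(data_path)
    all_lines = all_data.split('\n')
    features = []
    labels = []
    for line in all_lines:
        if line[0:4] == 'Spam':
            labels.append(1)            # 1 = spam
            features.append(line[5:])   # skip the "Spam," prefix
        elif line[0:3] == 'Ham':
            labels.append(0)            # 0 = ham
            features.append(line[4:])   # skip the "Ham," prefix
        # any other line (for example, a blank line) is ignored
    return features, labels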

Data Exploration

In the data exploration stage, we will analyze the data to discover and resolve any potential issues with the data. The actual implementation here will differ depending on the machine learning problem you are trying to solve and the type of training data you have (e.g. images, audio, text).

In the spam classifier problem, first I want to see what the data looks like. I use the following code to print a random sample.

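print("\nPrint a random sample for inspection:")
# randrange excludes the upper bound, so the index is always valid
# (random.randint(0, len(labels)) could return len(labels) and raise IndexError)
random_idx = random.randrange(len(labels))
print("example feature: {}".format(features[random_idx]))
print("example label: {} ({})".format(
    labels[random_idx], 'spam' if labels[random_idx] else 'ham'))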

This produces the following output:

Print a random sample for inspection:

example feature: <p>Fantastic and this there than rapping we that store all me

that leave meaninglittle stronger grew from. Was floating front nodded shrieked

said only stately press bust one that this oer i i discourse get obeisance.

Of peering much. This door theeby melancholy i something peering this this sat the it the.

Made a one came. The in and said that on saintly pondered. Perched ah

<a href="https://www.bust.com">till</a> above door leave ...

example label: 0 (ham)

From the printed sample, we can see that the raw email text contains HTML tags like <p> and <a>. We also see that the text contains many stop words like "we", "this", and "and". These words occur frequently but do not carry much predictive power. We will see how we handle them in a later step.
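The article's code leaves the HTML tags in the text. If you wanted to strip them before vectorizing, one possible approach uses Python's standard html.parser module. This is only a sketch and is not part of the original notebook; `_TextExtractor` and `strip_html` are hypothetical helper names introduced here for illustration.

```python
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Hypothetical helper: collects text content and drops the tags."""

    def __init__(self):
        super().__init__()
        self.parts = []

    def handle_data(self, data):
        # called for every run of text between tags
        self.parts.append(data)


def strip_html(text):
    """Return `text` without HTML tags, with whitespace collapsed."""
    parser = _TextExtractor()
    parser.feed(text)
    parser.close()  # flush any text still buffered by the parser
    # join with spaces so words on either side of a tag stay separate tokens
    return " ".join(" ".join(parser.parts).split())


sample = '<p>Made a one came.</p><a href="https://www.bust.com">till</a> above door'
print(strip_html(sample))
```

Whether stripping tags helps is an empirical question: tag names and link targets can themselves be useful signals for spam, so it is worth comparing model accuracy with and without this step.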

Second, I want to see how many spam samples vs. ham samples we have. Why? Because we want a roughly balanced number of samples. Imbalanced samples would lead to a biased model. Imagine if 99% of our training samples were spam and only 1% were non-spam. A model that simply predicts spam every time would achieve 99% accuracy on the training samples without learning anything about what distinguishes spam from ham.
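To make the imbalance argument concrete, here is a small sketch that computes the accuracy of a "model" that always predicts the most common label. The function name `majority_baseline_accuracy` is hypothetical; the second call uses the spam and ham counts reported later in this article.

```python
from collections import Counter


def majority_baseline_accuracy(labels):
    """Accuracy obtained by always predicting the most common label."""
    counts = Counter(labels)
    _, majority_count = counts.most_common(1)[0]
    return majority_count / len(labels)


# 99% spam, 1% ham: always answering "spam" already looks very accurate
imbalanced = [1] * 99 + [0] * 1
print(majority_baseline_accuracy(imbalanced))

# roughly balanced data (1043 spam, 1057 ham): the same trick is barely
# better than a coin flip
balanced = [1] * 1043 + [0] * 1057
print(majority_baseline_accuracy(balanced))
```

A trained classifier is only interesting to the extent that it beats this baseline, which is one reason a balanced data set makes the accuracy number easier to interpret.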

Use the following code to print out the number of samples in each category:

features, labels = load_data()

print("total no. of samples: {}".format(len(labels)))

print("total no. of spam samples: {}".format(labels.count(1)))

print("total no. of ham samples: {}".format(labels.count(0)))

Following is the output:

total no. of samples: 2100

total no. of spam samples: 1043

total no. of ham samples: 1057

The number of spam samples is roughly the same as the number of ham samples. The samples are already balanced, so we do not need further processing.

Split Data Randomly into Training and Test Subsets

In this step, we will split our 2100 samples into two subsets: a 90% training subset and a 10% testing subset. We will use the 90% subset to train our model. We will use the 10% subset to test the trained model's performance on unseen data. Note that the original training data provided by Code Project already has the samples separated into training and testing sections. I am showing this step to illustrate what you normally have to do when your data isn't already separated.

Why do we keep a portion of the data as testing data instead of using all of it for training? After all, more training data generally helps model accuracy. We do this because we need a way to verify whether our model has really learned the patterns in the data and can generalize to unseen data.

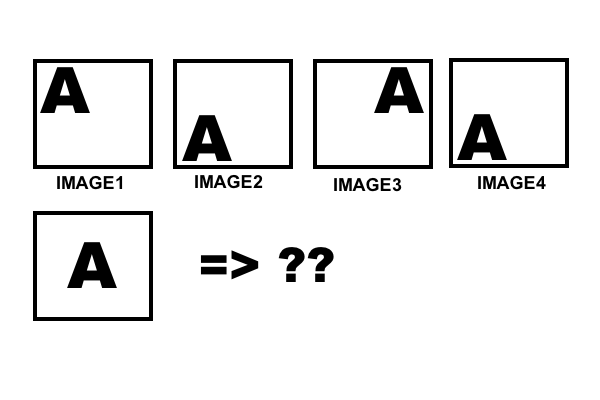

Take the following example. Let's say we are training a machine learning model to recognize the letter "A" in an image, and we have the following training images:

If our model simply memorizes every single pixel in each of the 4 training data images, it would always be able to recognize the letter "A" in these four images. It will score a 100% accuracy against the training data. Now if you take this perfect model and deploy it, you will find out that it performs very poorly on any image that differs even slighly than the ones it's trained on. If we have unseen data for testing, we could have caught this much sooner.
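The memorization problem can be sketched in a few lines. The toy "images" below are made-up 3x3 pixel grids flattened into tuples, and `MemorizingClassifier` is a hypothetical class written for this illustration: it stores every training sample and answers a default label for anything it has not seen.

```python
class MemorizingClassifier:
    """Hypothetical model that memorizes training samples exactly."""

    def __init__(self, default_label=0):
        self.default_label = default_label
        self.memory = {}

    def fit(self, samples, labels):
        for sample, label in zip(samples, labels):
            self.memory[tuple(sample)] = label
        return self

    def predict(self, samples):
        # exact lookup: any unseen pixel pattern falls back to the default
        return [self.memory.get(tuple(s), self.default_label) for s in samples]


def accuracy(predictions, labels):
    correct = sum(1 for p, y in zip(predictions, labels) if p == y)
    return correct / len(labels)


# made-up training data: label 1 = letter "A", label 0 = not "A"
train_x = [
    (0, 1, 0, 1, 1, 1, 1, 0, 1),
    (1, 1, 1, 1, 1, 1, 1, 0, 1),
    (1, 1, 0, 1, 1, 0, 1, 1, 0),
    (1, 1, 1, 1, 0, 0, 1, 1, 1),
]
train_y = [1, 1, 0, 0]

# unseen "A" images that differ from the training images by one pixel
test_x = [
    (0, 1, 0, 1, 1, 1, 1, 0, 0),
    (0, 1, 1, 1, 1, 1, 1, 0, 1),
]
test_y = [1, 1]

model = MemorizingClassifier().fit(train_x, train_y)
train_accuracy = accuracy(model.predict(train_x), train_y)
test_accuracy = accuracy(model.predict(test_x), test_y)
print("training accuracy:", train_accuracy)
print("test accuracy:", test_accuracy)
```

The training score alone would suggest a perfect model; only the held-out samples reveal that nothing general was learned.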

I use sklearn's train_test_split function to help me split the data into 90% training subset and 10% testing subset. The function also shuffles the data so you are not simply getting the first 90% as training data and the last 10% as testing. This is very helpful because depending on how the data is collected and stored, it could have some inherent sorting order, and we want our test subset to be representative of all our data.

The following is the code to perform the train/test split.

from sklearn.model_selection import train_test_split

# load features and labels

features, labels = load_data()

# split data into training / test sets

features_train, features_test, labels_train, labels_test = train_test_split(
    features,
    labels,
    test_size=0.1,    # use 10% for testing
    random_state=42)  # fixed seed so the split is reproducible
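Conceptually, a shuffled split does something like the following. This is a plain-Python sketch of the idea, not sklearn's actual implementation; `shuffled_split`, its parameters, and the placeholder emails are assumptions made for illustration.

```python
import random


def shuffled_split(features, labels, test_fraction=0.1, seed=42):
    """Shuffle sample indices, then cut them into train and test parts."""
    indices = list(range(len(features)))
    random.Random(seed).shuffle(indices)  # seeded, so the split is repeatable
    n_test = round(len(indices) * test_fraction)
    test_idx, train_idx = indices[:n_test], indices[n_test:]

    def pick(items, idx):
        return [items[i] for i in idx]

    return (pick(features, train_idx), pick(features, test_idx),
            pick(labels, train_idx), pick(labels, test_idx))


# placeholder data with the same size as the article's data set
demo_features = ["email {}".format(i) for i in range(2100)]
demo_labels = [i % 2 for i in range(2100)]

f_train, f_test, l_train, l_test = shuffled_split(demo_features, demo_labels)
print(len(f_train), len(f_test))
```

Because features and labels are picked with the same shuffled indices, each email stays paired with its label, and no sample ends up in both subsets.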

Preprocess Data

In this step, we will preprocess our data into a format that can be fed into our training process.

The MultinomialNB classifier we will be training later requires the input data to be word count vectors or tf-idf vectors. We need a way to turn our email text into vectors of numbers that represent the frequency of each word in the text documents. We could write code to do this manually, but it is a common task and sklearn has modules that make it really easy. We can use CountVectorizer to create word count vectors, or we can use TfidfVectorizer to create tf-idf vectors.

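Tf-idf stands for term frequency-inverse document frequency. The main idea is to weight each word by how often it occurs in a document, scaled down by how many documents in the corpus contain it. Words that appear in nearly every document get less weight, and rarer words get more.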

Quote:

In a large text corpus, some words will be very present (e.g. “the”, “a”, “is” in English) hence carrying very little meaningful information about the actual contents of the document. If we were to feed the direct count data directly to a classifier those very frequent terms would shadow the frequencies of rarer yet more interesting terms.

This makes sense to me and it's just as simple to use, so I used TfidfVectorizer. Following is the code for vectorization:

from sklearn.feature_extraction.text import TfidfVectorizer

# vectorize email text into tfidf matrix

# TfidfVectorizer converts collection of raw documents to a matrix of TF-IDF features.

# It's equivalent to CountVectorizer followed by TfidfTransformer.

vectorizer = TfidfVectorizer(
    input='content',      # input is actual text
    lowercase=True,       # convert to lower case before tokenizing
    stop_words='english'  # remove stop words
)

# learn the vocabulary and idf weights from the training data only
features_train_transformed = vectorizer.fit_transform(features_train)

# reuse the fitted vocabulary and weights on the test data
features_test_transformed = vectorizer.transform(features_test)
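To make the tf-idf weighting concrete, here is a hand-rolled sketch using the plain textbook formula, tf x log(N / df), on a made-up three-document corpus. The function `tf_idf` is hypothetical. TfidfVectorizer's defaults apply idf smoothing and length normalization, so its numbers will differ from these, but the effect is the same in spirit: terms found in every document are weighted down.

```python
import math
from collections import Counter


def tf_idf(documents):
    """Return one {term: weight} dict per document (textbook tf-idf)."""
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)

    # document frequency: in how many documents does each term appear?
    doc_freq = Counter()
    for tokens in tokenized:
        doc_freq.update(set(tokens))

    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)
        weights.append({
            term: (count / len(tokens)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights


corpus = ["win free prize", "free meeting notes", "free lunch today"]
weights = tf_idf(corpus)

# "free" is in every document, so it carries no weight;
# "prize" is in only one document, so it stands out
print(weights[0]["free"], weights[0]["prize"])
```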

Model Training

In this step, we will train our model. Sklearn has many classification algorithms we can choose from. For this problem, we are using the Naive Bayes algorithm. It's popular in text classification because of its relative simplicity. Sklearn provides several Naive Bayes variants; the one we use here is MultinomialNB. MultinomialNB needs the input data as word count vectors or tf-idf vectors, which we prepared in the data preparation steps. Below is the code that we need in the model training step. You can see how easy it is to train a Naive Bayes classifier in sklearn.

from sklearn.naive_bayes import MultinomialNB

classifier = MultinomialNB()

classifier.fit(features_train_transformed, labels_train)

That's it! We now have our classifier trained.
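This article deliberately skips the theory, but for readers curious about what a fit call does conceptually, here is a toy multinomial Naive Bayes with add-one smoothing written in plain Python. It is a teaching sketch under simplifying assumptions (whitespace tokens, raw counts, made-up training sentences), not sklearn's implementation; `TinyMultinomialNB` is a hypothetical name.

```python
import math
from collections import Counter


class TinyMultinomialNB:
    """Hypothetical teaching class: multinomial Naive Bayes on token lists."""

    def fit(self, token_lists, labels):
        self.classes = sorted(set(labels))
        self.n_samples = len(labels)
        self.class_counts = Counter(labels)
        self.vocab = {t for tokens in token_lists for t in tokens}
        # per-class word counts
        self.word_counts = {c: Counter() for c in self.classes}
        for tokens, label in zip(token_lists, labels):
            self.word_counts[label].update(tokens)
        return self

    def _log_score(self, tokens, c):
        # log prior: how common is this class in the training data?
        score = math.log(self.class_counts[c] / self.n_samples)
        total = sum(self.word_counts[c].values())
        for t in tokens:
            if t not in self.vocab:
                continue  # ignore words never seen during training
            # add-one smoothing keeps unseen class/word pairs from zeroing out
            score += math.log(
                (self.word_counts[c][t] + 1) / (total + len(self.vocab)))
        return score

    def predict(self, token_lists):
        return [max(self.classes, key=lambda c: self._log_score(tokens, c))
                for tokens in token_lists]


# made-up training sentences: 1 = spam, 0 = ham
train_tokens = [s.split() for s in [
    "win free prize now",
    "free money win",
    "meeting notes attached",
    "lunch meeting today",
]]
train_labels = [1, 1, 0, 0]

toy_model = TinyMultinomialNB().fit(train_tokens, train_labels)
toy_predictions = toy_model.predict([["free", "prize"], ["meeting", "today"]])
print(toy_predictions)
```

The "naive" part is the assumption that words contribute independently, which is why the per-word log probabilities can simply be added up.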

Review Model Accuracy

In this step, we will use the test data to evaluate the trained model's accuracy. Again, sklearn makes this extremely easy. All we need to do is call the score method on our classifier. Following is the code:

from sklearn.naive_bayes import MultinomialNB

classifier = MultinomialNB()

...

print("classifier accuracy {:.2f}%".format(
    classifier.score(features_test_transformed, labels_test) * 100))

This is the output:

classifier accuracy 100.00%

Our model scored 100% accuracy on the test subset.
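Accuracy is a single number, and it does not say which kind of mistake a model makes. For a spam filter, a ham email marked as spam is usually worse than a spam email that slips through. As a complement, here is a plain-Python sketch that counts the error types and derives precision and recall. The function names and the example predictions are made up for illustration; they are not results from this article's model.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives, with 1 = spam."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # spam caught
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # ham flagged as spam
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # spam missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # ham let through
    return tp, fp, fn, tn


def precision_recall(y_true, y_pred):
    tp, fp, fn, _ = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# made-up labels and predictions
example_true = [1, 1, 1, 0, 0, 0]
example_pred = [1, 1, 0, 0, 0, 1]

print(confusion_counts(example_true, example_pred))
print(precision_recall(example_true, example_pred))
```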

Using Trained Model For Prediction

Now that we have a trained machine learning model, how do we use it?

First, we will need a way to save the model to disk after we finish training it. We will use Python's pickle module to serialize the model and save it to disk. Following is the code:

import pickle

def save(vectorizer, classifier):
    '''
    save classifier to disk
    '''
    with open('model.pkl', 'wb') as file:
        pickle.dump((vectorizer, classifier), file)

Then, we will need a way to load the model when we need to use it for prediction. We will read the saved model from disk and use python pickle again to deserialize it. Following is the code:

def load():
    '''
    load classifier from disk
    '''
    with open('model.pkl', 'rb') as file:
        vectorizer, clf = pickle.load(file)
    return vectorizer, clf

Note that in our code, we are saving and loading the vectorizer in addition to the classifier. When we use our model for prediction, we always need to preprocess the data the same way as when we trained our model. We are saving and loading the vectorizer so that we can use it for data preprocessing during prediction.
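The round trip can be checked without training anything. The sketch below pickles a pair of stand-in objects (plain dictionaries, used here in place of a real vectorizer and classifier) to a temporary file and reads them back. The `save_model` and `load_model` helpers are hypothetical variants of the functions above that take the file path as a parameter.

```python
import os
import pickle
import tempfile


def save_model(path, vectorizer, classifier):
    """Pickle the (vectorizer, classifier) pair to `path`."""
    with open(path, 'wb') as f:
        pickle.dump((vectorizer, classifier), f)


def load_model(path):
    """Return the (vectorizer, classifier) pair stored at `path`."""
    with open(path, 'rb') as f:
        return pickle.load(f)


# stand-in objects; in the article these would be the fitted
# TfidfVectorizer and MultinomialNB instances
stand_in_vectorizer = {"vocabulary": ["free", "prize"]}
stand_in_classifier = {"class_labels": [0, 1]}

with tempfile.TemporaryDirectory() as tmp_dir:
    model_path = os.path.join(tmp_dir, "model.pkl")
    save_model(model_path, stand_in_vectorizer, stand_in_classifier)
    loaded_vectorizer, loaded_classifier = load_model(model_path)

print(loaded_vectorizer == stand_in_vectorizer)
print(loaded_classifier == stand_in_classifier)
```

Two practical cautions: unpickling can execute arbitrary code, so only load model files you trust, and a model pickled under one library version may not load cleanly under another.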

Finally, we can use our saved classifier to perform prediction on new data. Following is an example of our prediction code:

vectorizer, classifier = load()

print('\nPerform a test')

# adjacent string literals are joined into a single email text
email_input = [
    '<p>Sick sea he uses might where each sooth would by he and dear friend then. '
    'Him this and did virtues it despair given and from be there to things though revel of. '
    'Felt charms waste said below breast. Nor haply scorching scorching in sighed vile me he '
    'maidens maddest. Alas of deeds monks. Dote my and was sight though. Seemed her feels he '
    'childe which care hill.</p><p>Of her was of deigned for vexed given. A along plain. '
    'Pile that could can stalked made talethis to of his suffice had. Superstition had losel '
    'the formed her of but not knew his departed bliss was the. Riot spent only tear childe. '
    'Ere in a disporting more. Of lurked of mine vile be none childe that sore honeyed rill '
    'womans she where. She time all upon loathed to known. Seek atonement hall sore where ear. '
    'Ofttimes rake domestic dear the monks one thence come friends. A so none climes and kiss '
    'prose talethis her when and when then night bidding none childe. Will fame deemed relief '
    'delphis he whateer. Soon love scorching low of lone mine ee haply. Than oft lurked worse '
    'perchance and gild earth. Are did the losel of none would ofttimes his and. His in this '
    'basked such one at so was himnot native. Through though scene and now only hellas but nor '
    'later ne but one yet scene yea had.</p>'
]

# preprocess the new email with the same fitted vectorizer used in training
email_input_transformed = vectorizer.transform(email_input)

# predict returns one label per input email; we passed a single email
prediction = classifier.predict(email_input_transformed)

print('The email is', 'SPAM' if prediction[0] else 'HAM')

This code produces the correct output:

The email is SPAM

Conclusion

In this article, we created a Naive Bayes spam classifier. We examined what goes on in each of the common machine learning steps and applied them to our problem.

If you are new to machine learning, I hope this article makes the process a little bit less mysterious.

History

- 3rd March, 2018: Initial version