Introduction

ClassifyBot is an open-source, cross-platform .NET library that tries to automate, and make reproducible, the steps needed to create machine learning pipelines for object classification using open-source ML and NLP libraries like Stanford NLP, NLTK, TensorFlow, CNTK and so on. An ML project can often be thought of as a 'pipeline' or workflow, where data moves sequentially through different stages that each perform a specific operation on the dataset. Data in the wild or archived data is rarely in the format needed for specific ML tools or tasks, so most ML projects begin with code to retrieve, preprocess and prepare the data so that it can be loaded into a particular machine learning library. Once you have a data source in the right format, you will want to do operations like selecting the features for your classification task, splitting the data into training and test datasets at random, creating models based on your training dataset, testing the model on your test dataset and reporting the result, adjusting model and classifier parameters based on the results of your tests, and so on. Each step of this process has to be repeated for different data sources, datasets, classification models and parameters, and classifier libraries.

For each ML project you start, you can write the code to implement each step and wire the parameters and stages together manually. However, much of this can be automated, turning your Python or R or F# or other code into reusable and reproducible data operations that can also integrate with other applications and be deployed to different environments with shared services for things like logging. The automation should also not be tied to a specific tool, library or language, so that you can design your pipeline independently of any particular technology. These kinds of requirements often occur in the context of "data engineering" or "ScienceOps", where ML models and pipelines created by data scientists or programmers are deployed to support the day-to-day operations of a company. But anyone interested in ML and data science who would like to learn and use different tools and languages to build ML experiments, while minimizing duplication and boilerplate code, will find such automation useful.

ClassifyBot tries to automate the process of building these kinds of ML classification projects and experiments by providing a logical model for data processing pipelines, where each pipeline is split into discrete, self-contained stages that each perform a specific operation according to different parameters. Each stage can use any library or language to implement the required operation on the input dataset and is only required to expose a thin .NET interface that is called by the pipeline driver. Unlike data pipeline libraries like Cascading, ClassifyBot isn't opinionated about how you implement each stage in your pipeline. The default behaviour for each stage is simply to produce files (usually JSON) that can be consumed by later stages and by any tool or language the user wants to use. Interop with Python, R and Java code is provided using libraries like pythonnet or by command-line invocation. Running a tool as a separate process through its command line is useful for interoperating with languages like R that have toolchains and libraries licensed under the full GPL and can't be linked to other non-GPL code. For instance, the Stanford NLP Classifier, which is written in Java and GPL-licensed, is invoked as an external process.
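To illustrate the out-of-process pattern, here is a Python sketch that assumes a local Stanford Classifier jar and its `edu.stanford.nlp.classify.ColumnDataClassifier` entry point. The jar path, file names and helper names are hypothetical and not ClassifyBot's API. The column options are written to a Java properties file, and the classifier runs in its own JVM via `subprocess`, so none of the classifier's code is ever loaded into the calling process.

```python
import subprocess
from pathlib import Path


def format_properties(props):
    """Render options as Java .properties text, one key=value per line."""
    lines = []
    for key, value in props.items():
        if isinstance(value, bool):
            value = "true" if value else "false"  # Java expects lowercase booleans
        lines.append(f"{key}={value}")
    return "\n".join(lines) + "\n"


def build_command(jar, prop_file, java="java"):
    """argv that runs ColumnDataClassifier in its own JVM process."""
    return [java, "-cp", str(jar),
            "edu.stanford.nlp.classify.ColumnDataClassifier",
            "-prop", str(prop_file)]


def run_classifier(jar, prop_file, props):
    """Write the properties file, run the JVM, return (exit code, output)."""
    Path(prop_file).write_text(format_properties(props), encoding="utf-8")
    result = subprocess.run(build_command(jar, prop_file),
                            capture_output=True, text=True)
    return result.returncode, result.stdout + result.stderr


# Hypothetical file names; column 0 holds the gold label.
props = {
    "trainFile": "train.tsv",
    "testFile": "test.tsv",
    "goldAnswerColumn": 0,
    "1.useSplitWords": True,
    "1.splitWordsRegexp": "\\\\s+",  # the file gets \\s+, which Java reads as \s+
}
# code, output = run_classifier("stanford-classifier.jar", "lang.prop", props)
```

The JVM is never embedded in the calling process. Only the exit code and console output cross the process boundary.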

Data items or records in a pipeline stage derive from Record<TFeature>, which represents a single data item containing one or more string labels or classes with associated scores, and one or more TFeatures, which represent individual features that a data item has. Having a uniform data model minimizes duplication in data operations: you can write generic code that works with any combination of data items, classes and features. Each stage implements one or more interfaces like IExtractor<TRecord, TFeature> that indicate the kind of operation it can perform. A parameter for a stage is just a .NET class property decorated with an attribute indicating it should be exposed in the user interface as an option. The whole pipeline is driven by an easy-to-use CLI that automatically exposes pipeline stages and their parameters as CLI verbs and options. ClassifyBot does the wiring of stage parameters to command-line options automatically, e.g.:

[Option('u', "url", Required = true, HelpText = "Input data file Url. A file with a .zip or .gz or .tar.gz extension will be automatically decompressed.")]
public virtual string InputFileUrl { get; set; }

declares a parameter called InputFileUrl that can be specified on the command line using -u or --url.
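ClassifyBot builds this mapping from .NET attributes. To show the verb-and-option idea outside .NET, here is a rough Python analogue built on the standard `argparse` module. The `Stage` base class, the `LangDataExtract` class and the `clbot` program name are hypothetical, not part of ClassifyBot. Each stage class declares its verb and options. The parser turns the chosen sub-command's options into attributes of a new stage instance.

```python
import argparse


class Stage:
    """Hypothetical base class: subclasses declare a verb and their options."""
    verb = None
    description = ""
    options = []  # (short flag, long flag, add_argument keyword arguments)

    def __init__(self, **params):
        # Parsed option values become attributes, like stage properties in .NET.
        for name, value in params.items():
            setattr(self, name, value)


class LangDataExtract(Stage):
    verb = "langdata-extract"
    description = "Download and extract language samples data."
    options = [
        ("-u", "--url", {"dest": "input_file_url",
                         "help": "Input data file URL."}),
        ("-c", "--compress", {"action": "store_true",
                              "help": "Gzip the output JSON file."}),
    ]


def build_parser(stage_classes):
    """One sub-command (verb) per stage class, one option per declared parameter."""
    parser = argparse.ArgumentParser(prog="clbot")
    verbs = parser.add_subparsers(dest="verb", required=True)
    for cls in stage_classes:
        sub = verbs.add_parser(cls.verb, help=cls.description)
        for short, long, kwargs in cls.options:
            sub.add_argument(short, long, **kwargs)
        sub.set_defaults(stage_class=cls)
    return parser


def create_stage(argv, stage_classes):
    """Parse argv and instantiate the stage class selected by the verb."""
    args = vars(build_parser(stage_classes).parse_args(argv))
    cls = args.pop("stage_class")
    args.pop("verb")
    return cls(**args)
```

For example, `create_stage(["langdata-extract", "-u", "http://example.com/data.zip", "-c"], [LangDataExtract])` returns a `LangDataExtract` whose `input_file_url` and `compress` attributes hold the parsed values.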

You can create and re-use different stages in different pipelines using standard OOP mechanisms like inheritance and composition. For instance, the WebFileExtract stage is a class that derives from the FileExtract class and shares most of its code:

public abstract class WebFileExtract<TRecord, TFeature> : FileExtract<TRecord, TFeature>
    where TFeature : ICloneable, IComparable, IComparable<TFeature>, IConvertible, IEquatable<TFeature>
    where TRecord : Record<TFeature>
{
    // The base FileExtract stage reads from this temporary local file once it has been downloaded.
    public WebFileExtract() : base("{0}-clbot-web-extract-dl.tmp".F(DateTime.Now.Ticks))
    {
        Contract.Requires(!InputFileUrl.Empty());
        if (Uri.TryCreate(InputFileUrl, UriKind.RelativeOrAbsolute, out Uri result))
        {
            InputFileUri = result;
        }
        else throw new ArgumentException("The input file Url {0} is not a valid Uri.".F(InputFileUrl));
    }
    ...
}

The base FileExtract stage runs on a temporary local file that the child WebFileExtract class downloads. To implement stages in a pipeline, you just need to create a .NET assembly whose name starts with 'ClassifyBot' and reference that project or assembly from your main program. ClassifyBot will discover and load the appropriate types from your assemblies at runtime. ClassifyBot ships with a ConsoleDriver class you can use to easily start the ML pipeline from your program, e.g.

class Program
{
    static void Main(string[] args) => ConsoleDriver.RunAndExit(args);
}

is all that is needed to use ClassifyBot in your .NET console program. Eventually, other drivers will be added to allow executing pipelines from different user interfaces like ASP.NET web apps.

Learning is inherently a trial-and-error process, so having a process in place that can reduce the friction and effort of repeating similar operations on data can be very beneficial. ClassifyBot takes care of the common operations in an ML pipeline like parsing command-line parameters, logging, timing, downloading and extracting compressed archive data files from the internet, and reading and writing common data formats like XML, JSON and CSV. Eventually, ClassifyBot will incorporate ontologies like MEX for automatically creating metadata that describes each stage of an ML pipeline. A long-term goal for ClassifyBot is to be used to implement reproducible and archivable ML experiments and to be an automatic producer of metadata like PMML.

Background

Why Code Your Own Pipeline?

There are a lot of commercial hosted products like Amazon Machine Learning, MonkeyLearn, Azure ML Studio et al. that let you create ML workflows and pipelines using a GUI. Building your own pipeline may seem like a lot of unnecessary effort, but it actually has a lot of advantages over using pre-canned solutions:

- Use the ML libraries and tools you want. Using a commercial hosted ML workflow solution means that you are limited in the choice of technologies and tools you can use. Commercial ML solutions often offer ease of use as the reason you should pay for their services, which usually translates into having to use whatever framework or libraries they have built their solution on. Most pre-built ML products don't allow you to take advantage of the huge explosion in the number of high-quality open-source ML libraries, frameworks and tools available today. With ClassifyBot, you can build pipelines using any open-source NLP or ML component you choose: Mallet, Vowpal Wabbit, Apache MXNet, Scikit-Learn, spaCy... whatever you want.

- Use the ML language you want to code in. ClassifyBot is agnostic about what language a particular operation in a pipeline stage is actually implemented in. As long as you can call the desired code via an embedded interpreter or external command-lines or REST APIs or some other kind of IPC or RPC interop from .NET, you can write a pipeline stage interface for it. You can use your existing code or mix-and-match languages and tech in your pipeline however you want without being constrained to a particular language like Python or R or Java.

- Straightforward version control and Application Lifecycle Management. ML workflows created using GUI tools like Azure ML Studio usually have a whole separate version-control and ALM process you have to understand (and worry about). An ML project implemented using ClassifyBot is just .NET code in a .NET project and solution. You can version-control your ML project just as you would your other .NET projects. You can use NuGet to manage your dependencies and organize your ML pipeline stages into separate packages, just as you do with other .NET projects, which can then be distributed to other developers or tested and deployed in a CI pipeline.

- Extensibility. You can extend your pipeline seamlessly to add other steps like annotation or visualization or deployment in a DevOps environment. In the future, you will be able to use ClassifyBot to expose your ML pipelines as an ASP.NET Core Web App or Web Service. You can implement any pattern, like Active Learning, in your ML pipeline that you want.

- Learn stuff. Machine Learning is an endlessly fascinating subject, and learning how different tools and libraries work and can be put together to solve an ML problem is a terrific way to absorb knowledge from the vast number of resources available. I originally started ClassifyBot as a way to extend and generalize some classification work I did for a client, and although I'm still an ML novice, I've learnt a lot about ML in the time spent developing it. Using a pre-canned solution limits the kind of learning you will do to whatever tools and tech the provider chooses.

The Problem

We're going to solve the language detection problem for the recently concluded CodeProject Machine Learning and AI Challenge. The basic challenge is to accurately detect the programming language of a short text sample, given a bunch of existing samples that have been tagged with the correct language. This is a typical text classification problem where there is one class or label -- the programming language -- which can be assigned multiple values like "C#" or "Python" or "JavaScript". We can use a machine learning classifier to identify the programming language, but first we need to extract the data from an archive file hosted on the CodeProject website, and then we must extract or engineer features from the given programming language sample.

We can identify at least four stages in solving the problem:

Extract: The data file is located at a CodeProject URL, so we need to download the file, unzip it, read the text data from the file according to its specific format, and then extract the individual data items. If we save the extracted data in a portable form, this stage will only be needed once, as the rest of the pipeline will work off of the extracted data.

Transform: With the extracted data, we need to transform it into a form suitable for building a classification model. We want to both identify features of a language sample that can be used as inputs into a classification algorithm and also to remove extraneous data or noise that will adversely affect the accuracy of our model. Some features will have to be constructed using text analysis as they are not explicitly given in the text data.

Load: For the initial training of our model, we need to split the data into two subsets, a training set and a test set, and create input data files in the correct format for the classifier library we want to use. We can also use techniques like k-fold cross-validation, where the data is split into k folds and the model is trained on k - 1 folds and validated on the remaining fold, repeating this k times so that each fold is used for validation exactly once.

Train: We need to train the classifier on the training dataset, evaluate it on the test dataset, and measure the estimated accuracy of our model. There are lots of parameters we can tweak that define how our model is built, and we'd like an easy way to evaluate how different parameters affect the accuracy of the model.
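The k-fold procedure mentioned under Load, combined with the accuracy measurement from Train, can be sketched in Python as follows. This is a minimal illustration and not part of ClassifyBot. `train_fn` and `predict_fn` stand in for whatever classifier you plug in. The majority-label model is only a placeholder.

```python
import random
from collections import Counter


def k_fold_indices(n, k, seed=None):
    """Yield (train, test) index lists; every index lands in exactly one test fold."""
    if not 2 <= k <= n:
        raise ValueError("k must be between 2 and the number of items")
    order = list(range(n))
    if seed is not None:
        random.Random(seed).shuffle(order)  # reproducible shuffle
    # Spread the remainder so fold sizes differ by at most one.
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        test = order[start:start + size]
        train = order[:start] + order[start + size:]
        yield train, test
        start += size


def accuracy(gold, predicted):
    """Fraction of predictions that match the gold labels."""
    return sum(g == p for g, p in zip(gold, predicted)) / len(gold)


def cross_validate(items, labels, k, train_fn, predict_fn, seed=None):
    """Train on k - 1 folds, score on the held-out fold; return per-fold accuracy."""
    scores = []
    for train, test in k_fold_indices(len(items), k, seed):
        model = train_fn([items[i] for i in train], [labels[i] for i in train])
        predictions = [predict_fn(model, items[i]) for i in test]
        scores.append(accuracy([labels[i] for i in test], predictions))
    return scores


def train_majority(items, labels):
    """Placeholder 'model': the most common training label."""
    return Counter(labels).most_common(1)[0][0]


def predict_majority(model, item):
    return model
```

The average of the per-fold scores gives the cross-validated accuracy estimate. The spread across folds hints at how stable that estimate is.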

Building

Data

The training data provided by CodeProject looks like this:

<pre lang="Swift">
@objc func handleTap(sender: UITapGestureRecognizer) {
    if let tappedSceneView = sender.view as? ARSCNView {
        let tapLocationInView = sender.location(in: tappedSceneView)
        let planeHitTest = tappedSceneView.hitTest(tapLocationInView,
                                                   types: .existingPlaneUsingExtent)
        if !planeHitTest.isEmpty {
            addFurniture(hitTest: planeHitTest)
        }
    }
}</pre>

<pre lang="Python">
# Import `tensorflow` and `pandas`
import tensorflow as tf
import pandas as pd

COLUMN_NAMES = [
    'SepalLength',
    'SepalWidth',
    'PetalLength',
    'PetalWidth',
    'Species'
]

# Import training dataset
training_dataset = pd.read_csv('iris_training.csv', names=COLUMN_NAMES, header=0)
train_x = training_dataset.iloc[:, 0:4]
train_y = training_dataset.iloc[:, 4]

# Import testing dataset
test_dataset = pd.read_csv('iris_test.csv', names=COLUMN_NAMES, header=0)
test_x = test_dataset.iloc[:, 0:4]
test_y = test_dataset.iloc[:, 4]</pre>

<pre lang="Javascript">
var my_dataset = [
    {
        id: "1",
        text: "Chairman & CEO",
        color: "#673AB7",
        css: "myStyle",
    },
    {
        id: "2",
        text: "Manager",
        color: "#E91E63"
    },
    ...
]</pre>

<pre lang="C#">
public class AppIntents_Droid : IAppIntents
{
    public void HandleWebviewUri(string uri)
    {
        var appUri = Android.Net.Uri.Parse(uri);
        var appIntent = new Intent(Intent.ActionView, appUri);
        Application.Context.StartActivity(appIntent);
    }
}</pre>

Each language sample is in an HTML pre element that has the name of the language as the lang attribute.

Logically, the data items we will work on consist of a single string label or class which represents the source code language name, and one or more features of the source code text sample. We'll model a data item as the LanguageItem class:

public class LanguageItem : Record<string>
{
    public LanguageItem(int lineNo, string languageName, string languageText) :
        base(lineNo, languageName, languageText) {}
}

Since there's no ID attribute in the training data, we'll use the line number where a particular text sample element starts as the ID, which will allow us to map LanguageItems back to the original data. Each LanguageItem derives from Record<string> and will have one or more string features; string is the most general feature type, since it can represent both numeric and non-numeric convertible feature data.
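To make the record shape concrete, here is a small Python mirror of LanguageItem. It produces the JSON layout the extract stage writes in this article: an `_Id`, a null `Id`, and `Item1`/`Item2` pairs for labels and features. The class and function names are hypothetical illustrations, not ClassifyBot types.

```python
import json
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Record:
    """Hypothetical mirror of Record<string>: scored labels plus named features."""
    _Id: int                                   # source line number of the sample
    Labels: List[Tuple[str, float]] = field(default_factory=list)
    Features: List[Tuple[str, str]] = field(default_factory=list)
    Id: Optional[str] = None

    def to_json_obj(self):
        # The extract output stores each pair as {"Item1": ..., "Item2": ...}.
        return {
            "_Id": self._Id,
            "Id": self.Id,
            "Labels": [{"Item1": name, "Item2": score} for name, score in self.Labels],
            "Features": [{"Item1": name, "Item2": value} for name, value in self.Features],
        }


def language_item(line_no, language_name, language_text):
    """One label with score 1.0 and a single TEXT feature, as after extraction."""
    return Record(line_no, [(language_name, 1.0)], [("TEXT", language_text)])


def dump_records(records):
    return json.dumps([r.to_json_obj() for r in records], indent=2)
```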

Extract

The code for the entire language samples data extractor is shown below:

[Verb("langdata-extract", HelpText = "Download and extract language samples data from https://www.codeproject.com/script/Contests/Uploads/1024/LanguageSamples.zip into a common JSON format.")]
public class LanguageSamplesExtractor : WebFileExtractor<LanguageItem, string>
{
    public LanguageSamplesExtractor() : base
        ("https://www.codeproject.com/script/Contests/Uploads/1024/LanguageSamples.zip") {}

    // Hide the -u/--url option: the data file URL is fixed by the constructor above.
    [Option('u', "url", Required = false, Hidden = true)]
    public override string InputFileUrl { get; set; }

    // Parse the HTML data file and turn each <pre> element into a LanguageItem.
    protected override Func<FileExtractor<LanguageItem, string>,
        StreamReader, Dictionary<string, object>, List<LanguageItem>> ReadRecordsFromFileStream
    { get; } = (e, r, options) =>
    {
        HtmlDocument doc = new HtmlDocument();
        doc.Load(r);
        HtmlNodeCollection nodes = doc.DocumentNode.SelectNodes("//pre");
        L.Information("Got {0} language data items from file.", nodes.Count);
        return nodes.Select(n => new LanguageItem(n.Line, n.Attributes["lang"].Value.StripUTF8BOM(),
            n.InnerText.StripUTF8BOM())).ToList();
    };
}

It's relatively simple and short. First, we derive from the base WebFileExtractor class, using our LanguageItem type as the record type and string as the feature type, and pass the URL of the CodeProject data file to the WebFileExtractor constructor. Since we don't ask the user to specify a URL, we override the InputFileUrl property to hide this parameter from the user. We decorate the LanguageSamplesExtractor class with the Verb attribute to indicate the command-line verb used to invoke this stage from the CLI. When you run ClassifyBot from the console, you will see this verb in the help screen along with the verbs for stages defined by other classes.

Finally, we override the ReadRecordsFromFileStream lambda to specify how we extract the data from the data file stream. We use the HTML Agility Pack .NET library to parse the input HTML document and select each pre element containing a language sample, which we then construct as a LanguageItem. Some classifiers are sensitive to the presence of a UTF-8 Byte Order Mark (BOM) in text, so we strip these characters out. You can add any data scrubbing operations your extracted data needs during this extract stage. With this code, we now have a complete ClassifyBot stage we can call from the ClassifyBot CLI.
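For comparison, the same extraction can be sketched with Python's standard `html.parser` module instead of the HTML Agility Pack. This sketch makes two assumptions. First, the code inside each pre element is HTML-escaped, so `&lt;` and similar entities decode to text rather than being parsed as tags. Second, the recorded line number is the 1-based line of the opening tag as reported by `HTMLParser.getpos()`. The class and function names are hypothetical.

```python
from html.parser import HTMLParser

BOM = "\ufeff"


class PreExtractor(HTMLParser):
    """Collect (line number, lang attribute, text) for every <pre> element."""

    def __init__(self):
        super().__init__()  # convert_charrefs=True decodes &lt;, &quot; etc. in text
        self.items = []
        self._current = None

    def handle_starttag(self, tag, attrs):
        if tag == "pre":
            lang = dict(attrs).get("lang") or ""
            line, _ = self.getpos()  # position of the opening tag
            self._current = [line, lang.replace(BOM, ""), []]

    def handle_data(self, data):
        if self._current is not None:
            self._current[2].append(data)

    def handle_endtag(self, tag):
        if tag == "pre" and self._current is not None:
            line, lang, parts = self._current
            # Strip byte order marks, as the C# extractor does.
            self.items.append((line, lang, "".join(parts).replace(BOM, "")))
            self._current = None


def extract_samples(html_text):
    parser = PreExtractor()
    parser.feed(html_text)
    parser.close()
    return parser.items
```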

When the langdata-extract stage is called, the file is downloaded and unzipped, and the data is extracted into a portable JSON format that is stored on disk. The stored JSON is compressed with gzip when the -c option is specified. ClassifyBot assumes any file with the extension .gz is compressed and reads the compressed stream transparently. This JSON file can now be used as input to any of the later stages. The JSON produced by the extract stage looks like this:

[
  ...
  {
    "_Id": 121,
    "Id": null,
    "Labels": [
      {
        "Item1": "C#",
        "Item2": 1.0
      }
    ],
    "Features": [
      {
        "Item1": "TEXT",
        "Item2": "\r\n public class AppIntents_Droid : IAppIntents\r\n {\r\n public void HandleWebviewUri(string uri)\r\n {\r\n var appUri = Android.Net.Uri.Parse(uri);\r\n var appIntent = new Intent(Intent.ActionView, appUri);\r\n Application.Context.StartActivity(appIntent);\r\n }\r\n }"
      }
    ]
  },
  {
    "_Id": 133,
    "Id": null,
    "Labels": [
      {
        "Item1": "Python",
        "Item2": 1.0
      }
    ],
    "Features": [
      {
        "Item1": "TEXT",
        "Item2": "\r\n# Import `tensorflow` and `pandas`\r\nimport tensorflow as tf\r\nimport pandas as pd\r\n\r\nCOLUMN_NAMES = [\r\n 'SepalLength', \r\n 'SepalWidth',\r\n 'PetalLength', \r\n 'PetalWidth', \r\n 'Species'\r\n ]\r\n\r\n# Import training dataset\r\ntraining_dataset = pd.read_csv('iris_training.csv', names=COLUMN_NAMES, header=0)\r\ntrain_x = training_dataset.iloc[:, 0:4]\r\ntrain_y = training_dataset.iloc[:, 4]\r\n\r\n# Import testing dataset\r\ntest_dataset = pd.read_csv('iris_test.csv', names=COLUMN_NAMES, header=0)\r\ntest_x = test_dataset.iloc[:, 0:4]\r\ntest_y = test_dataset.iloc[:, 4]"
      }
    ]
  },
  {
    "_Id": 28,
    "Id": null,
    "Labels": [
      {
        "Item1": "JavaScript",
        "Item2": 1.0
      }
    ],
    "Features": [
      {
        "Item1": "TEXT",
        "Item2": "\r\nvar my_dataset = [\r\n {\r\n id: \"1\",\r\n text: \"Chairman & CEO\",\r\n title: \"Henry Bennett\"\r\n },\r\n {\r\n id: \"2\",\r\n text: \"Manager\",\r\n title: \"Mildred Kim\"\r\n },\r\n {\r\n id: \"3\",\r\n text: \"Technical Director\",\r\n title: \"Jerry Wagner\"\r\n },\r\n { id: \"1-2\", from: \"1\", to: \"2\", type: \"line\" },\r\n { id: \"1-3\", from: \"1\", to: \"3\", type: \"line\" }\r\n];"
      }
    ]
  },
  ...
]

You don't have to write any code to download or unzip the file or to save the extracted data as JSON, as this is already done by the base WebFileExtractor class that our LanguageSamplesExtractor class inherits from. Each language sample initially has one label or class, which is just the language the text is in, and one feature, which is just the language text itself. This data is now ready to be processed by a Transform stage, which will extract and construct additional features.
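Outside ClassifyBot, the download, unzip and save steps that the base class performs might look like the following Python sketch. It is not WebFileExtractor's implementation. The function names are hypothetical. Choosing gzip from the `.gz` extension simply mirrors the convention described for the -c option.

```python
import gzip
import io
import json
import urllib.request
import zipfile


def download(url):
    """Fetch the raw bytes of a remote file."""
    with urllib.request.urlopen(url) as response:
        return response.read()


def read_first_zip_member(zip_bytes):
    """Decode the first file (not directory) in an in-memory .zip archive."""
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        name = next(n for n in archive.namelist() if not n.endswith("/"))
        # 'utf-8-sig' also drops a leading UTF-8 byte order mark if present.
        return archive.read(name).decode("utf-8-sig")


def open_text(path, mode="rt"):
    """Open a UTF-8 text file, gzip-compressed if the name ends in .gz."""
    if str(path).endswith(".gz"):
        return gzip.open(path, mode, encoding="utf-8")
    return open(path, mode, encoding="utf-8")


def save_records(records, path):
    with open_text(path, "wt") as f:
        json.dump(records, f, indent=2)


def load_records(path):
    with open_text(path, "rt") as f:
        return json.load(f)
```

Readers never need to know whether a stage's output was compressed. They pass the path to `load_records`, and the extension picks the right opener.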

Transform

The Transform phase is where we select or engineer the features of our text that will be the input to our classifier. We want to identify characteristics of a language sample that can help identify the language being used, while also removing elements that will not. Having the data laid out in a uniform, repeating structure like our JSON aids the comparison and identification process, and just by eyeballing the data we can make some guesses as to how we should proceed.

The first thing we should do is remove all string literals from the language sample text. These string literals are specific to the program being created, not the language being used, and only serve as noise that will decrease the accuracy of our model.
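Here is one way to do this in Python, shown as a sketch rather than the transformer's actual code. A single regular expression alternates between double- and single-quoted literals, so whichever quote opens first wins and an apostrophe inside a double-quoted string is not mistaken for a literal. Backslash escapes are skipped, and literals may not span lines. It still misfires on lines with two stray apostrophes, such as a comment reading `// it's Bob's`.

```python
import re

# Double- or single-quoted literal on one line; \\. consumes an escaped character.
STRING_LITERAL = re.compile(r'"(?:\\.|[^"\\\n])*"' r"|'(?:\\.|[^'\\\n])*'")


def strip_string_literals(code):
    """Remove string and char literals (quotes included) from source text."""
    return STRING_LITERAL.sub("", code)
```

For example, `strip_string_literals('text: "Chairman & CEO",')` gives `'text: ,'`, which is the kind of residue visible in the transformed JSON.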

The second thing to observe is that many lexical or token features of programming language text are significant and serve as identifying features, which would not be the case when analyzing natural language. Some languages like JavaScript and C++ use a semicolon as a statement terminator and curly braces to delimit code blocks. Other languages like Python eschew such delimiters completely in favor of readability. Many languages in the "C" family share lexical characteristics like the use of keywords such as function and class to declare functions, methods and classes, and the use of "//" for comments. Other languages like Python and Swift have different characteristics, like the keywords def (Python) and let (Swift), the '@' symbol for decorators and attributes, or '#' for comments in Python, which C-family languages usually don't use. Markup languages like XML and HTML rely on elements delimited by angle brackets; hybrid syntaxes like React's JSX mix these kinds of elements with features from JavaScript. But text classifier libraries, which mainly look at word-like terms in the text, don't treat many of these naturally identifying lexical features as significant. Symbols like ':' are treated like punctuation and may be ignored altogether during the tokenization phase that the classifier applies to the text. All these features must therefore be constructed from a given language sample so that the classifier recognizes them as significant.
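These cues can be turned into feature tokens with a handful of line-anchored regular expressions. The following Python sketch uses the same token names as the C# transformer in this article, but the function name and exact patterns are assumptions for illustration. The '//' pattern here matches the comment through to the end of the line. Like any `#.*$` rule, the hash pattern also fires on things such as C# `#region` directives or CSS colour codes.

```python
import re

M = re.MULTILINE
# Checked in this order; each match adds its token once.
PATTERNS = [
    ("SEMICOLON", re.compile(r";\s*$", M)),       # ';' ends a line
    ("CURLY_BRACE", re.compile(r"\{\s*$", M)),    # '{' ends a line
    ("MARKUP", re.compile(r"</\w+>")),            # closing tag like </div>
    ("AT", re.compile(r"^\s*@\w+", M)),           # @decorator / @objc attribute
]
HASH_COMMENT = re.compile(r"#.*$", M)
DOUBLESLASH_COMMENT = re.compile(r"//.*$", M)


def lexical_features(text):
    """Return (space-separated feature tokens, text with comments removed)."""
    tokens = []
    # Record at most one comment style and drop the comment text itself.
    if HASH_COMMENT.search(text):
        tokens.append("HASH_COMMENT")
        text = HASH_COMMENT.sub("", text)
    elif DOUBLESLASH_COMMENT.search(text):
        tokens.append("DOUBLESLASH_COMMENT")
        text = DOUBLESLASH_COMMENT.sub("", text)
    for name, pattern in PATTERNS:
        if pattern.search(text):
            tokens.append(name)
    return " ".join(tokens), text
```

Because `$` in multiline mode matches just before `\n`, the `\s*` in the SEMICOLON and CURLY_BRACE patterns also absorbs the `\r` of Windows line endings.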

We create a class that derives from Transformer<LanguageItem, string> and add the Verb attribute to indicate that this stage will be invoked from the CLI with the langdata-features verb.

[Verb("langdata-features", HelpText = "Select features from language samples data.")]
public class LanguageSamplesSelectFeatures : Transformer<LanguageItem, string>
{
    protected override Func<Transformer<LanguageItem, string>,
        Dictionary<string, object>, LanguageItem, LanguageItem>
        TransformInputToOutput { get; } = (l, options, input) =>
    {
        string text = input.Features[0].Item2.Trim();
        ...
    };

    protected override StageResult Init()
    {
        if (!Success(base.Init(), out StageResult r)) return r;
        // Feature categories produced by this stage.
        FeatureMap.Add(0, "TEXT");
        FeatureMap.Add(1, "LEXICAL");
        FeatureMap.Add(2, "SYNTACTIC");
        return StageResult.SUCCESS;
    }

    // No intermediate files or resources to clean up.
    protected override StageResult Cleanup() => StageResult.SUCCESS;
}

The TransformInputToOutput property holds a lambda that specifies how the text of each language sample is processed. Our language sample features can be grouped into categories like lexical and syntactic, so in Init we add those to the stage's FeatureMap dictionary. This stage has no intermediate files or resources to clean up, so Cleanup simply returns StageResult.SUCCESS. Let's look at our implementation of TransformInputToOutput. First, we remove any string literals using regular expressions:

// Shortest "..." or '...' run on a line; ReplaceStringLiteral (not shown) supplies each replacement.
Regex doubleQuote = new Regex("\\\".*?\\\"", RegexOptions.Compiled);
Regex singleQuote = new Regex("\\\'.*?\\\'", RegexOptions.Compiled);
text = singleQuote.Replace(text, new MatchEvaluator(ReplaceStringLiteral));
text = doubleQuote.Replace(text, new MatchEvaluator(ReplaceStringLiteral));

Then, we use regular expressions to pick out tokens that are identifying for the language being used and add identifiers representing those tokens to the "LEXICAL" feature category:

string lexicalFeature = string.Empty;
// ';' or '{' as the last non-whitespace character on a line
Regex semiColon = new Regex(";\\s*$", RegexOptions.Compiled | RegexOptions.Multiline);
Regex curlyBrace = new Regex("\\{\\s*$", RegexOptions.Compiled | RegexOptions.Multiline);
// A line that starts with an @attribute or @decorator
Regex at = new Regex("^\\s*\\@\\w+?", RegexOptions.Compiled | RegexOptions.Multiline);
// '#' or '//' comments running to the end of the line
Regex hashComment = new Regex("#.*$", RegexOptions.Compiled | RegexOptions.Multiline);
Regex doubleSlashComment = new Regex("//.*$", RegexOptions.Compiled | RegexOptions.Multiline);
// A closing markup tag like </div>
Regex markupElement = new Regex("<\\/\\w+>", RegexOptions.Compiled);

// Record at most one comment style, then replace the comment text so it isn't treated as code.
if (hashComment.IsMatch(text))
{
    lexicalFeature += "HASH_COMMENT ";
    text = hashComment.Replace(text, new MatchEvaluator(ReplaceStringLiteral));
}
else if (doubleSlashComment.IsMatch(text))
{
    lexicalFeature += "DOUBLESLASH_COMMENT ";
    text = doubleSlashComment.Replace(text, new MatchEvaluator(ReplaceStringLiteral));
}
if (semiColon.IsMatch(text))
{
    lexicalFeature += "SEMICOLON ";
}
if (curlyBrace.IsMatch(text))
{
    lexicalFeature += "CURLY_BRACE ";
}
if (markupElement.IsMatch(text))
{
    lexicalFeature += "MARKUP ";
}
if (at.IsMatch(text))
{
    lexicalFeature += "AT ";
}

// Same ID and label as the input, the cleaned text, plus the new LEXICAL feature.
LanguageItem output = new LanguageItem(input._Id.Value, input.Labels[0].Item1, text);
output.Features.Add(("LEXICAL", lexicalFeature.Trim()));
return output;

This runs on each input record and produces an output record that is saved in the output file. We now have a first implementation of our feature-selection stage that we can run from the CLI.

We write the transformed data to a new JSON file called LanguageSamplesWithFeatures.json. The data now looks like this:

[
  {
    "_Id": 1,
    "Id": null,
    "Labels": [
      {
        "Item1": "XML",
        "Item2": 1.0
      }
    ],
    "Features": [
      {
        "Item1": "TEXT",
        "Item2": "<?xml version=?>\r\n<DevelopmentStorage xmlns:xsd= xmlns:xsi= version=>\r\n <SQLInstance>(localdb)\\v11.0</SQLInstance>\r\n <PageBlobRoot>C:\\Users\\Carl\\AppData\\Local\\DevelopmentStorage\\PageBlobRoot</PageBlobRoot>\r\n <BlockBlobRoot>C:\\Users\\Carl\\AppData\\Local\\DevelopmentStorage\\BlockBlobRoot</BlockBlobRoot>\r\n <LogPath>C:\\Users\\Carl\\AppData\\Local\\DevelopmentStorage\\Logs</LogPath>\r\n <LoggingEnabled>false</LoggingEnabled>\r\n</DevelopmentStorage>"
      },
      {
        "Item1": "LEXICAL",
        "Item2": "MARKUP"
      }
    ]
  },
  {
    "_Id": 13,
    "Id": null,
    "Labels": [
      {
        "Item1": "Swift",
        "Item2": 1.0
      }
    ],
    "Features": [
      {
        "Item1": "TEXT",
        "Item2": "@objc func handleTap(sender: UITapGestureRecognizer) {\r\n if let tappedSceneView = sender.view as? ARSCNView {\r\n let tapLocationInView = sender.location(in: tappedSceneView)\r\n let planeHitTest = tappedSceneView.hitTest(tapLocationInView,\r\n types: .existingPlaneUsingExtent)\r\n if !planeHitTest.isEmpty {\r\n addFurniture(hitTest: planeHitTest)\r\n }\r\n }\r\n}"
      },
      {
        "Item1": "LEXICAL",
        "Item2": "CURLY_BRACE AT"
      }
    ]
  },
  {
    "_Id": 28,
    "Id": null,
    "Labels": [
      {
        "Item1": "JavaScript",
        "Item2": 1.0
      }
    ],
    "Features": [
      {
        "Item1": "TEXT",
        "Item2": "var my_dataset = [\r\n {\r\n id: ,\r\n text: ,\r\n title: \r\n },\r\n {\r\n id: ,\r\n text: ,\r\n title: \r\n },\r\n {\r\n id: ,\r\n text: ,\r\n title: \r\n },\r\n { id: , from: , to: , type: },\r\n { id: , from: , to: , type: }\r\n];"
      },
      {
        "Item1": "LEXICAL",
        "Item2": "SEMICOLON CURLY_BRACE"
      }
    ]
  },
  ...
]

The LEXICAL feature of each record now lists the identifying lexical tokens found in the language sample, and string literals have been stripped from the TEXT feature. The JSON format of the transformed output data remains the same as that of the input data. Every ClassifyBot transformer produces the same JSON format, so transformation stages can be chained, with the output file of one serving as the input to another.
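Because every transformer reads and writes the same JSON shape, chaining stages reduces to composing functions over records. Here is a minimal Python sketch of that idea. The helper names are hypothetical, and feature values are kept as strings like the rest of the data.

```python
import json


def add_feature(name, compute):
    """Build a record transform that appends a feature computed from TEXT."""
    def transform(record):
        text = next(f["Item2"] for f in record["Features"] if f["Item1"] == "TEXT")
        features = record["Features"] + [{"Item1": name, "Item2": compute(text)}]
        return dict(record, Features=features)  # copy; the input is not modified
    return transform


def run_pipeline(records, *transforms):
    """Apply transforms in order; each one sees the previous one's output."""
    for transform in transforms:
        records = [transform(r) for r in records]
    return records


def run_file_stage(in_path, out_path, *transforms):
    """File-to-file stage: same JSON shape in and out, so stages chain by path."""
    with open(in_path, encoding="utf-8") as f:
        records = json.load(f)
    with open(out_path, "w", encoding="utf-8") as f:
        json.dump(run_pipeline(records, *transforms), f, indent=2)
```

Running `run_file_stage("a.json", "b.json", ...)` and then `run_file_stage("b.json", "c.json", ...)` mirrors chaining two CLI stages through their output files.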

There are lots more lexical features we could add, plus some syntactic features like variable assignments using the keyword let, e.g. "let xx = yyy". But let's first take a look at the performance of our classifier using the features we have now.

Load

We will use the Stanford Classifier, an ML library written in Java that specializes in text classification. We must do a final transformation of the data from the portable JSON we have been working with into a format specific to the classifier we will be using, and split the data into two datasets. Many classifiers, including the Stanford Classifier, accept CSV-style delimited text files, so we can use the LoadToCsvFile base class for our Load stage. Here is the code for our Load stage:

[Verb("langdata-load", HelpText = "Load language samples data into training and test TSV data files.")]
public class LanguageSamplesLoader : LoadToCsvFile<LanguageItem, string>
{
    #region Constructors
    // Use a tab as the field delimiter: the Stanford Classifier reads TSV files.
    public LanguageSamplesLoader() : base("\t") {}
    #endregion
}

The code follows the pattern of the other stages. The base LoadToCsvFile class and its methods take care of the details of reading the input JSON and writing the output CSV files. We only need to call the base constructor with a tab character as the delimiter, since this is the format the Stanford Classifier accepts, and use the Verb attribute to expose this class as a stage callable from the CLI with the langdata-load verb.
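The Stanford Classifier's ColumnDataClassifier reads one example per line, with tab-separated columns. A tab or line break inside the source text would therefore shift or split columns. Here is a minimal Python sketch that turns the JSON records into rows with the label, text and lexical-features layout. It assumes that collapsing all whitespace in each field to single spaces is acceptable. The helper names are hypothetical, and LoadToCsvFile's own handling of such characters is not shown in this article.

```python
import re

WHITESPACE = re.compile(r"\s+")


def flatten(value):
    """Collapse tabs, CR/LF and runs of spaces so a field fits on one TSV line."""
    return WHITESPACE.sub(" ", value).strip()


def to_tsv_row(record):
    """label <TAB> TEXT feature <TAB> LEXICAL feature"""
    label = record["Labels"][0]["Item1"]
    feats = {f["Item1"]: f["Item2"] for f in record["Features"]}
    columns = [label, feats.get("TEXT", ""), feats.get("LEXICAL", "")]
    return "\t".join(flatten(c) for c in columns)


def to_tsv(records):
    return "".join(to_tsv_row(r) + "\n" for r in records)
```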

The load stage splits the input dataset into a train and a test dataset. By default, 80% of the available data goes into the train dataset, but you can specify the split using the -s option. Also by default, only deterministic splitting is done, meaning the same records are assigned to each of the two subsets on every invocation of the load stage. Random assignment to the train and test datasets, and any other load tasks you need, can be implemented by overriding the Load method of the base LoadToCsvFile class.
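The deterministic default and a seeded random alternative can both be sketched in Python. This is an illustration under assumptions, not the LoadToCsvFile implementation. `train_fraction` plays the role of the -s option, whose actual value format may differ. A fixed `seed` keeps a random split reproducible across runs.

```python
import random


def split_records(records, train_fraction=0.8, seed=None):
    """Split records into (train, test).

    seed=None keeps the input order, so every run assigns the same records to
    each subset; an integer seed shuffles first, reproducibly.
    """
    if not 0.0 < train_fraction < 1.0:
        raise ValueError("train_fraction must be between 0 and 1")
    items = list(records)
    if seed is not None:
        random.Random(seed).shuffle(items)
    cut = int(len(items) * train_fraction)
    return items[:cut], items[cut:]
```

A seeded random split keeps the reproducibility of the deterministic default while avoiding any ordering bias in the source file, such as samples grouped by language.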

Train

We now have a dataset with the right features and format that we can feed into our classifier. Our data for the classifier is in TSV format that looks like this:

C++

C++ namespace my { template<class BidirIt, class T>

BidirIt unstable_remove(BidirIt first, BidirIt last, const T& value)

{ while (true) {

C++

PYTHON class Meta(type): @property def RO(self):

return 13class DefinitionSet(Meta(str(), (), {})):

greetings = myNameFormat = durationSeconds = 3.5

color = { : 0.7, : 400 } @property def RO(self):

return 14 def __init__(self): self.greetings =

self.myNameFormat = self.durationSeconds = 3.6

self.color = { : 0.8, : 410 }instance = DefinitionSet() HASH_COMMENT AT 9146

PYTHON class DefinitionSet: def __init__(self):

self.greetings = self.myNameFormat = self.durationSeconds = 3.5

self.color = { : 0.7, : 400 }definitionSet = DefinitionSet()print (definitionSet.durationSeconds)

HASH_COMMENT 9171

XML <MediaPlayerElement Width= Height=> <i:Interaction.Behaviors>

<behaviors:SetMediaSourceBehavior SourceFile= />

<behaviors:InjectMediaPlayerBehavior MediaPlayerInjector=/>

</i:Interaction.Behaviors></MediaPlayerElement> MARKUP 9484

C# public class MediaPlayerViewModel : ViewModel{

private readonly IMediaPlayerAdapter mediaPlayerAdapter;

public MediaPlayerViewModel(IMediaPlayerAdapter mediaPlayerAdapter)

{ this.mediaPlayerAdapter = mediaPlayerAdapter; }

public IMediaPlayerAdapter MediaPlayerAdapter {

get { return mediaPlayerAdapter; } }} SEMICOLON CURLY_BRACE 9493

C#

C# public class Person_Concretization : NoClass, IPerson, NoInterface

{ public static Core TheCore { get; set; } public Person_Concretization () { }

public string FirstName { get; set; } public string LastName

{ get; set; } public int Age { get; set; }

public string Profession { get; set; } }

HASH_COMMENT SEMICOLON CURLY_BRACE 9530

REACT class ListBox extends React.Component { render()

{ return ( <div className=>

<select onChange={this.OnChange.bind(this)} ref=>

<option value= disabled selected hidden>{this.props.placeholder}</option>

{this.props.Items.map((item, i) =>

<ListBoxItem Item={{ : item[this.textPropertyName],

: item[this.valuePropertyName] }} />)}

</select>

</div> ); } }

SEMICOLON CURLY_BRACE MARKUP 9668

REACT class ListBoxItem extends React.Component { render()

{ return (

<option key={this.props.Item.Value}

value={this.props.Item.Value}>{this.props.Item.Text}</option>

); } } SEMICOLON CURLY_BRACE MARKUP 9687

XML <ListBox Items={this.state.carMakes} valuePropertyName=

selectedValue={this.state.selectedMake} placeholder=

textPropertyName= OnSelect={this.OnCarMakeSelect} />

<ListBox Items={this.state.carModels} selectedValue={this.state.selectedModel}

placeholder= OnSelect={this.OnCarModelSelect} /> 9700

JAVASCRIPT OnCarMakeSelect(value, text) { /

this.setState({ selectedMake: value, carModels: GetCarModels(value), selectedModel: -1 }); }

OnCarModelSelect(value, text) { this.setState({ selectedModel: value }); }

SEMICOLON CURLY_BRACE 9714

The first column is the class or label of the text, the second column is the language sample text itself, and the third column contains the lexical features we extracted from the text. Our implementation of the train stage uses the StanfordNLPClassifier class, which provides an interface to the command-line program of the Java-based Stanford Classifier:

[Verb("langdata-train", HelpText = "Train a classifier for the LanguageSamples dataset using the Stanford NLP classifier.")]
public class LanguageDetectorClassifier : StanfordNLPClassifier<LanguageItem, string>
{
    #region Overridden members
    public override Dictionary<string, object> ClassifierProperties { get; } = new Dictionary<string, object>()
    {
        // Column 1 (language sample text): split into words on whitespace.
        {"1.useSplitWords", true },
        {"1.splitWordsRegexp", "\\\\s+" },
        // Column 2 (lexical features): split on whitespace and also use all pairs of split words.
        {"2.useSplitWords", true },
        {"2.splitWordsRegexp", "\\\\s+" },
        {"2.useAllSplitWordPairs", true },
    };
    #endregion
}
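The Stanford Classifier (ColumnDataClassifier) can read its options from a Java properties file made of key=value lines. The sketch below shows one way a ClassifierProperties-style dictionary could be written out in that form; StanfordPropWriter and ToPropLines are hypothetical names, and the real StanfordNLPClassifier base class may pass these options to the Java program differently.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;

// Hypothetical illustration: turn a dictionary of classifier options into the
// key=value lines of a Java properties file.
public static class StanfordPropWriter
{
    public static string ToPropLines(IDictionary<string, object> props)
    {
        // Sort by key so the output is stable regardless of dictionary order.
        return string.Join("\n", props
            .OrderBy(p => p.Key, StringComparer.Ordinal)
            .Select(p => p.Key + "=" + FormatValue(p.Value)));
    }

    static string FormatValue(object value)
    {
        switch (value)
        {
            // C# prints booleans as "True"/"False"; emit lowercase explicitly.
            case bool b: return b ? "true" : "false";
            // Invariant culture so numbers never use a locale-specific format.
            case IFormattable f: return f.ToString(null, CultureInfo.InvariantCulture);
            default: return value?.ToString() ?? string.Empty;
        }
    }
}
```

Assuming the file is loaded with java.util.Properties semantics, where a backslash is an escape character, the C# literal "\\\\s+" is written to the file as \\s+ and read back by Java as the regular expression \s+. That may explain why the property values above use doubled backslashes.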

ClassifierProperties contains options that are specific to the classifier being used. The Stanford Classifier takes a set of column-specific options prefixed with the zero-based column number. Here we are saying that column 1 (the 2nd column) and column 2 (the 3rd column) of our datasets should be split into words on whitespace. Additionally, column 2, which contains the list of lexical features, should produce features from all pairs of split words in addition to single words, because pairs of lexical features like "SEMICOLON CURLY_BRACE" are significant in identifying the language a text is written in. There are many more classifier properties and parameters we can experiment with, but let's use the ones we have for now and do a classification run:

Tune

From the initial set of results, we see that the classifier correctly assigned the language label in at least 50% of the test data items. However, there are many misidentifications: both false positives and false negatives. What additional lexical or syntactic features could help?
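To make the terms "false positive" and "false negative" concrete, the hypothetical C# helper below computes overall accuracy and per-class precision, recall and F1 from (gold, predicted) label pairs of a test run. ClassificationReport is not part of ClassifyBot or the Stanford library; it only shows how these numbers relate to the misidentifications.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: metrics over (gold, predicted) label pairs.
public static class ClassificationReport
{
    // Fraction of items whose predicted label equals the gold label.
    public static double Accuracy(IReadOnlyList<(string Gold, string Predicted)> results) =>
        results.Count == 0 ? 0.0 : results.Count(r => r.Gold == r.Predicted) / (double)results.Count;

    public static (double Precision, double Recall, double F1) ForClass(
        IReadOnlyList<(string Gold, string Predicted)> results, string label)
    {
        int tp = results.Count(r => r.Gold == label && r.Predicted == label);
        // False positive: predicted as this label but actually another language.
        int fp = results.Count(r => r.Gold != label && r.Predicted == label);
        // False negative: actually this label but predicted as another language.
        int fn = results.Count(r => r.Gold == label && r.Predicted != label);
        double precision = tp + fp == 0 ? 0.0 : tp / (double)(tp + fp);
        double recall = tp + fn == 0 ? 0.0 : tp / (double)(tp + fn);
        double f1 = precision + recall == 0 ? 0.0 : 2 * precision * recall / (precision + recall);
        return (precision, recall, f1);
    }
}
```

Low precision for a class points to false positives (other languages pulled into it), while low recall points to false negatives (samples of that language assigned elsewhere); both suggest which features are missing.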

Some combinations of tokens in a particular order are significant in identifying languages. For instance, Python relies on a single colon and indentation to define a method body:

def RO(self):

C# allows you to define property accessors like:

X {get; set; }

Languages like C# and C++ use access modifiers on class and member declarations; in C# they directly prefix the declaration:

public class X

We can also distinguish languages by how they declare class inheritance. React, for instance, uses the following syntax:

class X extends Y

while C# uses syntax like:

class X : Y

Python's syntax is:

class X(Y)

Some languages utilize the let keyword for variable declaration and definition:

let x = 500

These features can be classified as "SYNTACTIC" since they relate to the rules a language uses to parse the tokens produced by the lexing stage. We can again use regular expressions to detect these features in the text, and we'll add feature identifiers to the "SYNTACTIC" feature category. We'll add the following code to our features stage:

// text holds the language sample currently being processed.
string syntacticFeature = string.Empty;
Regex varDecl = new Regex("\\s*var\\s+\\S+\\s+\\=\\s\\S+",
    RegexOptions.Compiled | RegexOptions.Multiline);
Regex letDef = new Regex("\\s*let\\s+\\w+\\s+\\=\\s\\w+",
    RegexOptions.Compiled | RegexOptions.Multiline);
Regex defBlock = new Regex("^\\s*(def|for|try|while)\\s+\\S+\\s*\\:\\s*$",
    RegexOptions.Compiled | RegexOptions.Multiline);
// Optional whitespace so that both "{get;" and "{ get;" are matched.
Regex propertyAccessor = new Regex("\\w+\\s*\\{\\s*get\\s*;", RegexOptions.Compiled);
Regex accessModifier = new Regex("^\\s*(public|private|protected|internal|friend)\\s+\\w+?",
    RegexOptions.Compiled | RegexOptions.Multiline);
// No backslash before "extends": in .NET regular expressions \e is the ESC character.
Regex classExtendsDecl = new Regex("^\\s*class\\s+\\S+\\s+extends\\s+\\w+",
    RegexOptions.Compiled | RegexOptions.Multiline);
Regex classColonDecl = new Regex("\\s*class\\s+\\w+\\s*\\:\\s*\\w+",
    RegexOptions.Compiled | RegexOptions.Multiline);
Regex classBracketsDecl = new Regex("^\\s*class\\s+\\w+\\s*\\(\\w+\\)",
    RegexOptions.Compiled | RegexOptions.Multiline);
Regex fromImportDecl = new Regex("^\\s*from\\s+\\S+\\s+import\\s+\\w+?",
    RegexOptions.Compiled | RegexOptions.Multiline);
Regex importAsDecl = new Regex("^\\s*import\\s+\\S+\\s+as\\s+\\w+?",
    RegexOptions.Compiled | RegexOptions.Multiline);
// Optional whitespace before "=" so that "x = new Foo" is matched as well as "x= new Foo".
Regex newKeywordDecl = new Regex("\\w+\\s*\\=\\s*new\\s+\\w+",
    RegexOptions.Compiled);
Regex usingKeywordDecl = new Regex("^\\s*using\\s+\\w+?",
    RegexOptions.Compiled | RegexOptions.Multiline);

if (varDecl.IsMatch(text))
{
    syntacticFeature += "VAR_DECL" + " ";
}
if (letDef.IsMatch(text))
{
    syntacticFeature += "LET_DEF" + " ";
}
if (defBlock.IsMatch(text))
{
    syntacticFeature += "DEF_BLOCK" + " ";
}
if (accessModifier.IsMatch(text))
{
    syntacticFeature += "ACCESS_MODIFIER" + " ";
}
if (propertyAccessor.IsMatch(text))
{
    syntacticFeature += "PROP_DECL" + " ";
}
if (classExtendsDecl.IsMatch(text))
{
    syntacticFeature += "CLASS_EXTENDS_DECL" + " ";
}
if (classColonDecl.IsMatch(text))
{
    syntacticFeature += "CLASS_COLON_DECL" + " ";
}
if (classBracketsDecl.IsMatch(text))
{
    syntacticFeature += "CLASS_BRACKETS_DECL" + " ";
}
if (fromImportDecl.IsMatch(text))
{
    syntacticFeature += "FROM_IMPORT_DECL" + " ";
}
if (importAsDecl.IsMatch(text))
{
    syntacticFeature += "IMPORT_AS_DECL" + " ";
}
if (newKeywordDecl.IsMatch(text))
{
    syntacticFeature += "NEW_KEYWORD_DECL" + " ";
}
if (usingKeywordDecl.IsMatch(text))
{
    syntacticFeature += "USING_KEYWORD_DECL" + " ";
}
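The chain of if statements can also be written as an ordered table of feature names and patterns. The following is a sketch of that alternative; SyntacticFeatures and Extract are hypothetical names. The patterns mirror the ones in the article, written as C# verbatim strings, except that they drop the backslash before extends (in .NET regular expressions \e is the ESC character) and allow optional whitespace around "{ get;" and before "= new".

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.RegularExpressions;

// Sketch of a table-driven syntactic feature extractor.
public static class SyntacticFeatures
{
    const RegexOptions ML = RegexOptions.Compiled | RegexOptions.Multiline;

    // Ordered so feature identifiers always appear in the same sequence.
    static readonly (string Name, Regex Pattern)[] Rules =
    {
        ("VAR_DECL", new Regex(@"\s*var\s+\S+\s+=\s\S+", ML)),
        ("LET_DEF", new Regex(@"\s*let\s+\w+\s+=\s\w+", ML)),
        ("DEF_BLOCK", new Regex(@"^\s*(def|for|try|while)\s+\S+\s*:\s*$", ML)),
        ("ACCESS_MODIFIER", new Regex(@"^\s*(public|private|protected|internal|friend)\s+\w+?", ML)),
        ("PROP_DECL", new Regex(@"\w+\s*\{\s*get\s*;", RegexOptions.Compiled)),
        ("CLASS_EXTENDS_DECL", new Regex(@"^\s*class\s+\S+\s+extends\s+\w+", ML)),
        ("CLASS_COLON_DECL", new Regex(@"\s*class\s+\w+\s*:\s*\w+", ML)),
        ("CLASS_BRACKETS_DECL", new Regex(@"^\s*class\s+\w+\s*\(\w+\)", ML)),
        ("FROM_IMPORT_DECL", new Regex(@"^\s*from\s+\S+\s+import\s+\w+?", ML)),
        ("IMPORT_AS_DECL", new Regex(@"^\s*import\s+\S+\s+as\s+\w+?", ML)),
        ("NEW_KEYWORD_DECL", new Regex(@"\w+\s*=\s*new\s+\w+", RegexOptions.Compiled)),
        ("USING_KEYWORD_DECL", new Regex(@"^\s*using\s+\w+?", ML)),
    };

    // Returns the space-separated identifiers of every rule that matches.
    public static string Extract(string text) =>
        string.Join(" ", Rules.Where(r => r.Pattern.IsMatch(text)).Select(r => r.Name));
}
```

Keeping the rules in one static, ordered table also means each compiled regex is built once rather than on every call, and adding a new syntactic feature becomes a one-line change.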

We'll also add an option that allows us to do the feature selection with or without the syntax features:

[Option("with-syntax", HelpText = "Extract syntax features from language sample.", Required = false)]
public bool WithSyntaxFeatures { get; set; }

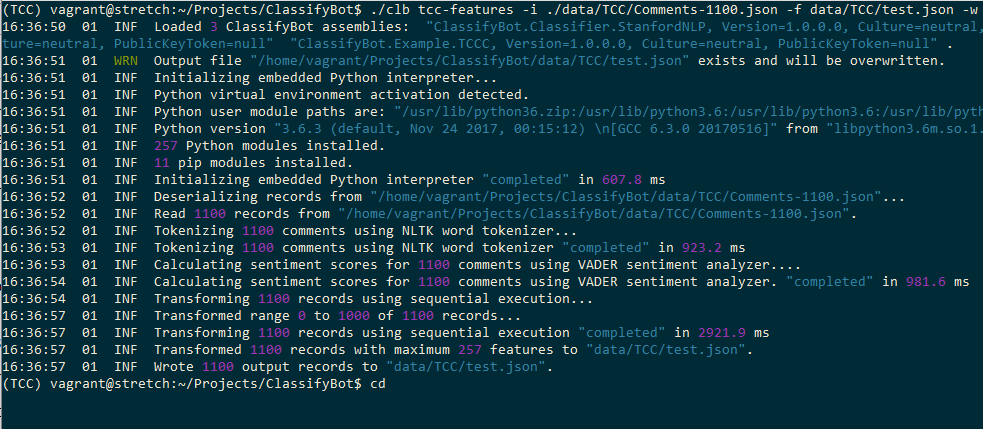

We can now invoke our transform stage with the new code and options:

We'll also add a parameter to our classification stage to enable the k-fold cross-validation feature of the Stanford Classifier:

[Option("with-kcross", HelpText = "Use k-fold cross-validation.", Required = false)]
public bool WithKFoldCrossValidation { get; set; }

and override the Init() method of the classifier to pull in this parameter when specified:

protected override StageResult Init()
{
    if (!Success(base.Init(), out StageResult r)) return r;
    if (WithKFoldCrossValidation)
    {
        ClassifierProperties.Add("crossValidationFolds", 10);
        Info("Using 10-fold cross validation");
    }
    return StageResult.SUCCESS;
}

We set the number of folds k to 10. We could also have added an integer parameter to let the user choose k, but 10 is a good default for now. Now we can run our load stage with the additional syntactic features and run the train stage again using 10-fold cross-validation:

The classifier computes results for each fold, allowing you to compare how the classification model performed on different subsets of the data.
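With crossValidationFolds set, the Stanford Classifier handles the folds itself. Purely to illustrate what 10-fold cross-validation means, the hypothetical helper below (KFold.Split is not part of ClassifyBot or the Stanford library) divides item indexes into k folds; each fold serves once as the test set while the remaining k - 1 folds form the training set. Assigning item i to fold i % k is a simplifying assumption; real implementations may shuffle first or use contiguous blocks.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical illustration of k-fold partitioning over item indexes.
public static class KFold
{
    // Yields k (train, test) index pairs. Item i goes to fold i % k, so every
    // item appears in exactly one test fold and in the training data of the
    // other k - 1 folds. Validation runs when enumeration starts.
    public static IEnumerable<(int[] Train, int[] Test)> Split(int count, int k)
    {
        if (k < 2 || k > count)
            throw new ArgumentOutOfRangeException(nameof(k), "k must be between 2 and the item count.");
        for (int fold = 0; fold < k; fold++)
        {
            int[] test = Enumerable.Range(0, count).Where(i => i % k == fold).ToArray();
            int[] train = Enumerable.Range(0, count).Where(i => i % k != fold).ToArray();
            yield return (train, test);
        }
    }
}
```

Because every item is tested exactly once, averaging the per-fold scores gives an estimate that depends less on one particular train/test split than a single 80/20 run does.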

We see that even with the syntactic features, many language classes remain problematic, averaging only 65% accuracy. We will need further testing and additional syntactic features that distinguish these languages before the classifier can perform better. On a positive note, detection of markup languages and of languages like Python and React appears good.

Points of Interest

If you are accustomed to Python, you might feel that ClassifyBot is overkill and needlessly complicated. After all, downloading a file in Python or with a shell script is a one-liner, and you can manipulate and transform data right from the Python console using the data manipulation capabilities of libraries like NumPy. However, you should consider the additional code needed to:

- Create a CLI program that can accept different parameters for the data manipulation

- Save the results of each text processing stage to a common format

- Implement logging, timing, and other common operations across your pipeline

- Develop an OOP model that facilitates reuse of your code by other developers or in other projects

Once you move beyond the single-user, simple-experiment phase of an ML project, the benefits of using a library like ClassifyBot should become apparent. Future articles will look at the evolving features of ClassifyBot, including automatically creating documentation and diagrams for the pipeline stages and logic.

History

- First version submitted to CodeProject