Introduction

Some months ago, I posted on this site an article titled "A complete anti-theft homemade surveillance system". As the title indicates, it describes a home security application that can use one or more cameras, intruder detectors to trigger the alarm, communication devices to send alarm notifications, and storage systems to access the photos taken by the cameras at the moment of the alarm.

The system is extensible, so that you can easily write your own drivers for each of these protocols and add them to the application almost without effort.

The Microsoft Kinect sensor is especially appropriate for this application. It provides a color camera and an infrared sensor, which can be used as surveillance cameras, along with a human body detection system and a depth sensor, which allow the presence detection protocol that triggers the alarm to be implemented using two different approaches.

There are different versions of the sensor, along with different versions of the Kinect SDK. The code provided with this article uses version 2.0 of the SDK, which works with the Kinect sensor for Xbox One but not with the Xbox 360 version (at least in my case). If you want or need to use another SDK version, you will have to modify one of the classes of the project to adapt it to that version.

You can download the source code of the KinectProtocol project, or you can download the necessary executable files only, and unzip the content of the archive into the path where the ThiefWatcher application is installed. The ThiefWatcher application can be downloaded from the article referenced above.

To compile the KinectProtocol project, you have to add a reference to the WatcherCommons project in the ThiefWatcher main solution.

The code is written in C# using Visual Studio 2015.

Installing the Protocols

Once the Microsoft.Kinect.dll and KinectProtocol.dll files and the es directory, which contains the Spanish resources, are copied into the ThiefWatcher directory, you only have to use the Install Protocol option in the File menu to select the file KinectProtocol.dll, and the KinectCamera and KinectTrigger protocols will be added to the configuration files.

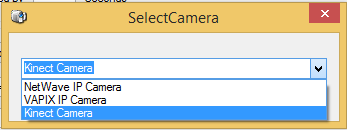

As the sensor can provide infrared and color images at once, you can add one camera of each type with a single device, so that you can take images both in the light and in the dark. To configure a Kinect camera, use the New Camera option in the File menu, and select Kinect Camera in the drop-down list.

Leave all the fields in the Camera Access dialog box blank, as none of them are needed in this case.

In Camera Settings, you only have two options: color or infrared images. Select one of them and close the dialog box.

The last step is to provide a name for the camera and to press the save button to store it in the configuration files.

You can test the camera by using the play button in this same window.

The trigger protocol is configured in the control panel.

In the drop-down list, select Kinect Trigger as the trigger protocol. Then, you have to provide a connection string to set up the operation mode and settings.

You have two options in this case. Using body detection is perfect if you have pets at home, as only the presence of human beings will be detected. The connection string in this case is simply source=body.

On the other hand, you can also use the depth sensor to detect a wide range of changes in the environment. With this option, Kinect provides, for each pixel of the image, its distance to the sensor in millimetres. To detect changes, the algorithm calculates the difference between two consecutive frames, subtracting each pixel of one frame from the corresponding pixel of the other. If the difference is greater than some threshold, the pixel is counted as changed. When the count of changed pixels exceeds a given percentage of the total pixels of the image, the trigger is fired.
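The frame comparison described above can be sketched roughly as follows. This is a minimal illustration, not the code of the KinectProtocol project: the DepthDiff class, the HasChanged method and its parameter names are hypothetical, and taking the absolute value of the difference is an assumption of this sketch.

```csharp
using System;

public static class DepthDiff
{
    // Hypothetical helper: returns true when the share of pixels whose depth
    // changed by more than thresholdMm exceeds sensitivityPercent of the frame.
    public static bool HasChanged(ushort[] previous, ushort[] current,
                                  int thresholdMm, double sensitivityPercent)
    {
        // Nothing comparable yet (for instance, on the first frame).
        if (previous == null || current == null ||
            previous.Length != current.Length || current.Length == 0)
        {
            return false;
        }

        int changed = 0;
        for (int i = 0; i < current.Length; i++)
        {
            // ushort operands are promoted to int, so the subtraction cannot wrap.
            // Using the absolute value is an assumption of this sketch.
            if (Math.Abs(current[i] - previous[i]) > thresholdMm)
            {
                changed++;
            }
        }

        // Compare the percentage of changed pixels with the sensitivity.
        return changed * 100.0 / current.Length > sensitivityPercent;
    }
}
```

With a threshold of 100 and a sensitivity of 15, a frame in which 20% of the pixels moved by 200 mm would fire the trigger, while one in which only 10% moved would not.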

In the connection string, you have to indicate source=depth, the threshold (thres parameter) and the percentage of differences (sens parameter), for instance: source=depth;thres=100;sens=15.
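As an illustration of the format, a connection string of this kind can be split into key/value pairs with a few lines of code. The TriggerSettings class and its Parse method are hypothetical names used only for this sketch; the actual parsing done by the trigger protocol may differ.

```csharp
using System;
using System.Collections.Generic;

public static class TriggerSettings
{
    // Hypothetical helper: splits "key=value;key=value" into a dictionary.
    public static Dictionary<string, string> Parse(string connectionString)
    {
        var settings = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
        string[] pairs = connectionString.Split(
            new[] { ';' }, StringSplitOptions.RemoveEmptyEntries);

        foreach (string pair in pairs)
        {
            // Split on the first '=' only, so values may contain '='.
            string[] parts = pair.Split(new[] { '=' }, 2);
            if (parts.Length == 2)
            {
                settings[parts[0].Trim()] = parts[1].Trim();
            }
        }
        return settings;
    }
}
```

For example, TriggerSettings.Parse("source=depth;thres=100;sens=15") yields the entries source, thres and sens, whose numeric values can then be converted with int.Parse.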

Using the Code

The implementation of the camera and trigger protocol is explained in the main article about ThiefWatcher application. So let's focus on the Sensor static class, which encapsulates all the code necessary to interact with the Kinect Sensor and which is the only one you have to modify to adapt the code to other Kinect SDK versions.

The SensorCaps enum is used to indicate the type of inputs that must be processed, in order to optimize the work:

    [Flags]
    public enum SensorCaps
    {
        None = 0,
        Color = 1,
        Infrared = 2,
        Depth = 4,
        Body = 8
    }

In the Sensor.Caps property, you can indicate the appropriate combination of these values.
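Because the enum is marked with [Flags], values can be combined with the | operator and tested with the & operator. The following self-contained sketch repeats the enum so that it compiles on its own; the CapsDemo class and the Needs helper are hypothetical and are not part of the project.

```csharp
using System;

[Flags]
public enum SensorCaps { None = 0, Color = 1, Infrared = 2, Depth = 4, Body = 8 }

public static class CapsDemo
{
    // Hypothetical helper: true when every bit of 'flag' is set in 'caps'.
    public static bool Needs(SensorCaps caps, SensorCaps flag)
    {
        return (caps & flag) == flag;
    }

    public static void Main()
    {
        // A color camera plus a depth-based trigger.
        SensorCaps caps = SensorCaps.Color | SensorCaps.Depth;

        Console.WriteLine(caps);                             // Color, Depth
        Console.WriteLine(Needs(caps, SensorCaps.Depth));    // True
        Console.WriteLine(Needs(caps, SensorCaps.Infrared)); // False
    }
}
```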

Color images are exposed through two properties: Sensor.ColorFrameSize, which is read-only and returns the size of the image as a Size struct, and Sensor.ColorFrame, which returns a Bitmap object with the last image captured.

Infrared images are handled with two analogous properties: Sensor.InfraredFrameSize and Sensor.InfraredFrame.

Body detection is exposed through the Sensor.BodyTracked Boolean property, and the depth sensor data is obtained, in the form of a ushort array, from the Sensor.DepthFrame property.

The sensor is put into operation using the OpenSensor method, which receives a parameter of SensorCaps type. As this method is called by each camera and by the trigger protocol, and the sensor only needs to be started once, we keep a count of the instances that have called the method in the static field _instances:

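    public static void OpenSensor(SensorCaps caps)
    {
        // Accumulate the capabilities requested by every caller.
        Caps |= caps;
        if (_sensor == null)
        {
            // First caller: start the sensor and create the frame reader.
            _instances = 1;
            _sensor = KinectSensor.GetDefault();
            if (!_sensor.IsOpen)
            {
                _sensor.Open();
            }
            Initialize();
            // All four sources are always read, whatever the value of Caps.
            _reader = _sensor.OpenMultiSourceFrameReader(FrameSourceTypes.Color
                | FrameSourceTypes.Depth
                | FrameSourceTypes.Infrared
                | FrameSourceTypes.Body);
            _reader.MultiSourceFrameArrived +=
                new EventHandler<MultiSourceFrameArrivedEventArgs>(OnNewFrame);
        }
        else
        {
            // Sensor already running: just count the new user.
            _instances++;
        }
    }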

The static field _sensor, of KinectSensor type, will be null the first time this method is called. In this case, we also create a MultiSourceFrameReader, stored in the _reader field, which allows us to configure several types of data to be read at the same time. Here we always read color and infrared images, depth data and the list of detected bodies.

They are read through the MultiSourceFrameArrived event, which is raised every time a new frame is available.

The method to stop an instance opened with OpenSensor is CloseSensor, which decrements the count of instances. When the last instance is closed, it returns the system to its initial state and releases the resources:

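    public static void CloseSensor()
    {
        _instances--;
        if (_instances <= 0)
        {
            // Last user gone: release the reader and the sensor,
            // even if the sensor is no longer open.
            if (_reader != null)
            {
                _reader.Dispose();
                _reader = null;
            }
            if (_sensor != null)
            {
                if (_sensor.IsOpen)
                {
                    _sensor.Close();
                }
                _sensor = null;
            }
            _instances = 0;
            Caps = SensorCaps.None;
        }
    }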
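The open/close counting pattern used by OpenSensor and CloseSensor can be isolated in a small self-contained sketch. The version below adds a lock, on the assumption that the cameras and the trigger might call these methods from different threads; the article does not state whether that is the case. SharedDevice and all of its members are hypothetical names, and an IDisposable stands in for the Kinect sensor and its reader.

```csharp
using System;

public static class SharedDevice
{
    private static readonly object _sync = new object();
    private static int _instances;
    private static IDisposable _resource;

    // Number of callers currently holding the device open.
    public static int Instances
    {
        get { lock (_sync) { return _instances; } }
    }

    // The first caller creates the resource; later callers only add to the count.
    public static void Open(Func<IDisposable> factory)
    {
        lock (_sync)
        {
            if (_instances == 0)
            {
                // If the factory throws, the count is left untouched.
                _resource = factory();
            }
            _instances++;
        }
    }

    // The last caller to close releases the resource.
    public static void Close()
    {
        lock (_sync)
        {
            if (_instances == 0)
            {
                return; // unbalanced Close: nothing to release
            }
            _instances--;
            if (_instances == 0 && _resource != null)
            {
                _resource.Dispose();
                _resource = null;
            }
        }
    }
}
```

Calling Open twice and Close twice creates the resource once and disposes of it only on the second Close.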

And that’s all, thanks for reading!!