Introduction

This article describes how to use Single-Sign-On (SSO) in a Single Page Application (SPA) hosted on Amazon Web Services (AWS) with a custom domain name.

That's a lot to unpack, so let's break it down. Today we'll learn how to:

- install Node.js to run an Express Single Page Application locally

- sign up for Auth0

- set up a client in Auth0 and obtain its metadata

- set up Single-Sign-On (SSO) code in express.js for a Single Page Application

- test the solution locally

- configure Elastic Beanstalk through .ebextensions for HTTPS

- prepare a zip file and deploy it to Elastic Beanstalk

- set up Amazon Web Services Elastic Beanstalk to host a Node.js website

- set up Amazon Web Services Route 53 to host a custom domain

Background

SAML

SAML stands for Security Assertion Markup Language. We're not going to study SAML in depth here, but briefly:

"SAML is an open standard for exchanging authentication and authorization data between parties, in particular, between an identity provider and a service provider. As its name implies, SAML is an XML-based markup language for security assertions (statements that service providers use to make access-control decisions)." (Wikipedia)

The version of the standard we use here is 2.0, which is the current version at the time of this writing (Jun 2018).

The major players in SAML communication, aside from the end user, are the Service Provider (SP) and the Identity Provider (IdP). The SP in this case is the website the user wants to use. The IdP is a service that holds the database of people who are authorized to access the website and authenticates them. In this demo the SP will be the website that we're building, and the IdP will be Auth0.

SSO

SSO stands for Single-Sign-On. When you have a lot of people who need access to different systems and you don't want them to manage a separate password for each one, you can implement SSO so that the IdP takes care of signing them in.

AWS

Amazon Web Services (AWS) is a collection of many different services. For more in-depth information on AWS, see aws.amazon.com. You can create an AWS account for free, and many of the services have a free usage tier. The services we'll be using, if you decide to follow along, are Route 53 and Elastic Beanstalk. For more information on those, refer to the AWS documentation for each service.

SAML and SSO

Imagine your enterprise has developed many different systems over the years and each one has operated in its own silo. People who have responsibilities that cross departments have to remember their passwords for each system. They also may have to update them on a regular basis and, to make matters worse, the timing might not be synchronized between them so the passwords expire at different times. Then, through a corporate merger, you wind up doubling your enterprise and acquire even more systems. Wouldn't it be nice if your people could use one site for authentication and gain access to all their services without having to run through the login process several times a day? Introducing SAML and SSO.

As I mentioned earlier, Wikipedia defines SAML as "an open standard for exchanging authentication and authorization data". So what does that mean? SAML is an open standard in that it's not proprietary. It defines the structure of the data associated with authenticating a user's access to a particular service. The "SA" in SAML stands for Security Assertion, and "ML" for Markup Language: SAML defines XML documents containing information about a user's access. Remember that the interaction here is between a Service Provider ("SP") and an Identity Provider ("IdP"). The Service Provider requests authentication, and the IdP asserts that the user is authenticated. In our case the SP is a website, but that doesn't have to be the case; any application that can communicate via HTTP can participate in the exchange (though in practice you'll want to use HTTPS).

The process can be implemented in different ways. Your enterprise can create a dashboard that lets the user sign in and then choose from the options displayed on the dashboard to gain access to the various systems. This is referred to as IdP-initiated SSO. Alternatively, the participating systems themselves can start the process: when a user attempts to sign in to any of the systems, that system checks with the IdP. This is called SP-initiated SSO. If the user is already signed in at the IdP, the response is immediate. If not, the IdP authenticates the user first and then sends the response. If the user cannot authenticate or is not granted access to the service, the IdP never sends a successful assertion back to the SP.

Enough already, let's get started - The sample app

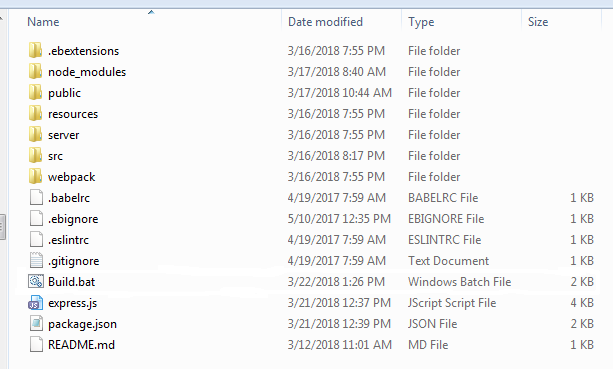

Let's first take a look at the skeleton app. Unzip the sample and look at the folders and files at the root.

We're not going to take an in-depth look at how Express apps are built or the purposes of all the files here in this article. If you want to learn more about Express apps you can visit Express web framework and Express Tutorial. For this article we're going to focus on how we run the site locally for development and testing, how we implemented Single-Sign-On, and how we deploy the website to AWS.

This is a Single Page Application (SPA). Once the user is granted access to the site, all traffic goes to the single page "index2.html". It's named index2 so that the web server won't serve it automatically as the 'default' page (as it would an index.html) when the user types in just the domain name; the page is only returned after the login check. The app runs locally in a Node.js environment, where Express itself acts as the web server, so you don't need to install a separate one such as IIS or Apache. You can find more information about Node.js at nodejs.org. If you don't already have Node.js installed, you can download and install it from there as well.

When installing Node.js, be sure to check the option to add Node.js to your system's PATH environment variable. Node.js runs from the command line, so open a command window at the location of the files (on Windows, Shift + right-click in the folder window adds the command window option to the context menu). The first thing we need to do is install the packages the site depends on. If you open the node_modules folder in the sample, you'll see that it's empty. This is often the case with Node.js samples: the dependencies are downloaded when you install them. To do this we'll use the Node Package Manager (npm), which is installed automatically as part of Node.js.

Let's first see what NPM will install. Navigate back to the root folder and open the package.json file.

{
  "name": "AngularJS-AWS-SSO",
  "version": "1.0.0",
  "description": "Sample site to show SSO on AWS",
  "scripts": {
    "start": "node express.js",
    "start:debug": "npm run build && cross-env NODE_ENV=production node --inspect --trace-warnings express.js",
    "start:build": "npm run build && cross-env NODE_ENV=production node express.js",
    "build": "webpack --config ./webpack/config.prod.js --progress --colors --optimize-minimize"
  },
  "dependencies": {
    "angular": "^1.6.2",
    "angular-route": "^1.6.2",
    "body-parser": "^1.17.1",
    "cookie-encryption": "^1.6.0",
    "cookie-parser": "^1.4.3",
    "cors": "^2.7.1",
    "express": "^4.15.0",
    "morgan": "^1.8.1",
    "passport": "^0.2.2",
    "samlify": "^2.3.6"
  },
  "devDependencies": {
    "babel-core": "^6.23.1",
    "babel-eslint": "^7.1.1",
    "babel-loader": "^6.2.10",
    "babel-preset-es2015": "^6.22.0",
    "copy-webpack-plugin": "^4.0.1",
    "cross-env": ".....
  },
  "license": "ISC",
  "author": "Mike Baker"
}

The package.json file contains two dependency sections: "dependencies" for the runtime and "devDependencies" for development. Running npm install with no arguments installs the packages from both sections. We can also use npm to install a single package by supplying its name, but right now we want them all.

Type npm install in the command line window and press enter.

The first time I did this, several error messages that referred to Python were displayed in the console. If this happens to you, you may need to download and install Python from Python downloads. Be sure to get the 2.7.xx version rather than the newest one, because some of the dependencies require version 2.7.

If all goes well, you should see a very long list of packages being downloaded. If you open the node_modules folder again, you should see about 750 folders, even though package.json lists nowhere near that many. Each package has its own package.json, and npm processes each one in turn so that the dependencies of every package are installed as well.

The npm package manager, in addition to installing packages, can be used to run the application. Let's look again at the top of the package.json file.

{
  "name": "AngularJS-AWS-SSO",
  "version": "1.0.0",
  "description": "Sample site to show SSO on AWS",
  "scripts": {
    "start": "node express.js",
    "start:debug": "npm run build && cross-env NODE_ENV=production node --inspect --trace-warnings express.js",
    "start:build": "npm run build && cross-env NODE_ENV=production node express.js",
    "build": "webpack --config ./webpack/config.prod.js --progress --colors --optimize-minimize"
  },

The "scripts" section contains several scripts that can be run via npm. For example, if we enter npm run start, npm runs the script named "start", which runs node express.js. We could also type node express.js directly into the command line window to achieve the same result.

We can't get the site running just yet. Remember the page is going to request SSO information from an IdP (Identity Provider). We haven't set one up yet so let's get to it.

More SAML

The Identity Provider (IdP)

There are a number of IdP services available. The service I chose for my own internal testing is Auth0. It's free to sign up, and so far I haven't been charged for anything. You can sign up for Auth0 by going to Auth0.com. Enter an email address to use as your user ID and create a password.

After logging in for the first time you're asked to create a domain.

Next comes the account type: after choosing the domain name, you fill in some information about the type of company.

The next step is to set up a test user account. After setting up the account you should be seeing the "Dashboard". On the left side of the screen click "Users" and then click the "Create User" button.

Fill in the popover box with the values you want to use for the test user and click "SAVE".

Once you're done setting up the company and the test user, we can create the client. The term client in Auth0 refers to the app that will be using SSO. In other words our "Service Provider".

After you create the client we're going to need to save the Client ID for later, and then change a few settings. Click the "Settings" link, copy the "Client ID" to the clipboard and save it someplace. (We'll be saving a few things for use later so you might want to create a folder for some text files.)

We can use the defaults for many of these settings, so we'll only focus on the ones that we need to change here. Scroll down to the "Allowed Callback URLs" section.

The "Allowed Callback URLs" entry tells Auth0 where it can send the results when the user logs in. In SAML terms this endpoint is the "Assertion Consumer Service". Each entry needs the domain plus the Express route '/sso/acs'. We'll be using Node.js to run the site locally during testing, so we also need to allow a callback to localhost. Update the settings to match the image, using the domain you'll be using for your site (that step will come later). Although the image makes it look like two separate lines, the value is a single line: three items separated by a comma and a space. Do the same for the "Allowed Logout URLs"; the only difference in those entries is the route, '/sso/logout'.
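If you'd rather not hand-type that single-line value, a small Node.js sketch like the one below can assemble it. It's only an illustration: buildAllowedUrls is a hypothetical helper, and the staging and production base URLs are placeholders for your own domains.

```javascript
// Hypothetical helper: build the one-line, ", "-delimited value that the
// "Allowed Callback URLs" and "Allowed Logout URLs" fields expect.
function buildAllowedUrls(baseUrls, route) {
  return baseUrls
    .map((base) => new URL(route, base).toString()) // resolve the route against each origin
    .join(', ');                                      // single line, comma + space delimiter
}

// Placeholder origins; replace the last two with your own staging/production domains
const bases = [
  'http://localhost:8080',
  'https://staging.example.com',
  'https://www.example.com',
];

console.log(buildAllowedUrls(bases, '/sso/acs'));    // Allowed Callback URLs
console.log(buildAllowedUrls(bases, '/sso/logout')); // Allowed Logout URLs
```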

One more step to do on this screen. Scroll down to the bottom and click "Show Advanced Settings".

In the Advanced Settings section, click "Endpoints", then click the "Copy" icon by the SAML Metadata URL.

SAML Metadata

I mentioned earlier that SAML uses XML to define the interaction, and to relay information, between the SP (Service Provider) and the IdP (Identity Provider). For this to work, the SP and the IdP must agree on the details in advance. This is done using SAML metadata XML files. We obtain one metadata file for the SP and one for the IdP and incorporate both into the SP (our web application). The samlify library uses the values in these metadata files to build the messages sent to the IdP when the user attempts to use the app, and to validate the responses that come back.

In the last step we copied the 'SAML Metadata URL' to the clipboard. Paste that URL into the address bar of a new browser window. Depending on your browser settings, you may see the XML in the browser, or you may be prompted to download the file. If you see the XML in the window, view the page source so you can copy it to the clipboard, and save it in a file named COMPANY_IDP_metadata_localhost.xml. This is the IdP metadata.

Next we'll need the SP metadata. Auth0 doesn't have a tool for generating the SP metadata, but there is one at samltool.com. Navigate to the site, click the menu, and select "ONLINE TOOLS".

SSO uses cryptography involving a private key and a certificate to sign and (optionally) encrypt the SAML messages. For localhost testing, we can use a self-signed certificate. Click "X.509 CERTS" and then "Obtain Self-Signed Certs" in the menu on the left. Fill in the info in the boxes.

Scroll down and click the "GENERATE SELF-SIGNED CERTS" button. You will be rewarded with a private key and an X.509 cert. Copy the contents of each of these boxes and save them in separate files.

We're finally ready to build the SP metadata file. Click "BUILD METADATA" and then "SP" in the menu on the left.

You may recall that we saved the Client ID in a text file during an earlier step, as well as the X.509 cert. We'll need those now to fill in these boxes. Find those items and fill in the form as follows:

Scroll down past all the optional items and click the "BUILD SP METADATA" button and a new box will appear with the XML format metadata that we need. Copy the contents of that window and save it to a file named "COMPANY_SP_metadata_localhost.xml".

SAML metadata xml

Let's open the IdP metadata and take a look at some key values. If you followed along, the file should be COMPANY_IDP_metadata_localhost.xml.

<EntityDescriptor entityID="urn:test-dev.auth0.com" xmlns="urn:oasis:names:tc:SAML:2.0:metadata">
  <IDPSSODescriptor protocolSupportEnumeration="urn:oasis:names:tc:SAML:2.0:protocol">
    <KeyDescriptor use="signing">
      <KeyInfo xmlns="http://www.w3.org/2000/09/xmldsig#">
        <X509Data>
          <X509Certificate>MIIC/zCCAeegAwIB...VBLvQ/NpACq/</X509Certificate>
        </X509Data>
      </KeyInfo>
    </KeyDescriptor>
    <SingleLogoutService Binding="urn:oasis:names:tc:SAML:2.0:bindings:HTTP-Redirect" Location="https://test-dev.auth0.com/samlp/G87R....Mr6l/logout"/>
    <SingleLogoutService Binding="urn:oasis:names:tc:SAML:2.0:bindings:HTTP-POST" Location="https://test-dev.auth0.com/samlp/G87RO....8Mr6l/logout"/>
    <NameIDFormat>urn:oasis:names:tc:SAML:1.1:nameid-format:emailAddress</NameIDFormat>
    <NameIDFormat>urn:oasis:names:tc:SAML:2.0:nameid-format:persistent</NameIDFormat>
    <NameIDFormat>urn:oasis:names:tc:SAML:2.0:nameid-format:transient</NameIDFormat>
    <SingleSignOnService Binding="urn:oasis:names:tc:SAML:2.0:bindings:HTTP-Redirect" Location="https://test-dev.auth0.com/samlp/G87R....Mr6l"/>
    <SingleSignOnService Binding="urn:oasis:names:tc:SAML:2.0:bindings:HTTP-POST" Location="https://test-dev.auth0.com/samlp/G87R....Mr6l"/>
    <Attribute Name="http://schemas.xmlsoap.org/ws/2005/05/identity/claims/emailaddress" NameFormat="urn:oasis:names:tc:SAML:2.0:attrname-format:uri" FriendlyName="E-Mail Address" xmlns="urn:oasis:names:tc:SAML:2.0:assertion"/>
    <Attribute Name="http://schemas.xmlsoap.org/ws/2005/05/identity/claims/givenname" NameFormat="urn:oasis:names:tc:SAML:2.0:attrname-format:uri" FriendlyName="Given Name" xmlns="urn:oasis:names:tc:SAML:2.0:assertion"/>
    <Attribute Name="http://schemas.xmlsoap.org/ws/2005/05/identity/claims/name" NameFormat="urn:oasis:names:tc:SAML:2.0:attrname-format:uri" FriendlyName="Name" xmlns="urn:oasis:names:tc:SAML:2.0:assertion"/>
    <Attribute Name="http://schemas.xmlsoap.org/ws/2005/05/identity/claims/surname" NameFormat="urn:oasis:names:tc:SAML:2.0:attrname-format:uri" FriendlyName="Surname" xmlns="urn:oasis:names:tc:SAML:2.0:assertion"/>
    <Attribute Name="http://schemas.xmlsoap.org/ws/2005/05/identity/claims/nameidentifier" NameFormat="urn:oasis:names:tc:SAML:2.0:attrname-format:uri" FriendlyName="Name ID" xmlns="urn:oasis:names:tc:SAML:2.0:assertion"/>
  </IDPSSODescriptor>
</EntityDescriptor>

Several items in the XML above are worth pointing out. The entityID is the ID of the IdP; it includes the domain you chose when setting up the account in Auth0. The IDPSSODescriptor element indicates that an IdP is being described. The KeyDescriptor carries the IdP's X.509 certificate, and use="signing" tells us the IdP signs its messages with the matching private key, so the SP can use this certificate to verify them. SingleLogoutService tells us that this IdP supports single logout and gives the URL for it. Single logout means that when a user logs out of one of the applications, they should be logged out of all of them. SingleSignOnService gives the corresponding URLs for sign-on. Finally, the Attribute elements (the "identity/claims" names) list the data items the IdP will return when someone logs in, which we'll see later on. The IdP authenticates the user and returns this information, in effect asserting "this user has this email address, this given name, this surname," and so on.
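To pull these values out of a metadata file without reading through all the XML, a quick Node.js sketch like this one may help. It is a shortcut under stated assumptions: it uses regular expressions rather than a real XML parser (samlify does proper parsing), it expects double-quoted attributes, and the function names and the EXAMPLE path in the sample are hypothetical placeholders.

```javascript
// Returns the value of attribute `name` from a single start tag, or null.
// Regex-based on purpose: fine for eyeballing metadata, not a real XML parser.
function attr(tag, name) {
  const m = tag.match(new RegExp(`\\b${name}="([^"]*)"`));
  return m ? m[1] : null;
}

// Hypothetical helper: summarize entityID, SSO endpoints, and claim names
function summarizeIdpMetadata(xml) {
  const entityTag = xml.match(/<(?:\w+:)?EntityDescriptor\b[^>]*>/);
  const ssoTags = xml.match(/<(?:\w+:)?SingleSignOnService\b[^>]*>/g) || [];
  const claimTags = xml.match(/<(?:\w+:)?Attribute\b[^>]*>/g) || [];

  // Key each SSO Location by the last part of its Binding URN, e.g. "HTTP-Redirect"
  const singleSignOn = {};
  for (const tag of ssoTags) {
    const binding = attr(tag, 'Binding');
    if (binding) singleSignOn[binding.split(':').pop()] = attr(tag, 'Location');
  }

  return {
    entityID: entityTag ? attr(entityTag[0], 'entityID') : null,
    singleSignOn,
    claims: claimTags.map((tag) => attr(tag, 'FriendlyName')),
  };
}

// Trimmed copy of the metadata above; "EXAMPLE" stands in for the elided path
const sample = `<EntityDescriptor entityID="urn:test-dev.auth0.com" xmlns="urn:oasis:names:tc:SAML:2.0:metadata">
  <IDPSSODescriptor protocolSupportEnumeration="urn:oasis:names:tc:SAML:2.0:protocol">
    <SingleSignOnService Binding="urn:oasis:names:tc:SAML:2.0:bindings:HTTP-Redirect" Location="https://test-dev.auth0.com/samlp/EXAMPLE"/>
    <SingleSignOnService Binding="urn:oasis:names:tc:SAML:2.0:bindings:HTTP-POST" Location="https://test-dev.auth0.com/samlp/EXAMPLE"/>
    <Attribute Name="http://schemas.xmlsoap.org/ws/2005/05/identity/claims/emailaddress" FriendlyName="E-Mail Address" xmlns="urn:oasis:names:tc:SAML:2.0:assertion"/>
  </IDPSSODescriptor>
</EntityDescriptor>`;

console.log(summarizeIdpMetadata(sample));
```

In practice you would read the real file with fs.readFileSync(..., 'utf8') and pass the string in.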

Next we'll look at the SP metadata. Remember the filename for that? COMPANY_SP_metadata_localhost.xml

<md:EntityDescriptor xmlns:md="urn:oasis:names:tc:SAML:2.0:metadata" validUntil="2018-06-24T15:04:39Z" cacheDuration="PT604800S" entityID="http://localhost:8080/sso/G87RO....Mr6l">
  <md:SPSSODescriptor AuthnRequestsSigned="false" WantAssertionsSigned="false" protocolSupportEnumeration="urn:oasis:names:tc:SAML:2.0:protocol">
    <md:KeyDescriptor use="signing">
      <ds:KeyInfo xmlns:ds="http://www.w3.org/2000/09/xmldsig#">
        <ds:X509Data>
          <ds:X509Certificate>MIICjDCCAfWgA...OFN1X+k9gnBbdvVV1lik4wY=</ds:X509Certificate>
        </ds:X509Data>
      </ds:KeyInfo>
    </md:KeyDescriptor>
    <md:KeyDescriptor use="encryption">
      <ds:KeyInfo xmlns:ds="http://www.w3.org/2000/09/xmldsig#">
        <ds:X509Data>
          <ds:X509Certificate>MIICjDCCAfWgA...OFN1X+k9gnBbdvVV1lik4wY=</ds:X509Certificate>
        </ds:X509Data>
      </ds:KeyInfo>
    </md:KeyDescriptor>
    <md:SingleLogoutService Binding="urn:oasis:names:tc:SAML:2.0:bindings:HTTP-Redirect" Location="http://localhost:8080/sso/logout"/>
    <md:NameIDFormat>urn:oasis:names:tc:SAML:1.1:nameid-format:unspecified</md:NameIDFormat>
    <md:AssertionConsumerService Binding="urn:oasis:names:tc:SAML:2.0:bindings:HTTP-POST" Location="http://localhost:8080/sso/acs" index="1"/>
  </md:SPSSODescriptor>
</md:EntityDescriptor>

The first thing to notice is the 'validUntil' value. The tool that generates it sets an expiration only a few days ahead, so you may want to give yourself more time than that. Next is the entityID, copied from the data you filled in on the form. The SPSSODescriptor element on the following line tells us we're describing the SP here. Notice that there are two KeyDescriptor sections: one for signing and one for encryption. The last two elements of interest are the SingleLogoutService and the AssertionConsumerService. Since this is the SP, the SingleLogoutService specifies the route that handles logout messages coming from the IdP. The AssertionConsumerService specifies the URL where the IdP sends its POST after someone successfully logs in. We're going to step through this process once everything is in place and we can test-run the app. We're almost there.
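A quick way to check how much life the SP metadata has left is sketched below. metadataStatus is a hypothetical helper, and it deliberately handles only the simple PT<n>S (seconds) form of cacheDuration generated above, not the full ISO 8601 duration syntax.

```javascript
// Hypothetical helper: report cache duration and remaining validity of metadata.
// Only "PT<seconds>S" durations are supported; anything else throws.
function metadataStatus(validUntil, cacheDuration, now = new Date()) {
  const m = /^PT(\d+)S$/.exec(cacheDuration);
  if (!m) throw new Error(`Unsupported cacheDuration: ${cacheDuration}`);

  const msLeft = Date.parse(validUntil) - now.getTime();
  return {
    cacheDays: Number(m[1]) / 86400,           // seconds -> days (604800 s = 7 days)
    expired: msLeft <= 0,
    daysLeft: Math.floor(msLeft / 86400000),   // whole days until validUntil
  };
}

// Values from the SP metadata above, checked against a fixed date
console.log(metadataStatus('2018-06-24T15:04:39Z', 'PT604800S', new Date('2018-06-20T00:00:00Z')));
// → { cacheDays: 7, expired: false, daysLeft: 4 }
```

Running a check like this before a deployment is one way to catch metadata that quietly expired since you generated it.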

Back to the sample app

Let's refer back to the sample application and this time we'll open the "resources" folder.

Replace the files COMPANY_SP_metadata_localhost.xml and COMPANY_IDP_metadata_localhost.xml with the ones you saved earlier. Then open the dummy private-key_localhost.pem file (it's just a text file, so you can use Notepad) and replace its contents with the private key you generated on samltool.com and saved.

Next, go back up out of the resources folder and open the 'build.bat' file. We're not going to make any changes here; we're just going to look at what it does. Here's an excerpt from the file.

REM Quote %~1 so the IF lines stay valid when no argument is passed
if "%~1"=="production" GOTO :production
if "%~1"=="staging" GOTO :staging
if "%~1"=="localhost" GOTO :localhost
GOTO :syntax

:production
copy resources\https-instance_production.config .ebextensions\https-instance.config
copy resources\private-key_production.pem server\config\private-key.pem
copy resources\COMPANY_IDP_metadata_production.xml server\config\COMPANY_IDP_metadata.xml
copy resources\COMPANY_SP_metadata_production.xml server\config\COMPANY_SP_metadata.xml
GOTO :buildit

:staging....

My typical work process includes initial development and testing on localhost (or a VM server, but that's another story), then a staging server that provides a production-like environment but isn't available to the public, then a production build. The Express app reads the private key and the metadata files from the "server/config/" folder, but each build needs different ones. This batch file takes the correct private-key, SP metadata, and IdP metadata files from the resources folder and puts them in place, based on the target you specify when running it. For the staging and production targets it also packages the zip file that you deploy to Elastic Beanstalk. Yes, there are a number of ways I could have done this without a batch file and goto. Maybe next time I'll try Python. For now it uses the WinZip command line tools installed in the default location. Close the batch file and use a command line window (Shift + right-click) to run build localhost.

You should have seen "1 file(s) copied" three times, and now the correct metadata and private-key files are in place and ready to go. Let's run the application and see what happens. In the command line window, enter npm run start:build. You should see "Express server running at localhost:8080". Now open a browser window and enter "http://localhost:8080" in the address bar. The Auth0 login box should display.

So how did that work? Let's take a look at the code in express.js. Go ahead and open that file and I'll describe each section.

At the top we include cookie-parser and cookie-encryption. When we get a result back from the IdP, we store an encrypted cookie that indicates the person is signed in. We also include the samlify library, which handles the communication between SP and IdP and parses the data that flows between them. Also at the top is the port used on localhost. It can be overridden by setting the PORT environment variable, but here we just let it default to 8080 (you can change this to any port you like).

const cookieParser = require('cookie-parser');
const cookiee = require('cookie-encryption'); // encrypted-cookie helper
...
const saml = require('samlify');               // SAML SP/IdP library
const port = process.env.PORT || 8080;         // PORT env var wins, otherwise 8080

Next is the creation of the ServiceProvider variable 'sp' and the IdentityProvider variable 'idp'. The code uses the private key and metadata files from the "./server/config/" folder. This is where the batch file placed them, and it renamed them so that the same code works regardless of the build target (localhost, staging, or production). The "privateKeyPass" value is an empty string: when you created the self-signed certificate, the instructions didn't include a password, although there was a box for one. If you did enter a password, this is where you would supply it.

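In the IdP setup, notice that isAssertionEncrypted is set to false. If you set it to true, samlify expects the assertion in the IdP's response to be encrypted. The IdP must also be configured to encrypt assertions, using the public key in the encryption certificate from the SP metadata; when the response comes in, the SP decrypts the assertion with its own private key before parsing it. The certificate alone cannot decrypt anything, because it contains only the public key.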

var ServiceProvider = saml.ServiceProvider;
var IdentityProvider = saml.IdentityProvider;

// Our side of the exchange: the SP private key plus the SP metadata for this build
var sp = ServiceProvider({
  privateKey: fs.readFileSync('./server/config/private-key.pem'),
  privateKeyPass: '', // no passphrase was set when the key was generated
  requestSignatureAlgorithm: 'http://www.w3.org/2000/09/xmldsig#rsa-sha1',
  metadata: fs.readFileSync('./server/config/COMPANY_SP_metadata.xml')
});

// The IdP (Auth0), described entirely by its metadata
var idp = IdentityProvider({
  isAssertionEncrypted: false, // we don't expect encrypted assertions
  metadata: fs.readFileSync('./server/config/COMPANY_IDP_metadata.xml')
});
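To make the key roles concrete, here is a conceptual Node.js sketch of key transport, the idea behind encrypted assertions: the sender encrypts with the recipient's public key, and only the holder of the matching private key can decrypt. It is not SAML's XML Encryption and not what samlify runs internally; the freshly generated key pair stands in for the SP's certificate and private-key.pem, and idpEncrypt/spDecrypt are hypothetical names.

```javascript
const crypto = require('crypto');

// Stand-in for the SP key pair: the public half would live in the SP metadata's
// encryption certificate, the private half in private-key.pem.
const { publicKey, privateKey } = crypto.generateKeyPairSync('rsa', { modulusLength: 2048 });

// "IdP" side: encrypt the assertion with a one-time AES key, then wrap that key
// with the SP's PUBLIC key (publicEncrypt uses RSA-OAEP padding by default).
function idpEncrypt(assertionXml, spPublicKey) {
  const aesKey = crypto.randomBytes(32);
  const iv = crypto.randomBytes(12);
  const cipher = crypto.createCipheriv('aes-256-gcm', aesKey, iv);
  const data = Buffer.concat([cipher.update(assertionXml, 'utf8'), cipher.final()]);
  return { wrappedKey: crypto.publicEncrypt(spPublicKey, aesKey), iv, tag: cipher.getAuthTag(), data };
}

// "SP" side: only the PRIVATE key can unwrap the AES key and read the assertion.
function spDecrypt({ wrappedKey, iv, tag, data }, spPrivateKey) {
  const aesKey = crypto.privateDecrypt(spPrivateKey, wrappedKey);
  const decipher = crypto.createDecipheriv('aes-256-gcm', aesKey, iv);
  decipher.setAuthTag(tag);
  return Buffer.concat([decipher.update(data), decipher.final()]).toString('utf8');
}

const sealed = idpEncrypt('<saml:Assertion>...</saml:Assertion>', publicKey);
console.log(spDecrypt(sealed, privateKey)); // prints <saml:Assertion>...</saml:Assertion>
```

The point of the sketch is the direction of the keys: the certificate (public key) only ever encrypts, and decryption always needs the SP's private key.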

The next section sets up the cookieVault that we'll use to store the cookie after someone logs in. The COOKIE_CODE is just a random set of characters that I picked.

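// Secret used both to encrypt the cookie and as the "signed in" marker value
const COOKIE_CODE = 'w450n84bn09ba0w3ba300730a93nv3070ba5qqvbv07';
const cookieHoursMaxAge = 12;

var cookieVault = cookiee(COOKIE_CODE, {
  cipher: 'aes-256-cbc',
  encoding: 'base64',
  cookie: 'ssohist',                          // cookie name
  maxAge: cookieHoursMaxAge * 60 * 60 * 1000, // 12 hours, in milliseconds
  httpOnly: true                              // not readable from page scripts
});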
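The cookie-encryption package hides the details, but the idea can be sketched with Node's built-in crypto module: the cookie value is encrypted with AES-256-CBC and stored base64-encoded, so the browser only ever holds ciphertext. This is not the package's actual implementation; deriving the 32-byte key with SHA-256, prefixing the IV to the ciphertext, and the helper names are assumptions for illustration.

```javascript
const crypto = require('crypto');

const COOKIE_CODE = 'w450n84bn09ba0w3ba300730a93nv3070ba5qqvbv07';
// Assumption: derive a 32-byte AES-256 key from the secret with SHA-256
const key = crypto.createHash('sha256').update(COOKIE_CODE).digest();

// Hypothetical helper: encrypt a value into what the browser would store
function encryptCookie(value) {
  const iv = crypto.randomBytes(16); // CBC needs a fresh 16-byte IV each time
  const cipher = crypto.createCipheriv('aes-256-cbc', key, iv);
  const body = Buffer.concat([cipher.update(value, 'utf8'), cipher.final()]);
  return Buffer.concat([iv, body]).toString('base64'); // IV travels with the ciphertext
}

// Hypothetical helper: recover the value on the next request
function decryptCookie(cookie) {
  const raw = Buffer.from(cookie, 'base64');
  const decipher = crypto.createDecipheriv('aes-256-cbc', key, raw.subarray(0, 16));
  return Buffer.concat([decipher.update(raw.subarray(16)), decipher.final()]).toString('utf8');
}

const stored = encryptCookie(COOKIE_CODE);
console.log(decryptCookie(stored) === COOKIE_CODE); // true
```

CBC on its own does not detect tampering; for cookies an authenticated mode such as AES-256-GCM, or an HMAC over the ciphertext, is generally the safer design.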

Next we'll skip down near the bottom of express.js and look at app.get('*', ...). This route catches all traffic that isn't matched by any of the routes registered before it (Express matches routes in the order they are defined).

The first line of the handler reads the cookie and checks whether its value equals COOKIE_CODE. Remember, that's our flag to tell whether the login is done. On the first visit it won't be. The handler then excludes the 'favicon' request, and for anything else it redirects to its own /sso/login route. Let's look at that next.

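app.get('*', function (req, res) {
  // Signed in: the decrypted cookie holds our marker value
  if (cookieVault.read(req) === COOKIE_CODE) {
    res.sendFile(path.join(__dirname, publicPath, 'index2.html'));
  } else if (req.url.includes('favicon')) {
    // Don't start a login for the favicon, but still answer so the request doesn't hang
    res.sendStatus(404);
  } else {
    // Not signed in: start SP-initiated login
    res.redirect('/sso/login');
  }
});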
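The decision this handler makes can be pulled into a plain function and exercised without starting Express, as in the sketch below. decideRoute and its return values are hypothetical; in the app, the handler acts on the decision with res.sendFile or res.redirect.

```javascript
// Hypothetical, framework-free version of the catch-all route's decision
function decideRoute(cookieValue, url, cookieCode) {
  if (cookieValue === cookieCode) {
    return { action: 'send', file: 'index2.html' };      // already signed in
  }
  if (url.includes('favicon')) {
    return { action: 'skip' };                            // don't start a login for the icon
  }
  return { action: 'redirect', location: '/sso/login' };  // start SP-initiated login
}

// First visit: no cookie yet, so the user is sent to /sso/login
console.log(decideRoute(undefined, '/', 'w450n84bn09ba0w3ba300730a93nv3070ba5qqvbv07'));
// → { action: 'redirect', location: '/sso/login' }
```

Keeping the decision separate from the Express objects makes the login rule easy to unit-test.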

Here we call on the sp (ServiceProvider) variable we created above to create a request for the IdP to log in the user. The app then redirects to that URL, which is on the IdP's system.

app.get('/sso/login', function (req, res) {
  // Build an AuthnRequest for the HTTP-Redirect binding; context is the IdP URL
  const { context } = sp.createLoginRequest(idp, 'redirect');
  res.redirect(context);
});

Remember the SingleSignOnService entry in the IdP metadata?

<SingleSignOnService Binding="urn:oasis:names:tc:SAML:2.0:bindings:HTTP-Redirect" Location="https://test-dev.auth0.com/samlp/G87....Mr6l"/>

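samlify uses the idp object (built from the IdP metadata) and the 'redirect' binding to pick this Location and build the redirect URL, which carries the encoded login request in a SAMLRequest query parameter. The end result is the login dialog displayed by Auth0.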
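samlify builds that URL for you, but the underlying HTTP-Redirect binding from the SAML 2.0 spec is simple enough to sketch: the AuthnRequest XML is compressed with raw DEFLATE, base64-encoded, and sent URL-encoded as the SAMLRequest query parameter. The minimal, unsigned request below (the SP metadata sets AuthnRequestsSigned="false") is only an illustration; buildRedirectUrl is hypothetical, the EXAMPLE paths stand in for the elided Auth0 IDs, and the request samlify actually generates may contain more elements.

```javascript
const zlib = require('zlib');
const crypto = require('crypto');

// Hypothetical helper: build an SP-initiated login URL for the HTTP-Redirect binding
function buildRedirectUrl(ssoLocation, spEntityId, acsUrl) {
  const xml =
    '<samlp:AuthnRequest xmlns:samlp="urn:oasis:names:tc:SAML:2.0:protocol" ' +
    'xmlns:saml="urn:oasis:names:tc:SAML:2.0:assertion" ' +
    `ID="_${crypto.randomBytes(16).toString('hex')}" Version="2.0" ` + // IDs must not start with a digit
    `IssueInstant="${new Date().toISOString()}" Destination="${ssoLocation}" ` +
    `AssertionConsumerServiceURL="${acsUrl}" ` +
    'ProtocolBinding="urn:oasis:names:tc:SAML:2.0:bindings:HTTP-POST">' +
    `<saml:Issuer>${spEntityId}</saml:Issuer>` +
    '</samlp:AuthnRequest>';

  // 1) raw DEFLATE (no zlib header), 2) base64
  const encoded = zlib.deflateRawSync(xml).toString('base64');

  // 3) URL-encode into SAMLRequest; searchParams escapes the base64 +, / and =
  const url = new URL(ssoLocation);
  url.searchParams.set('SAMLRequest', encoded);
  return url.toString();
}

console.log(buildRedirectUrl(
  'https://test-dev.auth0.com/samlp/EXAMPLE',
  'http://localhost:8080/sso/EXAMPLE',
  'http://localhost:8080/sso/acs'
));
```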

When the user completes the login step, the IdP sends a POST message with the SAML response. This POST goes to the URL in the AssertionConsumerService entry of our SP metadata. The /sso/acs path in that entry matches the Express route app.post('/sso/acs', ...). Here's the code that handles it:

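app.post('/sso/acs', function (req, res) {
  // Validate and parse the SAMLResponse posted by the IdP
  sp.parseLoginResponse(idp, 'post', req)
    .then(parseResult => {
      // Require a NameID before treating the user as signed in
      if (parseResult.extract.nameid) {
        cookieVault.write(req, COOKIE_CODE);
        res.redirect('/');
      } else {
        res.redirect('/sso/login');
      }
    })
    // Only logs the error; the browser gets no response in this case
    .catch(console.error);
});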

This function makes sure there's a nameid in the response. At the beginning of the function we call sp.parseLoginResponse, which returns a Promise, and we use .then(parseResult => ...) to check the response for a nameid. If a nameid is found, the handler writes the cookie and redirects to "/". If not, we're redirected to /sso/login again, which goes back to the IdP for the login dialog. When we're redirected to the site root, "/", the cookie has been written, so this time the test passes and the response is index2.html.

You might have noticed that we're not actually using the nameid value here. The handler checks that it's there and then writes COOKIE_CODE into the cookie. Depending on the type of app you're building, you may want something more robust here. For example, you could use the nameid to look up a user in a database and grant specific permissions within your application based on who's using it. Also, the .catch function just echoes the error to the console and never sends a response to the browser. If you want to display an error message when the response can't be parsed, this is where you would do that (or you could call a general-purpose error reporting module).
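One way to make that more robust is sketched below: map the nameid to application permissions. The in-memory table stands in for a real database, and permissionsFor, the email addresses, and the role names are all hypothetical.

```javascript
// Hypothetical user table standing in for a database lookup
const users = new Map([
  ['admin@example.com', { roles: ['admin', 'reader'] }],
  ['test.user@example.com', { roles: ['reader'] }],
]);

// Hypothetical helper: roles for the NameID returned by the IdP
function permissionsFor(nameId) {
  // Assumption: emailAddress-format NameIDs are compared case-insensitively
  const user = users.get(String(nameId).toLowerCase());
  return user ? user.roles : []; // unknown users get no permissions
}

console.log(permissionsFor('Test.User@example.com')); // prints [ 'reader' ]
```

In the /sso/acs handler you could, for example, call permissionsFor(parseResult.extract.nameid) and refuse the login when it returns an empty list.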

SAML traffic

The SSO transaction can be initiated by the SP or the IdP. The sample code provided here uses SP-initiated login. When a user attempts to use the website, the app.get('*', ...) handler intercepts all traffic and checks a cookie to see whether this browser is already logged in. If the cookie is present, the traffic is sent to the index2.html file. If not, the traffic is redirected to the /sso/login handler, which prepares the login request and redirects to the IdP's URL; hence, SP-initiated login. So what's going on behind the scenes? Can we see this exchange? In fact we can. There are a few browser extensions for watching SAML traffic as it travels between SP and IdP; you can find them in the Chrome Web Store.

I chose the first one. After it's installed, you can find the SAML extension by pressing F12 to open the developer tools and then finding SAML among the tabs (if the tab bar is crowded, it may be hidden in the overflow menu).

After opening the SAML tab we can navigate to the localhost:8080 site and see the traffic interchange between our app and the IdP. In the image you can see two GETs from the app to the IdP, and a POST from the IdP to our /sso/acs. In the tabs on the bottom you can explore the SAML formatted data, the request, and the response.
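If you'd rather not install an extension, captured messages can also be decoded by hand. The Node.js sketch below reverses both bindings seen in this exchange: the GETs carry a DEFLATE-compressed, base64-encoded SAMLRequest in the URL, while the POST to /sso/acs carries a base64-encoded SAMLResponse form field with no compression. It does not verify signatures, so it's for inspection only; the function names and the placeholder messages are hypothetical.

```javascript
const zlib = require('zlib');

// HTTP-Redirect binding (GET to the IdP): URL-decode, base64-decode, raw INFLATE
function decodeRedirect(redirectUrl) {
  const param = new URL(redirectUrl).searchParams.get('SAMLRequest');
  if (!param) throw new Error('No SAMLRequest parameter in URL');
  return zlib.inflateRawSync(Buffer.from(param, 'base64')).toString('utf8');
}

// HTTP-POST binding (POST to /sso/acs): form-decode, base64-decode, no INFLATE
function decodePost(formBody) {
  const param = new URLSearchParams(formBody).get('SAMLResponse');
  if (!param) throw new Error('No SAMLResponse field in body');
  return Buffer.from(param, 'base64').toString('utf8');
}

// Pull the NameID text from a decoded response (regex, not a real XML parser)
function nameIdOf(responseXml) {
  const m = responseXml.match(/<(?:\w+:)?NameID\b[^>]*>([^<]*)<\/(?:\w+:)?NameID>/);
  return m ? m[1] : null;
}

// Self-contained round trip with placeholder messages
const request = '<samlp:AuthnRequest xmlns:samlp="urn:oasis:names:tc:SAML:2.0:protocol" ID="_abc" Version="2.0"/>';
const getUrl = 'https://test-dev.auth0.com/samlp/EXAMPLE?SAMLRequest=' +
  encodeURIComponent(zlib.deflateRawSync(request).toString('base64'));
const response = '<samlp:Response><saml:Assertion><saml:Subject>' +
  '<saml:NameID>test.user@example.com</saml:NameID>' +
  '</saml:Subject></saml:Assertion></samlp:Response>';
const postBody = new URLSearchParams({ SAMLResponse: Buffer.from(response).toString('base64') }).toString();

console.log(decodeRedirect(getUrl) === request); // true
console.log(nameIdOf(decodePost(postBody)));     // test.user@example.com
```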

OK, all done with the localhost testing. Time to prepare the application to be deployed on Amazon Web Services. First, a quick recap:

- SP-initiated login means the SP calls the IdP to ask for a security assertion.

- We include the samlify library in the Express app with const saml = require('samlify');

- Node.js lets us run the site on localhost for development and testing.

- The SP holds both the SP metadata and the IdP metadata, not just its own.

Getting the content ready

SAML metadata

Remember that we used the samltool website to create our own private key, a self-signed X.509 certificate, and the SP metadata. That was fine for localhost testing, but you might not be able to use them for staging and/or production; it depends on the IdP and how the SP is set up. Some IdP systems require that you upload the SAML metadata for the SP. They incorporate it into their setup for the SP and use the information to ensure the traffic is coming from one of their approved sources. In other cases the IdP might require a certificate issued by a particular Certificate Authority (CA), which establishes what's called a "trust anchor" (AKA "anchored trust"). In this scenario the IdP just makes sure that the traffic is signed or encrypted with a certificate that was issued by that CA.

In our test case with Auth0, we incorporated both the SP metadata and the IdP metadata into the app so that the SP can verify that the assertion posted to /sso/acs really comes from the IdP. The same holds for the staging and production targets, except that they use the metadata provided by your particular IdP when those systems are configured.

Note: Using the same private key and certificate for SAML as the ones used for SSL in the next section would most likely NOT be considered a best practice from a security standpoint.

HTTPS certificate

Obtaining an SSL certificate is outside the scope of this article (it's long enough already). However, once you have the certificate and its private key, we need to get them into our setup, so let's do that now.

In order to use HTTPS, an SSL certificate and its associated private key need to be installed on the server. When we set up an app on AWS (which we're about to do), server instances are created on demand, so you won't be there to do the installation and setup. AWS handles this scenario by reading the certificate from special configuration files called EB extensions. Elastic Beanstalk looks for them in a folder called .ebextensions at the root of the deployment bundle (a zip file). We have created this folder in our project. There are two files in the folder: https-instance-single.config and https-instance.config. https-instance-single.config never changes; it's the same for all single-instance sites that are going to support SSL. It opens port 443 in the environment's security group:

Resources:
  sslSecurityGroupIngress:
    Type: AWS::EC2::SecurityGroupIngress
    Properties:
      GroupId: {"Fn::GetAtt" : ["AWSEBSecurityGroup", "GroupId"]}
      IpProtocol: tcp
      ToPort: 443
      FromPort: 443
      CidrIp: 0.0.0.0/0

The file we need to customize is https-instance.config. Once again we need a different file for the staging and the production targets, and this is where the "build" batch file comes into play. The build.bat file uses files in the "resources" folder, so open that folder and find the file https-instance_staging.config:

files:
  /etc/nginx/conf.d/https.conf:
    mode: "000644"
    owner: root
    group: root
    content: |
        # HTTPS server

        server {
            listen 443;
            server_name localhost;

            ssl on;
            ssl_certificate /etc/pki/tls/certs/server.crt;
            ssl_certificate_key /etc/pki/tls/certs/server.key;

            ssl_session_timeout 5m;

            ssl_protocols TLSv1 TLSv1.1 TLSv1.2;
            ssl_ciphers "EECDH+AESGCM:EDH+AESGCM:AES256+EECDH:AES256+EDH";
            ssl_prefer_server_ciphers on;

            location / {
                proxy_pass http://nodejs;
                proxy_set_header Connection "";
                proxy_http_version 1.1;
                proxy_set_header Host $host;
                proxy_set_header X-Real-IP $remote_addr;
                proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            }
        }

  /etc/pki/tls/certs/server.crt:
    mode: "000400"
    owner: root
    group: root
    content: |
        -----BEGIN CERTIFICATE-----
        MIIFUTC...
        SSL Security Cert in PEM format goes here
        make sure each line is indented with 8 spaces
        ...BWj4iNx5R18HtP
        -----END CERTIFICATE-----
        -----BEGIN CERTIFICATE-----
        MIIFD
        If this cert came with intermediate certs, those are
        also included.
        Lcw=
        -----END CERTIFICATE-----
        -----BEGIN CERTIFICATE-----
        MIIE/zCC...
        Include all the intermediate certificates in order 1, 2, n but not the root
        ...OzeXEodm2h+1dK7E3IDhA=
        -----END CERTIFICATE-----

  /etc/pki/tls/certs/server.key:
    mode: "000400"
    owner: root
    group: root
    content: |
        -----BEGIN RSA PRIVATE KEY-----
        MIIEpAIBAAKC...
        The private key goes here,
        indented 8 spaces just like the certs
        ...Nn23WsSqctqP2Ce9g==
        -----END RSA PRIVATE KEY-----

The first thing you see is "files:", followed by /etc/nginx/conf.d/https.conf. If you've worked on a Linux box and set up Nginx, you'll recognize that filename as an Nginx configuration file, in this case one that defines the HTTPS server. Looking further down, you see two other files: /etc/pki/tls/certs/server.crt and /etc/pki/tls/certs/server.key. Each of these has the properties "mode", "owner", "group", and "content". So we can conclude that this config file tells Elastic Beanstalk to create each of these files on the instance, with the given permissions and contents, before the application starts, so the configuration we want is in place at startup.

We need to insert the certificate(s) and the private key in a text format called PEM. When you generated your private key and the X.509 cert for the SAML metadata, they were in that format: plain text with -----BEGIN... and -----END... lines. When you get a cert from a Certificate Authority (CA), it may come in PEM format. If it doesn't, you can use a conversion tool to convert it. To see whether yours came in PEM format, just open the files in Notepad and check whether they're text with the BEGIN and END lines. If they aren't, check with the certificate authority so you know which format you have. There may also be intermediate certificates; make sure each of these is in PEM format as well.
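If a CA hands you a binary (DER) certificate, turning it into PEM is just base64 with 64-character lines between the BEGIN and END lines. Here's a minimal sketch, assuming a single DER-encoded certificate per file; the function names are hypothetical. Bundles such as PKCS#7 (.p7b) or PKCS#12 (.pfx) hold several objects and need a real conversion tool instead.

```javascript
// True if the file contents include a PEM header line such as -----BEGIN CERTIFICATE-----.
function isPem(buf) {
  return /-----BEGIN [A-Z0-9 ]+-----/.test(buf.toString('latin1'));
}

// Wrap DER bytes as PEM: base64 body, 64 characters per line, BEGIN/END lines around it.
function derToPem(derBuf, label = 'CERTIFICATE') {
  const body = derBuf.toString('base64').match(/.{1,64}/g) || [];
  return [`-----BEGIN ${label}-----`, ...body, `-----END ${label}-----`, ''].join('\n');
}

// Usage (hypothetical file names):
// const fs = require('fs');
// const raw = fs.readFileSync('domain.cer');
// if (!isPem(raw)) fs.writeFileSync('domain.pem', derToPem(raw));
```

On Node.js 15.6 or later, new crypto.X509Certificate(derBuffer).toString() performs the same conversion and also fails loudly if the bytes aren't a valid certificate.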

Once you have all the certs and the private key in PEM format, return to this file and find the spot in the /etc/pki/tls/certs/server.crt entry where they need to be inserted. As mentioned in the placeholder text, make sure every line of the certs is indented 8 spaces and that the BEGIN and END lines are included. Insert the main cert and the intermediate certs, if any, but not the root. If you have two intermediates, start with the cert for the domain, then intermediate 1, then intermediate 2.
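Getting the 8-space indentation right by hand is tedious. A small helper can pull the PEM blocks out of each file, in the order you pass them (domain cert, then intermediates, no root), and indent every line. This is a hypothetical sketch, not part of the project's build.bat.

```javascript
// Indentation used for the content: | bodies in https-instance.config.
const INDENT = ' '.repeat(8);

// Pull every -----BEGIN X----- ... -----END X----- block out of a text, ignoring anything else.
function extractPemBlocks(text) {
  return text.match(/-----BEGIN ([A-Z0-9 ]+)-----[\s\S]*?-----END \1-----/g) || [];
}

// Join PEM texts in the order given (domain cert, intermediate 1, intermediate 2, ...; no root)
// and indent every non-empty line by 8 spaces.
function indentPemChain(pemTexts) {
  return pemTexts
    .flatMap((text) => extractPemBlocks(text))
    .flatMap((block) => block.split(/\r?\n/)) // handles Windows line endings too
    .map((line) => line.trim())
    .filter((line) => line.length > 0)
    .map((line) => INDENT + line)
    .join('\n');
}
```

For example, indentPemChain([fs.readFileSync('domain.crt', 'utf8'), fs.readFileSync('intermediate1.crt', 'utf8')]) returns text you can paste under content: | (the file names are placeholders). The same function works for the private key, since the regular expression accepts any BEGIN/END label.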

Do the same thing with the private key in the /etc/pki/tls/certs/server.key entry. This must be the private key you used to create the certificate signing request (CSR) that you submitted to the CA. If the private key and the certs don't match, the server will fail to start and the mismatch will be noted in the logs.
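You can catch a key/cert mismatch before deploying by comparing the public key inside the certificate with the public key derived from the private key. Here's a sketch using Node's built-in crypto module, meant to run locally on a current Node.js release (X509Certificate needs Node.js 15.6 or later, which is newer than the Node.js 6 platform on the server); the function names are hypothetical.

```javascript
const crypto = require('crypto');

// DER (SPKI) bytes of a public key, so two keys can be compared byte for byte.
const spkiBytes = (keyObject) => keyObject.export({ type: 'spki', format: 'der' });

// True if the public key derived from the private key equals the given public key.
// publicKey may be a KeyObject or a PEM string.
function publicKeyMatches(privateKeyPem, publicKey) {
  const derived = crypto.createPublicKey(privateKeyPem); // public half of the private key
  const other =
    publicKey instanceof crypto.KeyObject ? publicKey : crypto.createPublicKey(publicKey);
  return spkiBytes(derived).equals(spkiBytes(other));
}

// Certificate variant: X509Certificate (Node.js 15.6+) exposes the cert's public key.
function certMatchesKey(certPem, privateKeyPem) {
  const cert = new crypto.X509Certificate(certPem);
  return publicKeyMatches(privateKeyPem, cert.publicKey);
}
```

certMatchesKey(fs.readFileSync('server.crt', 'utf8'), fs.readFileSync('server.key', 'utf8')) should return true for a matching pair (placeholder file names). X509Certificate also offers a checkPrivateKey() method that performs the same check directly.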

When the certs and private key are in place for the staging site, repeat the process with the certs and private key for the production site. If you purchased a wildcard SSL cert or a multi-domain cert, you can use the same private key and cert for both. Otherwise you'll need to use the files specific to each target site.
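Whether one cert can cover both targets comes down to the names listed in it. Here's a simplified sketch of wildcard matching, where "*" stands for exactly one leftmost label; the hostnames are hypothetical. Note that *.mydomain.com does not cover the bare mydomain.com, which is why wildcard certs often list the bare domain as a second name.

```javascript
// Simplified wildcard rule: "*." matches exactly one leftmost label, nothing more.
function certNameCovers(certName, hostname) {
  const name = certName.toLowerCase().replace(/\.$/, '');
  const host = hostname.toLowerCase().replace(/\.$/, '');
  if (!name.startsWith('*.')) return name === host; // exact (non-wildcard) name
  const suffix = name.slice(1); // e.g. ".mydomain.com"
  if (!host.endsWith(suffix)) return false;
  const label = host.slice(0, host.length - suffix.length);
  return label.length > 0 && !label.includes('.');
}

// One certificate can serve every target if each hostname is covered by some name in the cert.
function certCoversAll(certNames, hostnames) {
  return hostnames.every((h) => certNames.some((n) => certNameCovers(n, h)));
}

console.log(certCoversAll(['*.mydomain.com'], ['www.mydomain.com', 'staging.mydomain.com'])); // true
console.log(certCoversAll(['*.mydomain.com'], ['mydomain.com'])); // false
```

For a real certificate, Node.js 15.6+ offers crypto.X509Certificate with a subjectAltName property and a checkHost() method that applies the matching rules for you. This sketch ignores partial wildcards such as w*.mydomain.com.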


Bundling the app

OK, we've finished setting up the code for SSO, completed testing the app, obtained the certificates and placed them in the project's config files, and we're ready to deploy to AWS. During the process we'll need to upload a zip file with our site's code and assets, and this is where the batch file comes in handy. In a command line window, run build staging. The /server/config/ folder will now have the proper SAML metadata, private key, and https-instance.config file. The batch file also creates a .zip file called COMPANY-YYYYMMDDHHMMSS.zip (the word COMPANY followed by a timestamp). This is our deployment bundle.
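For readers not on Windows, here's a rough Node.js sketch of two things the bundle step has to get right: the timestamped name, and a file list with node_modules left out and paths relative to the project root (Elastic Beanstalk expects package.json and .ebextensions at the top of the zip, not inside a parent folder). It is a hypothetical stand-in for build.bat, whose contents aren't shown here. Node has no built-in zip writer, so pass the list to the zip tool of your choice.

```javascript
const fs = require('fs');
const path = require('path');

// COMPANY-YYYYMMDDHHMMSS.zip, using local time.
function bundleName(prefix, date = new Date()) {
  const pad = (n) => String(n).padStart(2, '0');
  const stamp =
    String(date.getFullYear()) +
    pad(date.getMonth() + 1) +
    pad(date.getDate()) +
    pad(date.getHours()) +
    pad(date.getMinutes()) +
    pad(date.getSeconds());
  return `${prefix}-${stamp}.zip`;
}

// Directories to leave out (assumption: .git is excluded too; EB runs npm install itself).
const EXCLUDED_DIRS = new Set(['node_modules', '.git']);

// Recursively list files relative to the project root, using forward slashes as zip paths.
function collectBundleFiles(root, dir = root) {
  const files = [];
  for (const entry of fs.readdirSync(dir, { withFileTypes: true })) {
    const full = path.join(dir, entry.name);
    if (entry.isDirectory()) {
      if (!EXCLUDED_DIRS.has(entry.name)) files.push(...collectBundleFiles(root, full));
    } else if (entry.isFile()) {
      files.push(path.relative(root, full).split(path.sep).join('/'));
    }
  }
  return files.sort();
}
```

Keeping .ebextensions in the list matters: without it at the root of the zip, the HTTPS configuration above is silently skipped.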

AWS setup

We've come a long way, and the new live site is almost ready. To perform the next steps you'll need SSL certificates for the new domain. If you don't have a live site to deploy, you can follow along with the screenshots.

The first thing you'll need is an AWS account. Sign up for one at aws.amazon.com.

Fill in the boxes on the sign-up screens that follow.

When you reach the main screen, you'll see all the various services that AWS offers. We're going to be looking for Elastic Beanstalk first.

Click "Create new Application", then enter the values in the popover dialog box.

When you create an application space in AWS it needs an environment in which to run. That's the next step. Click "Actions" then "Create environment".

What we have built is a web application that runs on a web server (but we don't want to maintain a web server ourselves, so we're using AWS). You might notice that this step doesn't mention HTTPS. Don't worry: the HTTPS configuration is in the .ebextensions files we set up earlier.

AWS reads the name of the application and pre-fills the environment name. You need to supply the subdomain name and test it with the "Check availability" button until you find one that's available. Once you find one that works, scroll down.

We want a preconfigured platform using Node.js, and we've already created a bundle (the zip file assembled by the batch file), so click the "Upload your code" radio button and then click the "Upload" button.

Click "Choose File" and navigate to the zip file in the project folder. After selecting the file, click "Upload" and when the upload is done click "Create Environment".

When you click "Create Environment" a process will start that performs all the steps to 'spin up' your new server instance. If all goes well you'll see messages about successful startup and be rewarded with a reload of the page focused on your brand new machine.

OOPS!! Something's wrong. When the page reloads we see the big red ! and the health is "Degraded". To find out why, open "Logs" in the menu on the left.

Click "Request Logs", then "Full Logs", then "Download". You'll get a zip file with a "Logs" folder containing many different logs. I use Windows Grep to search every file in the Logs folder for any instance of "error".

Windows Grep shows me a collection of errors referring to the private key and cert mismatch that I mentioned earlier.
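If you don't have Windows Grep handy, a few lines of Node.js can do the same case-insensitive search across the extracted Logs folder. A minimal sketch (searchLogs is a hypothetical name); it reads each file fully into memory, which is fine for a log bundle of this size.

```javascript
const fs = require('fs');
const path = require('path');

// Recursively search every file under dir for lines containing term (case-insensitive).
// Returns [{ file, line, text }] with 1-based line numbers.
function searchLogs(dir, term = 'error') {
  const needle = term.toLowerCase();
  const hits = [];
  for (const entry of fs.readdirSync(dir, { withFileTypes: true })) {
    const full = path.join(dir, entry.name);
    if (entry.isDirectory()) {
      hits.push(...searchLogs(full, term));
    } else if (entry.isFile()) {
      const lines = fs.readFileSync(full, 'utf8').split(/\r?\n/);
      lines.forEach((text, i) => {
        if (text.toLowerCase().includes(needle)) hits.push({ file: full, line: i + 1, text });
      });
    }
  }
  return hits;
}
```

For example, searchLogs('Logs').forEach((h) => console.log(`${h.file}:${h.line}: ${h.text}`)) prints one line per hit, and passing 'mismatch' as the second argument narrows the search.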

So we fix the key and cert mismatch and return to the dashboard. Click the "Upload and Deploy" button to replace the bad zip file with the corrected one. This uploads the new bundle and restarts the instance initialization with the new contents.

Success! The app shows green! Can we try it out yet? You might think that we can't because we haven't set up the domain within AWS or given any info to the domain's registrar, so the DNS is not going to resolve to the right place. You're right about that, but there is a way we can test. At the top of the screen you'll see the domain that you set up in an earlier step. In my sample it's "samlapp.us-east-1.elasticbeanstalk.com". You can click on that link and the site will open up in the browser (possibly a new tab or a new window).

If you open the site in the browser, you should find yourself at the IdP's login screen. At this point the login won't complete: the company's domain doesn't resolve to this environment yet, and the IdP doesn't have this elasticbeanstalk.com URL in its list of approved callback URLs. We want the app to run through the company's domain, and for that we need Amazon's Route 53 service. Before we leave Elastic Beanstalk, let's take a quick look at some of the settings for the environment you just built. Click the "Configuration" item in the menu.

The "Software" box tells you which platform you selected for this environment: Node.js 6.13.1. The "Instances" box shows that we currently have a single instance and which instance type is being used. "Capacity" tells us this is a single-instance environment, and "Load Balancer" tells us no load balancer is in use. The Capacity and Load Balancer settings work hand in hand, of course. Click inside the Capacity box and you'll see that the only other option is "Load Balanced". This sample site uses a single instance because the user count is not expected to be high in this case. If you expect a lot of traffic on your site, you would configure multiple instances and a load balancer.

Now we have learned

Elastic Beanstalk in Amazon Web Services is used to create a new application environment.

.ebextensions are used to customize an Elastic Beanstalk deployment.

The deployment bundle to upload to an Elastic Beanstalk environment is a zip file containing the application but not the node_modules folder.

Route 53

We have a custom domain name and we want it to point to the Elastic Beanstalk application. That means Route 53. At the very top of the AWS screen click "Services", scroll down a bit and find "Route 53" (or type Route 53 in the search box).

In the screens that follow I'm using the production domain so you don't see "staging" in the names.

When you open Route 53 for the first time you get some quick-start options. We're going to select "DNS Management".

Then select "Create Hosted Zone". This brings you to the screen where your Hosted Zones are listed and you have to hit "Create Hosted Zone" again.

Put the name of your domain in the top box, add a comment, and leave the type set to "Public". Click "Create".

You'll see two record sets already created: the NS (name server) record set and the SOA (start of authority) record set. The NS values are the ones you provide to your domain registrar. It may take as long as two days for the change to propagate throughout the DNS system and start working. For our Elastic Beanstalk server to answer for this domain, we need to create alias record sets.
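While you wait for propagation, you can check what public DNS returns for your domain's NS records and compare it with the name servers Route 53 listed. Here's a sketch using Node's built-in dns module; the function names are hypothetical, and a resolver cache may lag behind the real state.

```javascript
const dns = require('dns').promises;

// Name server host names compare case-insensitively, with or without the trailing dot.
const normalize = (ns) => ns.trim().toLowerCase().replace(/\.$/, '');

// True when the resolved NS set equals the expected Route 53 set.
function delegationMatches(expected, actual) {
  const want = new Set(expected.map(normalize));
  const got = new Set(actual.map(normalize));
  return want.size === got.size && [...want].every((ns) => got.has(ns));
}

// Ask the resolver for the domain's NS records and compare (needs network access).
async function checkDelegation(domain, expected) {
  const actual = await dns.resolveNs(domain);
  return { matches: delegationMatches(expected, actual), actual };
}
```

Call checkDelegation('mydomain.com', route53NameServers) with the values from the NS record set; once matches comes back true, the registrar change has reached your resolver.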

Click "Create Record Set". In the panel on the left we're going to enter values four times: two for IPv4, one with www and one without, and then two for IPv6, one with www and one without. For the first one, leave the "Name" box empty (this record is for the bare domain), select "A - IPv4 address" in the "Type" box, click the "Yes" radio button for "Alias", then click inside the "Alias Target" box. You should see a drop-down list of possible targets. Find the Elastic Beanstalk environment you created earlier. After you select it and it's in the box, highlight it and copy it to the clipboard.

Take a moment to look at the options below the "Alias Target". You can set this to a CloudFront distribution, which would work for a static website that you're distributing around the world, or to a load balancer, which would be your choice if you decided to create multiple instances with load balancing.

Repeat the process for another "A - IPv4 address" record, but this time put www in the "Name" box. That finishes mydomain and www.mydomain for IPv4. Repeat the process for the next two, but select "AAAA - IPv6 address" in the "Type" box. This time you might not see the Elastic Beanstalk environment in the list, which is why you copied it to the clipboard. Just paste it in. When you're all done, the list should show the four alias records along with the original NS and SOA records.
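The same four records can also be created with the AWS CLI instead of the console. This sketch only builds the JSON change batch for Route 53's ChangeResourceRecordSets action, mirroring the console steps above (bare domain and www, A and AAAA). The domain and environment URL are the sample values; the hosted zone ID inside AliasTarget is a placeholder for Elastic Beanstalk's own zone ID for your region (not your hosted zone's ID), which you'd look up in the AWS documentation.

```javascript
// Build one UPSERT per (record type, name) pair, all aliased to the EB environment.
function aliasChangeBatch({ domain, ebDnsName, ebHostedZoneId }) {
  const names = [domain, `www.${domain}`];
  const changes = [];
  for (const type of ['A', 'AAAA']) {
    for (const name of names) {
      changes.push({
        Action: 'UPSERT', // create the record, or update it if it already exists
        ResourceRecordSet: {
          Name: name,
          Type: type,
          AliasTarget: {
            HostedZoneId: ebHostedZoneId, // Elastic Beanstalk's regional zone ID
            DNSName: ebDnsName,
            EvaluateTargetHealth: false,
          },
        },
      });
    }
  }
  return { Comment: `Alias records for ${domain}`, Changes: changes };
}

const batch = aliasChangeBatch({
  domain: 'mydomain.com',
  ebDnsName: 'samlapp.us-east-1.elasticbeanstalk.com',
  ebHostedZoneId: 'EB_REGIONAL_ZONE_ID', // placeholder: look up the value for your region
});
console.log(JSON.stringify(batch, null, 2));
```

Save the output as batch.json and apply it with aws route53 change-resource-record-sets --hosted-zone-id YOUR_ZONE_ID --change-batch file://batch.json, where YOUR_ZONE_ID is the ID of the hosted zone you just created.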

Now we have learned

Route 53 is used to direct domain traffic to an AWS resource such as Elastic Beanstalk instance or load balancer.

We have to create four record sets to handle traffic for the bare domain and www over IPv4 and IPv6.

That's it. A Single Page Application, Single Sign-On using SAML2, deployed to Amazon Web Services Elastic Beanstalk, and served via Route 53 and a custom domain.

Points of Interest


See what you remember:

Q. What does SSO mean?

- Single Sign On

- Separate Sign On

- Subtle Sign On

A) Single Sign On

Q. SAML stands for:

- Security Assistance Markup Language

- Security Assertion Markup Language

- Secure Access Management List

A) Security Assertion Markup Language

Q. True or false: an IdP is used to control access to a single site or service.

A) False. An IdP can be used to give many different people access to many different services.

History

Jun 2018 - First release