Introduction

I recently added the Recursive Descent Parser from Math Function Tutor: Part 2 to my Polynomial Project. While I was at it, I made it a library, replaced the tests in Main() with a Unit Test project called UnitTestProjectMath, and updated the entire project to .NET 4.5.2.

Shortly afterwards, I came across an interesting book at our local library's book sale called "Numerical Mathematics and Computing" (Ward Cheney and David Kincaid, Cengage Learning (c) 2012, 7th Edition). One of the first things that caught my eye was Horner's method for evaluating polynomials (p. 8). However, only pseudocode is presented in the book, so I thought I would write some C# code to implement the algorithm, and an appropriate place to add it is the Polynomial Project since the project already deals with polynomials. Although there are numerous subjects covered in Cheney and Kincaid, I converted the book's pseudocode to C# for six topics, described in detail via the following links:

- Floating Point Representation

- Horner's Method for Evaluating Polynomials

- Bisection Method for Finding Roots

- Gaussian Elimination to Solve System of Equations

- Polynomial Interpolation

- Approximating pi

Using the Code

Download and extract the project, and open Polynomial.sln with Visual Studio. It consists of the following projects:

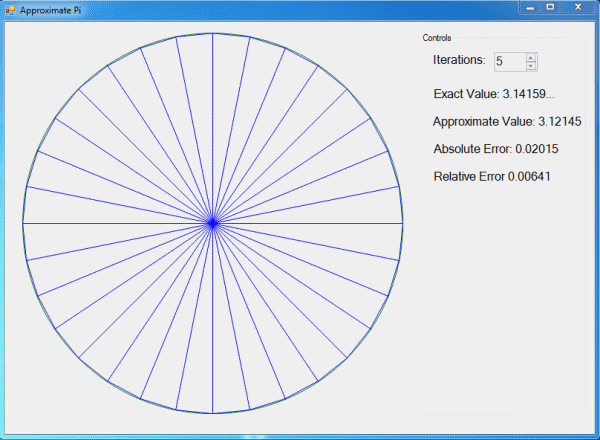

ComputePI - a Windows Forms project to demonstrate computing pi by inscribing triangles as shown in the above imageNumericalMath - the C# code to implement the book's pseudocodePolynomialLib - the original Polynomial Project converted to a libraryRDPLib - the Recursive Descent Parser (RDP). This is the same RDP as in Math Function Tutor: Part 2UnitTestProjectMath - a unit test project for PolynomialLib and NumericalMath

Build the solution by pressing F6.

Floating Point Representation

Here's a quote from the first chapter summing up why it's important to understand the limitations of floating point operations:

Never before in the history of mankind has it been possible to produce so many wrong answers so quickly.

The limitations of floating point math on a computer are familiar to anyone who has taken an introductory Computer Science class when they run a snippet of code like this:

double sum = 0.0;

for (int i = 0; i < 10; i++)

sum += 0.1;

In this example, sum will not have a value of exactly 1.0 as is expected mathematically. This is because 0.1 is not stored exactly. Using Jon Skeet's ToExactString(0.1), we can see 0.1 is actually stored as:

0.1000000000000000055511151231257827021181583404541015625

In the NumericalMath project, in FloatingPoint.cs, I show how a double-precision floating point number is stored. For example, the decimal number -3500.0 is stored as 64 bits: a sign bit, 11 bit exponent, and 52-bit mantissa:

1 10000001010 1011010110000000000000000000000000000000000000000000

The exponent is calculated by converting 100000010102 to its base 10 equivalent, 103410, and subtracting 102310 from it, resulting in 1110. The first bit (1) in the mantissa means 2-1, we ignore the second bit (zero), the third bit means 2-3, the fourth bit means 2-4 and so on, and summing all the fractions results in 0.70898437510. As shown in ToBinaryString() in NumericalMath FloatingPoint.cs, 1.0 is added to that resulting in 1.70898437510. Using the exponent of 1110, we then multiply 211, or 204810, times 1.70898437510, which is 350010. Since the sign bit is 1, the final result is -350010.

Horner's Method for Evaluating Polynomials

Cheney and Kincaid describe a technique known as Horner's Method (or Horner's Rule) for evaluating a polynomial using only addition and multiplication (and no exponential calculations) on page 8. The code is straightforward; I will mention that I provide two versions in NumericalMath HornersMethod.cs, HornerLINQ() and Horner(). The latter, the non-LINQ version, is somewhat faster, as shown by the results in the "Test Explorer" window.

See TestHornerLINQ() and TestHorner() in UnitTest1.cs.

Bisection Method for Finding Roots

Cheney and Kincaid discuss a method of finding the root of a continuous function in an interval on page 114. Using Math Function Tutor: Part 2, we can see from the image below that the root of the equation f(x) = x3.0 - 3.0 * x + 1.0 in the interval [0, 1] is about 0.34.

Implementing Cheney and Kincaid's technique in Bisection() in BisectionMethod.cs, here are the results of finding a root of the above equation in that interval (see MyFunction() in UnitTest1.cs for the function f(x), and see #region debug in NumericalMath BisectionMethod.cs for the code that outputs the numbers in this table):

| Iteration | Root | f(root) | Error |

| 1 | 0.50000 | -3.75e-001 | 5.00e-001 |

| 2 | 0.25000 | 2.66e-001 | 2.50e-001 |

| 3 | 0.37500 | -7.23e-002 | 1.25e-001 |

| 4 | 0.31250 | 9.30e-002 | 6.25e-002 |

| 5 | 0.34375 | 9.37e-003 | 3.13e-002 |

| 6 | 0.35938 | -3.17e-002 | 1.56e-002 |

| 7 | 0.35156 | -1.12e-002 | 7.81e-003 |

| 8 | 0.34766 | -9.49e-004 | 3.91e-003 |

| 9 | 0.34570 | 4.21e-003 | 1.95e-003 |

| 10 | 0.34668 | 1.63e-003 | 9.77e-004 |

Since Epsilon, the maximum absolute value of error, is 0.001, the root of f(x) after 10 iterations is found to be 0.34668.

See TestBisectionMethod() in UnitTest1.cs.

Gaussian Elimination to Solve System of Equations

On page 71, Cheney and Kincaid discuss solving a system of linear equations using a technique called Gaussian Elimination. They show an improvement called Partial Pivoting on page 85. The method GaussSolve() in GaussianElimination.cs shows this technique. It is a two-phase process:

- getting the input matrix in upper-triangluar form

- and then back substituting

The upper-triangluar form is (see #region debug in NumericalMath GaussianElimination.cs for the code that outputs this):

12.00x1 + -8.00x2 + 6.00x3 + 10.00x4 + = 26.00

0.00x1 + -11.00x2 + 7.50x3 + 0.50x4 + = -25.50

0.00x1 + 0.00x2 + 4.00x3 + -13.00x4 + = -21.00

0.00x1 + 0.00x2 + 0.00x3 + 0.27x4 + = 0.27

Now, we do the back substitution starting from the bottom. Thus:

0.27 * x4 = 0.27, x4 = 1.

Then the third equation becomes:

4.00x3 + -13.00 + = -21.00 or

4.00x3 = +8.00, x3 = +2.00, and so on to find x2 and x1, -2 and 1 respectively.

See TestGaussianElimination() in UnitTest1.cs.

Polynomial Interpolation

Cheney and Kincaid describe a method of Polynomial Interpolation on page 153 and it is implemented in NumericalMath PolynomialInterpolation.cs. For example, given a table of known temperatures and viscosities of water like the one below, what is the viscosity for a temperature of 8° C?.

| Temperature °C (x) | Viscosity mPa·s (y) |

| 0.0 | 1.792 |

| 5.0 | 1.519 |

| 8.0 | ? |

| 10.0 | 1.308 |

| 15.0 | 1.140 |

First, we compute the coefficients for a third order polynomial by calling CalculateCoefficients() with the x and y values in the table and an array to receive the coefficients. The double[] coefficients passed to CalculateCoefficients() is then passed to Evaluate() along with the x values and value of 8, the x value for which the y value is desired. The returned y value is 1.38. Using simple linear interpolation...

double viscosity = 1.519 - ((8.0 - 5.0) * (1.519 - 1.308) / (10.0 - 5.0));

...results in a value of 1.39 giving us confidence in the result. See TestPolynomialInterpolation() in UnitTest1.cs.

Approximating Pi

For the last example, I wanted to do something a little more fun. Cheney and Kincaid provide a method used by ancient mathematicians to approximate pi (p. 35) by inscribing triangles inside a unit circle and adding the areas. One such mathematician to do this was Archimedes. In the project ComputePI, I show a Windows Form project to do just that. As you select the number of iterations, the approximate value of pi, the Absolute and Relative errors (Cheney and Kincaid, page 6) are computed as shown in the above image. I believe Archimedes himself would have been impressed with what you can do with a 3GHz quad core processor and some code.

Conclusion

Modern Digital Computers can solve mathematical problems faster than people imagined possible even a few decades ago. However, due to the limitations of the floating point representation of numbers, as so eloquently stated in the opening quotation, one must always be cautious of the results as the answers can range from very accurate to wildly inaccurate, due to round off errors, truncation errors, or an iterative method failing to converge on a solution.

History