Randomness matters, but it tends to come in clumps, especially when you are debugging code or analyzing reports. It hurts when overall results suffer because of a few random failures that you can't control (or that you may not want to track down and fix right now because of other priorities on your machine). In these cases, a 'second chance' can repair a large part of your reports and your overall test results.

Following that idea, in this article I'll talk about how you can RE-RUN the testcases that failed because of a random misfire on your machine or environment. Of course, this random glitch should not come from the application under test or from your automation toolset: if your testing is interrupted or a testcase fails because of your own application's behavior, that is critical and you should handle it in your automation. We will also see how to merge the results (passing ratios, reports, etc.) as a job-end activity when the re-run is performed via a batch command in your job. At the end, as a bonus, we will discuss what the 'non-critical' tag does when we include it in the batch command.

A Bit of History

Robot Framework 3.0.4 is the current version (I haven't released it; I just read that in the official Robot Framework documentation). A few years ago, though, testers and engineers really needed an addition to its options for re-executing failed testcases and then merging the reports accordingly. For this reason, the --rerunfailed option for re-executing failed tests was added in Robot Framework 2.8, which was released around the middle of 2013. Shortly afterwards, Robot Framework 2.8.4 added another command-line option for merging output results (--rerunmerge, renamed to --merge in later releases). These two options are really useful in combination: together they give much better overall results and save you a lot of manual hassle.

How These Simple Options Do the Magical Stuff

The rerun-failed option (--rerunfailed) re-executes the failed testcases once the initial test execution has completed. It picks out the failed testcases from the earlier output file by their status and then re-executes only those testcases, giving each one another chance to pass if it failed intermittently.

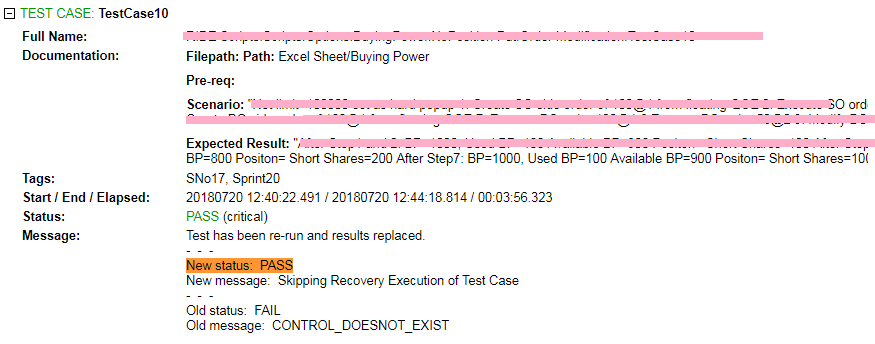

Figure 1: Jenkins showing the rerun status of the test cases.

In the image above, you can see that this testcase failed in the first run and passed in the second execution; its Status, Old status, and Old message fields tell the whole story. Notice the old message, which gives the reason for the failure in the first run: 'Control doesn't exist', an error that shows up only rarely, when something unexpected happens. In the next execution, the control was found and the testcase passed.

Figure 2: Jenkins showing the command being run to rerun the tests.

ipy -m robot.run --output output-i.xml --include "BuildNumber" --exclude "InValid" "RIDE Scripts" & ipy -m robot.run --rerunfailed output-i.xml --output output-ii.xml --include "BuildNumber" --exclude "InValid" "RIDE Scripts"

The batch command above is largely self-explanatory, but it is still worth explaining in chunks. The first ipy block tells Robot Framework to execute the test scripts tagged with the given build number ('BuildNumber' here) and to exclude any test script that has the 'InValid' tag. It runs the matching test scripts in the folder named 'RIDE Scripts' and stores the output in output-i.xml, from which the report files (log.html and report.html) are generated afterward.

Interesting Info: The main file generated after executing test scripts with Robot Framework is output.xml. The two HTML files, log.html and report.html, are then generated from it; they are nicely formatted and readable, which makes them suitable for analysis.

Command - rebot output.xml

The second ipy block adds the --rerunfailed option, which takes the output XML file generated by the first execution. We then give the name of another output file that will hold the results of re-executing the failed testcases (output-ii.xml here). Note that the rerun command also accepts the include and exclude options, so you can, for example, keep intentionally failing tests out of the re-run, or restrict it in other ways according to your testing requirements.
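If you would rather drive the two runs from a script than from a single batch line, the same sequence might be sketched in Python as below. This is only an illustration under assumptions: `ipy` is taken to be on the PATH, and the helper names `build_commands` and `run_both` are hypothetical, not part of Robot Framework. The options themselves are the ones used in the batch command.

```python
import subprocess


def build_commands(tag, excluded_tag, scripts_dir,
                   first_output="output-i.xml",
                   rerun_output="output-ii.xml"):
    """Build argument lists for the first run and the rerun of failed tests."""
    selection = ["--include", tag, "--exclude", excluded_tag, scripts_dir]
    first_run = ["ipy", "-m", "robot.run", "--output", first_output] + selection
    rerun = ["ipy", "-m", "robot.run", "--rerunfailed", first_output,
             "--output", rerun_output] + selection
    return first_run, rerun


def run_both(first_run, rerun):
    """Run the rerun after the first run finishes, whatever its exit code."""
    # The first run's exit code is non-zero when tests fail; like `&` in
    # the batch command, we deliberately ignore it and carry on.
    subprocess.call(first_run)
    return subprocess.call(rerun)


first, second = build_commands("BuildNumber", "InValid", "RIDE Scripts")
print(first)
print(second)
```

Passing the arguments as a list avoids quoting problems with the space in "RIDE Scripts". Calling `run_both(first, second)` would actually start the two executions.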

After this step, you will have two different XML output files, and neither of them is truly useful on its own until you merge them into one and generate the log and report files from that merged output.

Figure 3: Writing the command to merge the files that were just created.

ipyrebot --rerunmerge --output output.xml --noncritical "non-critical" -l log.html -r report.html output-i.xml output-ii.xml

Again, this looks simple. Here, we merge the two XML files and generate one final output.xml, log.html, and report.html, from which you can view and analyze the final results. In this report, the failure rate will be considerably lower, because the failed testcases have been re-run and those that failed only because of some unexpected behavior, and passed the second time, no longer count as failures.
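The effect of merging on the passing ratio can be illustrated with a small Python sketch. It models only the core idea (a re-run result replaces the first-run result of the same test) and is not how rebot is implemented; the test names and the helpers `merge_results` and `pass_ratio` are hypothetical.

```python
def merge_results(first_run, rerun):
    """Rerun statuses replace the first-run statuses of the same tests."""
    merged = dict(first_run)
    merged.update(rerun)
    return merged


def pass_ratio(results):
    """Fraction of tests whose status is PASS."""
    passed = sum(1 for status in results.values() if status == "PASS")
    return float(passed) / len(results)


first_run = {
    "Valid Login": "PASS",
    "Open Settings": "FAIL",   # intermittent failure
    "Export Report": "FAIL",   # genuine failure
    "Close Session": "PASS",
}
# Only the two failed tests were executed again.
rerun = {"Open Settings": "PASS", "Export Report": "FAIL"}

merged = merge_results(first_run, rerun)
print(pass_ratio(first_run))  # 0.5
print(pass_ratio(merged))     # 0.75
```

The genuine failure still shows up after the merge, which is the point: a second chance removes noise from the report without hiding real defects.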

At the start, I mentioned the non-critical tag; here is what it is for and how to use it. Have a look at the following command first.

ipy -m robot.run --output output-i.xml --noncritical "non-critical" --include "BuildNumber" --exclude "InValid" "RIDE Scripts"

In the batch command above, after giving the name of the first output XML file, I've added the --noncritical option followed by the tag name "non-critical", which is just a normal tag added to the test scripts like your other tags. Testcases carrying this tag are treated as non-critical, so when they fail, the failure does not affect the status of the whole suite. As a result, the overall trend and testing summary in the Jenkins project stay clear.
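As a rough model of what this option changes in the summary, consider the following Python sketch. It assumes that a suite fails only when at least one critical test fails, and it matches tags by exact string comparison, which is a simplification. The test data and the function name `summarize` are hypothetical.

```python
def summarize(tests, noncritical_tag=None):
    """Count critical and non-critical results and derive the suite status."""
    counts = {"critical": {"PASS": 0, "FAIL": 0},
              "noncritical": {"PASS": 0, "FAIL": 0}}
    for test in tests:
        is_noncritical = (noncritical_tag is not None
                          and noncritical_tag in test["tags"])
        group = "noncritical" if is_noncritical else "critical"
        counts[group][test["status"]] += 1
    # Assumption: only failures of critical tests fail the suite.
    suite_status = "FAIL" if counts["critical"]["FAIL"] else "PASS"
    return counts, suite_status


tests = [
    {"name": "Valid Login", "tags": ["BuildNumber"], "status": "PASS"},
    {"name": "Open Settings", "tags": ["BuildNumber"], "status": "PASS"},
    {"name": "Flaky Tooltip", "tags": ["BuildNumber", "non-critical"],
     "status": "FAIL"},
]

print(summarize(tests)[1])                  # FAIL
print(summarize(tests, "non-critical")[1])  # PASS
```

Without the option, every test counts as critical and the single failure fails the suite; with it, the same failure is still counted, but under the non-critical column.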

Figure 4: Running the test cases without non-critical flag.

Figure 5: Running the test cases with non-critical flag.

You can see the clear difference between these two images in how the final summary reports the counts of critical and non-critical test cases. I ran the first Jenkins build without the non-critical option in the command, and for the next build I added the non-critical option to the batch command.

You won't feel the satisfaction until you try these commands and see them helping your UI testing in action. So far, we've gone through several steps and techniques for improving your Robot Framework test results, especially when intermittent failures are looking for ways to mess up your testing.