Introduction

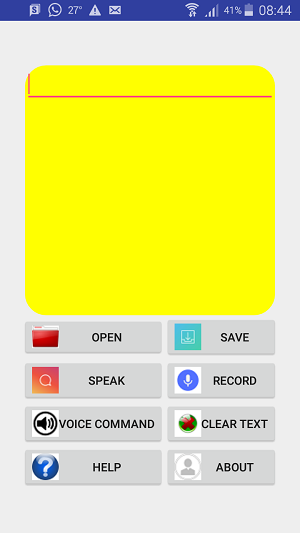

The Android SDK's text-to-speech (TTS) engine is a very useful tool for adding voice to Android apps. In this article, we will look at converting text to speech with the TTS engine, and converting speech to text with the speech recognizer. Along the way, we will see how these features can be used in a practical Notepad app with voice support, which I have named TalkingNotePad. The app has the standard Notepad features, such as opening and saving text files. It also adds voice features: recording speech as text, speaking the file contents, and performing actions through voice commands. We will also briefly look at text file input and output using the Storage Access Framework (SAF). In this app, every command can be executed either with a button or by voice.

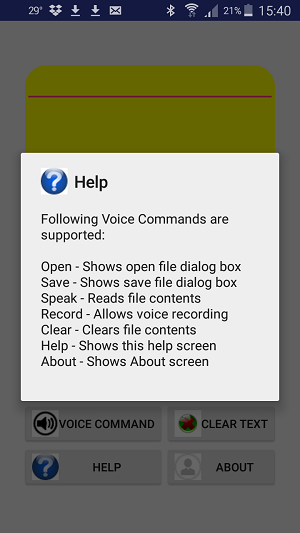

The following options are available either through the buttons or through voice commands:

- Open - To open a file

- Save - To save a file

- Speak - To speak text

- Record - To record voice

- Voice Command - To execute commands using voice

- Clear Text - To clear the text

- Help - To show Help screen

- About - To show About screen

Background

To convert text to speech in an app, you need an instance of the TextToSpeech class and an implementation of the TextToSpeech.OnInitListener interface. OnInitListener contains the onInit() method, which is called when the TextToSpeech engine has finished initializing. Its integer parameter reports the result of the initialization (TextToSpeech.SUCCESS or TextToSpeech.ERROR). Once initialization has succeeded, we can call the speak() method of the TextToSpeech class to play text as speech. The first parameter of speak() is the text to be spoken and the second is the queue mode. QUEUE_ADD appends the new entry to the end of the playback queue, while QUEUE_FLUSH drops all pending entries and replaces them with the new one. The three-argument form of speak() used in this article is deprecated as of API level 21, which added an overload that also takes an utterance ID.
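To make the two queue modes concrete, here is a small plain-Java toy model. It is not part of the Android SDK: SpeechQueueModel and its Mode enum are hypothetical names, and the model only tracks which entries are still waiting to be played.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Hypothetical toy model of a TTS playback queue; it is not an Android class.
final class SpeechQueueModel
{
    // Stand-ins for TextToSpeech.QUEUE_ADD and TextToSpeech.QUEUE_FLUSH.
    enum Mode { ADD, FLUSH }

    private final Deque<String> pending = new ArrayDeque<>();

    void speak(String text, Mode mode)
    {
        if (mode == Mode.FLUSH)
        {
            // FLUSH: drop every entry that has not been played yet.
            pending.clear();
        }
        // In both modes the new entry ends up at the tail of the queue.
        pending.addLast(text);
    }

    // Entries still waiting to be played, in playback order.
    List<String> pendingEntries()
    {
        return new ArrayList<>(pending);
    }
}
```

Calling speak("one", ADD) and speak("two", ADD) leaves both entries queued; a following speak("three", FLUSH) leaves only "three".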

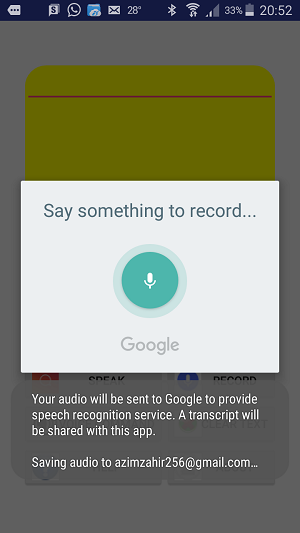

To convert speech to text, we can start an activity with a RecognizerIntent that uses the ACTION_RECOGNIZE_SPEECH action, call startActivityForResult(), and then handle the result in the onActivityResult() method.

The ACTION_RECOGNIZE_SPEECH action starts an activity that prompts the user for speech and sends it through a speech recognizer.

The recognition results are returned in the result Intent as an ArrayList of strings stored under the EXTRA_RESULTS key. The list is generally ordered from the most confident match to the least confident.
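Because the most likely match usually comes first, the app simply takes the first element of the list. A slightly more defensive choice is sketched below in plain Java. It also looks at the float array stored under RecognizerIntent.EXTRA_CONFIDENCE_SCORES, which recognizers may supply but are not required to. The class name RecognitionResults is hypothetical.

```java
import java.util.List;

// Hypothetical helper: picks one transcription from the recognizer's candidates.
final class RecognitionResults
{
    private RecognitionResults() {}

    // results: the list from getStringArrayListExtra(RecognizerIntent.EXTRA_RESULTS)
    // scores:  the array from getFloatArrayExtra(RecognizerIntent.EXTRA_CONFIDENCE_SCORES), may be null
    // Returns the best candidate, or null when there are no results.
    static String best(List<String> results, float[] scores)
    {
        if (results == null || results.isEmpty())
        {
            return null;
        }
        // Without usable scores, rely on the usual ordering (most confident first).
        if (scores == null || scores.length != results.size())
        {
            return results.get(0);
        }
        int bestIndex = 0;
        for (int i = 1; i < scores.length; i++)
        {
            if (scores[i] > scores[bestIndex])
            {
                bestIndex = i;
            }
        }
        return results.get(bestIndex);
    }
}
```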

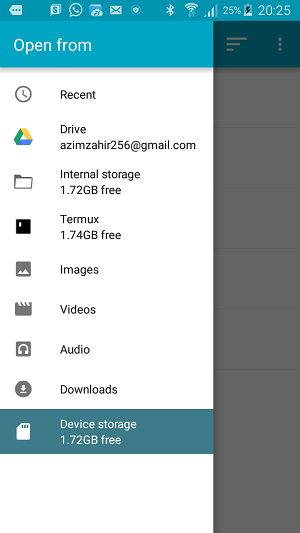

To open or create files, we can use the Storage Access Framework. The Storage Access Framework consists of the following elements:

- Document Provider, which allows accessing files from a storage device.

- Client App, which invokes the ACTION_OPEN_DOCUMENT or ACTION_CREATE_DOCUMENT intents to work with files returned by document providers.

- Picker, which provides the UI to access files from document providers that satisfy the client app's search criteria.

In SAF, we can use the ACTION_OPEN_DOCUMENT and ACTION_CREATE_DOCUMENT intents for opening and creating files respectively. The actual tasks of opening and creating files can be implemented in the onActivityResult() method.
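With SAF, the Intent returned to onActivityResult() carries a content:// Uri. The usual way to read or write such a document is through the ContentResolver, using getContentResolver().openInputStream(uri) and getContentResolver().openOutputStream(uri). Both return ordinary Java streams, so the stream handling can be written and tried in plain Java. The following is a minimal sketch that assumes the files are UTF-8 text. TextStreams, readText() and writeText() are hypothetical names.

```java
import java.io.BufferedReader;
import java.io.BufferedWriter;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.io.OutputStream;
import java.io.OutputStreamWriter;
import java.io.Writer;
import java.nio.charset.StandardCharsets;

// Hypothetical helpers: the stream part of opening and saving a text document.
final class TextStreams
{
    private TextStreams() {}

    // Reads the whole stream as UTF-8 and joins the lines with "\n".
    // A trailing line break at the end of the file is not preserved.
    static String readText(InputStream in) throws IOException
    {
        StringBuilder text = new StringBuilder();
        try (BufferedReader reader = new BufferedReader(new InputStreamReader(in, StandardCharsets.UTF_8)))
        {
            String line;
            boolean first = true;
            while ((line = reader.readLine()) != null)
            {
                if (!first)
                {
                    text.append('\n');
                }
                text.append(line);
                first = false;
            }
        }
        return text.toString();
    }

    // Writes the text as UTF-8; closing the writer also closes the underlying stream.
    static void writeText(OutputStream out, String text) throws IOException
    {
        try (Writer writer = new BufferedWriter(new OutputStreamWriter(out, StandardCharsets.UTF_8)))
        {
            writer.write(text);
        }
    }
}
```

In an activity, the open branch could call readText(getContentResolver().openInputStream(uri)) and the save branch could call writeText(getContentResolver().openOutputStream(uri), text). This avoids converting the Uri into a file path.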

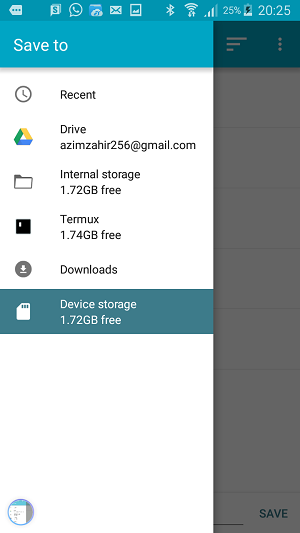

The open and save screens are as follows:

Using the Code

The following layout creates the interface for the Notepad app:

```xml
<?xml version="1.0" encoding="utf-8"?>
<LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    android:orientation="vertical"
    android:gravity="center">

    <ScrollView
        android:layout_width="600px"
        android:layout_height="600px"
        android:scrollbars="vertical"
        android:background="@drawable/shape">

        <EditText
            android:id="@+id/txtFileContents"
            android:layout_width="match_parent"
            android:layout_height="match_parent" />

    </ScrollView>

    <TableLayout
        android:layout_width="wrap_content"
        android:layout_height="wrap_content">

        <TableRow>
            <Button
                android:id="@+id/btnOpen"
                android:text="Open"
                android:drawableLeft="@drawable/open"
                android:layout_width="wrap_content"
                android:layout_height="wrap_content" />
            <Button
                android:id="@+id/btnSave"
                android:text="Save"
                android:drawableLeft="@drawable/save"
                android:layout_width="wrap_content"
                android:layout_height="wrap_content" />
        </TableRow>

        <TableRow>
            <Button
                android:id="@+id/btnSpeak"
                android:text="Speak"
                android:drawableLeft="@drawable/speak"
                android:layout_width="wrap_content"
                android:layout_height="wrap_content" />
            <Button
                android:id="@+id/btnRecord"
                android:text="Record"
                android:drawableLeft="@drawable/record"
                android:layout_width="match_parent"
                android:layout_height="wrap_content" />
        </TableRow>

        <TableRow>
            <Button
                android:id="@+id/btnVoiceCommand"
                android:text="Voice Command"
                android:drawableLeft="@drawable/command"
                android:layout_width="wrap_content"
                android:layout_height="wrap_content" />
            <Button
                android:id="@+id/btnClear"
                android:text="Clear Text"
                android:drawableLeft="@drawable/clear"
                android:layout_width="match_parent"
                android:layout_height="wrap_content" />
        </TableRow>

        <TableRow>
            <Button
                android:id="@+id/btnHelp"
                android:text="Help"
                android:drawableLeft="@drawable/help"
                android:layout_width="wrap_content"
                android:layout_height="wrap_content" />
            <Button
                android:id="@+id/btnAbout"
                android:text="About"
                android:drawableLeft="@drawable/about"
                android:layout_width="wrap_content"
                android:layout_height="wrap_content" />
        </TableRow>

    </TableLayout>

</LinearLayout>
```

The rounded yellow background of the ScrollView that contains the EditText is defined by the following markup in res/drawable/shape.xml:

```xml
<?xml version="1.0" encoding="utf-8"?>
<shape xmlns:android="http://schemas.android.com/apk/res/android"
    android:shape="rectangle">
    <!-- <shape> has no width/height attributes; an intrinsic size belongs in <size>. -->
    <size
        android:width="300px"
        android:height="600px" />
    <corners android:radius="50px" />
    <solid android:color="#FFFF00" />
    <stroke
        android:width="2px"
        android:color="#FFFF00" />
</shape>
```

The following function fires the ACTION_OPEN_DOCUMENT intent:

```java
public void open()
{
    Intent intent = new Intent(Intent.ACTION_OPEN_DOCUMENT);
    intent.addCategory(Intent.CATEGORY_OPENABLE);
    intent.setType("*/*");
    startActivityForResult(intent, OPEN_FILE);
}
```

The above code triggers the execution of the following code in the onActivityResult() method which opens the selected file using stream classes and displays its contents on an EditText control:

```java
if (resultCode == RESULT_OK)
{
    try
    {
        Uri uri = data.getData();
        String filename = uri.toString().substring(uri.toString().indexOf("%"))
                .replace("%2F", "/").replace("%3A", "/storage/emulated/0/");
        FileInputStream stream = new FileInputStream(new File(filename));
        InputStreamReader reader = new InputStreamReader(stream);
        BufferedReader br = new BufferedReader(reader);
        StringBuffer buffer = new StringBuffer();
        String s = br.readLine();
        while (s != null)
        {
            buffer.append(s + "\n");
            s = br.readLine();
        }
        txtFileContents.setText(buffer.toString().trim());
        br.close();
        reader.close();
        stream.close();
    }
    catch (Exception ex)
    {
        AlertDialog.Builder builder = new AlertDialog.Builder(this);
        builder.setCancelable(true);
        builder.setTitle("Error");
        builder.setMessage(ex.getMessage());
        builder.setIcon(R.drawable.error);
        AlertDialog dialog = builder.create();
        dialog.show();
    }
}
```
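The filename expression in the code above assumes a particular content:// URI layout produced by the external storage provider. The following plain-Java sketch reproduces the same string operations so that their output can be checked for a few URIs. The class name DocumentPathHack is hypothetical, and the example URIs are illustrative rather than taken from the app.

```java
// Hypothetical class that repeats the Uri-to-path conversion used above, outside Android.
final class DocumentPathHack
{
    private DocumentPathHack() {}

    // Same steps as the article's code: cut at the first '%', decode "%2F" to "/",
    // and replace the encoded ':' ("%3A") with the primary storage root.
    // Throws StringIndexOutOfBoundsException when the string contains no '%'.
    static String toPath(String uriString)
    {
        return uriString.substring(uriString.indexOf("%"))
                .replace("%2F", "/")
                .replace("%3A", "/storage/emulated/0/");
    }
}
```

A primary-storage document such as content://com.android.externalstorage.documents/document/primary%3ANotes%2Fnote.txt maps to /storage/emulated/0/Notes/note.txt as intended. A document on a removable card (document ID 1234-5678:note.txt) is mapped onto primary storage instead, and a URI without any encoded character makes substring() throw. Opening the stream with getContentResolver().openInputStream(uri) avoids the conversion altogether.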

Similarly, the following function fires the ACTION_CREATE_DOCUMENT intent:

```java
public void save()
{
    Intent intent = new Intent(Intent.ACTION_CREATE_DOCUMENT);
    intent.addCategory(Intent.CATEGORY_OPENABLE);
    intent.setType("text/plain");
    intent.putExtra(Intent.EXTRA_TITLE, "newfile.txt");
    startActivityForResult(intent, SAVE_FILE);
}
```

And this results in the execution of the following code to save the contents of the EditText control to a file:

```java
if (resultCode == RESULT_OK)
{
    try
    {
        Uri uri = data.getData();
        String filename = uri.toString().substring(uri.toString().indexOf("%"))
                .replace("%2F", "/").replace("%3A", "/storage/emulated/0/");
        FileOutputStream stream = new FileOutputStream(new File(filename));
        OutputStreamWriter writer = new OutputStreamWriter(stream);
        BufferedWriter bw = new BufferedWriter(writer);
        bw.write(txtFileContents.getText().toString(), 0,
                txtFileContents.getText().toString().length());
        bw.close();
        writer.close();
        stream.close();
    }
    catch (Exception ex)
    {
        AlertDialog.Builder builder = new AlertDialog.Builder(this);
        builder.setCancelable(true);
        builder.setTitle("Error");
        builder.setMessage(ex.getMessage());
        builder.setIcon(R.drawable.error);
        AlertDialog dialog = builder.create();
        dialog.show();
    }
}
```

In order to speak the contents of the EditText control, the following user defined function is used:

```java
public void speak()
{
    if (txtFileContents.getText().toString().trim().length() == 0)
    {
        AlertDialog.Builder builder = new AlertDialog.Builder(this);
        builder.setCancelable(true);
        builder.setTitle("Error");
        builder.setMessage("Nothing to speak. Please type or record some text.");
        builder.setIcon(R.drawable.error);
        AlertDialog dialog = builder.create();
        dialog.show();
    }
    else
    {
        tts = new TextToSpeech(getApplicationContext(), new TextToSpeech.OnInitListener()
        {
            public void onInit(int status)
            {
                if (status != TextToSpeech.ERROR)
                {
                    tts.setLanguage(Locale.US);
                    String str = txtFileContents.getText().toString();
                    tts.speak(str, TextToSpeech.QUEUE_ADD, null);
                }
            }
        });
    }
}
```

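The above code first checks that there is some text to speak. It then creates a TextToSpeech engine. Once initialization succeeds, it sets the language to Locale.US, reads the contents of the EditText control into a string variable, and finally calls the speak() method of TextToSpeech to convert the text to speech. Because a new TextToSpeech instance is created on every call and never released, an app would normally create one instance, reuse it, and call its shutdown() method when it is no longer needed, for example in onDestroy().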
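TextToSpeech.speak() accepts at most TextToSpeech.getMaxSpeechInputLength() characters (available from API level 18), so a long note may not be spoken in a single call. One way to handle this is to cut the text into pieces and queue each piece with QUEUE_ADD. The sketch below does the splitting in plain Java, under the assumption that breaking at whitespace is acceptable. SpeechChunker is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: splits text into pieces of at most maxLength characters,
// breaking at whitespace where possible so that words are not cut in half.
final class SpeechChunker
{
    private SpeechChunker() {}

    static List<String> split(String text, int maxLength)
    {
        if (maxLength <= 0)
        {
            throw new IllegalArgumentException("maxLength must be positive");
        }
        List<String> chunks = new ArrayList<>();
        int start = 0;
        while (start < text.length())
        {
            int end = Math.min(start + maxLength, text.length());
            if (end < text.length())
            {
                // Walk back to the nearest whitespace; if there is none, cut hard at maxLength.
                int cut = end;
                while (cut > start && !Character.isWhitespace(text.charAt(cut)))
                {
                    cut--;
                }
                if (cut > start)
                {
                    end = cut;
                }
            }
            // Trimming only removes characters, so each piece stays within maxLength.
            String piece = text.substring(start, end).trim();
            if (!piece.isEmpty())
            {
                chunks.add(piece);
            }
            start = end;
        }
        return chunks;
    }
}
```

Inside onInit(), each piece could then be queued with tts.speak(piece, TextToSpeech.QUEUE_ADD, null, "note-" + i). This is the four-argument overload available from API level 21, where the last argument is an utterance ID.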

The following code is used to record speech using the ACTION_RECOGNIZE_SPEECH intent:

```java
public void record()
{
    Intent intent = new Intent(RecognizerIntent.ACTION_RECOGNIZE_SPEECH);
    // EXTRA_LANGUAGE expects an IETF language tag string such as "en-US", not a Locale object.
    intent.putExtra(RecognizerIntent.EXTRA_LANGUAGE, Locale.getDefault().toLanguageTag());
    intent.putExtra(RecognizerIntent.EXTRA_LANGUAGE_MODEL, RecognizerIntent.LANGUAGE_MODEL_FREE_FORM);
    if (voiceCommandMode && !recording)
    {
        intent.putExtra(RecognizerIntent.EXTRA_PROMPT, "Speak a command to be executed...");
    }
    else
    {
        intent.putExtra(RecognizerIntent.EXTRA_PROMPT, "Say something to record...");
    }
    startActivityForResult(intent, RECORD_VOICE);
}
```

The above code checks whether a voice command is being executed or normal speech is being recorded, and displays a different prompt for each case. It then triggers the execution of the following code in the onActivityResult() method:

```java
if (resultCode == RESULT_OK)
{
    // EXTRA_RESULTS holds the candidate transcriptions, most likely first.
    ArrayList<String> result = data.getStringArrayListExtra(RecognizerIntent.EXTRA_RESULTS);
    if (voiceCommandMode)
    {
        String command = result.get(0).toUpperCase();
        if (command.equals("OPEN") || command.startsWith("OP") || command.startsWith("OB"))
        {
            Toast.makeText(getBaseContext(), "Executing Open Command", Toast.LENGTH_SHORT).show();
            open();
        }
        else if (command.equals("SAVE") || command.startsWith("SA") || command.startsWith("SE"))
        {
            Toast.makeText(getBaseContext(), "Executing Save Command", Toast.LENGTH_SHORT).show();
            save();
        }
        else if (command.equals("SPEAK") || command.startsWith("SPA") ||
                command.startsWith("SPE") || command.startsWith("SPI"))
        {
            Toast.makeText(getBaseContext(), "Executing Speak Command", Toast.LENGTH_SHORT).show();
            speak();
        }
        else if (command.equals("RECORD") || command.startsWith("REC") || command.startsWith("RAC") ||
                command.startsWith("RAK") || command.startsWith("REK"))
        {
            Toast.makeText(getBaseContext(), "Executing Record Command", Toast.LENGTH_SHORT).show();
            recording = true;
            record();
        }
        else if (command.equals("CLEAR") || command.equals("KLEAR") ||
                command.startsWith("CLA") || command.startsWith("CLE") ||
                command.startsWith("CLI") || command.startsWith("KLA") ||
                command.startsWith("KLE") || command.startsWith("KLI"))
        {
            Toast.makeText(getBaseContext(), "Executing Clear Command", Toast.LENGTH_SHORT).show();
            clear();
        }
        else if (command.equals("HELP") || command.startsWith("HAL") ||
                command.startsWith("HEL") || command.startsWith("HIL") || command.startsWith("HUL"))
        {
            Toast.makeText(getBaseContext(), "Executing Help Command", Toast.LENGTH_SHORT).show();
            help();
        }
        else if (command.equals("ABOUT") || command.startsWith("ABA") || command.startsWith("ABO"))
        {
            Toast.makeText(getBaseContext(), "Executing About Command", Toast.LENGTH_SHORT).show();
            about();
        }
        else
        {
            Toast.makeText(getBaseContext(), "Unrecognized command", Toast.LENGTH_SHORT).show();
        }
        voiceCommandMode = false;
    }
    else
    {
        txtFileContents.setText(result.get(0));
    }
}
```

The above code executes one of the voice commands if the "Voice Command" button was clicked. Otherwise, it simply displays the spoken text in the EditText control. It uses the getStringArrayListExtra() method with the EXTRA_RESULTS key to get the list of results, and then uses the get() method to take the first element, which is the most likely match.

Note: To reduce the chance of voice commands not being recognized, I have also compared the speech with similar-sounding words. I am not sure it is the best approach, but it was a quick solution.
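The same prefix rules can also be kept in a table. This makes it easier to add words, and it lets the app try every alternative the recognizer returned instead of only the first. Below is a minimal plain-Java sketch. CommandMatcher is a hypothetical name, and the prefixes are the ones used in onActivityResult() above.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;

// Hypothetical table-driven version of the prefix matching used in onActivityResult().
final class CommandMatcher
{
    private CommandMatcher() {}

    // Command name -> prefixes of words the recognizer may return for it (checked in this order).
    private static final Map<String, String[]> PREFIXES = new LinkedHashMap<>();
    static
    {
        PREFIXES.put("OPEN", new String[] {"OP", "OB"});
        PREFIXES.put("SAVE", new String[] {"SA", "SE"});
        PREFIXES.put("SPEAK", new String[] {"SPA", "SPE", "SPI"});
        PREFIXES.put("RECORD", new String[] {"REC", "RAC", "RAK", "REK"});
        PREFIXES.put("CLEAR", new String[] {"CLA", "CLE", "CLI", "KLA", "KLE", "KLI"});
        PREFIXES.put("HELP", new String[] {"HAL", "HEL", "HIL", "HUL"});
        PREFIXES.put("ABOUT", new String[] {"ABA", "ABO"});
    }

    // Returns the command matched by the first alternative that matches anything, or null.
    static String match(List<String> alternatives)
    {
        for (String spoken : alternatives)
        {
            String upper = spoken.trim().toUpperCase(Locale.ROOT);
            for (Map.Entry<String, String[]> entry : PREFIXES.entrySet())
            {
                for (String prefix : entry.getValue())
                {
                    if (upper.startsWith(prefix))
                    {
                        return entry.getKey();
                    }
                }
            }
        }
        return null;
    }
}
```

onActivityResult() could then pass the whole EXTRA_RESULTS list to match() and run the action for the returned name, showing "Unrecognized command" when it returns null.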

Commands can also be executed by clicking on buttons. The following code in the onClick() method initiates the actions depending on the button clicked:

```java
voiceCommandMode = false;
recording = false;
Button b = (Button) v;
if (b.getId() == R.id.btnOpen)
{
    open();
}
if (b.getId() == R.id.btnSave)
{
    save();
}
if (b.getId() == R.id.btnSpeak)
{
    speak();
}
if (b.getId() == R.id.btnRecord)
{
    record();
}
if (b.getId() == R.id.btnVoiceCommand)
{
    voiceCommandMode = true;
    record();
}
if (b.getId() == R.id.btnClear)
{
    clear();
}
if (b.getId() == R.id.btnHelp)
{
    help();
}
if (b.getId() == R.id.btnAbout)
{
    about();
}
```

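The following permissions need to be added to the AndroidManifest.xml file so that the code above can read from and write to external storage by file path. On Android 6.0 (API level 23) and higher, these are dangerous permissions that must also be requested at runtime:

```xml
<uses-permission android:name="android.permission.READ_EXTERNAL_STORAGE"/>
<uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE"/>
```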

Points of Interest

This is just one example of a voice-based Android app built with the TextToSpeech API and the speech recognizer. Many more such apps can be created with these APIs.