Introduction

Machine learning models can themselves be attacked: adversaries can use machine learning techniques to make a model misbehave or to slip malicious input past it.

In this post, we are going to explore how you can fool artificial neural networks and deep learning malware detectors with Generative Adversarial Networks.

In 2014, Ian Goodfellow, Yoshua Bengio, and their team proposed a framework called the Generative Adversarial Network (GAN). GANs can generate images from random noise. For example, we can train a generative network to produce images of handwritten digits that resemble those in the MNIST dataset.

Generative adversarial networks are composed of two major parts: a generator and a discriminator.

Training the Generator to Generate Images

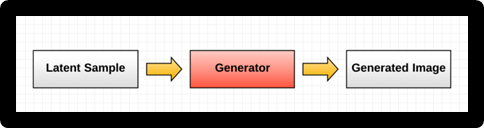

The generator takes latent samples, which are randomly generated numbers, as input and is trained to turn them into images.

For example, to generate a handwritten digit, the generator can be a fully connected network that takes latent samples and produces 784 data points, which are reshaped into 28x28 pixel images (the size of MNIST digits). Using tanh as the output activation is highly recommended, since it keeps the generated pixel values between -1 and 1:

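    from keras.models import Sequential
    from keras.layers import Dense, LeakyReLU, Activation

    generator = Sequential([
        Dense(128, input_shape=(100,)),  # latent sample of size 100
        LeakyReLU(alpha=0.01),
        Dense(784),                      # 784 = 28 x 28 pixels
        Activation('tanh')               # outputs in [-1, 1]
    ], name='generator')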
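To make the shapes concrete, here is a minimal NumPy sketch of the same forward pass. It assumes randomly initialized, untrained weights, and the names `W1`, `W2`, `leaky_relu`, and `generator_forward` are illustrative only; they are not part of Keras.

```python
import numpy as np

rng = np.random.default_rng(0)

# Illustrative, untrained weights with the same shapes as the layers above.
W1, b1 = rng.normal(0, 0.05, (100, 128)), np.zeros(128)
W2, b2 = rng.normal(0, 0.05, (128, 784)), np.zeros(784)

def leaky_relu(x, alpha=0.01):
    # Pass positive values through and scale negative values by alpha.
    return np.where(x > 0, x, alpha * x)

def generator_forward(z):
    # Dense(128) -> LeakyReLU -> Dense(784) -> tanh
    h = leaky_relu(z @ W1 + b1)
    return np.tanh(h @ W2 + b2)

# 16 latent samples of size 100, drawn uniformly from [-1, 1).
z = rng.uniform(-1, 1, size=(16, 100))
images = generator_forward(z).reshape(-1, 28, 28)
print(images.shape)  # (16, 28, 28)
```

Because the weights are untrained, the resulting images are noise; only training against the discriminator makes them look like digits.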

Classifying the MNIST Data with the Discriminator

The discriminator is simply a classifier trained with supervised learning techniques to check whether an image is real (1) or fake (0). It is trained on both the MNIST dataset and the generator samples. The discriminator will classify the MNIST data as real, and the generator samples as fake:

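    # Sequential, Dense, LeakyReLU and Activation come from Keras
    # (keras.models and keras.layers).
    discriminator = Sequential([
        Dense(128, input_shape=(784,)),  # flattened 28 x 28 image
        LeakyReLU(alpha=0.01),
        Dense(1),
        Activation('sigmoid')            # probability that the image is real
    ], name='discriminator')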
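The labeling scheme can be illustrated with a short NumPy sketch of the binary cross-entropy loss a sigmoid classifier like this is typically trained with. The discriminator outputs below are made-up numbers, and `binary_cross_entropy` is a hypothetical helper written for this example.

```python
import numpy as np

def binary_cross_entropy(y_true, y_pred, eps=1e-7):
    # Clip predictions so that log(0) never occurs.
    y_pred = np.clip(y_pred, eps, 1 - eps)
    return float(-np.mean(y_true * np.log(y_pred)
                          + (1 - y_true) * np.log(1 - y_pred)))

batch_size = 4
# Made-up discriminator outputs: probability that each image is real.
pred_on_real = np.array([0.9, 0.8, 0.95, 0.7])  # for MNIST images
pred_on_fake = np.array([0.2, 0.1, 0.3, 0.05])  # for generator samples

# MNIST images are labeled 1 (real), generator samples 0 (fake).
y_true = np.concatenate([np.ones(batch_size), np.zeros(batch_size)])
y_pred = np.concatenate([pred_on_real, pred_on_fake])

loss = binary_cross_entropy(y_true, y_pred)
print(loss)
```

A discriminator that guesses 0.5 for every image would score log(2), about 0.693, so any loss below that means it separates real from fake better than chance.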

By connecting the two networks, the generator and the discriminator, we produce a generative adversarial network:

    gan = Sequential([
        generator,
        discriminator
    ])

This is a high-level representation of a generative adversarial network:

To train the GAN, we alternate between the two networks. The discriminator is trained on its own first; then, with the discriminator set as non-trainable, the combined model is trained so that back-propagation updates only the generator's weights, pushing it to produce more realistic images. So, to train a GAN, we repeat the following steps in a loop:

- Train the discriminator with real images and generated images (the discriminator is trainable here)

- Set the discriminator as non-trainable

- Train the generator

The training loop continues until neither network can be improved any further.
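The three steps can be sketched on a toy one-dimensional problem with plain NumPy. This is only an illustration under strong assumptions: the generator is a line, `G(z) = a*z + b`, the discriminator is a logistic unit, `D(x) = sigmoid(w*x + c)`, the "real" data is drawn from a normal distribution, and the gradients are written out by hand. None of these names come from the Keras models above.

```python
import numpy as np

rng = np.random.default_rng(42)

def sigmoid(s):
    return 1.0 / (1.0 + np.exp(-s))

# Toy stand-ins: G(z) = a*z + b and D(x) = sigmoid(w*x + c).
g = {"a": 1.0, "b": 0.0}
d = {"w": 0.1, "c": 0.0}
lr, batch_size = 0.05, 64

def train_discriminator(x_real, x_fake):
    # Real samples are labeled 1 and generated samples 0.
    p_real = sigmoid(d["w"] * x_real + d["c"])
    p_fake = sigmoid(d["w"] * x_fake + d["c"])
    grad_w = np.mean((p_real - 1) * x_real) + np.mean(p_fake * x_fake)
    grad_c = np.mean(p_real - 1) + np.mean(p_fake)
    d["w"] -= lr * grad_w
    d["c"] -= lr * grad_c

def train_generator(z):
    # Only a and b are updated; w and c are read but never written,
    # which is what "non-trainable discriminator" means here.
    p_fake = sigmoid(d["w"] * (g["a"] * z + g["b"]) + d["c"])
    grad_x = (p_fake - 1) * d["w"]  # gradient of -log D(G(z)) w.r.t. G(z)
    g["a"] -= lr * np.mean(grad_x * z)
    g["b"] -= lr * np.mean(grad_x)

for step in range(200):
    x_real = rng.normal(3.0, 0.5, batch_size)  # toy "real" data
    z = rng.normal(0.0, 1.0, batch_size)       # latent samples
    x_fake = g["a"] * z + g["b"]
    train_discriminator(x_real, x_fake)        # step 1: D is trainable
    d_before = dict(d)                         # step 2: D is frozen
    train_generator(z)                         # step 3: train G
    assert d == d_before                       # step 3 left D untouched

print(g, d)
```

In Keras, the same freezing is usually done by setting the discriminator's `trainable` attribute to `False` before compiling the combined model.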

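The following code runs the training loop with TensorFlow. It assumes that the graph has already been built, that is, the placeholders input_real and input_z, the losses d_loss and g_loss, the optimizers d_train_opt and g_train_opt, the generator variables g_vars, and the mnist dataset object are defined elsewhere: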

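    import pickle as pkl
    import numpy as np
    import tensorflow as tf
    import matplotlib.pyplot as plt

    # NOTE: mnist, z_size, input_size, g_hidden_size, alpha, g_vars, the
    # placeholders (input_real, input_z), the losses (d_loss, g_loss) and the
    # optimizers (d_train_opt, g_train_opt) must be defined before this point.
    # Here generator() is a graph-building function, not the Keras model above.
    batch_size = 100
    epochs = 100
    samples = []
    losses = []
    # Only the generator variables are saved in the checkpoint
    saver = tf.train.Saver(var_list=g_vars)
    with tf.Session() as sess:
        sess.run(tf.global_variables_initializer())
        for e in range(epochs):
            for ii in range(mnist.train.num_examples//batch_size):
                batch = mnist.train.next_batch(batch_size)
                # Flatten the images and rescale them from [0, 1] to [-1, 1]
                batch_images = batch[0].reshape((batch_size, 784))
                batch_images = batch_images*2 - 1
                # Sample random noise for the generator
                batch_z = np.random.uniform(-1, 1, size=(batch_size, z_size))
                # Run the discriminator and generator optimizers
                _ = sess.run(d_train_opt, feed_dict={input_real: batch_images, input_z: batch_z})
                _ = sess.run(g_train_opt, feed_dict={input_z: batch_z})
            # At the end of each epoch, compute and print the losses
            train_loss_d = sess.run(d_loss, {input_z: batch_z, input_real: batch_images})
            train_loss_g = g_loss.eval({input_z: batch_z})
            print("Epoch {}/{}...".format(e+1, epochs),
                  "Discriminator Loss: {:.4f}...".format(train_loss_d),
                  "Generator Loss: {:.4f}".format(train_loss_g))
            losses.append((train_loss_d, train_loss_g))
            # Keep 16 generated samples per epoch for later inspection
            sample_z = np.random.uniform(-1, 1, size=(16, z_size))
            gen_samples = sess.run(
                generator(input_z, input_size, n_units=g_hidden_size,
                          reuse=True, alpha=alpha),
                feed_dict={input_z: sample_z})
            samples.append(gen_samples)
            saver.save(sess, './checkpoints/generator.ckpt')

    # Save the generated samples collected during training
    with open('train_samples.pkl', 'wb') as f:
        pkl.dump(samples, f)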

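The code relies only on NumPy and TensorFlow. It uses the TensorFlow 1.x session API (tf.Session and tf.train.Saver); under TensorFlow 2.x, these calls are available through tf.compat.v1.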

Using MalGAN Technique

Attackers are now using GANs to generate malware samples that evade machine learning models. Using the same building blocks we discussed previously (a generator and a discriminator), cyber criminals can attack next-generation anti-malware systems without knowing the machine learning technique they use (black box attacks). One of these techniques is MalGAN, presented in the research paper Generating Adversarial Malware Examples for Black-Box Attacks Based on GAN by Weiwei Hu and Ying Tan of the Key Laboratory of Machine Perception (MOE) and the Department of Machine Intelligence at Peking University. The architecture of MalGAN is as follows:

The generator creates adversarial malware samples by taking a malware feature vector, m, and a noise vector, z, as input. The substitute detector is a multilayer feed-forward neural network that takes a program feature vector, X, as input and classifies the program as either benign or malware. It is trained to imitate the black box detector, so that its gradients can be used to train the generator.
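As a rough illustration of the generator's input and output, the sketch below builds one adversarial feature vector with NumPy. It rests on several assumptions that are not stated above: features are binary (1 means the feature is present), the generator is a single untrained layer, and features may only be added, never removed, so that the malware keeps working. All names and sizes are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
n_features, noise_dim = 8, 4

# Hypothetical binary feature vector m of one malware sample.
m = np.array([1, 0, 0, 1, 0, 0, 1, 0])
z = rng.uniform(0, 1, size=noise_dim)  # noise vector z

# Untrained, illustrative single-layer generator: sigmoid(W @ [m, z] + b).
W = rng.normal(0, 1, size=(n_features, n_features + noise_dim))
b = np.zeros(n_features)
o = 1.0 / (1.0 + np.exp(-(W @ np.concatenate([m, z]) + b)))

# Binarize the output and OR it with m: features are added, never removed.
m_adv = np.maximum(m, (o > 0.5).astype(int))

print("original   :", m)
print("adversarial:", m_adv)
```

Every feature that was set in `m` is still set in `m_adv`; the generator can only switch additional features on.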

To train the generative adversarial network, the researchers used this algorithm:

    While not converging do:
        Sample a minibatch of malware M
        Generate adversarial samples M' from the generator for M
        Sample a minibatch of goodware B
        Label M' and B using the black box detector
        Update the weights of the substitute detector
        Update the weights of the generator
    End while
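The loop above can be sketched end to end on synthetic data. Everything in this example is an assumption made for illustration: the black box is a hand-written rule, the substitute detector is a logistic regression rather than a multilayer network, the generator is a single layer, and the feature distributions are invented. It is not the researchers' implementation.

```python
import numpy as np

rng = np.random.default_rng(7)
n_feat, z_dim, batch, lr = 16, 4, 32, 0.1

def sigmoid(s):
    return 1.0 / (1.0 + np.exp(-s))

# Hypothetical black box: the first 8 features look malicious, the last 8 benign.
w_bb = np.concatenate([np.ones(8), -np.ones(8)])
def black_box_detector(X):
    return (X @ w_bb > 0).astype(float)  # 1 = malware, 0 = benign

def sample_programs(n, malicious):
    # Toy data: malware mostly sets the first 8 features, goodware the last 8.
    X = rng.random((n, n_feat)) < 0.2
    cols = slice(0, 8) if malicious else slice(8, 16)
    X[:, cols] = rng.random((n, 8)) < 0.7
    return X.astype(float)

Wg = rng.normal(0, 0.1, (n_feat + z_dim, n_feat))  # generator weights
bg = np.zeros(n_feat)
ws, bs = np.zeros(n_feat), 0.0                     # substitute detector

for step in range(300):
    M = sample_programs(batch, malicious=True)     # minibatch of malware M
    inp = np.hstack([M, rng.random((batch, z_dim))])
    O = sigmoid(inp @ Wg + bg)
    M_adv = np.maximum(M, O)                       # adversarial samples M'
    B = sample_programs(batch, malicious=False)    # minibatch of goodware B

    X = np.vstack([(M_adv > 0.5).astype(float), B])
    y = black_box_detector(X)                      # labels from the black box

    p = sigmoid(X @ ws + bs)                       # update the substitute detector
    ws -= lr * X.T @ (p - y) / len(y)
    bs -= lr * np.mean(p - y)

    p_adv = sigmoid(M_adv @ ws + bs)               # update the generator:
    grad_m = (1 - p_adv)[:, None] * ws             # gradient of log p_adv w.r.t. M'
    grad_pre = grad_m * (O > M) * O * (1 - O)      # back through max and sigmoid
    Wg -= lr * inp.T @ grad_pre / batch
    bg -= lr * grad_pre.mean(axis=0)

# Compare black box detection rates on fresh malware, before and after the generator.
M_test = sample_programs(500, malicious=True)
O_test = sigmoid(np.hstack([M_test, rng.random((500, z_dim))]) @ Wg + bg)
M_test_adv = np.maximum(M_test, (O_test > 0.5).astype(float))
rate_before = black_box_detector(M_test).mean()
rate_after = black_box_detector(M_test_adv).mean()
print(rate_before, rate_after)
```

If training works as intended, `rate_after` should fall below `rate_before`, because the generator learns to add the features that the substitute detector associates with benign programs. Nothing in the sketch guarantees that outcome, though.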

Many of the generated samples may not be valid PE files. To make sure that mutations preserve both the file format and the program's behavior, such systems need a sandbox that verifies the samples still work.

GAN training does not produce good results out of the box, which is why a number of hacks are needed. Soumith Chintala, Emily Denton, Martin Arjovsky, and Michael Mathieu introduced the following tricks to obtain improved results:

- Normalizing the images between -1 and 1

- Using max log D as the objective to optimize G, instead of min log(1 - D)

- Sampling from a Gaussian distribution, instead of a uniform distribution

- Constructing separate mini-batches for real and fake samples, so that each mini-batch contains only real or only generated images

- Avoiding ReLU and MaxPool, and using LeakyReLU and Average Pooling instead

- Using Deep Convolutional GAN (DCGAN), if possible

- Using the ADAM optimizer
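A few of these tricks can be shown in a handful of NumPy lines. The pixel data and discriminator outputs below are made up for illustration, and the gradients are taken with respect to the discriminator's pre-sigmoid output.

```python
import numpy as np

rng = np.random.default_rng(0)

# Normalize pixel values from [0, 255] to [-1, 1], matching a tanh generator.
pixels = rng.integers(0, 256, size=(4, 784))
images = pixels / 127.5 - 1.0

# Sample latent vectors from a Gaussian instead of a uniform distribution.
z = rng.normal(0.0, 1.0, size=(4, 100))

# Generator objective: compare min log(1 - D) with max log D.
# d_on_fake holds made-up values of D(G(z)) = sigmoid(s).
d_on_fake = np.array([0.01, 0.1, 0.5, 0.9])
saturating_grad = -d_on_fake              # d/ds of log(1 - sigmoid(s))
non_saturating_grad = -(1.0 - d_on_fake)  # d/ds of -log(sigmoid(s))

print(saturating_grad)
print(non_saturating_grad)
```

When the discriminator confidently rejects a sample (D close to 0), the gradient of log(1 - D) almost vanishes, while the gradient of -log D stays close to -1. That is the usual argument for the max log D formulation early in training.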

We learned how machine learning models can be bypassed and how they can be fooled with a GAN. We also looked at MalGAN, a technique that uses GANs to evade anti-malware systems.

You have just read an excerpt from the book Mastering Machine Learning for Penetration Testing by Chiheb Chebbi. If you are interested in learning more machine learning techniques for cybersecurity, this is the book for you.

History

- 21st September, 2018: Initial version