By Dante Sblendorio

In order to create a neural net, we first need some data to play with. The UCI Machine Learning Repository has plenty of datasets ready to use. We will use the wine dataset. It contains 178 observations of wine grown in the same region in Italy. Each observation is from one of three cultivars (the ‘Class’ feature), and also has 13 constituent features that are the result of a chemical analysis. It is a clean dataset (it has no missing values), so it doesn’t require any data wrangling to get it in the right form for our neural net. This makes our job a bit easier.

import pandas as pd

wine_names = ['Class', 'Alcohol', 'Malic acid', 'Ash', 'Alcalinity of ash', 'Magnesium', 'Total phenols', 'Flavanoids', 'Nonflavanoid phenols', 'Proanthocyanins', 'Color intensity', 'Hue', 'OD280/OD315', 'Proline']

wine_data = pd.read_csv('https://archive.ics.uci.edu/ml/machine-learning-databases/wine/wine.data', names=wine_names)

wine_df = pd.DataFrame(wine_data)

In this first chunk, we import the pandas library and load the wine dataset. The read_csv function already returns a pandas DataFrame, so the pd.DataFrame call simply makes that explicit.

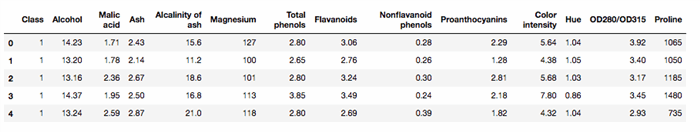

The names of each feature are also taken from the UCI repository. There are two primary data structures in pandas: a Series (one-dimensional) and a DataFrame (two-dimensional). Nearly all datasets can be represented with these two structures. Before creating our neural net, it is best to explore the data to get an idea of its general form and properties. Whenever you work with a new dataset, this should be the first thing you do (after importing, of course). One of the more useful methods is .head(n). This truncates the output to the first n observations, so you can preview as many rows as you like. You can also use .sample(n), which outputs n random observations from your dataset. To see our features, run:

wine_df.head(5)
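To make the Series/DataFrame distinction and the preview methods concrete, here is a small sketch on a made-up table. The name toy_df and its values are assumptions for illustration only and are not rows from the wine dataset; with the real data you would call the same methods on wine_df.

```python
import pandas as pd

# Toy data for illustration only; these are not rows from the wine dataset.
toy_df = pd.DataFrame({
    'Class': [1, 1, 2, 2, 3, 3],
    'Alcohol': [14.2, 13.2, 12.4, 12.3, 12.9, 13.7],
    'Hue': [1.04, 1.05, 1.05, 1.25, 0.68, 0.60],
})

# Selecting a single column yields a one-dimensional Series.
alcohol = toy_df['Alcohol']
print(type(toy_df).__name__, toy_df.shape)    # DataFrame (6, 3)
print(type(alcohol).__name__, alcohol.shape)  # Series (6,)

# head(n) keeps the first n rows; sample(n) draws n rows at random.
first_rows = toy_df.head(2)
random_rows = toy_df.sample(3, random_state=0)  # random_state makes the draw repeatable
print(first_rows)
print(random_rows)
```

Passing random_state is optional; it is used here only so that repeated runs print the same sample.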

Two other useful tools are len(), which returns the number of observations, and .nunique(), which counts the distinct values in a column:

len(wine_df)

wine_df['Class'].nunique()

The .describe() method outputs quick, basic statistics (count, mean, standard deviation, minimum, quartiles, and maximum) for the numerical features in the DataFrame. This is quite useful when dealing with numerical data.

wine_df.describe()

Hopefully, you have noticed a couple of things about the data. One, every feature but Class is numerical. Two, the Class feature is categorical, meaning it can take on one of three values (coinciding with the cultivar). The UCI repository offers a file that describes the data, but it is nice to confirm the characteristics with our own exploration.
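One way to confirm these characteristics in code is sketched below. It uses a small invented table (toy_df, an assumption for illustration) in place of the real download, so the printed numbers are not those of the wine data. The same calls should work on wine_df.

```python
import pandas as pd

# Toy stand-in for wine_df; the values are invented for illustration.
toy_df = pd.DataFrame({
    'Class': [1, 1, 2, 3],
    'Alcohol': [14.2, 13.2, 12.4, 12.9],
    'Proline': [1065, 1050, 520, 630],
})

# Count missing values per column; all zeros means the table is complete.
missing_per_column = toy_df.isna().sum()
print(missing_per_column)

# Inspect the storage type of each column.
print(toy_df.dtypes)

# Class is stored as integers even though it is a label, so count
# how many observations fall into each category.
class_counts = toy_df['Class'].value_counts().sort_index()
print(class_counts)
```

Because the class labels are stored as integers, .describe() will happily report a mean for them; value_counts() is usually the more meaningful summary for a categorical column.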

Before we create a neural net with our wine data, it is useful to understand some of the basics of TensorFlow. If you know a bit of linear algebra, some of the concepts will look familiar. The primary data unit used in TensorFlow is the tensor. To put it simply, a tensor is a multidimensional set of numerical values. There are many rigorous mathematical definitions, but for our case, this definition is sufficient. A tensor's rank is its number of dimensions, while its shape is a tuple of integers specifying the array's length along each dimension. These are some examples of tensor values:

3. # a rank 0 tensor; a scalar with shape []

[1., 2., 3.] # a rank 1 tensor; a vector with shape [3]

[[1., 2., 3.], [4., 5., 6.]] # a rank 2 tensor; a matrix with shape [2, 3]

[[[1., 2., 3.]], [[7., 8., 9.]]] # a rank 3 tensor with shape [2, 1, 3]
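As a rough illustration of rank and shape, the helper below walks a nested Python list and reports its shape. tensor_shape is a hypothetical function written for this example, not part of TensorFlow, and it assumes the nesting is regular (every sub-list at a given depth has the same length).

```python
def tensor_shape(value):
    """Return the shape of a regularly nested list as a list of ints."""
    shape = []
    # Descend along the first element of each level, recording its length.
    while isinstance(value, list):
        shape.append(len(value))
        if not value:
            break  # an empty list has no further levels to inspect
        value = value[0]
    return shape


examples = [
    3.,
    [1., 2., 3.],
    [[1., 2., 3.], [4., 5., 6.]],
    [[[1., 2., 3.]], [[7., 8., 9.]]],
]

for example in examples:
    shape = tensor_shape(example)
    # The rank is simply the number of entries in the shape.
    print('rank', len(shape), 'shape', shape)
```

The four examples are the same values listed above, so the printed shapes can be checked against the comments next to each one.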

In TensorFlow, you explicitly define the type of tensor. For example, this is how to define two constants and add them (the code uses the TensorFlow 1.x API, in which operations run inside a session):

import tensorflow as tf

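# Define two constant tensors, each with shape [2, 3]
const1 = tf.constant([[1, 2, 3], [1, 2, 3]])

const2 = tf.constant([[3, 4, 5], [3, 4, 5]])

# Describe the addition; nothing is computed yet
result = tf.add(const1, const2)

# Run the graph inside a session to get the actual values
with tf.Session() as sess:
    output = sess.run(result)
    print(output)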

The session is another important concept in TensorFlow. A session provides the environment where operations are executed and tensors are evaluated. When we define const1, const2, and result, no operation is performed. The operation is executed only within the session. It is also advantageous to treat operations within TensorFlow as graphs, or a network of nodes. This concept is fundamental to neural networks. The computational graph for the above operation looks like this:

Using computational graphs to represent neural networks is an effective way of understanding how they are structured and how they perform. TensorFlow contains a visualization tool, called TensorBoard, solely for helping users better understand their code. (More on the basics of TensorFlow can be found here.)
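The idea of building a graph first and running it later can be mimicked in a few lines of plain Python. The sketch below is only an analogy: the Constant, Add, and run names are hypothetical and say nothing about how TensorFlow is implemented internally.

```python
class Constant:
    """A graph node that holds a fixed value."""

    def __init__(self, value):
        self.value = value

    def evaluate(self):
        return self.value


class Add:
    """A graph node that adds the results of two other nodes element-wise."""

    def __init__(self, left, right):
        self.left = left
        self.right = right

    def evaluate(self):
        left_rows = self.left.evaluate()
        right_rows = self.right.evaluate()
        return [
            [a + b for a, b in zip(left_row, right_row)]
            for left_row, right_row in zip(left_rows, right_rows)
        ]


def run(node):
    """Play the role of a session: walk the graph and compute the result."""
    return node.evaluate()


# Building the graph performs no arithmetic yet.
const1 = Constant([[1, 2, 3], [1, 2, 3]])
const2 = Constant([[3, 4, 5], [3, 4, 5]])
result = Add(const1, const2)

# The addition happens only when the graph is run.
output = run(result)
print(output)  # [[4, 6, 8], [4, 6, 8]]
```

Here result is just a node that points at its two inputs; the numbers are produced only by the call to run, which mirrors the role sess.run plays in the TensorFlow example.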

To get your entry code for challenge 3, create a new code cell in your Jupyter notebook and enter the following code:

with tf.Session() as sess:
    member_number = tf.constant(12345678)
    result = tf.divide(member_number, tf.constant(20))
    print(int(sess.run(result)))

Replace 12345678 with your CodeProject member number. The number that is printed will be your entry code for this challenge. Please click here to enter the contest entry code.