Docker has been in the limelight since its earliest days, and dockerizing almost everything has become the talk of the town. With all the hype, it keeps getting more attention from developers and enterprises alike. As the IT world has turned toward virtualization, the networking paradigm has also shifted from configuring physical routers, switches, and LAN/WAN links to the networking components found in virtualized platforms, i.e., VMs, cloud, and others. Now that we’re talking about a world composed of containers, you need solid networking skills to configure a container architecture properly. If you want your deployed containers to scale with the requirements of your microservices, you need to get the networking just right. This is where Docker networking comes in, balancing application requirements against the networking environment. And that’s what I’m here for: to give you an overview of Docker networking.

We’re going to start small, talk a little about the basics of Docker networking, and then step up to getting containers to talk to each other using the more recent and advanced options that Docker networking provides. Please note that this article is not meant for absolute beginners; it assumes basic knowledge of Docker and containers. I’m not going to explain what Docker or containers are. Instead, my focus is to smooth the Docker learning curve by giving you an overview of networking, so don’t mind me if I take some sharp turns.

Some Background

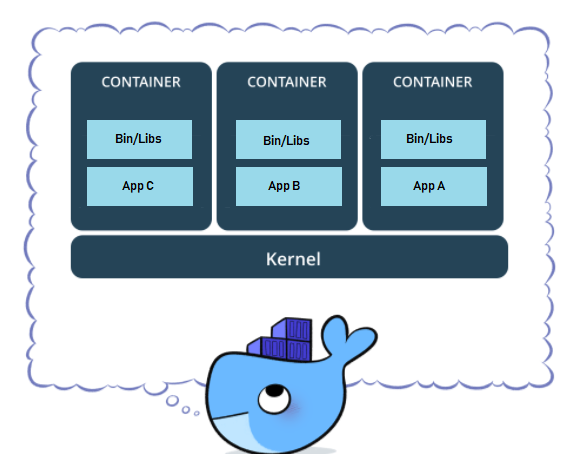

Containers bring a whole new approach to how networking works across your hosts, because a container includes everything needed to run the application: code, runtime, system tools, system libraries, and settings.

This should make it clear that a container isolates the application from the rest of the system to ensure that the environment stays constant. That’s what the whole Docker craze is about, right? (Let’s not forget that Docker is the most popular containerization tool.) The idea is to separate the application from your infrastructure so that you can develop, ship, and run it quickly. So far so good, but there can be hundreds or even thousands of containers per host. Moreover, our microservices can be distributed across multiple hosts. So how do we get containers to talk to the external world? We need to reach them in some way to use the services they provide. And how do we get containers to talk to the host machine or to other containers? For all of this, we need some sort of connection between the containers, and that’s exactly where Docker networking comes in.

To be brutally honest, Docker networking wasn’t something to brag about in the early days. It was complex and wasn’t easy to scale either. It was only in 2015, when Docker acquired SocketPlane, that Docker networking started to shine. Since then, the developer community has made many interesting contributions, including Pipework, Weave, Clocker, and Kubernetes. Docker Inc. also played its card by establishing a project to standardize networking, known as libnetwork, which implements the Container Network Model (CNM). That same Container Network Model will be our focus in this article.

Container Network Model

The Container Network Model can be thought of as the backbone of Docker networking. It aims to keep networking as a library separate from the container runtime, with the actual implementations plugged in as drivers (bridge, overlay, weave, etc.).

That's the whole philosophy of CNM. You can think of it as an abstraction layer that hides the complexity of common networking tasks while supporting multiple network drivers. The model has three main components:

Sandbox: contains the configuration of a container's network stack

Endpoint: joins a Sandbox to a Network

Network: a group of Endpoints that are able to communicate with each other directly
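
To make the relationship between the three components a bit more concrete, here is a minimal Python sketch. It is only a toy model and not libnetwork's actual API: the class names mirror the CNM terms, while the join method, the can_talk helper, and the web/app/db and frontend/backend names are made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Endpoint:
    """Joins one sandbox to one network (both referenced by name)."""
    sandbox: str
    network: str


@dataclass
class Network:
    """A group of endpoints that can communicate with each other directly."""
    name: str
    endpoints: list = field(default_factory=list)


@dataclass
class Sandbox:
    """Holds the configuration of one container's network stack."""
    name: str
    endpoints: list = field(default_factory=list)

    def join(self, network: Network) -> Endpoint:
        # Hypothetical helper: create an endpoint linking this sandbox
        # to the given network and register it on both sides.
        endpoint = Endpoint(sandbox=self.name, network=network.name)
        self.endpoints.append(endpoint)
        network.endpoints.append(endpoint)
        return endpoint


def can_talk(a: Sandbox, b: Sandbox) -> bool:
    """Two sandboxes can talk directly if they share at least one network."""
    networks_a = {e.network for e in a.endpoints}
    networks_b = {e.network for e in b.endpoints}
    return bool(networks_a & networks_b)


frontend = Network("frontend")
backend = Network("backend")

web, app, db = Sandbox("web"), Sandbox("app"), Sandbox("db")
web.join(frontend)
app.join(frontend)
app.join(backend)  # a sandbox may hold several endpoints
db.join(backend)

print(can_talk(web, app))  # True: both are on "frontend"
print(can_talk(web, db))   # False: no shared network
```

The point of the sketch is that a Sandbox never connects to another Sandbox directly. It always goes through an Endpoint on a shared Network, which is why attaching two containers to the same network later in this article is enough to let them talk.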

Here’s a pictorial representation of the model.

The project is hosted on GitHub. If you’re interested in knowing more about CNM, I would encourage you to go ahead and give it a thorough read.

I heard about CNI as well...?

Well, yes! Container Network Interface (CNI) is another standard for container networking. It was started by CoreOS and is used by Cloud Foundry, Kubernetes, and others, but it is beyond the scope of this article, which is why I haven’t covered it here. I might talk about it in an upcoming article, but my apologies for now. Feel free to search and learn on your own.

Libnetwork

libnetwork implements the CNM we just talked about. Docker was introduced under the philosophy of providing a great user experience and seamless application portability across infrastructure, and networking follows the same philosophy. Portability and extensibility were the two main factors behind the libnetwork project. The long-term goal of the project, as officially stated, is to follow the Docker and Linux philosophy of delivering small, highly modular, and composable tools that work well independently, and to satisfy that composable need for networking in containers. libnetwork is not just a driver interface; there is much more to it. Some of its main features are:

- Built-in IP address management

- Multi-host networking

- Service discovery and load balancing

- Plugins to extend the ecosystem

Things should make sense by now. With that said, I feel that’s enough talking and it’s time to get our hands on it.

Some Fun with Networking Modes

I’m assuming that you already have Docker installed on your machine. If not, please follow the documentation here to get Docker. I’m working on Linux (Ubuntu 18.04 LTS), so everything I’m going to show you works there. Since it's Docker we are talking about, things shouldn’t be very different on Mac, Windows, or any other platform you might be working on. You can verify the installation by simply checking the version.

$ docker version

And you should get output like the following:

    root@mehreen-Inspiron-3542:/home/mehreen# docker version
    Client:
     Version: 18.06.1-ce
     API version: 1.38
     Go version: go1.10.3
     Git commit: e68fc7a
     Built: Tue Aug 21 17:24:51 2018
     OS/Arch: linux/amd64
     Experimental: false

    Server:
     Engine:
      Version: 18.06.1-ce
      API version: 1.38 (minimum version 1.12)
      Go version: go1.10.3
      Git commit: e68fc7a
      Built: Tue Aug 21 17:23:15 2018
      OS/Arch: linux/amd64
      Experimental: false

Now we are good to rock and roll. You can check the available commands for networks by using the following command:

$ docker network

And it results in the following output:

    Usage: docker network COMMAND

    Manage networks

    Commands:
      connect     Connect a container to a network
      create      Create a network
      disconnect  Disconnect a container from a network
      inspect     Display detailed information on one or more networks
      ls          List networks
      prune       Remove all unused networks
      rm          Remove one or more networks

    Run 'docker network COMMAND --help' for more information on a command.

Let’s see what else is there for us.

Default Networking modes:

Some networking modes are active by default. They can also be called single-host Docker networking modes. To view the Docker networks, run:

$ docker network ls

The above command lists three networks.

It’s pretty self-explanatory. The bridge, host, and none networks are created for us by default, using the bridge, host, and null drivers respectively. One thing to note is that the scope is shown as local, meaning that these networks only work on this host. But what is so different about these networks? Let’s see.

Bridge Networking Mode

The Docker daemon creates a virtual Ethernet bridge named “docker0” that forwards packets between all interfaces attached to it. Let's inspect this network a little more by using the inspect command and specifying the name or ID of the network.

$ docker network inspect bridge

And it would output all the specifications and configurations of the network.

    root@mehreen-Inspiron-3542:/home/mehreen# docker network inspect bridge
    [
        {
            "Name": "bridge",
            "Id": "f47f4e8e34ebe75035115b88e301ac9548eb99e429cb8d9d9b387dec07a2db5f",
            "Created": "2018-10-13T14:34:39.071898384+05:00",
            "Scope": "local",
            "Driver": "bridge",
            "EnableIPv6": false,
            "IPAM": {
                "Driver": "default",
                "Options": null,
                "Config": [
                    {
                        "Subnet": "172.17.0.0/16",
                        "Gateway": "172.17.0.1"
                    }
                ]
            },
            "Internal": false,
            "Attachable": false,
            "Ingress": false,
            "ConfigFrom": {
                "Network": ""
            },
            "ConfigOnly": false,
            "Containers": {},
            "Options": {
                "com.docker.network.bridge.default_bridge": "true",
                "com.docker.network.bridge.enable_icc": "true",
                "com.docker.network.bridge.enable_ip_masquerade": "true",
                "com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",
                "com.docker.network.bridge.name": "docker0",
                "com.docker.network.driver.mtu": "1500"
            },
            "Labels": {}
        }
    ]

As you can see above, the bridge network is backed by the docker0 bridge (see com.docker.network.bridge.name), and a subnet and gateway were created for it automatically. A container started with a plain docker run is attached to this network automatically, and if any containers were already attached, the inspect command would have listed them under Containers. Last but not least, inter-container communication is enabled by default (enable_icc). We will make use of all this later in the article.
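
If you would rather pull these facts out programmatically than read them by eye, the inspect output is plain JSON. The following is a minimal Python sketch, assuming a trimmed copy of the output shown above is pasted into a string; the summarize function and the keys of the dictionary it returns are my own names and not part of Docker.

```python
import ipaddress
import json

# Trimmed copy of the `docker network inspect bridge` output shown above.
INSPECT_OUTPUT = """
[
  {
    "Name": "bridge",
    "Driver": "bridge",
    "IPAM": {
      "Config": [
        {"Subnet": "172.17.0.0/16", "Gateway": "172.17.0.1"}
      ]
    },
    "Containers": {},
    "Options": {
      "com.docker.network.bridge.enable_icc": "true",
      "com.docker.network.bridge.name": "docker0"
    }
  }
]
"""


def summarize(raw):
    """Hypothetical helper: extract the facts discussed in the text."""
    network = json.loads(raw)[0]  # inspect returns a list of networks
    ipam = network["IPAM"]["Config"][0]
    subnet = ipaddress.ip_network(ipam["Subnet"])
    gateway = ipaddress.ip_address(ipam["Gateway"])
    options = network["Options"]
    return {
        "bridge": options["com.docker.network.bridge.name"],
        "subnet": str(subnet),
        "gateway_in_subnet": gateway in subnet,
        # Host addresses, excluding the network and broadcast addresses.
        "host_addresses": subnet.num_addresses - 2,
        "icc_enabled": options["com.docker.network.bridge.enable_icc"] == "true",
        "attached_containers": len(network["Containers"]),
    }


summary = summarize(INSPECT_OUTPUT)
for key, value in summary.items():
    print(f"{key}: {value}")
```

Note that Docker stores the option values as the strings "true" and "false" rather than JSON booleans, which is why the sketch compares against "true".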

Host Networking Mode

Let’s inspect this networking mode and see what comes to the screen.

    root@mehreen-Inspiron-3542:/home/mehreen# docker network inspect host
    [
        {
            "Name": "host",
            "Id": "fbfb142290eeaf9f696467932b0f5d4e350dd3fd5fba22ad8dd495fde42bd9ea",
            "Created": "2018-10-13T14:11:27.536955704+05:00",
            "Scope": "local",
            "Driver": "host",
            "EnableIPv6": false,
            "IPAM": {
                "Driver": "default",
                "Options": null,
                "Config": []
            },
            "Internal": false,
            "Attachable": false,
            "Ingress": false,
            "ConfigFrom": {
                "Network": ""
            },
            "ConfigOnly": false,
            "Containers": {},
            "Options": {},
            "Labels": {}
        }
    ]

This mode lets the container share the network namespace of the host, which means it is directly exposed to the outside world. The container does not get its own IP address, and there is no port mapping: a service listening on a port inside the container is reachable on the same port of the host, because the network configuration inside the container is the same as outside it.

None Networking Mode

The following is the result of inspecting this network.

    root@mehreen-Inspiron-3542:/home/mehreen# docker network inspect none
    [
        {
            "Name": "none",
            "Id": "75201eb0dee7bdac624d20c4aab536b73f49c5a6b9230a97d3f5f5424622e4c4",
            "Created": "2018-10-13T14:11:27.390186279+05:00",
            "Scope": "local",
            "Driver": "null",
            "EnableIPv6": false,
            "IPAM": {
                "Driver": "default",
                "Options": null,
                "Config": []
            },
            "Internal": false,
            "Attachable": false,
            "Ingress": false,
            "ConfigFrom": {
                "Network": ""
            },
            "ConfigOnly": false,
            "Containers": {},
            "Options": {},
            "Labels": {}
        }
    ]

Wait, I don’t see much difference here from the last one we inspected. Does that mean the two are the same? No, not at all. The none networking mode gives the container its own network stack, but without an external network interface. It only has a local loopback interface.

But Where Are the Talking Containers?

Well, yes! We have seen the networks, but we haven’t added any container to a network yet, nor has there been any communication between containers. Now is the time to add some sweetness to our lives. Let’s create our very own network and see if we can get our containers to talk to each other.

We will create a single-host bridge network using the bridge driver. It’s simple to understand and easy to use and troubleshoot, which makes it a perfect choice for beginners.

Create the bridge network by executing the following command:

$ docker network create -d bridge testbridge

This will create a network named testbridge using the bridge driver. If you get a long string (the ID of the new network) in response, the command was successful. Let’s quickly check the network list to see if our network appears there.

We did it. Now if you inspect this network, it would be just like the bridge network we inspected before. Let’s attach some containers to our network.

$ docker run -dt --name cont_test1 --network testbridge alpine

We created a container named cont_test1 on the testbridge network we created earlier, using the alpine image. (There’s no specific reason for using alpine except that it’s lightweight. You can use any other image, such as ubuntu, and the command would work fine.) The response should again be a long string, this time the container ID. Let’s create another container just like this one.

$ docker run -dt --name cont_test2 --network testbridge alpine

Now we have two containers on the same bridge network. Do you feel like inspecting the network? I did and here’s what I found.

----------------------------------------------------------------------

    "Containers": {
        "88ae819d1549527e36b62f50f563c124aa3bc23ae141964201f59601203848f9": {
            "Name": "cont_test2",
            "EndpointID": "1bd9a68af20b3abf44f94dbd98a2c846bbeb02f016359209a373bf0c54501d69",
            "MacAddress": "02:42:ac:13:00:05",
            "IPv4Address": "172.19.0.5/16",
            "IPv6Address": ""
        },
        "a1a4f170a616a8c1fa3cac5f746f26470c778f807c30afe4a8e7d44ee702d7ca": {
            "Name": "cont_test1",
            "EndpointID": "7bb7dbc24b89291b41b31e7d5ffbf0e5c46358122744ad16043f99593da1d41e",
            "MacAddress": "02:42:ac:13:00:04",
            "IPv4Address": "172.19.0.4/16",
            "IPv6Address": ""
        },

-------------------------------------------------------------------------
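
Before trying a ping, we can sanity-check the excerpt above with a few lines of Python. This is just a sketch over the values copied from my output: it confirms that both addresses fall in the same subnet, and it tests my assumption that the MAC addresses here are simply the prefix 02:42 followed by the four IPv4 octets in hex. That pattern holds for this output, but treat it as an observation rather than a guarantee for every Docker version. The helper names subnet_of and mac_from_ip are my own.

```python
import ipaddress

# Values copied from the "Containers" section of the inspect output above.
containers = {
    "cont_test1": {"ip": "172.19.0.4/16", "mac": "02:42:ac:13:00:04"},
    "cont_test2": {"ip": "172.19.0.5/16", "mac": "02:42:ac:13:00:05"},
}


def subnet_of(cidr):
    # ip_interface keeps the host part; .network drops it.
    return ipaddress.ip_interface(cidr).network


def mac_from_ip(cidr):
    # Assumption: 02:42 prefix followed by the IPv4 octets in hex,
    # which matches the addresses in this particular output.
    octets = ipaddress.ip_interface(cidr).ip.packed
    return "02:42:" + ":".join(f"{octet:02x}" for octet in octets)


subnets = {name: subnet_of(c["ip"]) for name, c in containers.items()}
same_subnet = len(set(subnets.values())) == 1
print("same subnet:", same_subnet, subnets["cont_test1"])

for name, c in containers.items():
    print(name, "MAC matches IP:", mac_from_ip(c["ip"]) == c["mac"])
```

Both containers sit in 172.19.0.0/16, a different subnet from the 172.17.0.0/16 of the default bridge, which shows that the user-defined testbridge network got its own address range.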

Both of our containers have now been added to the network. But can they talk? Let’s get into one of our containers and try to ping the other one to see if it works.

$ docker exec -it cont_test1 sh

We are in our first container. Let’s ping the other one by specifying its name or IP.

    root@mehreen-Inspiron-3542:/home/mehreen# docker exec -it cont_test1 sh
    / # ping cont_test2

Boom! Our containers are talking to each other. But wait, how about we try pinging Google?

And it worked!

What’s Next?

Our containers can talk to each other as well as to the outside world. You can also use other commands such as docker stop and docker rm to stop and then remove the containers. As I mentioned several times, we have been talking about single-host networking. If we try talking to a container on another host, it’s not going to work. For that, multi-host networking is required, which also brings in the swarm concept. Seems like there’s a lot of learning on the way. I might cover that in a future article. Till then, feel free to explore it as you like.