Note: You can evaluate the AI machine learning solution discussed in this article by visiting: http://ec2-18-222-140-214.us-east-2.compute.amazonaws.com/

Introduction

In today’s world, the evolution of modern AI machine learning and data mining algorithms, along with the emergence of new data analysis tools, has sparked a steadily growing interest in high-quality financial market forecasting. Applications of deep learning technologies and tools have attracted close attention from both scientists and investors, since conventional methods of data analysis, such as exponential moving averages (EMA), oscillators, various probability-based approaches, and other indicators, are often considered less efficient because they fail to provide adequate and tangible forecasting results.

During the past few decades, researchers have made a large number of attempts to apply modern machine learning algorithms to financial market forecasting. According to recent research, using artificial neural networks to reveal stock market trends is one of the most popular and successful applications of the machine learning and data mining approach.

Artificial neural networks (ANN) are biologically inspired mathematical models, built on the principles of organization and functioning of biological neural networks, the networks of nerve cells found in humans and other living organisms. Unlike many other algorithms, these models learn from data and have a ‘talent’ for prediction. This makes them strong candidates for forecasting problems whose solution algorithm cannot be hardcoded and can only be obtained through a learning process. Artificial neural networks are especially useful whenever there are no linear dependencies between input and output data. For example, we can use a neural network to approximate the values of a certain “unknown” function by establishing the non-linear relations between input and output datasets solely through the learning process.

The entire prediction process in a modern neural network relies on two main steps:

- Building the associative memory (ASM) by performing supervised learning on datasets that consist of a large number of samples of historical data (cognitive function);

- Predicting new data values by computing the neural network’s output for input datasets that contain currently active data.

The associative memory (ASM), built during the learning phase, is the memory that stores the relations between input and output data. These relations between non-linear data define so-called “consistent patterns”, which are established from historical data and used to find new data whose features correspond to these patterns.


This cognitive feature of neural networks allows us to use them for various prediction purposes, especially for stock price forecasting, which is a special case of time-series prediction.

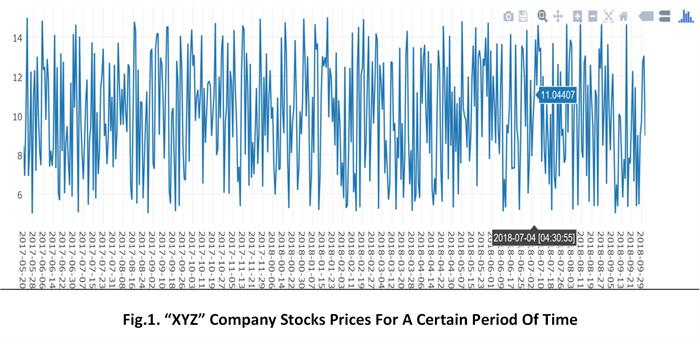

At this point, let’s spend a bit of time introducing the problem we’re about to solve using artificial neural networks. Suppose we have a set of chronological data on a certain company’s stock prices in the financial market over a certain period in the past. Each value in this set is the closing stock price on a certain date.

As we might already know, the data shown above has many characteristics that describe various stock market trends. In this chart, we can observe the stock price dynamically increasing or decreasing, as well as its average, and so on. Specifically, the plot shown above is the graph of an oscillating function whose values fluctuate between the minimum and maximum stock prices. The very fast dynamic changes in stock prices indicate that the trading process is typically unpredictable, which, in turn, makes detecting stock market trends more complicated.

Besides the minimum and maximum stock price values, there’s another important characteristic of the data shown in the chart above: the simple moving average (SMA).

Simple Moving Average (SMA) is a data analysis indicator that describes the general behavior of the stock market over a certain period of time. To reveal stock market trends during analysis, we typically combine the graph of stock prices with the graph of its moving average. The period over which a moving average is computed is typically called its length, or just the “time window”. A trader usually selects the size of the time window experimentally.

In this article, we will demonstrate how to create and deploy a model based on a recurrent neural network (RNN) that uses long short-term memory (LSTM) cells to predict future values of the simple moving average (SMA). For that purpose, we will use the TensorFlow.js framework and JavaScript to implement this AI machine learning model.

Background

What’s a Time Series…

A time series is a sequence of discrete data values ordered chronologically and spaced at equal intervals in time. Temperature readings over a certain period of time and the daily closing value of the Dow Jones Index are among the most common examples of time series. Time series are used in statistics, signal processing, pattern recognition, econometrics, finance, etc. There’s an entire class of time series analysis methods used to reveal various characteristics of the data, such as meaningful statistics and trends.

Time series forecasting is one of the known methods of time series analysis. It allows us to predict future values based on historical data. Time series prediction is a complex problem since, in most cases, a time series is a set of values of a certain non-linear oscillating function. The listing below shows a trivial example of a time series (sample number, timestamp, price):

```
1,2018-06-21 [19:07:23],6.931127591962067
2,2018-06-22 [11:56:48],5.755516792667871
3,2018-06-23 [10:09:23],5.004250054228651
4,2018-06-24 [14:58:36],12.870827986559083
5,2018-06-25 [01:53:33],13.568728613634492
6,2018-06-26 [17:14:27],13.13768657779183
7,2018-06-27 [15:33:10],10.929333082544815
8,2018-06-28 [02:11:21],13.601266957377506
9,2018-06-29 [22:42:30],5.551803079014633
10,2018-06-30 [03:43:19],5.402588076182846
11,2018-06-31 [05:44:43],14.05994188950518
12,2018-07-01 [09:31:06],5.724896736539122
13,2018-07-02 [13:07:36],6.580816999552471
14,2018-07-03 [06:34:19],6.417378505204129
15,2018-07-04 [10:57:32],12.317070569202714
16,2018-07-05 [00:35:59],13.980893123267668
17,2018-07-06 [11:16:36],12.97290427566331
18,2018-07-07 [15:57:05],14.85185776343266
19,2018-07-08 [20:13:38],9.113754417727836
20,2018-07-09 [01:59:52],12.103680537949657
```

Typically, these values appear unrelated and are very hard to compute or predict due to their non-linearity (i.e., there’s normally no function that directly computes the successive values of a time series). That’s why the known time series forecasting methods are mainly based on regression analysis. In practice, we typically use regression-based methods, such as artificial neural networks (ANN), to predict future values of a time series when there’s no explicit (e.g., linear) dependency between its historical data and the future values to be predicted.

In this article, we will discuss how to use an artificial neural network (ANN) to solve the problem of time series prediction. As an example, we will build and deploy an ANN to predict the values of the simple moving average (SMA), discussed below.

Simple Moving Average

In physics and financial statistics, the simple moving average (SMA) is an algorithm that computes the average value of each subset of the entire data set. Each of these fixed-size subsets is also called a “time window”. The simple moving average is primarily used as a data analysis indicator that filters out most short-term fluctuations by smoothing an oscillating function. This, in turn, allows us to reveal long-term trends or cycles. The simple moving average is a type of low-pass finite impulse response (FIR) filter. In fact, SMA is a particular case of convolution, commonly used in signal processing.
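Since SMA is a particular case of convolution, it can be sketched as convolving the price series with a uniform (“boxcar”) kernel of M weights, each equal to 1/M. The helper names below are hypothetical, and the sketch computes only the “valid” part of the convolution, i.e. the windows that fit entirely inside the series. The sample prices are the first eleven values of the time series listed above.

```javascript
// Builds a uniform ("boxcar") kernel of length m, each weight equal to 1/m.
function boxcarKernel(m) {
  return new Array(m).fill(1 / m);
}

// "Valid" discrete convolution: one output per position where the kernel fits entirely.
// The kernel is flipped as convolution requires (a no-op for a symmetric boxcar).
function convolveValid(signal, kernel) {
  const out = [];
  for (let i = 0; i + kernel.length <= signal.length; i++) {
    let acc = 0;
    for (let k = 0; k < kernel.length; k++) {
      acc += signal[i + k] * kernel[kernel.length - 1 - k];
    }
    out.push(acc);
  }
  return out;
}

// Hypothetical helper: the SMA of a series is its convolution with a boxcar kernel.
function smaByConvolution(values, m) {
  return convolveValid(values, boxcarKernel(m));
}

const prices = [6.931127591962067, 5.755516792667871, 5.004250054228651,
  12.870827986559083, 13.568728613634492, 13.13768657779183, 10.929333082544815,
  13.601266957377506, 5.551803079014633, 5.402588076182846, 14.05994188950518];
const sma = smaByConvolution(prices, 10);
```

With a window of 10, the eleven prices yield two averages, approximately 9.2753 and 9.9882, which match the first two SMA targets in the training sample listing later in this article.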

The simple moving average algorithm is formulated as follows. Suppose we’re given a series of N values and a fixed time-window size M. The first SMA value is computed as the average of the M values belonging to the first time window. Then we proceed by shifting the time window forward by a single value (i.e., one ‘step’) and computing the average of the values within the next time window to obtain the second SMA value, and so on. At the end of the computation, we obtain an array of N − M + 1 SMA values, one for each time window.
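The shifting window described above doesn’t require re-summing all M values at every step: when the window moves forward by one value, exactly one value leaves and one enters, so a running sum can be updated in constant time. The sketch below assumes a plain array of numbers (rather than the price objects used later in the article), and `slidingSMA` is a hypothetical name.

```javascript
// Computes N - M + 1 simple moving averages with a running window sum, in O(N) time.
function slidingSMA(values, m) {
  if (m <= 0 || m > values.length) return [];
  let windowSum = 0;
  // Sum of the first time window
  for (let i = 0; i < m; i++) windowSum += values[i];
  const result = [windowSum / m];
  // Shift the window by one step: add the entering value, drop the leaving one
  for (let i = m; i < values.length; i++) {
    windowSum += values[i] - values[i - m];
    result.push(windowSum / m);
  }
  return result;
}

const series = [1, 2, 3, 4, 5, 6];
const averages = slidingSMA(series, 3);
// averages → [2, 3, 4, 5]
```

For very long series, floating-point rounding can accumulate in the running sum; recomputing the sum from scratch every so often is a simple safeguard.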

Normally, we use the following formula to compute the simple moving average (SMA) at moment t, where n is the time-window size (M above) and $P_t$ is the price at moment t:

$\begin{aligned} SMA(t)=\frac{1}{n}\sum_{i=0}^{n-1}P_{t-i}\end{aligned}$
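As a worked example, applying the formula with n = 10 to the first ten prices of the sample time series listed earlier (indexed by sample number and rounded to four decimal places) gives the first SMA value. Subtracting the sum for SMA(t − 1) from the sum for SMA(t) also shows that consecutive values differ only by the price entering the window and the price leaving it, $SMA(t) = SMA(t-1) + (P_t - P_{t-n})/n$, which the second line applies:

$$
\begin{aligned}
SMA(10) &= \frac{1}{10}\sum_{i=0}^{9}P_{10-i} = \frac{6.9311 + 5.7555 + 5.0043 + 12.8708 + 13.5687 + 13.1377 + 10.9293 + 13.6013 + 5.5518 + 5.4026}{10} = \frac{92.7531}{10} \approx 9.2753 \\
SMA(11) &= SMA(10) + \frac{P_{11} - P_{1}}{10} = 9.2753 + \frac{14.0599 - 6.9311}{10} \approx 9.9882
\end{aligned}
$$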

The entire process of SMA computation for the values of an oscillating function is shown below:

The results of the simple moving average (SMA) computation are shown in the chart below:

Before creating and training a neural network to predict future SMA values, we need to generate a dataset and train our neural network on it. The code snippet that generates the training samples is listed below:

```javascript
// Splits a price series into sliding windows of window_size samples and pairs
// each window (the network inputs) with its simple moving average (the target).
function ComputeSMA(time_s, window_size)
{
    var r_avgs = [];
    for (let i = 0; i <= time_s.length - window_size; i++)
    {
        let curr_avg = 0.0;
        // Average of the prices inside the window [i, i + window_size)
        for (let k = i; k < i + window_size; k++)
            curr_avg += time_s[k]['price'] / window_size;
        r_avgs.push({ set: time_s.slice(i, i + window_size), avg: curr_avg });
    }
    return r_avgs;
}

// Left-pads a number to two digits, e.g. 7 -> "07"
function pad2(n) { return String(n).padStart(2, "0"); }

// Generates `size` synthetic daily closing prices, one per day, ending yesterday.
function GenerateDataset(size)
{
    var dataset = [];
    const day_ms = 24 * 60 * 60 * 1000;      // one day in milliseconds
    var start = new Date();
    start.setDate(start.getDate() - size);   // first sample: `size` days ago
    let curr_time = start.getTime();
    for (let i = 0; i < size; i++, curr_time += day_ms) {
        var curr_dt = new Date(curr_time);
        // Random time of day, used only for the displayed timestamp
        var hours = Math.floor(Math.random() * 24);
        var minutes = Math.floor(Math.random() * 60);
        var seconds = Math.floor(Math.random() * 60);
        dataset.push({
            id: i + 1,
            // Random price in the range [5, 15)
            price: (Math.floor(Math.random() * 10) + 5) + Math.random(),
            // getMonth() is zero-based, so 1 is added to get the calendar month
            timestamp: curr_dt.getFullYear() + "-" + pad2(curr_dt.getMonth() + 1) + "-" +
                pad2(curr_dt.getDate()) + " [" + pad2(hours) + ":" + pad2(minutes) + ":" +
                pad2(seconds) + "]"
        });
    }
    return dataset;
}
```

In this code, we first generate a dataset containing a time series whose values are chronologically ordered. In this particular case, the time series is a set of the "XYZ" company's closing stock prices up to a certain date. To generate the time series dataset, we use the GenerateDataset(...) function. After that, we invoke the ComputeSMA(...) function to compute the SMA values and generate training samples for our neural network. Finally, we obtain training samples stored in the following format:

```
1 [ 6.9311,5.7555,5.0043,12.8708,13.5687,13.1377,10.9293,13.6013,5.5518,5.4026 ] 9.27531288119638
2 [ 5.7555,5.0043,12.8708,13.5687,13.1377,10.9293,13.6013,5.5518,5.4026,14.0599 ] 9.988194310950692
3 [ 5.0043,12.8708,13.5687,13.1377,10.9293,13.6013,5.5518,5.4026,14.0599,5.7249 ] 9.985132305337817
4 [ 12.8708,13.5687,13.1377,10.9293,13.6013,5.5518,5.4026,14.0599,5.7249,6.5808 ] 10.142788999870198
5 [ 13.5687,13.1377,10.9293,13.6013,5.5518,5.4026,14.0599,5.7249,6.5808,6.4174 ] 9.497444051734701
6 [ 13.1377,10.9293,13.6013,5.5518,5.4026,14.0599,5.7249,6.5808,6.4174,12.3171 ] 9.372278247291523
7 [ 10.9293,13.6013,5.5518,5.4026,14.0599,5.7249,6.5808,6.4174,12.3171,13.9809 ] 9.456598901839108
8 [ 13.6013,5.5518,5.4026,14.0599,5.7249,6.5808,6.4174,12.3171,13.9809,12.9729 ] 9.660956021150958
9 [ 5.5518,5.4026,14.0599,5.7249,6.5808,6.4174,12.3171,13.9809,12.9729,14.8519 ] 9.786015101756474
10 [ 5.4026,14.0599,5.7249,6.5808,6.4174,12.3171,13.9809,12.9729,14.8519,9.1138 ] 10.142210235627793
```

The values in brackets are the stock prices within a single time window (on the left), used as the neural network’s inputs; the single value on the right is the computed SMA value that we use as the target output during training. This data is illustrated in the graph plot shown below:
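The tensors built later in the article are created from two plain arrays, `inputs` and `outputs`. A minimal sketch of how the samples returned by ComputeSMA(...) could be split into those arrays, assuming each sample has the `{ set, avg }` shape produced by the code above and each element of `set` carries a `price` field; the function name `SplitSamples` is hypothetical.

```javascript
// Hypothetical helper: converts ComputeSMA(...) samples into network inputs and targets.
// inputs[j]  - the prices of sample j's time window (one row of the input tensor)
// outputs[j] - the SMA value of sample j (the training target)
function SplitSamples(samples) {
  const inputs = samples.map(sample => sample.set.map(item => item.price));
  const outputs = samples.map(sample => sample.avg);
  return { inputs, outputs };
}

// Two tiny illustrative samples with a window of 2 prices each
const demo = [
  { set: [{ price: 1 }, { price: 3 }], avg: 2 },
  { set: [{ price: 3 }, { price: 5 }], avg: 4 },
];
const { inputs, outputs } = SplitSamples(demo);
// inputs → [[1, 3], [3, 5]], outputs → [2, 4]
```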

Building A Recurrent Neural Network (RNN) For Time-Series Prediction

In this section, we will discuss the most common scenario for creating a neural network used for time-series prediction. Specifically, we will create a neural network consisting of layers of various types, such as dense layers and an RNN layer with LSTM cells:

As we can see from the figure above, this neural network consists of an input dense layer, a reshape layer, an RNN layer and, finally, an output dense layer, connected in sequence.
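To make the data flow concrete, the sketch below traces the output shape of each of the four layers, using the sizes chosen later in this article (100 input neurons, 10 features per RNN time step, 20 LSTM units and 1 output) and a time window of 10 values, as in the sample listing above. It computes shapes only, not values; `traceShapes` is a hypothetical name, and `null` stands for the samples (batch) dimension, as in TensorFlow.js shape reports.

```javascript
// Hypothetical helper: traces per-layer output shapes; null marks the samples (batch) dimension.
function traceShapes(windowSize, inputNeurons, rnnFeatures, lstmUnits, outputs) {
  if (inputNeurons % rnnFeatures !== 0) {
    throw new Error("inputNeurons must be divisible by rnnFeatures");
  }
  const timeSteps = inputNeurons / rnnFeatures;
  return [
    ["input", [null, windowSize]],                // one time window per sample
    ["dense (input)", [null, inputNeurons]],      // one output per neuron
    ["reshape", [null, timeSteps, rnnFeatures]],  // same values, 3-D layout
    ["rnn (LSTM)", [null, lstmUnits]],            // last time step only (returnSequences: false)
    ["dense (output)", [null, outputs]],          // one SMA value per sample
  ];
}

const shapes = traceShapes(10, 100, 10, 20, 1);
for (const [name, shape] of shapes) {
  console.log(name, JSON.stringify(shape));
}
```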

Input Dense Layer

According to the deployment scenario shown in the figure above, the input dense layer is the first layer of the neural network being created. Given the structure of the dataset passed to the network’s input during training, we use a dense layer as the first layer because each batch of input samples is a two-dimensional array, [samples, window_size], in which every row holds the values within a single time window (the corresponding SMA values serve as the targets). A dense layer is trained using the backpropagation procedure together with gradient descent or another optimization method; the RNN layer is trained the same way, except that its gradients are propagated back through time. A dense layer consists of neurons whose outputs are computed using an activation function, such as a sigmoid or hyperbolic tangent function. Each neuron in a dense layer has multiple inputs and only one output, so the number of outputs of a dense layer equals its number of neurons. During training, the output values of the first dense layer are computed and passed, through the reshape layer, to the RNN layer.
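A dense layer’s forward pass can be sketched in plain JavaScript as y = activation(W·x + b): each neuron computes a weighted sum of all inputs plus its bias and applies the activation function. The weights and inputs below are made-up illustrative numbers, and `denseForward` is a hypothetical name, not a TensorFlow.js API.

```javascript
// Forward pass of a dense (fully connected) layer for a single sample.
// weights[j][i] connects input i to neuron j; biases[j] is neuron j's bias.
function denseForward(x, weights, biases, activation = Math.tanh) {
  return weights.map((row, j) => {
    let sum = biases[j];
    for (let i = 0; i < x.length; i++) sum += row[i] * x[i];
    return activation(sum);
  });
}

// Two inputs, three neurons -> three outputs (one per neuron)
const x = [0.5, -1.0];
const W = [[1, 0], [0, 1], [1, 1]];
const b = [0, 0, 0.5];
const y = denseForward(x, W, b);                 // tanh activation
const yLinear = denseForward(x, W, b, v => v);   // no activation (linear)
// yLinear → [0.5, -1, 0]
```

The linear variant corresponds to a TensorFlow.js dense layer created without an `activation` option, which is how the dense layers in this article’s code are configured.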

Reshape Layer

The reshape layer performs no arithmetic computations. Instead, it transforms the output of the input dense layer into the three-dimensional array expected by the succeeding RNN layer. Specifically, in this case, the reshape layer redistributes each sample’s one-dimensional dense layer output between the time steps and features of the RNN layer’s input.
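Because the reshape layer changes only the layout, not the values, its effect on one sample can be sketched as splitting a flat array into consecutive rows in row-major order, which is how TensorFlow.js lays out tensor data. The function name `reshapeToSteps` is hypothetical.

```javascript
// Splits a flat array of length timeSteps * features into timeSteps rows
// of `features` values each, in row-major order (no values are changed).
function reshapeToSteps(flat, timeSteps, features) {
  if (flat.length !== timeSteps * features) {
    throw new Error(`cannot reshape ${flat.length} values into [${timeSteps}, ${features}]`);
  }
  const rows = [];
  for (let t = 0; t < timeSteps; t++) {
    rows.push(flat.slice(t * features, (t + 1) * features));
  }
  return rows;
}

// 100 dense-layer outputs (here simply 0..99) become 10 time steps of 10 features
const denseOutput = Array.from({ length: 100 }, (_, i) => i);
const steps = reshapeToSteps(denseOutput, 10, 10);
// steps[1] → [10, 11, 12, 13, 14, 15, 16, 17, 18, 19]
```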

Recurrent Neural Network Layer

Most of the computations performed by the network happen in the RNN layer. The RNN layer is a recurrent neural network consisting of several layers of LSTM cells. A recurrent neural network (RNN) computes its outputs differently from feed-forward networks: the output (hidden state) of each recurrent unit at one time step is fed back as an input at the next time step. This allows the network to capture dependencies along the sequence, which can reduce the number of layers needed to obtain meaningful predictions and speed up training by reducing the number of epochs required. As a solution for time-series prediction, we’ve built an RNN with multiple stacked layers of LSTM cells.
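The recurrence can be illustrated with a single LSTM unit processing a scalar input sequence: at every time step, the previous hidden state h and cell state c are fed back together with the new input, and the input, forget and output gates decide what the cell adds, keeps and exposes. This is a minimal sketch with one unit and hand-picked weights (the `weights` object and the function names are hypothetical); TensorFlow.js’s lstmCell uses the same gate structure with weight matrices and many units. The input values are roughly the first four sample prices divided by 10.

```javascript
const sigmoid = z => 1 / (1 + Math.exp(-z));

// One time step of a single-unit LSTM cell with scalar input x.
// w holds input weights (w*), recurrent weights (u*) and biases (b*) for each gate.
function lstmStep(x, state, w) {
  const i = sigmoid(w.wi * x + w.ui * state.h + w.bi);   // input gate
  const f = sigmoid(w.wf * x + w.uf * state.h + w.bf);   // forget gate
  const o = sigmoid(w.wo * x + w.uo * state.h + w.bo);   // output gate
  const g = Math.tanh(w.wg * x + w.ug * state.h + w.bg); // candidate value
  const c = f * state.c + i * g;                          // new cell state
  const h = o * Math.tanh(c);                             // new hidden state (output)
  return { h, c };
}

// Runs the cell over a whole sequence, feeding each step's state into the next.
function runLSTM(sequence, w) {
  let state = { h: 0, c: 0 };
  for (const x of sequence) state = lstmStep(x, state, w);
  return state.h; // output after the last time step
}

// Hypothetical, hand-picked weights for illustration only
const weights = { wi: 0.5, ui: 0.1, bi: 0, wf: 0.3, uf: 0.2, bf: 1,
                  wo: 0.4, uo: 0.1, bo: 0, wg: 0.8, ug: 0.3, bg: 0 };
const hLast = runLSTM([0.69, 0.58, 0.50, 1.29], weights);
```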

Output Dense Layer

Similar to the input layer, we use a dense layer as the final output layer of the network. Given the structure of the data passed to the network, each training sample produces only one SMA value as the network’s output. That’s why we create an output dense layer consisting of only one neuron, with multiple inputs and a single output, which is the output of the entire network.

Using TensorFlow.js To Deploy The Recurrent Neural Network With LSTM Cells

Creating A Model

According to the TensorFlow.js framework concepts, in most cases we start building a neural network by defining a model and instantiating its object. A model is a collection of layers, either arbitrarily connected or stacked. A model can be trained and then used for prediction. TensorFlow.js supports two types of models: the “regular” model and the “sequential” model.

A “regular” model (created with tf.model(…)) has a graph-based structure and can be used to build various configurations in which the network’s layers are interconnected arbitrarily, providing more control over how data flows through the model. Regular models are commonly used whenever we need non-sequential topologies, such as layers with multiple inputs or outputs.

“Sequential” models have a single, linear structure: each layer is stacked on top of the previous one, and the inputs of each new layer are connected to the outputs of the previous layer. To train a sequential model and compute its outputs during prediction, we use the model object’s methods, such as model.fit(…) and model.predict(…). These methods are discussed in detail in the following sections of this article.

In TensorFlow.js, models are created with the following factory functions before the neural network’s layers are configured:

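```javascript
// "Regular" (functional) model; input_tensor/output_tensor come from tf.input(...) and layer.apply(...)
const functional_model = tf.model({inputs: input_tensor, outputs: output_tensor});
// "Sequential" model, to which layers are appended with model.add(...)
const model = tf.sequential();
```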

To create a model implementing a neural network for time series prediction, such as the financial market forecasting discussed in this article, we will use a sequential model. In this case, it simplifies model training and computation, since we can rely on the built-in training and prediction mechanisms rather than implementing our own.

At the very beginning of the code introduced below, we define an empty sequential model and then use the model.add(…) method to append new layers to it, according to its structure and configuration.

Using Tensors

Tensors are objects used to hold the data passed to the input or output of the model being trained. TensorFlow.js provides factory functions, such as tf.tensor1d, tf.tensor2d, tf.tensor3d and tf.tensor4d, for storing one-, two-, three- and four-dimensional arrays of data. Tensors can also be reshaped, increasing or reducing the number of dimensions. For example, data stored in a two-dimensional tensor can be converted into a one-dimensional tensor using the tensor’s reshape(…) or flatten() methods.

Specifically, we use the following tensors to store the input and output data for the model being created:

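```javascript
// Inputs: [samples, features], normalized by dividing by 10
const xs = tf.tensor2d(inputs, [inputs.length, inputs[0].length]).div(tf.scalar(10));
// Targets: flat array of SMA values reshaped to [samples, 1], normalized the same way
const ys = tf.tensor1d(outputs).reshape([outputs.length, 1]).div(tf.scalar(10));
```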

The first tensor, xs, constructed by invoking tf.tensor2d, is a two-dimensional tensor with shape [samples, features]. The first dimension equals the number of samples, and the second equals the number of features (i.e., values) in each sample.

In turn, the second tensor, ys, stores a one-dimensional flat array reshaped into two dimensions by invoking the reshape(…) method. The shape of this tensor is [outputs, 1], where outputs is the number of SMA values passed as target outputs of the model being trained.

Data Normalization

As we can see from the code listed in the previous section, each value stored in these tensors is divided by the scalar value 10. This is done to normalize the input and output data. In this case, we’re performing a trivial normalization that brings the values to the order of 1: since the generated prices lie in the range [5, 15), the normalized values lie in [0.5, 1.5) rather than strictly in [0; 1]. The predictions are multiplied by 10 afterwards to restore the original scale.
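Dividing by 10 is a fixed scaling. If values strictly within [0, 1] are required, a common alternative, which is not what this article’s code does, is min-max normalization: the smallest value maps to 0 and the largest to 1, and model outputs are mapped back with the inverse transform, just as the article multiplies its predictions by 10. The function names below are hypothetical.

```javascript
// Min-max normalization: maps values linearly onto [0, 1].
// Returns the scaled values plus the min/max needed to invert the mapping.
function minMaxNormalize(values) {
  const min = Math.min(...values);
  const max = Math.max(...values);
  const range = max - min || 1; // avoid division by zero for a constant series
  return { scaled: values.map(v => (v - min) / range), min, max };
}

// Inverse transform: maps normalized model outputs back to the original scale.
function minMaxDenormalize(scaled, min, max) {
  const range = max - min || 1;
  return scaled.map(s => s * range + min);
}

const prices = [5.0, 7.5, 15.0];
const { scaled, min, max } = minMaxNormalize(prices);
// scaled → [0, 0.25, 1]
const restored = minMaxDenormalize(scaled, min, max);
```

The min and max are best taken from the training data only and reused unchanged for prediction inputs; otherwise training and prediction would use different scales.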

Interconnecting Neural Layers

In this section, we will demonstrate how to deploy a model based on the neural network discussed in the previous section, consisting of layers of various types: a recurrent neural network (RNN) layer with long short-term memory (LSTM) cells, which works on three-dimensional data, and input and output dense layers, which work on two-dimensional data.

Creating Input Layer

Given the structure of the input data, we use one dense layer whose input is a two-dimensional tensor, [samples, window_size], as the input layer of the network (the inputShape option omits the samples dimension):

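```javascript
// Each sample is a window of window_size prices
const input_layer_shape = window_size;
// Number of neurons (and therefore outputs) in the input dense layer
const input_layer_neurons = 100;
model.add(tf.layers.dense({units: input_layer_neurons, inputShape: [input_layer_shape]}));
```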

The input_layer_shape parameter defines the input shape of the first dense layer, which equals the size of the time window, window_size, in each sample. The input_layer_neurons parameter defines the number of neurons in the input layer, which exactly matches the number of this layer’s outputs. The output values of the neurons in the first dense layer are then redistributed between specific inputs of the next layer, discussed below.

Deploying RNN Layer

The recurrent neural network (RNN) is the next layer of the model being created. To improve the quality of prediction, as already discussed, we use an RNN consisting of multiple long short-term memory (LSTM) cells. According to the RNN architecture, its input is a three-dimensional tensor with shape [samples, time steps, features]. The first dimension is the number of samples (i.e., sets of data) passed from the output of the input dense layer to the RNN in the next layer of the model. The second dimension is the number of time steps, i.e., the number of times the recurrent cells are applied, one step after another, to each sample’s sequence. Finally, the third dimension is the number of features (i.e., values) at each time step.

As we’ve already discussed, for each sample the output of the input dense layer is a one-dimensional array of values. To pass these values to the RNN’s inputs, we need to transform this data into the three-dimensional tensor mentioned in the previous paragraph. To do this, we use a so-called “reshape layer”, which performs no computation:

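```javascript
// Number of features per RNN time step (chosen experimentally)
const rnn_input_layer_features = 10;
// 100 dense outputs / 10 features = 10 time steps
const rnn_input_layer_timesteps = input_layer_neurons / rnn_input_layer_features;
// Target shape per sample; the samples dimension is implicit
const rnn_input_shape = [rnn_input_layer_timesteps, rnn_input_layer_features];
model.add(tf.layers.reshape({targetShape: rnn_input_shape}));
```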

rnn_input_shape is the target shape for transforming the dense layer’s output data, computed as shown in the code above. The number of neurons (i.e., outputs) in the input dense layer is divided by the number of features per time step to obtain the number of RNN time steps. The number of features is chosen experimentally and equals 10. Since the reshape layer’s targetShape excludes the samples dimension, each sample’s 100 dense outputs become a [10, 10] array, and the RNN receives a tensor of shape [samples, 10, 10].

Now that we’ve computed the target shape for the RNN input, we append the reshape layer to the model being constructed. During both training and prediction, this layer transforms the data passed from the outputs of the input dense layer to the inputs of the RNN.

The next step is to configure the RNN that performs the actual learning and computes the predicted results. Specifically, we must create the LSTM cells for the RNN. To do this, we execute the following code:

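```javascript
// Number of units in each LSTM cell (chosen experimentally)
const rnn_output_neurons = 20;
// One LSTM cell per stacked RNN layer; n_layers is the number of stacked layers
var lstm_cells = [];
for (let index = 0; index < n_layers; index++) {
    lstm_cells.push(tf.layers.lstmCell({units: rnn_output_neurons}));
}
```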

As we can see from the code above, we execute a loop, during each iteration of which we instantiate an lstmCell object and add it to the lstm_cells array. Each lstmCell object is configured with rnn_output_neurons via its units option, which sets the number of units (neurons) in each LSTM cell. In this case, we use the same rnn_output_neurons value in each layer, chosen experimentally as 20.

Finally, we must append the RNN layer to the model being created:

```javascript
// rnn_input_shape ([time steps, features]) was declared together with the reshape layer
// An array of cells is stacked into a multi-layer LSTM
model.add(tf.layers.rnn({cell: lstm_cells,
    inputShape: rnn_input_shape, returnSequences: false}));
```

The RNN layer accepts the following configuration options. The first option, cell, is the array of LSTM cells, which are stacked on top of each other. The second option, inputShape, is the RNN’s input shape discussed previously. The last option, returnSequences, specifies whether the RNN should output the full sequence of outputs as a three-dimensional tensor. In this particular case, since we pass the RNN’s outputs to the output dense layer, we set this option to false, so that our RNN returns a two-dimensional tensor containing only the outputs of the last time step.
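The difference between `returnSequences: false` and `true` can be sketched with a generic recurrent loop: the layer keeps either only the state after the last time step, giving [samples, units], or the state after every step, giving [samples, time steps, units]. The toy cell h' = tanh(x + 0.5·h) and the function name are assumptions made only to keep the example short.

```javascript
// Runs a recurrent cell over one sample's sequence of time steps.
// With returnSequences = false only the final state is returned;
// with returnSequences = true the state after every time step is returned.
function runRecurrent(sequence, cell, initialState, returnSequences) {
  let h = initialState;
  const all = [];
  for (const x of sequence) {
    h = cell(x, h);
    all.push(h);
  }
  return returnSequences ? all : h;
}

// Toy single-unit cell (assumption): h' = tanh(x + 0.5 * h)
const toyCell = (x, h) => Math.tanh(x + 0.5 * h);
const seq = [0.1, 0.2, 0.3];

const last = runRecurrent(seq, toyCell, 0, false);     // one value per sample
const everyStep = runRecurrent(seq, toyCell, 0, true); // one value per time step
```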

Output Layer

Similar to the input layer, and according to the structure of the model being constructed, we use another dense layer, responsible for computing the model’s outputs during both training and prediction. The input of this layer is a two-dimensional tensor of values obtained as the output of the RNN:

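```javascript
// The output layer receives the rnn_output_neurons values produced by the RNN
const output_layer_shape = rnn_output_neurons;
// A single neuron: one predicted SMA value per sample
const output_layer_neurons = 1;
model.add(tf.layers.dense({units: output_layer_neurons, inputShape: [output_layer_shape]}));
```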

In this case, the input of the output dense layer is a two-dimensional tensor whose shape matches the RNN’s output shape, [samples, rnn_output_neurons]. The output_layer_shape parameter defines the number of inputs of the output dense layer. In turn, output_layer_neurons defines both the number of neurons in the output dense layer and the number of outputs of the entire model. Since the problem is predicting simple moving average values, the number of model outputs is 1: we’re interested in a single value at the end of both the training and prediction phases.

Compiling Model

Now that we’ve created a model that can be used to predict time series, it’s time to discuss how to compile it, preparing it for the learning phase. This is done by invoking the model.compile(…) method:

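```javascript
// Adam optimizer; learning_rate is a training parameter
const opt_adam = tf.train.adam(learning_rate);
// Mean squared error is the loss to minimize
model.compile({ optimizer: opt_adam, loss: 'meanSquaredError'});
```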

The model.compile(…) method accepts a configuration object with two settings. The first, optimizer, is the optimization algorithm used to update the model’s weights, constructed here with a learning rate parameter. In this particular case, to obtain reliable SMA predictions and, at the same time, speed up learning, we use the Adam optimizer. Adam updates each trainable variable (weight) according to the following formulas:

$\begin{aligned} m_0 := 0 \quad \text{(initialize the 1st moment vector)} \end{aligned}$

$\begin{aligned} v_0 := 0 \quad \text{(initialize the 2nd moment vector)} \end{aligned}$

$\begin{aligned} t := 0 \quad \text{(initialize the timestep)} \end{aligned}$

For a variable with gradient g, each update step performs:

$\begin{aligned} t := t + 1 \end{aligned}$

$\begin{aligned} lr_t := \text{learning\_rate} \cdot \sqrt{1 - \beta_2^t} / (1 - \beta_1^t) \end{aligned}$

$\begin{aligned} m_t := \beta_1 \cdot m_{t-1} + (1 - \beta_1) \cdot g \end{aligned}$

$\begin{aligned} v_t := \beta_2 \cdot v_{t-1} + (1 - \beta_2) \cdot g \cdot g \end{aligned}$

$\begin{aligned} \text{variable} := \text{variable} - lr_t \cdot m_t / (\sqrt{v_t} + \epsilon) \end{aligned}$
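The update rule above translates almost line by line into code. A minimal sketch for a single scalar variable, minimizing the made-up function f(x) = (x − 3)², whose gradient is 2(x − 3). The names are hypothetical; the defaults β1 = 0.9, β2 = 0.999 and ε = 1e-8 are the values recommended by Kingma and Ba, and the learning rate of 0.1 is an assumption chosen only so the demo converges quickly. This is an illustration, not TensorFlow.js internals.

```javascript
// One Adam update of a scalar variable, following the update rule above.
// state holds the variable value, the moment estimates m and v, and the timestep t.
function adamStep(state, g, learning_rate, beta1 = 0.9, beta2 = 0.999, epsilon = 1e-8) {
  state.t += 1;
  const lr_t = learning_rate * Math.sqrt(1 - Math.pow(beta2, state.t)) /
               (1 - Math.pow(beta1, state.t));
  state.m = beta1 * state.m + (1 - beta1) * g;      // 1st moment (mean of gradients)
  state.v = beta2 * state.v + (1 - beta2) * g * g;  // 2nd moment (mean of squared gradients)
  state.value -= lr_t * state.m / (Math.sqrt(state.v) + epsilon);
}

// Minimizes f(x) = (x - 3)^2 starting from x = 0, with m_0 = v_0 = 0 and t = 0.
function minimizeWithAdam(steps, learning_rate = 0.1) {
  const state = { value: 0, m: 0, v: 0, t: 0 };
  for (let i = 0; i < steps; i++) {
    adamStep(state, 2 * (state.value - 3), learning_rate);
  }
  return state.value;
}

const afterOneStep = minimizeWithAdam(1);      // about 0.1, i.e. roughly one learning rate
const afterManySteps = minimizeWithAdam(1000); // close to the minimum at x = 3
```

The first step moves x by almost exactly the learning rate regardless of the gradient’s magnitude, which illustrates why Adam’s step size is governed mainly by the learning rate rather than by the raw gradient.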

The default value of 1e-8 for epsilon might not be a good default in general. For example, when training an Inception network on ImageNet a current good choice is 1.0 or 0.1. Note that since AdamOptimizer uses the formulation just before Section 2.1 of the Kingma and Ba paper rather than the formulation in Algorithm 1, the "epsilon" referred to here is "epsilon hat" in the paper.

The sparse implementation of this algorithm in TensorFlow (used when the gradient is an IndexedSlices object, typically because of tf.gather or an embedding lookup in the forward pass) does apply momentum to variable slices even if they were not used in the forward pass (meaning they have a gradient equal to zero). Momentum decay (beta1) is also applied to the entire momentum accumulator. This means that the sparse behavior is equivalent to the dense behavior (in contrast to some momentum implementations, which ignore momentum unless a variable slice was actually used).

The loss function is the second setting passed to model.compile(…). It determines how the prediction error is computed during training. By the nature of artificial neural network (ANN) training, this error value (i.e., the ‘loss’) is minimized during training.

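In this particular case, we specify the mean squared error ('meanSquaredError') as the loss. Its square root, the root-mean-squared error (RMSE), is expressed in the same units as the data and is often reported instead; minimizing one also minimizes the other, since the square root is monotonically increasing. The RMSE value can be computed using the following formula: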

$\begin{aligned} RMSE=\sqrt{\frac{\sum_{i=1}^{n}(X_{obs,i}-X_{model,i})^2}{n}} \end{aligned}$
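TensorFlow.js computes the 'meanSquaredError' loss internally; the plain JavaScript functions below are only an illustrative sketch of the two formulas, with hypothetical names, applied to small made-up numbers.

```javascript
// Mean squared error between observed values and model outputs.
function meanSquaredError(observed, predicted) {
  if (observed.length !== predicted.length || observed.length === 0) {
    throw new Error("arrays must be non-empty and of equal length");
  }
  let sum = 0;
  for (let i = 0; i < observed.length; i++) {
    const diff = observed[i] - predicted[i];
    sum += diff * diff;
  }
  return sum / observed.length;
}

// Root-mean-squared error: the square root of the MSE, in the same units as the data.
function rootMeanSquaredError(observed, predicted) {
  return Math.sqrt(meanSquaredError(observed, predicted));
}

const observed = [1, 2, 3];
const predicted = [1, 2, 5];
const mse = meanSquaredError(observed, predicted);      // 4 / 3
const rmse = rootMeanSquaredError(observed, predicted); // sqrt(4 / 3)
```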

Training Model

Finally, since we’ve already built and compiled our model, it’s time to train it. In TensorFlow.js, this is done using the model.fit(…) method, discussed below:

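```javascript
// Number of samples per gradient update (chosen experimentally)
const rnn_batch_size = window_size;
// model.fit(...) returns a Promise, so it is awaited inside an async function;
// n_epochs and callback are training parameters defined outside this snippet
const hist = await model.fit(xs, ys,
    { batchSize: rnn_batch_size, epochs: n_epochs, callbacks: {
        onEpochEnd: async (epoch, log) => { callback(epoch, log); }}});
```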

To train the model on a dataset of samples, we pass the xs and ys tensors as the first two arguments of the model.fit(…) method, which, as you can see from the code above, is called asynchronously. The third argument is a configuration object. Its batchSize option is the number of samples processed together before the model’s weights are updated. Experimentally, we use a batch size equal to the window size, window_size. The epochs option is the number of times the model is trained on the same data (i.e., the entire dataset of samples). Finally, the onEpochEnd callback defines a function executed at the end of each training epoch.
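To see what batchSize means in practice, the sketch below splits a dataset into consecutive mini-batches, one weight update per batch, without shuffling (TensorFlow.js’s fit(…) shuffles the training data by default). With the values preset in index.html (n-items 50, window-size 12), ComputeSMA(...) produces 50 − 12 + 1 = 39 samples, so a batch size of 12 gives 4 weight updates per epoch. The function name is hypothetical.

```javascript
// Splits samples into consecutive mini-batches of at most batchSize samples.
// In one epoch, the weights are updated once per batch.
function splitIntoBatches(samples, batchSize) {
  const batches = [];
  for (let start = 0; start < samples.length; start += batchSize) {
    batches.push(samples.slice(start, start + batchSize));
  }
  return batches;
}

// 50 prices with a 12-value window yield 39 samples (identified here by 1..39)
const sampleIds = Array.from({ length: 39 }, (_, i) => i + 1);
const batches = splitIntoBatches(sampleIds, 12);
// batches.length → 4 (sizes 12, 12, 12, 3)
```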

Prediction

Once the model has been trained on the generated dataset of samples, we can use it for prediction. The following code performs the prediction:

const outps = model.predict(tf.tensor2d(inps, [inps.length,

inps[0].length]).div(tf.scalar(10))).mul(10);

return Array.from(outps.dataSync());

To predict the SMA values, we use the model.predict(...) method, which accepts a two-dimensional tensor as its first argument. This tensor has the shape [number of samples, window size]: each row is one time-window sample consisting of window_size input values. Through this tensor we pass the portion of the previously generated samples that was not used for training to the inputs of the model. The inputs are divided by 10, the same scaling that was applied to the training data, and the model's outputs are multiplied by 10 to bring them back to the original price range. The model.predict(...) method returns another two-dimensional tensor, of shape [number of samples, 1], that holds the predicted values. Finally, we call dataSync() to read the tensor's values as a flat typed array, and convert it into a regular JavaScript array with Array.from(...).
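The following plain-JavaScript sketch mirrors the data flow around model.predict(...) without TensorFlow.js: scale the windows down by 10, run the model, flatten the [n, 1] result in row-major order (as dataSync() does), and scale back up by 10. The fakeModel function is a hypothetical stand-in that returns the exact mean of each window; the trained LSTM network only approximates this value.

```javascript
const SCALE = 10; // the same factor the article's code divides by before training

// Hypothetical stand-in for the trained network: returns one value per row,
// shaped [n, 1] like the output of model.predict(...)
function fakeModel(scaledRows) {
  return scaledRows.map((row) => [row.reduce((a, b) => a + b, 0) / row.length]);
}

function predictSMA(windows) {
  const scaled = windows.map((row) => row.map((v) => v / SCALE)); // like .div(tf.scalar(10))
  const out2d = fakeModel(scaled);                                 // shape [windows.length, 1]
  const flat = out2d.flat();                                       // like dataSync(): flat, row-major
  return flat.map((v) => v * SCALE);                               // like .mul(10)
}

console.log(predictSMA([[10, 20, 30], [20, 30, 40]])); // [ 20, 30 ]
```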

Using the code

index.html

<!DOCTYPE html>

<html lang="en">

<head>

<title>TimeSeries@TensorFlow.js</title>

<meta charset="utf-8">

<meta name="viewport" content="width=device-width, initial-scale=1">

<link rel="stylesheet" href="https://maxcdn.bootstrapcdn.com/bootstrap/3.3.7/css/bootstrap.min.css">

<script src="https://ajax.googleapis.com/ajax/libs/jquery/3.3.1/jquery.min.js"></script>

<script src="https://maxcdn.bootstrapcdn.com/bootstrap/3.3.7/js/bootstrap.min.js"></script>

<script src="https://cdn.jsdelivr.net/npm/@tensorflow/tfjs@0.13.3/dist/tf.min.js"></script>

<script src="https://cdn.plot.ly/plotly-1.2.0.min.js"></script>

<script src="./src/generators.js"></script>

<script src="./src/model.js"></script>

<script type="text/javascript">

var input_dataset = [], result = [];

var data_raw = []; var sma_vec = [];

function Init() {

document.getElementById("n-items").value = "50";

document.getElementById("window-size").value = "12";

// Set the default field values first, so that initDataset() generates data matching them

initTabs('Dataset'); initDataset();

document.getElementById('input-data').addEventListener('change', readInputFile, false);

}

function initTabs(tab) {

var navbar = document.getElementsByClassName("nav navbar-nav");

navbar[0].getElementsByTagName("li")[0].className += "active";

document.getElementById("dataset").style.display = "none";

document.getElementById("graph-plot").style.display = "none";

setContentView(tab);

}

function setTabActive(event, tab) {

var navbar = document.getElementsByClassName("nav navbar-nav");

var tabs = navbar[0].getElementsByTagName("li");

for (var index = 0; index < tabs.length; index++)

if (tabs[index].className == "active")

tabs[index].className = "";

if (event.currentTarget != null) {

event.currentTarget.className += "active";

}

var callback = null;

if (tab == "Neural Network") {

callback = function () {

document.getElementById("train_set").innerHTML = getSMATable(1);

}

}

setContentView(tab, callback);

}

function setContentView(tab, callback) {

var tabs_content = document.getElementsByClassName("container");

for (var index = 0; index < tabs_content.length; index++)

tabs_content[index].style.display = "none";

if (document.getElementById(tab).style.display == "none")

document.getElementById(tab).style.display = "block";

if (callback != null) {

callback();

}

}

function readInputFile(e) {

var file = e.target.files[0];

var reader = new FileReader();

reader.onload = function(e) {

var contents = e.target.result;

document.getElementById("input-data").value = "";

parseCSVData(contents);

};

reader.readAsText(file);

}

function parseCSVData(contents) {

data_raw = []; sma_vec = [];

var rows = contents.split("\n");

var params = rows[0].split(",");

var size = parseInt(params[0].split("=")[1]);

var window_size = parseInt(params[1].split("=")[1]);

document.getElementById("n-items").value = size.toString();

document.getElementById("window-size").value = window_size.toString();

for (var index = 1; index < size + 1; index++) {

var cols = rows[index].split(",");

// Convert the price column to a number; the CSV text yields strings

data_raw.push({ id: cols[0], timestamp: cols[1], price: parseFloat(cols[2]) });

}

sma_vec = ComputeSMA(data_raw, window_size);

onInputDataClick();

}

function initDataset() {

var n_items = parseInt(document.getElementById("n-items").value);

var window_size = parseInt(document.getElementById("window-size").value);

data_raw = GenerateDataset(n_items);

sma_vec = ComputeSMA(data_raw, window_size);

onInputDataClick();

}

async function onTrainClick() {

var inputs = sma_vec.map(function(inp_f) {

return inp_f['set'].map(function(val) { return val['price']; })});

var outputs = sma_vec.map(function(outp_f) { return outp_f['avg']; });

var n_epochs = parseInt(document.getElementById("n-epochs").value);

var window_size = parseInt(document.getElementById("window-size").value);

var lr_rate = parseFloat(document.getElementById("learning-rate").value);

var n_hl = parseInt(document.getElementById("hidden-layers").value);

var n_items = parseInt(document.getElementById("n-items-percent").value);

var callback = function(epoch, log) {

var log_nn = document.getElementById("nn_log").innerHTML;

log_nn += "<div>Epoch: " + (epoch + 1) + " Loss: " + log.loss + "</div>";

document.getElementById("nn_log").innerHTML = log_nn;

document.getElementById("training_pg").style.width = ((epoch + 1) * (100 / n_epochs)).toString() + "%";

document.getElementById("training_pg").innerHTML = ((epoch + 1) * (100 / n_epochs)).toString() + "%";

}

result = await trainModel(inputs, outputs,

n_items, window_size, n_epochs, lr_rate, n_hl, callback);

alert('Your model has been successfully trained...');

}

function onPredictClick(view) {

var inputs = sma_vec.map(function(inp_f) {

return inp_f['set'].map(function (val) { return val['price']; }); });

var outputs = sma_vec.map(function(outp_f) { return outp_f['avg']; });

var n_items = parseInt(document.getElementById("n-items-percent").value);

var outps = outputs.slice(Math.floor(n_items / 100 * outputs.length), outputs.length);

var pred_vals = Predict(inputs, n_items, result['model']);

var data_output = "";

for (var index = 0; index < pred_vals.length; index++) {

data_output += "<tr><td>" + (index + 1) + "</td><td>" +

outps[index] + "</td><td>" + pred_vals[index] + "</td></tr>";

}

document.getElementById("pred-res").innerHTML = "<table class=\"table\"><thead><tr><th scope=\"col\">#</th><th scope=\"col\">Real Value</th> \

<th scope=\"col\">Predicted Value</th></tr></thead><tbody>" + data_output + "</tbody></table>";

var window_size = parseInt(document.getElementById("window-size").value);

var timestamps_a = data_raw.map(function (val) { return val['timestamp']; });

var timestamps_b = data_raw.map(function (val) {

return val['timestamp']; }).splice(window_size, data_raw.length);

var timestamps_c = data_raw.map(function (val) {

return val['timestamp']; }).splice(window_size + Math.floor(n_items / 100 * outputs.length), data_raw.length);

var sma = sma_vec.map(function (val) { return val['avg']; });

var prices = data_raw.map(function (val) { return val['price']; });

var graph_plot = document.getElementById('graph-pred');

Plotly.newPlot( graph_plot, [{ x: timestamps_a, y: prices, name: "Series" }], { margin: { t: 0 } } );

Plotly.plot( graph_plot, [{ x: timestamps_b, y: sma, name: "SMA" }], { margin: { t: 0 } } );

Plotly.plot( graph_plot, [{ x: timestamps_c, y: pred_vals, name: "Predicted" }], { margin: { t: 0 } } );

}

function getInputDataTable() {

var data_output = "";

for (var index = 0; index < data_raw.length; index++)

{

data_output += "<tr><td>" + data_raw[index]['id'] + "</td><td>" +

data_raw[index]['timestamp'] + "</td><td>" + data_raw[index]['price'] + "</td></tr>";

}

return "<table class=\"table\"><thead><tr><th scope=\"col\">#</th><th scope=\"col\">Timestamp</th> \

<th scope=\"col\">Feature</th></tr></thead><tbody>" + data_output + "</tbody></table>";

}

function getSMATable(view) {

var data_output = "";

if (view == 0) {

for (var index = 0; index < sma_vec.length; index++)

{

var set_output = "";

var set = sma_vec[index]['set'];

for (var t = 0; t < set.length; t++) {

set_output += "<tr><td width=\"30px\">" + set[t]['price'] +

"</td><td>" + set[t]['timestamp'] + "</td></tr>";

}

data_output += "<tr><td width=\"20px\">" + (index + 1) +

"</td><td>" + "<table width=\"100px\" class=\"table\">" + set_output +

"<tr><td><b>" + "SMA(t) = " + sma_vec[index]['avg'] + "</b></td></tr></table></td></tr>";

}

return "<table class=\"table\"><thead><tr><th scope=\"col\">#</th><th scope=\"col\">Time Series</th>\

</tr></thead><tbody>" + data_output + "</tbody></table>";

}

else if (view == 1) {

var set = sma_vec.map(function (val) { return val['set']; });

for (var index = 0; index < sma_vec.length; index++)

{

data_output += "<tr><td width=\"20px\">" + (index + 1) +

"</td><td>[ " + set[index].map(function (val) {

return (Math.round(val['price'] * 10000) / 10000).toString(); }).toString() +

" ]</td><td>" + sma_vec[index]['avg'] + "</td></tr>";

}

return "<table class=\"table\"><thead><tr><th scope=\"col\">#</th><th scope=\"col\">\

Input</th><th scope=\"col\">Output</th></tr></thead><tbody>" + data_output + "</tbody></table>";

}

}

function onInputDataClick() {

document.getElementById("dataset").style.display = "block";

document.getElementById("graph-plot").style.display = "block";

document.getElementById("data").innerHTML = getInputDataTable();

var timestamps = data_raw.map(function (val) { return val['timestamp']; });

var prices = data_raw.map(function (val) { return val['price']; });

var graph_plot = document.getElementById('graph');

Plotly.newPlot( graph_plot, [{ x: timestamps, y: prices, name: "Stocks Prices" }], { margin: { t: 0 } } );

}

function onSMAClick() {

document.getElementById("data").innerHTML = getSMATable(0);

var sma = sma_vec.map(function (val) { return val['avg']; });

var prices = data_raw.map(function (val) { return val['price']; });

var window_size = parseInt(document.getElementById("window-size").value);

var timestamps_a = data_raw.map(function (val) { return val['timestamp']; });

var timestamps_b = data_raw.map(function (val) {

return val['timestamp']; }).splice(window_size, data_raw.length);

var graph_plot = document.getElementById('graph');

Plotly.newPlot( graph_plot, [{ x: timestamps_a, y: prices, name: "Series" }], { margin: { t: 0 } } );

Plotly.plot( graph_plot, [{ x: timestamps_b, y: sma, name: "SMA" }], { margin: { t: 0 } } );

}

</script>

</head>

<body onload="Init()">

<table>

<tbody>

<tr>

<td>

<nav class="navbar navbar-default">

<div class="container-fluid">

<div class="navbar-header">

<a class="navbar-brand" href="#">TimeSeries@TensorFlow.js</a>

</div>

<ul class="nav navbar-nav">

<li onclick="setTabActive(event, 'Dataset')"><a href="#">Dataset</a></li>

<li onclick="setTabActive(event, 'Neural Network')"><a href="#">Neural Network</a></li>

<li onclick="setTabActive(event, 'Prediction')"><a href="#">Prediction</a></li>

</ul>

</div>

</nav>

</td>

</tr>

<tr>

<td>

<div id="Dataset" class="container">

<table width="100%">

<tr>

<td>

<table width="100%">

<tr>

<td width="60%" align="left">

<table width="100%">

<tr>

<td width="10px"><b> N-Items: </b></td>

<td width="120px"><input class="form-control input-sm" id="n-items" type="text" size="1" value="500"></td>

<td width="120px"><b> Window Size: </b></td>

<td width="100px"><input class="form-control input-sm" id="window-size" type="text" size="1" value="12"></td>

<td width="180px" align="center"><button type="button" class="btn btn-primary" onclick="initDataset()">Generate Data...</button></td>

</tr>

</table>

</td>

<td width="40%" align="right">

<form class="md-form">

<div class="file-field">

<div class="btn btn-primary btn-sm float-left">

<span>select *.csv data file</span>

<input type="file" id="input-data">

</div>

</div>

</form>

</td>

</tr>

</table>

</td>

</tr>

<tr>

<td width="100%" id="dataset"><hr/>

<table width="50%">

<tr>

<td align="left"><button type="button" class="btn btn-primary" onclick="onInputDataClick()">Input Data</button></td>

<td align="right"><button type="button" class="btn btn-primary" onclick="onSMAClick()">Simple Moving Average</button></td>

</tr>

</table>

<hr/>

<div id="data" style="overflow-y: scroll; max-height: 300px;"></div>

</td>

</tr>

<tr><td width="100%" id="graph-plot"><hr/><div id="graph" style="width:100%; height:350px;"></div></td></tr>

</table>

</div>

<div id="Neural Network" class="container">

<table width="100%">

<tr>

<td>

<button type="button" class="btn btn-primary" onclick="onTrainClick()">Train Model...</button><hr/>

<div class="progress">

<div id="training_pg" class="progress-bar" role="progressbar" aria-valuenow="70" aria-valuemin="0" aria-valuemax="100" style="width:0%"></div>

</div>

<hr/>

</td>

</tr>

<tr>

<td>

<table width="100%" height="100%">

<tr>

<td width="80%"><div id="train_set" style="overflow-x: scroll; overflow-y: scroll; max-width: 900px; max-height: 300px;"></div></td>

<td>

<table width="100%" class="table">

<tr>

<td>

<label>Size (%):</label>

<input class="form-control input-sm" id="n-items-percent" type="text" size="1" value="50">

</td>

</tr>

<tr>

<td>

<label>Epochs:</label>

<input class="form-control input-sm" id="n-epochs" type="text" size="1" value="200">

</td>

</tr>

<tr>

<td>

<label>Learning Rate:</label>

<input class="form-control input-sm" id="learning-rate" type="text" size="1" value="0.01">

</td>

</tr>

<tr>

<td>

<label>Hidden Layers:</label>

<input class="form-control input-sm" id="hidden-layers" type="text" size="1" value="4">

</td>

</tr>

</table>

</td>

</tr>

<tr>

<td><hr/><div id="nn_log" style="overflow-x: scroll; overflow-y: scroll; max-width: 900px; max-height: 250px;"></div></td>

</tr>

</table>

</td>

</tr>

</table>

</div>

<div id="Prediction" class="container">

<table width="100%">

<tr><td><button type="button" class="btn btn-primary" onclick="onPredictClick()">Predict</button><hr/></td></tr>

<tr><td><div id="pred-res" style="overflow-x: scroll; overflow-y: scroll; max-height: 300px;"></div></td></tr>

<tr><td><hr/><div id="graph-pred" style="height:300px;"></div></td></tr>

</table>

</div>

</td>

</tr>

</tbody>

</table>

</body>

</html>
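The article doesn't describe the format of the *.csv file accepted by the "select *.csv data file" control. Judging by parseCSVData(), the first line holds two comma-separated key=value pairs (the number of items and the window size), and every following line holds an id, a timestamp and a price. The sketch below is a standalone version of that parser, written as a pure function so it can be tried outside the page. The key names size and window_size and the sample contents are our assumptions, since the original code only reads the text after each '='.

```javascript
// Parse CSV text in the layout that parseCSVData() appears to expect:
//   line 1:   size=<N>,window_size=<W>   (key names assumed; only the values are read)
//   lines 2+: id,timestamp,price
function parseSeriesCSV(contents) {
  const rows = contents.split("\n");
  const params = rows[0].split(",");
  const size = parseInt(params[0].split("=")[1], 10);
  const windowSize = parseInt(params[1].split("=")[1], 10);
  const data = [];
  for (let index = 1; index < size + 1; index++) {
    const cols = rows[index].split(",");
    // parseFloat turns the price into a number and ignores a trailing "\r"
    data.push({ id: cols[0], timestamp: cols[1], price: parseFloat(cols[2]) });
  }
  return { size, windowSize, data };
}

// Hypothetical file contents, for illustration only
const sample =
  "size=3,window_size=2\n" +
  "1,2018-10-01 [09:15:00],10.5\n" +
  "2,2018-10-02 [14:02:33],11.25\n" +
  "3,2018-10-03 [20:47:10],9.75";
const parsed = parseSeriesCSV(sample);
console.log(parsed.windowSize, parsed.data.map((r) => r.price)); // 2 [ 10.5, 11.25, 9.75 ]
```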

generators.js

function ComputeSMA(time_s, window_size)

{

var r_avgs = [];

// One SMA value per window position: n - window_size + 1 values in total

for (let i = 0; i <= time_s.length - window_size; i++)

{

var curr_avg = 0.00, t = i + window_size;

// t never exceeds time_s.length, so k always stays within the array

for (let k = i; k < t; k++)

curr_avg += time_s[k]['price'] / window_size;

r_avgs.push({ set: time_s.slice(i, i + window_size), avg: curr_avg });

}

return r_avgs;

}

function GenerateDataset(size)

{

var dataset = [];

var dt1 = new Date(), dt2 = new Date();

dt1.setDate(dt1.getDate() - 1);

dt2.setDate(dt2.getDate() - size);

var time_start = dt2.getTime();

var time_diff = new Date().getTime() - dt1.getTime();

let curr_time = time_start;

for (let i = 0; i < size; i++, curr_time+=time_diff) {

var curr_dt = new Date(curr_time);

var hours = Math.floor(Math.random() * 100 % 24);

var minutes = Math.floor(Math.random() * 100 % 60);

var seconds = Math.floor(Math.random() * 100 % 60);

dataset.push({ id: i + 1, price: (Math.floor(Math.random() * 10) + 5) + Math.random(),

// getMonth() is zero-based (January = 0), so add 1 before formatting the month

timestamp: curr_dt.getFullYear() + "-" + ((curr_dt.getMonth() + 1 > 9) ? (curr_dt.getMonth() + 1) : ("0" + (curr_dt.getMonth() + 1))) + "-" +

((curr_dt.getDate() > 9) ? curr_dt.getDate() : ("0" + curr_dt.getDate())) + " [" + ((hours > 9) ? hours : ("0" + hours)) +

":" + ((minutes > 9) ? minutes : ("0" + minutes)) + ":" + ((seconds > 9) ? seconds : ("0" + seconds)) + "]" });

}

return dataset;

}
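ComputeSMA() re-adds all window_size prices at every position, which costs O(n·w) operations. A common alternative, shown below as our own substitution rather than the article's code, keeps a running sum: add the newest price and subtract the one that drops out of the window. It returns the same { set, avg } objects and the same number of values (n - window_size + 1). It expects numeric prices; with string prices, += would concatenate rather than add.

```javascript
// Simple moving average with a running window sum (O(n) instead of O(n * windowSize)).
// Expects series items shaped like { id, price, ... } with numeric prices.
function computeSMARunning(series, windowSize) {
  const result = [];
  let windowSum = 0;
  for (let i = 0; i < series.length; i++) {
    windowSum += series[i].price;                // add the newest price
    if (i >= windowSize) {
      windowSum -= series[i - windowSize].price; // drop the price that left the window
    }
    if (i >= windowSize - 1) {
      const start = i - windowSize + 1;
      result.push({ set: series.slice(start, i + 1), avg: windowSum / windowSize });
    }
  }
  return result;
}

const series = [2, 4, 6, 8, 10].map((price, i) => ({ id: i + 1, price }));
console.log(computeSMARunning(series, 3).map((r) => r.avg)); // [ 4, 6, 8 ]
```

For very long series, the running sum can accumulate floating-point rounding error, which the original per-window summation avoids; for the dataset sizes used here, either approach is fine.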

model.js

async function trainModel(inputs, outputs, size, window_size, n_epochs, learning_rate, n_layers, callback)

{

const input_layer_shape = window_size;

const input_layer_neurons = 100;

const rnn_input_layer_features = 10;

const rnn_input_layer_timesteps = input_layer_neurons / rnn_input_layer_features;

// tf.layers.rnn expects each sample shaped [timesteps, features]; both are 10 here

const rnn_input_shape = [ rnn_input_layer_timesteps, rnn_input_layer_features ];

const rnn_output_neurons = 20;

const rnn_batch_size = window_size;

const output_layer_shape = rnn_output_neurons;

const output_layer_neurons = 1;

const model = tf.sequential();

inputs = inputs.slice(0, Math.floor(size / 100 * inputs.length));

outputs = outputs.slice(0, Math.floor(size / 100 * outputs.length));

const xs = tf.tensor2d(inputs, [inputs.length, inputs[0].length]).div(tf.scalar(10));

const ys = tf.tensor2d(outputs, [outputs.length, 1]).div(tf.scalar(10));

model.add(tf.layers.dense({units: input_layer_neurons, inputShape: [input_layer_shape]}));

model.add(tf.layers.reshape({targetShape: rnn_input_shape}));

var lstm_cells = [];

for (let index = 0; index < n_layers; index++) {

lstm_cells.push(tf.layers.lstmCell({units: rnn_output_neurons}));

}

model.add(tf.layers.rnn({cell: lstm_cells,

inputShape: rnn_input_shape, returnSequences: false}));

model.add(tf.layers.dense({units: output_layer_neurons, inputShape: [output_layer_shape]}));

const opt_adam = tf.train.adam(learning_rate);

model.compile({ optimizer: opt_adam, loss: 'meanSquaredError'});

const hist = await model.fit(xs, ys,

{ batchSize: rnn_batch_size, epochs: n_epochs, callbacks: {

onEpochEnd: async (epoch, log) => { callback(epoch, log); }}});

return { model: model, stats: hist };

}

function Predict(inputs, size, model)

{

var inps = inputs.slice(Math.floor(size / 100 * inputs.length), inputs.length);

const outps = model.predict(tf.tensor2d(inps, [inps.length,

inps[0].length]).div(tf.scalar(10))).mul(10);

return Array.from(outps.dataSync());

}
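To make the architecture built by trainModel() easier to follow, the sketch below traces the output shape and trainable-parameter count of each layer for a given window size and number of LSTM cells. It assumes the standard LSTM formulation, in which each of the four gates has input weights, recurrent weights and a bias, giving 4 · units · (inputDim + units + 1) parameters per cell. describeModel is a hypothetical helper, not part of TensorFlow.js.

```javascript
// Trace shapes and trainable-parameter counts of the network built by trainModel().
// `null` stands for the batch dimension. Assumes the standard 4-gate LSTM cell.
function describeModel(windowSize, nLayers) {
  const denseUnits = 100, timesteps = 10, features = 10, lstmUnits = 20;
  const layers = [];
  // Dense: windowSize inputs -> 100 units (weights + biases)
  layers.push({ name: "dense", out: [null, denseUnits], params: (windowSize + 1) * denseUnits });
  // Reshape: 100 values -> 10 timesteps x 10 features (no weights)
  layers.push({ name: "reshape", out: [null, timesteps, features], params: 0 });
  // Stacked LSTM cells: the first sees 10 features, the others see the previous cell's 20 outputs
  let lstmParams = 0;
  for (let i = 0; i < nLayers; i++) {
    const inputDim = i === 0 ? features : lstmUnits;
    lstmParams += 4 * lstmUnits * (inputDim + lstmUnits + 1);
  }
  // returnSequences: false -> only the output of the last timestep is kept
  layers.push({ name: "rnn", out: [null, lstmUnits], params: lstmParams });
  // Output dense: 20 -> 1
  layers.push({ name: "dense_out", out: [null, 1], params: lstmUnits + 1 });
  const total = layers.reduce((sum, l) => sum + l.params, 0);
  return { layers, total };
}

// Defaults from index.html: window size 12, 4 hidden layers
const summary = describeModel(12, 4);
summary.layers.forEach((l) => console.log(l.name, JSON.stringify(l.out), l.params));
console.log("total:", summary.total); // total: 13641
```

With these defaults, the stacked LSTM cells hold most of the weights. The totals can be cross-checked against model.summary() on the trained model, although we have not verified that against the 0.13.3 build loaded by the page.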

Points of Interest

In this article, we've discussed how to create and deploy a model that uses an RNN with LSTM layers to perform time-series prediction. The same concept can also be applied to other purposes, including image or voice recognition, or predicting time series other than the simple moving average (SMA).

History

- November 2, 2018 - The first revision of this article was published.