Introduction

I'm currently documenting the development of a hobbyist robotic project on this site in a series of articles called "Rodney - A long time coming autonomous robot". On my desk, I have a stack of post-it notes with scribbled ideas for future development. One of these notes reads "AI TensorFlow object detection". I can't remember when I wrote it or what prompted it, but as Code Project is currently running the "AI TensorFlow Challenge", it seems like an ideal time to look at the subject.

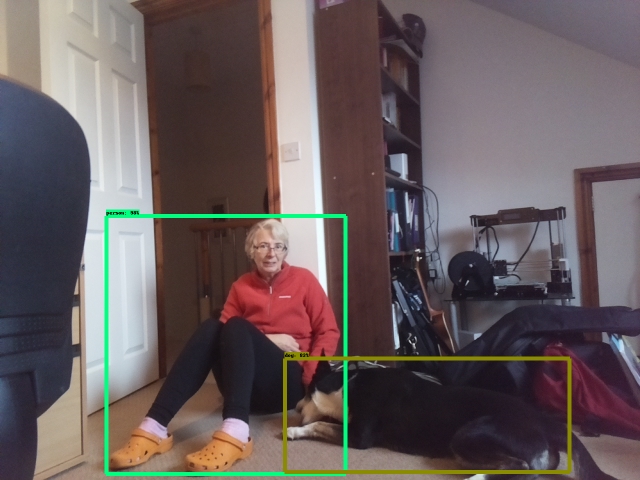

A robot's view

Background

My robot uses the Robot Operating System (ROS). This is a de facto standard for robot programming, and in this article we will integrate TensorFlow into a ROS package. I'll try to keep the details of the ROS code to a minimum, but if you wish to know more, I suggest you visit the Robot Operating System site and read my articles on Rodney.

Rodney is already capable of moving his head and looking around and greeting family members that he recognizes. To do this, we make use of the OpenCV face detection and recognition calls. We will use TensorFlow in a similar manner to detect objects around the home, like for instance a family pet.

Eventually, the robot will be capable of navigating around the home looking for a particular family member to deliver a message to. Likewise, imagine you are running late returning home and wish to check on the family dog. With the use of a web interface, you could instruct the robot to locate the dog and show you a video feed of what it's doing. Now in our house, we like to say our dog is not spoilt, she is loved, which means she gets to sleep in any room, so the robot will need to navigate throughout the house. But for now, in this article, we will just check for the dog within the robot's head movement range.

If you don't have an interest in ROS or robotics, I have included a non-ROS test script that can be used to run just the code contained in the object detection library.

I recently read a book that suggested in the future all software engineers will need a working knowledge of deep learning. With the rapid development of other technologies that you also need to keep an eye on, that's a bold statement. So if you don't know your "rank-2 tensors" from your "loss function", how do you get started?

In this article, I'll show how to get a complete working system up and running with limited time and with limited TensorFlow knowledge.

Standing on the Shoulders of Giants

OK, so it's obvious so far that we are going to use Google's TensorFlow for our machine learning. However, Google also makes some other resources available that are going to make our object detection application easier to get up and running.

The first of these is Tensorflow Object Detection API. As the GitHub page states, this API "... is an open source framework built on top of TensorFlow that makes it easy to construct, train and deploy object detection models".

Now wait a minute, it says "... makes it easy to construct, train ..." a model. Surely that's still a lot of work, and a good understanding of neural networks will be required?

Well, not really, since the second resource we are going to take advantage of is Google's Tensorflow detection model zoo. This contains a number of pre-trained object detection models and we will simply download one, which can recognize 90 different object classes, and access it from our code.

Raspberry Pi Installation

Now I must say this was a bit tricky. Although there are lots of videos and written instructions on the Internet for installing TensorFlow on all sorts of devices, including the Raspberry Pi, none fitted my setup perfectly.

For my robot development, I use a free Raspberry Pi image from the Ubiquity Robotics website. This image already includes the Kinetic version of ROS and OpenCV, and is based on Lubuntu, the lightweight version of Ubuntu.

Instructions to replicate my setup on Rodney are available on this GitHub site.

The following are required if you wish to run the non-ROS version of the code. The versions I have installed are shown in parentheses. Even without ROS, you may still want to take a look at the GitHub site for instructions on setting up and compiling Protocol Buffers and setting the Python path.

- OpenCV2 (3.3.1-dev)

- Python (2.7)

- TensorFlow (1.11.0 for Python 2.7)

- Protocol Buffers (3.6.1)

You will also need to download the TensorFlow model repository and a pre-trained model. Since I'm running on a Raspberry Pi, I need a model which will run fast but the downside is it will have a lower accuracy of detection. I'm therefore using the ssdlite_mobilenet_v2_coco model.

If you require higher accuracy in your Raspberry Pi based robot, there are ways to use a slower but better detection model. As ROS can run across a distributed network, you could use a second Pi dedicated to running the model, or even run the model on a separate workstation with more computing power.

The Code

I'll describe the ROS package containing the object detection code in detail; the remainder of the robot code will be described using diagrams. That remaining code is described in detail in the "Rodney - A long time coming autonomous robot" articles, and all the code is available in the download source zip file for this article.

ROS code can be written in a number of languages and the Rodney project uses both C++ and Python. As the TensorFlow interface and Google's example code for the Object Detection API are both in Python, we will use Python for the object detection node.

Object Detection Package

Our ROS package for the node is called tf_object_detection and is available in the tf_object_detection folder. The package contains a number of sub folders.

The sub folder config contains a configuration file config.yaml. This configures the node by supplying the path to Google's object_detection folder and setting a confidence level. When the model runs, it returns a confidence level for each detected object as well as the object's name. If the level is below that given in the configuration file, the object will be ignored by our code.

    object_detection:
      confidence_level: 0.60
      path: '/home/ubuntu/git/models/research/object_detection'

The sub folder launch contains a file test.launch that can be used to test the node in the robot environment using the bare minimum robot code and hardware. It loads the configuration file into the ROS parameter server, launches two nodes to publish images from the Pi Camera and launches our object detection node.

    <?xml version="1.0" ?>
    <launch>
      <rosparam command="load" file="$(find tf_object_detection)/config/config.yaml" />
      <include file="$(find raspicam_node)/launch/camerav2_1280x960.launch" />
      <node name="republish" type="republish" pkg="image_transport"
            output="screen" args="compressed in:=/raspicam_node/image/ raw out:=/camera/image/raw" />
      <node pkg="tf_object_detection" type="tf_object_detection_node.py"
            name="tf_object_detection_node" output="screen" />
    </launch>

The sub folder msg contains a file detection_results.msg. This file is used to create a user defined ROS message that will be used to return a list containing the objects detected in each supplied image.

string[] names_detected

The sub folder scripts contains the file non-ros-test.py and a test image. This Python program can be used to test the object detection library outside of the robot code.

The code imports our object detection library and creates an instance of it passing the path which contains the model and a confidence level of 0.5 (50%). It then loads the test image as an OpenCV image and calls our scan_for_objects function that runs the object detection model. The returned list contains the objects detected that were above the confidence level. It also modifies the supplied image with boxes around the detected objects. Our test code goes on to print the names of the objects detected and displays the resulting image.

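    import sys
    import os
    import cv2
    # Make the object detection library importable
    sys.path.append('/home/ubuntu/git/tf_object_detection/src')
    import object_detection_lib
    # Create the detector: path to Google's object_detection folder and a 50% confidence level
    odc = object_detection_lib.ObjectDetection('/home/ubuntu/git/models/research/object_detection', 0.5)
    # Load the test image (found in the scripts folder) as an OpenCV image
    cvimg = cv2.imread("test_image.jpg")
    # Run the model; cvimg is modified in place with boxes and labels
    object_names = odc.scan_for_objects(cvimg)
    print(object_names)
    # Show the annotated image until a key is pressed, then save it
    cv2.imshow('object detection', cvimg)
    cv2.waitKey(0)
    cv2.destroyAllWindows()
    cv2.imwrite('adjusted_test_image.jpg', cvimg)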

The sub folder src contains the main code for the node and our object detection library. I'll start with a description of the library before moving on to the ROS code.

As stated above, this library can be used without the robot code and is used by the non-ros-test.py script. It is based on Google's Object Detection API tutorial, which can be found here. I have converted it to a Python class and moved the call that creates the TensorFlow session from the run_inference_for_single_image function to the __init__ function. If the session were recreated on every call, the model would be auto-tuned each time the session's run function is called, which causes a considerable delay. This way, we only get the delay (around 45 seconds) the first time we supply the code with an image; subsequent calls return within 1 second.
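
The effect of creating the session once can be sketched without TensorFlow. In the illustration below, ExpensiveSession is a hypothetical stand-in for a TensorFlow session (it is not part of TensorFlow or of the library); it only counts how often it is constructed, so the difference between the two designs becomes visible.

```python
class ExpensiveSession(object):
    """Hypothetical stand-in for a costly resource such as a TensorFlow session."""
    created = 0  # class-wide count of how many sessions have been built

    def __init__(self):
        ExpensiveSession.created += 1

    def run(self, image):
        # Pretend to run the model; here we just echo the input.
        return image


class PerCallDetector(object):
    """Builds a new session on every call (the slow pattern)."""

    def detect(self, image):
        session = ExpensiveSession()
        return session.run(image)


class ReusedSessionDetector(object):
    """Builds the session once in __init__ and reuses it."""

    def __init__(self):
        self._session = ExpensiveSession()

    def detect(self, image):
        return self._session.run(image)


def sessions_created(detector, calls):
    """Return how many sessions are built while making `calls` detections."""
    before = ExpensiveSession.created
    for i in range(calls):
        detector.detect(i)
    return ExpensiveSession.created - before
```

With the per-call design, every detection pays the construction cost; with the reused design, the cost is paid once when the object is created and never again during detection.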

The code starts by importing the required modules: numpy, tensorflow and two modules from the Object Detection API, label_map_util and visualization_utils. label_map_util is used to convert the object number returned by the model to a named object. For example, the model returns the ID 18 for a dog. visualization_utils is used to draw the boxes, object labels and percentage confidence levels onto an image.

    import numpy as np
    import tensorflow as tf
    from utils import label_map_util
    from utils import visualization_utils as vis_util
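
As a rough illustration of what the label map gives us, the category index can be thought of as a dictionary keyed by class ID. The table below is a hand-written subset: the dog entry (ID 18) comes from the text above, the other two entries are assumed from the COCO label map, and name_for_class is a hypothetical helper that is not part of the API.

```python
# Hand-written subset of a category index, keyed by class ID.
CATEGORY_INDEX = {
    1: {'id': 1, 'name': 'person'},
    17: {'id': 17, 'name': 'cat'},
    18: {'id': 18, 'name': 'dog'},
}


def name_for_class(category_index, class_id, default='unknown'):
    """Return the display name for a class ID, or `default` if it is not listed."""
    entry = category_index.get(class_id)
    return entry['name'] if entry is not None else default
```

The library code indexes the dictionary directly, which is fine when every ID the model can return is present in the label map; the default here only makes the sketch safe to run with a partial table.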

The class initialisation function loads the model from the supplied path and opens the TensorFlow session. It also loads the label map file and stores the supplied confidence trigger level.

    def __init__(self, path, confidence):
        MODEL_NAME = 'ssdlite_mobilenet_v2_coco_2018_05_09'
        PATH_TO_FROZEN_GRAPH = path + '/' + MODEL_NAME + '/frozen_inference_graph.pb'
        PATH_TO_LABELS = path + '/data/' + 'mscoco_label_map.pbtxt'
        # Load the frozen model into its own graph
        self.__detection_graph = tf.Graph()
        with self.__detection_graph.as_default():
            od_graph_def = tf.GraphDef()
            with tf.gfile.GFile(PATH_TO_FROZEN_GRAPH, 'rb') as fid:
                serialized_graph = fid.read()
                od_graph_def.ParseFromString(serialized_graph)
                tf.import_graph_def(od_graph_def, name='')
        # Open the session once here so it is reused for every image
        self.__sess = tf.Session(graph=self.__detection_graph)
        # Load the label map and store the confidence trigger level
        self.__category_index = label_map_util.create_category_index_from_labelmap(
            PATH_TO_LABELS, use_display_name=True)
        self.__confidence = confidence

The run_inference_for_single_image function is where most work with TensorFlow occurs. The first part obtains a list holding all the operations in the tensor graph. Using the list, it creates a dictionary to hold the tensors that we will be interested in when we execute the graph. You can think of these as the output we want from running the graph. Possible tensors we are interested in are named:

- num_detections - This will be a value informing us how many objects were detected in the image.

- detection_boxes - For each object detected, this will contain four co-ordinates for the box that bounds the object. We don't need to worry about the co-ordinate system as we will use the API to draw the boxes.

- detection_scores - For each object detected, there will be a score giving the confidence of the system that it has identified the object correctly. Multiply this value by 100 to give a percentage.

- detection_classes - For each object detected, there will be a number identifying the object. Again, the actual number is not important to us as the API will be used to convert the number to an object name.

- detection_masks - The model we are using does not have this operation. With a model that does have it, as well as drawing a bounding box, you can overlay a mask over the object.

    def run_inference_for_single_image(self, image):
        with self.__detection_graph.as_default():
            # Get handles to the output tensors that exist in this graph
            ops = tf.get_default_graph().get_operations()
            all_tensor_names = {output.name for op in ops for output in op.outputs}
            tensor_dict = {}
            for key in [
                'num_detections', 'detection_boxes', 'detection_scores',
                'detection_classes', 'detection_masks'
            ]:
                tensor_name = key + ':0'
                if tensor_name in all_tensor_names:
                    tensor_dict[key] = tf.get_default_graph().get_tensor_by_name(tensor_name)
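
The selection logic in this first part can be tried out without a graph. The sketch below uses plain strings in place of tensors; the function name and the example set of names are hypothetical and only mirror what a model without a mask output might contain.

```python
OUTPUT_KEYS = ['num_detections', 'detection_boxes', 'detection_scores',
               'detection_classes', 'detection_masks']


def select_available_outputs(all_tensor_names, keys=OUTPUT_KEYS):
    """Map each wanted key to its tensor name, skipping outputs the graph lacks."""
    selected = {}
    for key in keys:
        tensor_name = key + ':0'  # ':0' refers to the first output of the operation
        if tensor_name in all_tensor_names:
            selected[key] = tensor_name
    return selected


# Hypothetical set of tensor names for a model without a mask output.
names_in_graph = {'image_tensor:0', 'num_detections:0', 'detection_boxes:0',
                  'detection_scores:0', 'detection_classes:0'}
available = select_available_outputs(names_in_graph)
```

Because missing names are simply skipped, the same code works for models with and without the mask output.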

The next part of the function is only applicable if the model does indeed contain the detection_masks operation. I have left the code in my library should we use such a model in the future.

            if 'detection_masks' in tensor_dict:
                # Only reached for models with a mask output. utils_ops must be
                # imported for this branch (e.g. from utils import ops as utils_ops)
                detection_boxes = tf.squeeze(tensor_dict['detection_boxes'], [0])
                detection_masks = tf.squeeze(tensor_dict['detection_masks'], [0])
                # Keep only the entries for the objects actually detected
                real_num_detection = tf.cast(tensor_dict['num_detections'][0], tf.int32)
                detection_boxes = tf.slice(detection_boxes, [0, 0], [real_num_detection, -1])
                detection_masks = tf.slice(detection_masks, [0, 0, 0], [real_num_detection, -1, -1])
                detection_masks_reframed = utils_ops.reframe_box_masks_to_image_masks(
                    detection_masks, detection_boxes, image.shape[0], image.shape[1])
                detection_masks_reframed = tf.cast(
                    tf.greater(detection_masks_reframed, 0.5), tf.uint8)
                # Add the batch dimension back
                tensor_dict['detection_masks'] = tf.expand_dims(detection_masks_reframed, 0)

After the detection mask handling, the code obtains the tensor named 'image_tensor:0'. If the other tensors are considered to be the outputs, this is the input to the graph, where we feed in the image we want processed. When the graph was built, this was most likely created as a TensorFlow placeholder.

image_tensor = tf.get_default_graph().get_tensor_by_name('image_tensor:0')

Then we finally run the graph to do the object detection. Into the call to run, we pass the dictionary containing the tensors we would like fetched. The resulting tensors are returned to us by this call in a Python dictionary holding numpy arrays. We also pass in a feed dictionary, which indicates which tensor values we want to substitute. In our case, this is the image_tensor (the input, if you like), whose shape we must first change to suit what the model is expecting.

            # Run inference, adding a batch dimension to the image
            output_dict = self.__sess.run(tensor_dict, feed_dict={image_tensor: np.expand_dims(image, 0)})
            # Strip the batch dimension from each output and convert types
            output_dict['num_detections'] = int(output_dict['num_detections'][0])
            output_dict['detection_classes'] = output_dict['detection_classes'][0].astype(np.uint8)
            output_dict['detection_boxes'] = output_dict['detection_boxes'][0]
            output_dict['detection_scores'] = output_dict['detection_scores'][0]
            if 'detection_masks' in output_dict:
                output_dict['detection_masks'] = output_dict['detection_masks'][0]
        return output_dict
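
The shape change made by np.expand_dims(image, 0), and the matching [0] index applied to each output, can be pictured with plain nested lists. This is only a sketch of the idea: shape and add_batch_dimension are hypothetical helpers, and the score values are made up.

```python
def shape(nested):
    """Return the shape of a regular nested list, e.g. a 2 x 2 list gives (2, 2)."""
    dims = []
    while isinstance(nested, list) and nested:
        dims.append(len(nested))
        nested = nested[0]
    return tuple(dims)


def add_batch_dimension(image):
    """Wrap one image in a batch of size one, as np.expand_dims(image, 0) does."""
    return [image]


# A tiny image, 2 pixels high and 3 pixels wide, with three colour values per pixel.
image = [[[0, 0, 0] for _ in range(3)] for _ in range(2)]
batch = add_batch_dimension(image)

# The outputs are batched too, so the code takes element [0] of each result.
batched_scores = [[0.9, 0.4]]  # made-up output for a batch of one image
scores = batched_scores[0]
```

The model works on batches of images, so a single image of shape height by width by 3 is presented as a batch of one, and each result is unwrapped again afterwards.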

When we wish to conduct object detection, we call the function scan_for_objects with the supplied image, which is an OpenCV image. In tensor terms, this image is a tensor of shape image pixel height by image pixel width by 3 (red, green, blue values). We then call the run_inference_for_single_image function to run the model. The returned dictionary contains the classes of the detected objects, the co-ordinates of their bounding boxes and the confidence levels. Values from this dictionary are fed into visualize_boxes_and_labels_on_image_array, which draws the boxes around the objects and adds labels for those whose confidence levels exceed the supplied value. The code then creates a list, by name, of the objects whose confidence level exceeds the threshold and returns the list.

    def scan_for_objects(self, image_np):
        output_dict = self.run_inference_for_single_image(image_np)
        # Draw boxes and labels on the image for detections above the confidence level
        vis_util.visualize_boxes_and_labels_on_image_array(
            image_np,
            output_dict['detection_boxes'],
            output_dict['detection_classes'],
            output_dict['detection_scores'],
            self.__category_index,
            instance_masks=output_dict.get('detection_masks'),
            use_normalized_coordinates=True,
            line_thickness=8,
            min_score_thresh=self.__confidence)
        # Build the list of names of the objects above the confidence level
        detected_list = []
        total_detections = output_dict['num_detections']
        if total_detections > 0:
            for detection in range(0, total_detections):
                if output_dict['detection_scores'][detection] > self.__confidence:
                    category = output_dict['detection_classes'][detection]
                    detected_list.insert(0, self.__category_index[category]['name'])
        return detected_list
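
The name filtering at the end of scan_for_objects can be exercised on its own with made-up model output. In this sketch, names_above_threshold is a hypothetical free function, the example values are invented, and the names are appended in model order rather than inserted at the front as the library does.

```python
def names_above_threshold(output_dict, category_index, confidence):
    """Return the names of detections whose score exceeds `confidence`."""
    names = []
    for i in range(output_dict['num_detections']):
        if output_dict['detection_scores'][i] > confidence:
            class_id = output_dict['detection_classes'][i]
            names.append(category_index[class_id]['name'])
    return names


# Made-up model output: a confident dog and a doubtful person.
example_output = {
    'num_detections': 2,
    'detection_classes': [18, 1],
    'detection_scores': [0.92, 0.41],
}
example_index = {1: {'name': 'person'}, 18: {'name': 'dog'}}
```

Raising the confidence level trades missed objects for fewer false reports, which is why it is exposed in the configuration file rather than fixed in the code.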

As far as TensorFlow goes, that's all that is required. I'll now briefly describe the rest of the code for this package. If you are not interested in the robotic code, you can skip ahead to the section "Using the code" which will describe running the code outside of the ROS environment.

The ROS code for our object detection node is contained in the tf_object_detection_node.py file.

Each ROS node is a running process. In the main function, we register our node with ROS, create an instance of the ObjectDetectionNode class, log that the node has started and hand over control to ROS with a call to rospy.spin.

    def main(args):
        rospy.init_node('tf_object_detection_node', anonymous=False)
        odn = ObjectDetectionNode()
        rospy.loginfo("Object detection node started")
        try:
            rospy.spin()
        except KeyboardInterrupt:
            print("Shutting down")

    if __name__ == '__main__':
        main(sys.argv)

The ObjectDetectionNode class contains the remainder of the code for the node. The class __init__ function starts by creating an instance of CvBridge, which is used to convert ROS image messages to OpenCV images and back again. We then register the ROS topics (messages) that the node will publish and subscribe to.

The tf_object_detection_node/adjusted_image topic will contain the image that includes the bounded rectangles around detected objects. The tf_object_detection_node/result topic will contain a list of the names of the detected objects.

The first subscribed topic is tf_object_detection_node/start. When a message is received on it, StartCallback is called, which kicks off the object detection on the next camera image received.

The second topic we subscribe to is camera/image/raw, which will contain the image from the camera and result in a call to Imagecallback.

The remainder of __init__ reads the configuration values from the parameter server and creates the instance of the object detection library.

    def __init__(self):
        self.__bridge = CvBridge()
        # Topics published by this node
        self.__image_pub = rospy.Publisher(
            "tf_object_detection_node/adjusted_image", Image, queue_size=1)
        self.__result_pub = rospy.Publisher(
            "tf_object_detection_node/result", detection_results, queue_size=1)
        # Topics this node subscribes to
        self.__command_sub = rospy.Subscriber(
            "tf_object_detection_node/start", Empty, self.StartCallback)
        self.__image_sub = rospy.Subscriber("camera/image/raw", Image, self.Imagecallback)
        # Flag set when the next camera image should be scanned
        self.__scan_next = False
        # Read the configuration from the parameter server, with defaults
        object_detection_path = rospy.get_param('/object_detection/path',
            '/home/ubuntu/git/models/research/object_detection')
        confidence_level = rospy.get_param('/object_detection/confidence_level', 0.50)
        # Create the object detection library instance
        self.__odc = object_detection_lib.ObjectDetection(
            object_detection_path, confidence_level)

When the message on the topic tf_object_detection_node/start is received, all the StartCallback function does is to set a flag indicating that when the next image from the camera is received, we should run object detection on that image.

    def StartCallback(self, data):
        self.__scan_next = True

When a message on the topic camera/image/raw is received, the Imagecallback function checks to see if an object detection operation is pending. If so, we reset the flag, convert the image from a ROS image to an OpenCV image and call the scan_for_objects function with the image. If objects are detected, the supplied image will be updated to contain the bounding boxes and labels. This adjusted image is then published on the tf_object_detection_node/adjusted_image topic. This topic is not used internally by the robot, but we can examine it as part of debugging/testing. The final part of the function creates a detection_results message holding the list of names of the objects detected. This is then published on the tf_object_detection_node/result topic and will be acted upon by the node that requested the object detection scan.

    def Imagecallback(self, data):
        if self.__scan_next:
            self.__scan_next = False
            # Convert the ROS image to an OpenCV image
            image = self.__bridge.imgmsg_to_cv2(data, "bgr8")
            # The supplied image is modified if objects are detected
            object_names_detected = self.__odc.scan_for_objects(image)
            # Publish the image, which may have been modified
            try:
                self.__image_pub.publish(self.__bridge.cv2_to_imgmsg(image, "bgr8"))
            except CvBridgeError as e:
                print(e)
            # Publish the names of the objects detected
            result = detection_results()
            result.names_detected = object_names_detected
            self.__result_pub.publish(result)
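
The interaction between the two callbacks is a simple latch: a start request arms it and the next image disarms it. The sketch below reproduces that behaviour without ROS. ScanOnRequest and its method names are hypothetical, the results list stands in for publishing on a topic, and the detector is a made-up callable.

```python
class ScanOnRequest(object):
    """Processes only the first image that arrives after a start request."""

    def __init__(self, detector):
        self._detector = detector  # callable taking an image and returning names
        self._scan_next = False
        self.results = []          # stands in for publishing on a topic

    def start(self):
        # Plays the role of StartCallback: arm the latch.
        self._scan_next = True

    def on_image(self, image):
        # Plays the role of Imagecallback: ignore frames unless a scan is pending.
        if not self._scan_next:
            return
        self._scan_next = False
        self.results.append(self._detector(image))


node = ScanOnRequest(lambda image: ['dog'] if image == 'frame-with-dog' else [])
node.on_image('frame-1')         # ignored, no scan has been requested
node.start()
node.on_image('frame-with-dog')  # scanned
node.on_image('frame-3')         # ignored again
```

This keeps the slow model from running on every camera frame; the camera can publish continuously while the model only runs when another node asks for it.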

Overview of Robot System

In this section, I'll very briefly describe how the object detection node fits in with other nodes in the system. The easiest way to describe this is to use a graph of the nodes in question. Nodes (processes) are shown in oval shapes and the lines to and from them are topics containing messages. There is a full size image of the graph in the zip file.

On the far left hand side, the keyboard node is actually running on a separate workstation connected to the same Wi-Fi network as the robot. This allows us to issue commands to the robot. For the "search for a dog" command, we select the '3' key for mission 3. The rodney_node receives the keydown message and passes on a mission_request to the rodney_missions_node. This request includes the parameter "dog" so that rodney_missions_node knows the name of the object to search for. The rodney_missions_node is a hierarchical state machine, and as it steps through the states involved in detecting a dog, it can move the head/camera through requests to the head_control_node and request that the tf_object_detection_node runs the TensorFlow graph.

After each object detection run, the result is returned to the state machine where any objects detected are compared against the name of the object that it is searching for. If the object is not detected, the camera/head is moved to the next position and a new scan is requested. If the object in question is detected, then the camera is held in that position allowing the operator to view the camera feed. When the operator is ready to move on, they can either acknowledge and continue with the search by pressing the 'a' key or cancel the search with the 'c' key.
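
The search behaviour described above can be sketched as a flat loop. This is a simplification under stated assumptions: the real node is a hierarchical state machine that talks to the other nodes over topics, the operator's acknowledge and cancel keys are left out, and search_for_object, move_head, scan and the scene table are all hypothetical.

```python
def search_for_object(target, head_positions, move_head, scan):
    """Step through head positions until `target` is detected.

    Returns the position where the target was seen, or None if the
    sweep finishes without a match.
    """
    for position in head_positions:
        move_head(position)
        if target in scan():
            return position  # hold the camera here for the operator
    return None


# Made-up scene: what the detector would report at each pan angle.
scene = {-45: ['chair'], 0: ['person', 'chair'], 45: ['dog']}
current = {'pan': None}


def move_head(pan):
    current['pan'] = pan


def scan():
    return scene[current['pan']]


found_at = search_for_object('dog', [-45, 0, 45], move_head, scan)
```

Passing the head movement and the scan in as callables keeps the search logic independent of how the requests are actually delivered.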

The node on the far right of the graph, serial_node, is used to communicate with an Arduino Nano which controls the servos moving the head/camera.

From the graph, it looks like some topics, such as /missions/acknowledge and tf_object_detection_node/result, are not connected to the rodney_missions_node. This is only because the graph is auto generated and the node connects to these topics on the fly as it steps through the state machine.

In depth details of the nodes can be found in the "Rodney - A long time coming autonomous robot" series of articles.

Using the Code

Testing in a Non ROS Environment

To test just the object detection library, run the following command from the tf_object_detection/scripts folder.

$ ./non-ros-test.py

Note: As the TensorFlow session is opened each time the script is run, the TensorFlow graph takes a while to run as the model will be auto tuned each time.

After a short period of time, an image with the bounded objects and object labels will be displayed and a list of detected objects will be printed at the terminal.

Test input image and the resulting output image

Testing on the Robot Hardware

If not already done, create a catkin workspace on the Raspberry Pi and initialize it with the following commands:

$ mkdir -p ~/rodney_ws/src

$ cd ~/rodney_ws/

$ catkin_make

Copy the packages from the zip file along with the ros-keyboard package (from https://github.com/lrse/ros-keyboard) into the ~/rodney_ws/src folder.

Build the code with the following commands:

$ cd ~/rodney_ws/

$ catkin_make

Having built the code, all the nodes are started on the Raspberry Pi with the rodney launch file.

$ cd ~/rodney_ws/

$ roslaunch rodney rodney.launch

We can build the code on a separate workstation and then start the keyboard node on that workstation with the following commands:

$ cd ~/rodney_ws

$ source devel/setup.bash

$ export ROS_MASTER_URI=http://ubiquityrobot:11311

$ rosrun keyboard keyboard

A small window whose title is "ROS keyboard input" should be running. Make sure the keyboard window has the focus and then press the '3' key to start mission 3.

Video of Rodney running mission 3 (Dog detection)

Points of Interest

In this article, we have not only looked into using the Object Detection API and pre-trained models, but also integrated them into an existing project to use in a practical way.

History

- 2018/12/01: Initial release

- 2018/12/11: Subsection change

- 2019/04/28: Fixed broken link to Raspberry Pi image instructions