Source code can be downloaded only from GIT repository because it has more than 100MB and CodeProject only allows 10.

Preface

The ideas presented in this article have been implemented in my incipit search engine for RISM catalogue which can be found here. The project uses ASP.NET Core, Entity Framework, Blazor, Knockout.js, Manufaktura Controls and MySQL database. The full source code can be found at https://github.com/manufaktura-controls/rism. You can read about other technologies used in this project in my other articles here.

The Problem

Many catalogues of written music such as Oskar Kolberg’s Catalogue or Répertoire International des Sources Musicales (RISM) contain incipits, that is short fragments of music material written in musical notation. These fragments allow the user to search musical sources by musical criteria such as melody and rhythm.

In this article, I am going to discuss querying by melody. The main criteria that should be met by such a search are:

- Searching with transpositions – the same melody can be written in different keys and the search engine should find results regardless of key,

- Ordering results by similarity – variants of the desired melody have to be placed in results and ordered by similarity to the query.

Various approaches have been made to this topic among which it is worth mentioning two:

The main disadvantage of these two methods is that every query is traversing all the records in the database. This effects in poor performance on large sets of data. This is not the case in Kolberg’s Catalogue because the size of the dataset is relatively small. Monochord Project, however, must be deployed on multi-core system and use parallel processing.

The solution to this problem is to pre-filter data before calculating similarity. In this article I will explain how I did this in my search engine for RISM data (http://musicalsources.org/).

Searching Musical Incipits in Metric Space

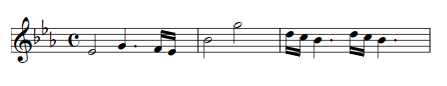

In this approach, we take into account only the intervals, that is, the distances between the sounds expressed in halftones. Each incipit can be represented as a vector in n-dimensional carthesian space where n is the number of intervals. For example this melody:

Can be expressed as a sequence of numbers: 4, -2, -2, 7, 9, -5, -2, -2, 4, -2, -2.

The similarity between two melodies is expressed as a distance between points in space that represent these two melodies. We calculate it using this formula:

We want to calculate the similarity to this melody:

Which is expressed as a sequence of 4, 2, 2. We take into account only the number of intervals that appear in the query so the distance between two melodies is:

To express the similarity in percentage (which is more legible to users) we have to assume that a specific arbitrary distance equals 0% and a distance of 0 equals 100%. We calculate the similarity from the following formula:

If we assume that max distance is 12, the similarity score equals 52.8%.

These calculations have to be done for every record in the database before the results are sorted. Now I am going to show how to filter the dataset before calculating similarity and sorting.

Locality-Sensitive Hashing (LSH)

LSH algorithm divides space into smaller cells than can be used to pre-filter our data because similar melodies fall in the same cell. To explain how the algorithm works we need first to introduce some concepts: space, plane, plane group and LSH hash.

Space is a set that contains all the incipits which are described as vectors or points. Space has a number of dimensions that equals the number of intervals in the melody. For my purposes I use 1, 2, 3, 4, 5 and 6-dimensional spaces. If the melody has more than 6 intervals I only take the first 6 into account.

Plane (or hyperplane) is a space that has 1 dimension less than the space that surrounds it (ambient space). It always divides the higher-dimensional space in two. This table should clarify this concept:

| No of dimensions of space | No of dimensions of plane |

| 1 (line) | 0 (point) |

| 2 (plane) | 1 (line) |

| 3 (space) | 2 (plane) |

| 4 (4-dimensional hyperspace) | 3 (space) |

| 5 (5-dimensional hyperspace) | 4 (4-dimensional hyperspace) |

| 6 (6-dimensional hyperspace) | 5 (5-dimensional hyperspace) |

If we want a plane to pass through the center of the coordinate system we can describe it as a single vector. This approach, however, will favor smaller intervals – bigger intervals will fall into larger “cells”. To make the hash distribution equal, we can describe plane as two vectors: the first defines the “alignment” of the plane and the second defines the translation.

For every point in space, we can determine if it is on the one side or on the other side of the plane. We calculate it using a dot product:

public static double DotProduct(Vector vector1, Vector vector2)

{

if (vector1.Length != vector2.Length) throw new ArgumentException("Lengths do not match.");

var sum = 0d;

for (var i = 0; i < vector1.Length; i++)

{

sum += vector1[i] * vector2[i];

}

return sum;

}

The goal of the algorithm is to generate a fixed number of random planes. Then, for each point (a melody), we determine its relation to every plane. If the point is on one side of the plane, we write 0 or if the point is on the other side of the plane we write 1:

public long ComputeHash(Vector point)

{

long hash = 0;

long orderOfMagnitude = 1;

foreach (var plane in Planes)

{

var pointCopy = point.Clone();

if (plane is TranslatedVector translatedPlane) pointCopy =

pointCopy.Translate(new Vector(translatedPlane.Translation).Invert());

hash += (GetSideOfAPlane(pointCopy, plane) ? 1 : 0) * orderOfMagnitude;

orderOfMagnitude *= 2;

}

return hash;

}

private bool GetSideOfAPlane(Vector point, Vector plane)

{

return Vector.DotProduct(point, plane) > 0;

}

We can write the result as a binary number like 10010 or a decimal number like 18. This is a LSH hash. Note that we have to store separate hashes for every number of dimensions. We can’t just take a higher-dimensional space and fill the remaining dimensions with zeros because zero is treated as a perfect unison.

A plane group is a concept that can be used to avoid situations when similar melodies accidentally fall into wrong cells. We create a few groups of planes and calculate hashes separately for each group so each melody has more than 1 hash. This is optional as it leads to better search accuracy but lower performance.

Effect on Performance

Experiment was done on MySQL database. This is the execution plan of a query without LSH optimization. As you can see the whole table is scanned and then the rows are ordered:

SELECT i.Id, i.MusicalNotation, i.Clef, i.KeySignature, i.TimeSignature,

i.CaptionOrHeading, ms.ComposerName, ms.Id, i.TextIncipit, ms.Title, i.VoiceOrInstrument,

100 - (SQRT(POW(i.Interval1 - -8, 2) + POW(i.Interval2 - 5, 2) + POW(i.Interval3 - -7, 2) +

POW(i.Interval4 - 5, 2) + POW(i.Interval5 - -7, 2) +

POW(i.Interval6 - 2, 2))) * (100 / 12) as Relevance

from incipits i inner join musicalsources ms on ms.id = i.MusicalSourceId

order by Relevance desc LIMIT 100 OFFSET 0

Now we add filtering by spatial hash:

SELECT i.Id, i.MusicalNotation, i.Clef, i.KeySignature, i.TimeSignature,

i.CaptionOrHeading, ms.ComposerName, ms.Id, i.TextIncipit, ms.Title, i.VoiceOrInstrument,

100 - (SQRT(POW(i.Interval1 - -8, 2) + POW(i.Interval2 - 5, 2) +

POW(i.Interval3 - -7, 2) + POW(i.Interval4 - 5, 2) +

POW(i.Interval5 - -7, 2) + POW(i.Interval6 - 2, 2))) * (100 / 12) as Relevance

from incipits i inner join musicalsources ms on ms.id = i.MusicalSourceId

WHERE i.Hash6d = 78126898370

order by Relevance desc LIMIT 100 OFFSET 0

The execution plan looks like this:

Note that LIMIT 100 OFFSET 0 doesn’t improve performance in any of the queries because sorting forces all rows to be scanned. Sorting in the second query is faster because it operates on pre-filtered subset of records.

This table shows how locality-sensitive hashing affects performance of the queries:

Query

(intervals) | No of dimensions | No of planes | Without LSH [s] | With LSH hash [s] | With LSH hash

on indexed column [s] |

| 4 3 5 -1 | 4 | 10 | 5,44 | 4,84 | 5,48 |

| 4 3 5 -1 -4 | 5 | 10 | 5,44 | 5,14 | 7,20 |

| 4 3 5 -1 | 4 | 20 | 5,46 | 4,31 | 3,48 |

| 4 3 5 -1 -4 | 5 | 20 | 5,66 | 4,39 | 2,64 |

| 4 3 5 -1 | 4 | 40 | 5,60 | 4,61 | 3,90 |

| 4 3 5 -1 -4 | 5 | 40 | 5,87 | 4,75 | 2,20 |

| -8 5 -7 5 -7 | 5 | 40 | 5,78 | N/A | 0,02 |

| -8 5 -7 5 -7 2 | 6 | 40 | 5,93 | N/A | 0,17 |

As can be seen, pre-filtering data with spatial hash reduces query time in most cases. Applying index on hash column vastly reduces query time in most queries but can increase the cost in some cases. The best effects are achieved when user enters a rarely occurring melody. If the user searches for common interval patterns like scales or arpeggiatios the effect on performance can be worse because many melodies share the same spatial hash.