Introduction

Why even make a regular expression engine when .NET includes a rich regular expressions library?

First of all, there are two major classes of regular expression engine - backtracking and non-backtracking. Most modern regular expression engines do backtracking. Backtracking engines have greater expressive power than non-backtracking engines, but at the cost of performance.

Why make a non-backtracking engine?

Well, for starters, they out-perform the backtracking engines on short strings over and over. Secondly, they are more suitable for operating directly on a streaming source, whereas backtracking regex engines generally require the text to be preloaded into memory.

More generally, the style of regular expression engine provided by .NET is geared toward matching patterns in long strings. It's not as suitable for lexing tokens out of a stream. Lexing is of primary importance to parsers, and so the regular expressions used for lexing terminals are often implemented using a non-backtracking engine.

Background

Lexing is the process of breaking an input stream of characters into "tokens" - strings of characters that have a "symbol" associated with them. The symbol indicates what type of string it is. For example, the string "124.35" might be reported as the symbol "NUMBER" whereas the string "foo" might be reported as the symbol "IDENTIFIER".

Parsers typically use lexers underneath, and then compose the incoming symbols into a syntax tree. Because lexers are called in core parsing code, the lexing operations must be reasonably efficient. The .NET regular expression isn't really suitable here, and while it can work, it actually increases code complexity while diminishing performance.

Included in this project is a file called "FA.cs" that contains the core code for a regular expression engine using finite automata which resolves to the humble yet ubiquitous state machine.

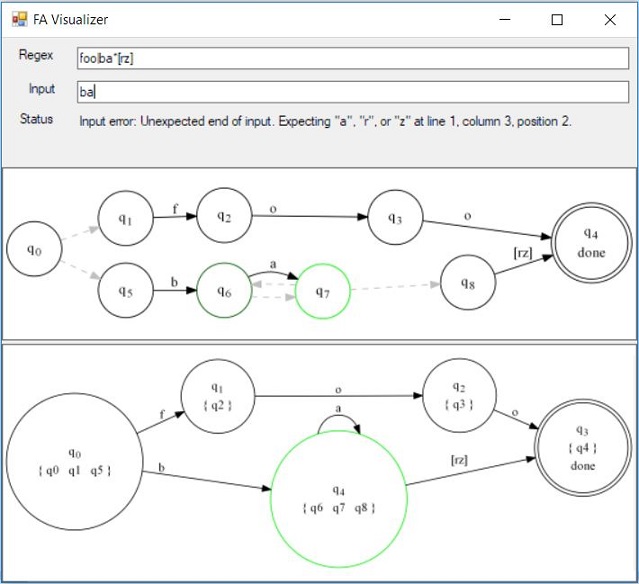

Finite state machines are composed of graphs of states. Each state can reference another state using either an "input transition" or an "epsilon transition". An input transition consumes the specified input before moving to the destination. An epsilon transition moves to a destination state without consuming or examining any input. A machine with one or more epsilon transitions is considered "non-deterministic" (NFA) and may be in more than one state at any given time. Non-deterministic state machines are more complicated and less efficient, but fortunately, there is a deterministic state machine (DFA) for any non-deterministic state machine, and an algorithm to turn an NFA into a DFA using a technique called powerset construction - math that is out of the scope here to explore.

The closure of a state is the state itself and the unique set of all states reachable from that state, on either an epsilon or input transition. The closure represents the state machine indicated by the root state. These graphs can be recursive.

The graph for a state machine matching "foo" is just below:

The closure of q0 is { q0, q1, q2, q3 } because each of those states are connected to q0 either directly or indirectly.

Each time a black arrow is crossed, the input must match the character(s) above the arrow to proceed along that path. Once a double circle (q3) is encountered, the machine "accepts" the input as valid/matching and returns the token under the state name, in this case "foo" is under q3 so it is what will be returned once the input is matched.

Now let's match an identifier of the form [A-Z_a-z][0-9A-Z_a-z]*

As you can see, looping is fairly straightforward.

Now let's get useful. Lexers (aka tokenizers) distinguish between one of many different patterns of input. Let's build one that represents an identifier, integer or a space.

You'll note that there are two ways to match an int (q2, q4), but they each return the same symbol "int" as a result.

Each of the machines presented was deterministic (each is a DFA). A DFA machine will only ever be in one state at a time. There are also non-deterministic machines, or NFAs, which can be in several states at the same time!

You'll note this machine has gray dashed lines in it. These are epsilon transitions or transitions on epsilon. Epsilon simply means "no input" in this context. Every time a machine encounters a dashed arrow, it is automatically in the state pointed to by that arrow as well as itself. It can be said that it transitions on no input.

Starting out in q0 also puts you in q1, q4, q5, q7, q8 and q11 as well! This set of states, that is, the set of states reachable from this state on epsilon transitions is called the epsilon closure.

These NFA machines are easier to compose/build out of several other machines, but harder to run because they're more complex.

As mentioned, there's a DFA machine for every NFA machine, and an algorithm for converting an NFA to a DFA.

This code allows you to painlessly create these machines, to make pretty graphs like the above, (with the help of Graphviz), to generate super fast code to run a given state machine, to run a state machine at runtime without generating the code first, to translate regex expressions to machines and back again, and to otherwise examine and compose the machines.

Using the Code

Below is included in the sample project, and heavily commented to show you the basics of using this library:

var lexer = FA.Lexer(

FA.Parse(@"[A-Z_a-z][0-9A-Z_a-z]*", "id"),

FA.Parse(@"0|([\-]?[1-9][0-9]*)", "int"),

FA.Parse(@" ", "space")

);

lexer.TrimNeutrals();

var dfaLexer = lexer.ToDfa();

dfaLexer.TrimDuplicates();

Console.WriteLine("Rendering graphs");

try

{

lexer.RenderToFile(@"..\..\..\lexer_nfa.jpg");

dfaLexer.RenderToFile(@"..\..\..\lexer_dfa.jpg");

}

catch

{

Console.WriteLine("GraphViz is not installed.");

}

Console.WriteLine("Generating C# Code");

using (var tw = new StreamWriter(@"..\..\..\Generated.cs"))

{

tw.WriteLine("namespace {0}", typeof(Program).Namespace);

tw.WriteLine("{");

tw.WriteLine("partial class Program {");

dfaLexer.WriteCSharpTryLexMethodTo(tw, "Value");

tw.WriteLine();

dfaLexer.WriteCSharpLexMethodTo(tw, "Value");

tw.WriteLine();

tw.WriteLine("}");

tw.WriteLine("}");

}

var test = "foo 456 bar 123 baz";

Console.WriteLine("Runtime Lexing:");

var pc = ParseContext.Create(test);

pc.EnsureStarted();

try

{

while (-1 != pc.Current)

{

var tok = dfaLexer.Lex(pc);

Console.WriteLine("Token: {0}, Value: {1}", tok.Key, tok.Value);

}

}

catch(ExpectingException eex)

{

Console.WriteLine("Error: {0}", eex.Message);

}

Console.WriteLine();

Console.WriteLine("Compiled Lexing:");

pc = ParseContext.Create(test);

pc.EnsureStarted();

try

{

var sb = new StringBuilder();

while (-1 != pc.Current)

{

var tok = LexValue(pc,sb);

Console.WriteLine("Token: {0}, Value: {1}", tok.Key, tok.Value);

}

}

catch (ExpectingException eex)

{

Console.WriteLine("Error: {0}", eex.Message);

}

Let's take a look at the generated code now:

q1:

if((pc.Current>='0'&& pc.Current<='9')||

(pc.Current>='A'&& pc.Current<='Z')||

(pc.Current=='_')||

(pc.Current>='a'&& pc.Current<='z')) {

sb.Append((char)pc.Current);

pc.Advance();

goto q1;

}

return new System.Collections.Generic.KeyValuePair<System.String,string>("id",sb.ToString());

As you can see, this is state q1, which corresponds to the last part of our "id" token in the graph above.

You can see the output transitions from this state encoded into the if.

You can see the arrow pointing back to itself using the goto.

You can see that if the if condition isn't satisfied, it ends with a successful result that returns a token.

All of these properties are reflected in the graph above if you look for them.

Points of Interest

Generating regular expressions - textual representation - from an FA is non-trivial. Parsing them from a textual representation is trivial. This is almost exactly the opposite of how syntax trees usually are in terms of coding them.

The generated DFAs are an order of magnitude faster in release builds than debug builds. I still don't know why. Regardless, they're still generally the fastest option in any build configuration.

The table based lexer can be faster than the pure generated lexer for large state machines, but it takes a bit more memory. The generated lexer is fast for smaller lexers. After more than 100 states it's a good idea to test the performance.

GraphViz Dot is deceptively complicated and expressive.

It took way too many iterations of this design over the years to get one I was happy with that handled general use cases well, including error handling. I finally got it fast, consistent, and easy in the right combinations.

History

- 26th March, 2019: Initial release

- 30th March, 2019: Performance improvements, feature adds, FA visualizer project, and a simple expression evaluator

- 31st March, 2019: Added table based lexer and generation methods