Introduction

Managed memory in .NET is separated into "stack" memory and "heap" memory. The most important types of "heap" are SOH (Small Object Heap) and LOH (Large Object Heap). From the names of memory types, it becomes clear that SOH stores only small objects and LOH stores large objects. In this article, I will consider a possible problem with LOH memory. Other memory types are out of the scope of this article.

LOH

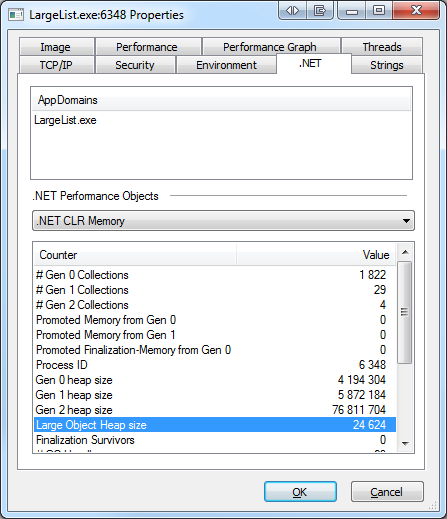

How does the CLR decide which objects must be stored in LOH and which objects must be stored in SOH? Unfortunately, I couldn't find an exact answer to this question, but my own experiments and investigation shows that an object is created in LOH when the object size is more than 80000 bytes. Possibly, Microsoft decided to use this size of memory because tests showed a balance between performance and memory usage, but it's a question to guys from Microsoft. The size of the memory can be obtained programmatically, or you can use some tools, "Process Explorer" for example.

Some LOH features and problems associated with these features

- Objects in LOH cannot be moved; it means that memory becomes fragmented and calls to memory become slower.

- LOH memory only increases and never decreases, even if GC has finished garbage collection. This means that, you could get

OutOfMemoryExceptions. This problem is particularly there in x86 machines where memory is limited, with only 3 GB for each process.

Such problems can occur only if you use large collections of structures. It's not a good practice to create a lot of structures, but what can we do if we already have large arrays with millions of records and all these records have value types? To begin with, we can try to replace all keywords from 'struct' to 'class' in our solution. For most types of applications, this can solve the problem, but we will get another problem: a lot of NullReferenceExceptions will haunt us throughout our solution. My solution for this problem is simple: we can use a paged array (an array of arrays) instead of a simple, one dimensional array.

LargeList implementation

First of all, we should calculate the object size to decide if we should extend an array for this object or use an one dimensional array. The 'sizeof' operator can be used only in an unmanaged context, but the .NET Framework provides a 'Marshal' static class with a 'SizeOf' method in it, and this is exactly what we need. Here is a method that returns the size of any object type.

public static int GetSize<T>()

{

Type type = typeof(T);

int result;

if (type.IsValueType)

{

result = type.IsGenericType ?

Marshal.SizeOf(default(T)) : Marshal.SizeOf(type);

}

else

{

result = IntPtr.Size;

}

return result;

}

All that is needed now is to just implement the IList<T> interface correctly with its methods and properties, and store the collection in a paged array (see the '_items' field in the 'LargeList' class).

public class LargeList<T> : IList<T>

{

private const int DefaulPageSize = 80000;

private readonly int _objectSize;

private readonly int _itemsInPage;

private T[][] _items;

private int _count;

private int _pagesCount;

...

}

In the download file of this article, you can find a LargeList with full implementation and unit tests. I think it's not difficult to understand the code of 'LargeList', especially because 'LargeList' has standard methods of 'IList' and some helpful methods that are very simple. I have done some performance testing and compared the performance of LargeList and the .NET List. The results are not comforting: the standard .NET List from the System.Collections.Generic namespace worked much faster than a LargeList several times. I think it happens because LargeList doesn't have a 'Capacity' property and relocates memory for each Insert/Update/Delete operation. You can implement Capacity in LargeList yourself, or wait till I do it. I'm planning to implement 'Capacity' and do some performance testing; also, I'm planning to implement some kind of sorting in LargeList and improve performance.

Thanks for reading, and hope this article can help somebody save time; but my advice - do not use structures at all.

History

- 1.0 - Now

LargeList supports the 'Capacity' property and doesn't allocate memory after each Insert/Delete.