Note that this supersedes the JsonUtility project.

Introduction

Tuples are a fixture in modern code. Nearly every language offers them in one form or another, usually as associative arrays, and sometimes as templates or generics.

JSON itself is an interchange format which lends itself to representing simple data in nested associative arrays and lists, so you can build entire nested "tuple" structures and represent them as JSON.

JSON has many other uses as well, particularly as a repository and wire format, but we're more interested in the tuple aspect of its structure here.

The main limitation of JSON is that it's so simple it handles very few data types. Most notably, it has no pure integer type, and there's no way to embed binary data directly and efficiently. It's also somewhat more verbose than it needs to be: it requires quotes around field names, for example.

Obviously, we aren't here to invent a new wire format, but there's no harm in adding some syntactic sugar to the JSON grammar as long as we can emit proper JSON on request. So we can drop the quotes around field names, extend the grammar to support integer values implicitly, and loosen some of the restrictions around string escaping and quoting.

For anything else, like binary fields, we can either avoid rendering them to JSON (keeping them exclusive to the native struple format) or simply return a best-effort approximation of the field as a JSON-compliant value.

Also, we may want to parse fragments - like a field: "value" pair, for example, so we don't want the JSON limitation of a document being rooted by an object { }.

The important thing is - JSON data should work with our code out of the box. Struples are a superset of the JSON grammar - basically an extended JSON syntax that's about 80% syntactic sugar and 20% extensions with no JSON counterpart.

To be properly useful, we need a way to read struples from and write them to a textual format, and a way to query and manipulate the resulting structures.

Struples use .NET IDictionary<string,object> and IList<object> implementations to store the in-memory trees. Combined with a few extension methods and DLR dynamic object support, this makes querying the in-memory trees painless and as familiar as traversing any other structure in C#, and gives struples an effective document object model.
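To illustrate the shape of such a tree, here is a minimal sketch that uses only BCL types. It does not use the Struple class itself: `TreeSketch`, `Field` and `BuildSample` are hypothetical names invented for this example, and ExpandoObject merely stands in for a dictionary type that supports both IDictionary<string,object> and dynamic access.

```csharp
using System;
using System.Collections.Generic;
using System.Dynamic;

public static class TreeSketch
{
    // Hypothetical helper (not the library's Get): look up a field on a node,
    // returning null when the node is not a dictionary or lacks the field.
    public static object Field(object node, string name)
    {
        var dict = node as IDictionary<string, object>;
        object value;
        return dict != null && dict.TryGetValue(name, out value) ? value : null;
    }

    // Builds the equivalent of:
    // {id: 1, firstname: 'Honey', roles: ['admin', 'editor'], manager: {firstname: 'Sam'}}
    public static IDictionary<string, object> BuildSample()
    {
        // ExpandoObject implements IDictionary<string, object> and supports dynamic access.
        IDictionary<string, object> manager = new ExpandoObject();
        manager["firstname"] = "Sam";

        IDictionary<string, object> root = new ExpandoObject();
        root["id"] = 1;
        root["firstname"] = "Honey";
        root["roles"] = new List<object> { "admin", "editor" };
        root["manager"] = manager;
        return root;
    }

    public static void Main()
    {
        var root = BuildSample();

        // Dictionary-style traversal through the hypothetical helper
        Console.WriteLine(Field(Field(root, "manager"), "firstname"));

        // Lists are plain IList<object>
        var roles = (IList<object>)root["roles"];
        Console.WriteLine(roles[1]);

        // The same tree through the DLR
        dynamic employee = root;
        Console.WriteLine(employee.manager.firstname);
    }
}
```

Because every node is either a dictionary, a list, or a scalar, ordinary casts and indexers are enough to walk the tree; the dynamic view is a convenience on top of the same objects.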

There is a pull parser, StrupleTextReader, similar to XmlTextReader, for efficiently reading tuples from a streaming source. Besides the basic reading, skipping and parsing methods, the parser contains some rudimentary query methods for selecting an item by field.
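For readers unfamiliar with the pull model, the sketch below shows it with the BCL's XmlReader, since the article only shows one StrupleTextReader member (ParseSubtree). It is an analogy, not the StrupleTextReader API; `PullSketch` and `ReadNames` are hypothetical names. The caller drives the reader forward node by node and keeps only what it needs, so the whole document is never held in memory.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Xml;

public static class PullSketch
{
    // Collect the text of every <name> element without building a document tree.
    public static List<string> ReadNames(TextReader input)
    {
        var names = new List<string>();
        using (var reader = XmlReader.Create(input))
        {
            while (!reader.EOF)
            {
                if (reader.NodeType == XmlNodeType.Element && reader.Name == "name")
                {
                    // Reads the content and leaves the reader on the node after
                    // </name>, so we must not call Read() again here.
                    names.Add(reader.ReadElementContentAsString());
                }
                else
                {
                    reader.Read();
                }
            }
        }
        return names;
    }

    public static void Main()
    {
        var xml = "<shows><show><name>Burn Notice</name></show></shows>";
        foreach (var name in ReadNames(new StringReader(xml)))
            Console.WriteLine(name);
    }
}
```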

Any returned struple can be converted to a string, written to a stream, or appended to a string builder, so writing is as efficient as parsing, regardless of circumstance.
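One way to support all three targets with a single routine is to write against TextWriter, which is also what the StrupleUtility.WriteTo and WriteJsonTo calls later in this article accept (Console.Out). The sketch below is not the library's writer: `WriterSketch` and `WriteJson` are hypothetical names, and rendering byte[] as a base64 string is only an assumption about what a "best effort" JSON value might look like.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.IO;
using System.Text;

public static class WriterSketch
{
    // Write a tree of dictionaries, lists and scalars as strict JSON.
    public static void WriteJson(object value, TextWriter w)
    {
        if (value == null) { w.Write("null"); return; }
        var s = value as string;
        if (s != null) { WriteString(s, w); return; }
        if (value is bool) { w.Write((bool)value ? "true" : "false"); return; }
        var bytes = value as byte[];
        // Assumption: binary has no JSON counterpart, so fall back to base64 text.
        if (bytes != null) { WriteString(Convert.ToBase64String(bytes), w); return; }
        var dict = value as IDictionary<string, object>;
        if (dict != null)
        {
            w.Write('{');
            var first = true;
            foreach (var field in dict)
            {
                if (!first) w.Write(',');
                first = false;
                WriteString(field.Key, w); // strict JSON always quotes field names
                w.Write(':');
                WriteJson(field.Value, w);
            }
            w.Write('}');
            return;
        }
        var list = value as IList<object>;
        if (list != null)
        {
            w.Write('[');
            for (var i = 0; i < list.Count; i++)
            {
                if (i > 0) w.Write(',');
                WriteJson(list[i], w);
            }
            w.Write(']');
            return;
        }
        // Anything left is assumed to be a number.
        w.Write(Convert.ToString(value, CultureInfo.InvariantCulture));
    }

    static void WriteString(string s, TextWriter w)
    {
        w.Write('"');
        foreach (var c in s)
        {
            if (c == '"' || c == '\\') { w.Write('\\'); w.Write(c); }
            else if (c < ' ') w.Write("\\u" + ((int)c).ToString("x4"));
            else w.Write(c);
        }
        w.Write('"');
    }

    public static void Main()
    {
        var tree = new Dictionary<string, object> { { "id", 1 } };

        // Same routine, three destinations: a StringBuilder, a string, the console.
        var sb = new StringBuilder();
        using (var sw = new StringWriter(sb)) WriteJson(tree, sw);
        var text = sb.ToString();
        WriteJson(tree, Console.Out);
        Console.WriteLine();
    }
}
```

A StreamWriter over a file or network stream can be passed in the same way, so no intermediate string is needed for large outputs.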

You'll never have to deal with an entire document loaded into memory if you don't want to, but you can. Because of this, this code should scale well, as long as it's used appropriately.

Speaking of performance, there's an important consideration here - well-formedness.

The struple parser is built for speed and bulk data processing. Because almost all JSON data is machine-generated, we can assume in good faith that the data is well-formed, and the struples code does so during many phases of parsing and querying. The upside is increased performance; the downside is frailty in the face of invalid JSON data. The performance benefit is almost always worth the risk.
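To make the trade-off concrete, here is a minimal sketch of the kind of shortcut a parser can take when it trusts its input. It is not the struples implementation: `SkipSketch` and `SkipContainer` are hypothetical names, and the sketch only understands double-quoted JSON strings. It skips a whole object or array by counting nesting depth, without checking that each closer matches its opener or that the contents are valid.

```csharp
using System;
using System.IO;

public static class SkipSketch
{
    // Skip one object or array, starting at its opening bracket.
    // Assumes well-formed input: brackets are counted, not matched.
    public static void SkipContainer(TextReader r)
    {
        var depth = 0;
        int c;
        while ((c = r.Read()) != -1)
        {
            if (c == '"')
            {
                // Skip the string body so brackets inside strings are ignored.
                while ((c = r.Read()) != -1 && c != '"')
                    if (c == '\\') r.Read(); // skip the escaped character
            }
            else if (c == '{' || c == '[')
            {
                depth++;
            }
            else if (c == '}' || c == ']')
            {
                if (--depth == 0) return;
            }
        }
    }

    public static void Main()
    {
        var r = new StringReader("{\"a\":[1,{\"b\":\"}\"}],\"c\":2} tail");
        SkipContainer(r);
        Console.WriteLine(r.ReadToEnd()); // prints " tail"
    }
}
```

The loop does almost no work per character, which is where the speed comes from. The cost is that malformed input such as `{"a":]` is accepted silently instead of being reported.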

Using the Code

Briefly, an example:

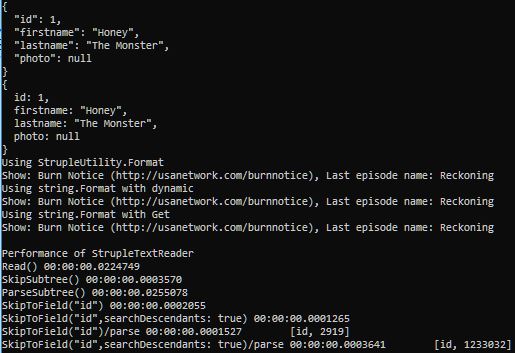

    // A struple literal: unquoted field names, single-quoted strings,
    // and a ^-prefixed photo value that is read back as a byte[] below.
    string struple = "{id: 1,firstname: 'Honey',lastname: 'Crisis', photo: ^iVBORw0KGgoAAAANS... }";

    var doc = Struple.Parse(struple) as Struple;

    // Query through the indexer
    var photo = doc["photo"] as byte[];
    var id = (int)doc["id"];
    var name = string.Concat(doc["firstname"], " ", doc["lastname"]);

    // Query and modify the same document through the DLR
    dynamic employee = doc;
    photo = employee.photo;
    id = employee.id;
    name = employee.firstname + " " + employee.lastname;
    employee.lastname = "The Monster";
    employee.photo = null;

    // Write the document as strict JSON, then in struple syntax
    StrupleUtility.WriteJsonTo(employee, Console.Out, " ");
    Console.WriteLine();
    StrupleUtility.WriteTo(employee, Console.Out, " ");
    Console.WriteLine();

    // Parse an ordinary JSON file with the pull parser
    using (var tr = new StrupleTextReader(
        new StreamReader(@"..\..\..\Burn Notice.2919.tv.json")))
    {
        doc = tr.ParseSubtree() as Struple;

        Console.WriteLine("Using StrupleUtility.Format");
        Console.WriteLine(
            StrupleUtility.Format(
                "Show: {name} ({homepage}), Last episode name: {last_episode_to_air.name}",
                (Struple)doc)
            );

        dynamic show = doc;
        Console.WriteLine("Using string.Format with dynamic");
        Console.WriteLine(
            string.Format(
                "Show: {0} ({1}), Last episode name: {2}",
                show.name,
                show.homepage,
                show.last_episode_to_air.name
                )
            );

        Console.WriteLine("Using string.Format with Get");
        Console.WriteLine(
            string.Format(
                "Show: {0} ({1}), Last episode name: {2}",
                doc.Get("name"),
                doc.Get("homepage"),
                doc.Get("last_episode_to_air").Get("name")
                )
            );

        Console.WriteLine();
        ReadSkipParsePerf();
    }

The query capabilities of StrupleTextReader are so limited as to be safely regarded as unfinished. However, what's there works.

History

- 4-1-2019 - Initial release