Glossary

- (A)NN - (Artificial) Neural Network

- HNN - Hopfield neural network

Introduction

This article describes the Hopfield model of a neural network. It covers the basic theory, the algorithm, and the program code, and shows how a Hopfield neural network can be applied to a pattern recognition problem.

Opening

I will not discuss NNs in general here. The main goal of this article is to describe the architecture and dynamics of the Hopfield neural network. The basic concepts of NNs, such as artificial neurons, synapses, weights, and connection matrices, are explained in countless books. If you want to know more about them, I advise you to start with Simon Haykin's book “Neural networks”. A Google search is also useful. Finally, you can try the very good article by Anoop Madhusudanan, here on CodeProject.

Hopfield neural network (a little bit of theory)

In ANN theory, in the simplest case (when the threshold function is equal to one), the Hopfield model is described as a one-dimensional system of N neurons, or spins ($s_i = \pm 1$, $i = 1, 2, \dots, N$), that can be oriented along or against the local field. The behavior of such a spin system is described by the Hamiltonian (also known as the energy of the HNN):

$$E = -\frac{1}{2} \sum_{i \ne j}^{N} T_{ij} s_i s_j$$

where $s_i$ is the state of the $i$th spin and

$$T_{ij} = \sum_{m=1}^{M} s_{mi} s_{mj}, \quad i \ne j$$

is an interconnection matrix organized according to the Hebb rule on M randomized patterns, i.e., on N-dimensional binary vectors $S_m = (s_{m1}, s_{m2}, \dots, s_{mN})$ ($m = 1, 2, \dots, M$). The diagonal elements of the interconnection matrix are assumed to be zero ($T_{ii} = 0$). The traditional approach to such a system is that all spins are assumed to be free and their dynamics are defined only by the action of a local field, along which they are oriented.

The algorithm of the HNN works as follows. The initial spin directions (neuron states) are set according to the components of the input vector. The local field $h_i(t)$, which acts on the $i$th spin at time $t$ (this field is produced by all the remaining spins of the NN), is calculated as:

$$h_i(t) = \sum_{j \ne i}^{N} T_{ij} s_j(t)$$

The spin energy in this field is $E_i(t) = -s_i(t) h_i(t)$. If the spin direction coincides with the direction of the local field ($s_i(t) h_i(t) > 0$), its position is energetically stable and the spin state remains unchanged at the next time step. Otherwise ($s_i(t) h_i(t) < 0$), the spin position is unstable, and the local field overturns it, passing the spin into the state $s_i(t+1) = -s_i(t)$ with the energy $E_i(t+1) < E_i(t)$. The energy of the NN is reduced each time any spin flips; i.e., the NN reaches a stable state in a finite number of steps. Under certain conditions, each stable state corresponds to one of the patterns added to the interconnection matrix.
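The claim that every flip lowers the energy can be checked with a short derivation. This is a sketch that assumes a symmetric matrix ($T_{ij} = T_{ji}$) with a zero diagonal, energy $E = -\frac{1}{2} \sum_{i \ne j} T_{ij} s_i s_j$, and local field $h_i = \sum_{j \ne i} T_{ij} s_j$. Collecting the terms of $E$ that contain $s_i$ gives

$$E = -s_i h_i + (\text{terms that do not depend on } s_i)$$

so flipping only the $i$th spin, $s_i \to -s_i$, changes the energy by

$$\Delta E = (+s_i h_i) - (-s_i h_i) = 2 s_i h_i$$

A spin is flipped only when $s_i h_i < 0$, hence $\Delta E < 0$ for every flip. Since the network has a finite number of states, the energy cannot keep decreasing forever and the dynamics must stop.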

The same in other words

So, digressing from the math, let’s consider the HNN from a practical point of view. Suppose you have M N-dimensional binary vectors (fig. 3), and you want to store them in a neural network.

Fig. 3. The set of binary patterns

In this case, you add them to the interconnection matrix by simple summing (fig. 4).

Fig. 4. The formation of the interconnection matrix
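A small worked example may help here. The two 3-dimensional patterns below are made up for illustration and are not taken from the figures. Each pattern contributes the products of its components, with the diagonal kept at zero:

$$S_1 = (1, -1, 1): \quad T^{(1)} = \begin{pmatrix} 0 & -1 & 1 \\ -1 & 0 & -1 \\ 1 & -1 & 0 \end{pmatrix}$$

$$S_2 = (1, 1, -1): \quad T^{(2)} = \begin{pmatrix} 0 & 1 & -1 \\ 1 & 0 & -1 \\ -1 & -1 & 0 \end{pmatrix}$$

The interconnection matrix is their element-wise sum:

$$T = T^{(1)} + T^{(2)} = \begin{pmatrix} 0 & 0 & 0 \\ 0 & 0 & -2 \\ 0 & -2 & 0 \end{pmatrix}$$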

Now the network is ready to work. You set some initial state of the NN and run the dynamical procedure. The properties of the HNN are such that during the dynamics it passes into a stable state that corresponds to one of the patterns, namely the pattern that is most similar to the initial state of the HNN.
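The store-and-recall procedure described above can be sketched in a few lines of C#. This is a minimal illustration on plain integer arrays, not the code of the demo project; the names HopfieldSketch, Store and Recall are assumptions made for this example.

```csharp
using System;

// Hypothetical helper class for illustration; not part of HopfieldNeuralNetwork.dll.
public static class HopfieldSketch
{
    // Build the interconnection matrix by summing the products of the
    // components of every pattern, keeping the diagonal at zero.
    public static int[,] Store(int[][] patterns, int n)
    {
        int[,] T = new int[n, n];
        foreach (int[] p in patterns)
            for (int i = 0; i < n; i++)
                for (int j = 0; j < n; j++)
                    if (i != j) T[i, j] += p[i] * p[j];
        return T;
    }

    // Starting from 'initial', flip every spin that opposes its local
    // field and repeat the sweep until no spin changes.
    public static int[] Recall(int[,] T, int[] initial)
    {
        int n = initial.Length;
        int[] s = (int[])initial.Clone();
        bool changed = true;
        while (changed)
        {
            changed = false;
            for (int i = 0; i < n; i++)
            {
                int h = 0;                       // local field on spin i
                for (int j = 0; j < n; j++) h += T[i, j] * s[j];
                if (h * s[i] < 0) { s[i] = -s[i]; changed = true; }
            }
        }
        return s;
    }
}
```

For example, after storing the two patterns (1, 1, 1, 1, -1, -1, -1, -1) and (1, -1, 1, -1, 1, -1, 1, -1), calling Recall with the first pattern distorted in its first component returns the first pattern again.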

Playing with demo

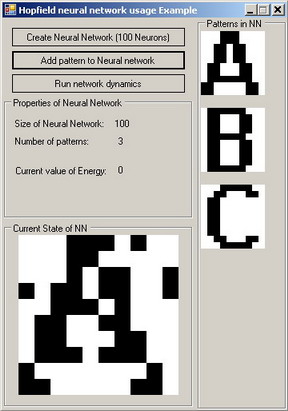

To see how it works in practice, run the demo project (HopfieldRecognizer.exe).

- In the main window, press the "Create Neural Network (100 Neurons)" button. The neural network will be created.

- Then press the "Add pattern to Neural Network" button and select any 10x10 image (you can find some in the ABC folder). Add, for example, 3 patterns that correspond to the A, B and C images.

- Select one of the added patterns (for example, A) by clicking on it and set the initial distortion level in percent (you can leave it at 10%).

- Press the "Run network dynamics" button. And here it is :)
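The distortion level in the steps above can be pictured as flipping a given percentage of the pattern's elements before the dynamics start. How the demo actually distorts a pattern is not shown in this article, so the sketch below is only one plausible way to do it; DistortionSketch and Distort are hypothetical names.

```csharp
using System;

// Hypothetical helper for illustration; the demo's own distortion code may differ.
public static class DistortionSketch
{
    // Return a copy of 'pattern' (values +1/-1) in which 'percent' percent
    // of the elements, at distinct random positions, are flipped.
    // Assumes 0 <= percent <= 100.
    public static int[] Distort(int[] pattern, int percent, Random rng)
    {
        int n = pattern.Length;
        int[] result = (int[])pattern.Clone();
        int flips = n * percent / 100;

        // A partial Fisher-Yates shuffle of the indices selects
        // 'flips' distinct positions.
        int[] idx = new int[n];
        for (int i = 0; i < n; i++) idx[i] = i;
        for (int i = 0; i < flips; i++)
        {
            int j = rng.Next(i, n);              // random index in [i, n)
            int tmp = idx[i]; idx[i] = idx[j]; idx[j] = tmp;
            result[idx[i]] = -result[idx[i]];
        }
        return result;
    }
}
```

With a 100-element pattern and a level of 10%, exactly 10 elements differ from the original.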

Diving into the code

Let's consider the object model of the neural network. It consists of two main classes: Neuron and NeuralNetwork. Neuron is a base class that contains the State property and the ChangeState() method. State is an Int32 number, but it actually takes only two values: +1 or -1. These values are also accessible from the static class NeuronStates, where NeuronStates.AlongField is equal to 1 and NeuronStates.AgainstField is equal to -1. ChangeState() receives the value of the field acting on the neuron and decides whether to change its own state. ChangeState() returns true if State was changed.

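    public class Neuron
    {
        // One shared generator. On older .NET Framework versions, creating a
        // new Random for every neuron in a tight loop can give all neurons
        // the same seed and therefore the same state.
        private static readonly Random random = new Random();

        private int state;

        // Spin state: NeuronStates.AlongField (+1) or NeuronStates.AgainstField (-1).
        public int State
        {
            get { return state; }
            set { state = value; }
        }

        // A new neuron starts in a random state.
        public Neuron()
        {
            int r = random.Next(2);
            switch (r)
            {
                case 0: state = NeuronStates.AlongField; break;
                case 1: state = NeuronStates.AgainstField; break;
            }
        }

        // Flips the state if it opposes the local field.
        // Returns true if the state was changed.
        public bool ChangeState(Double field)
        {
            bool res = false;
            if (field * this.State < 0)
            {
                this.state = -this.state;
                res = true;
            }
            return res;
        }
    }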

The NeuralNetwork class contains a typed list of neurons and methods for adding patterns and running the dynamics:

    public List<Neuron> Neurons;
    public void AddPattern(List<Neuron> Pattern)
    public void Run(List<Neuron> initialState)

The class constructor initializes all fields, creates the lists and arrays, and fills the interconnection matrix with zeros:

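    public NeuralNetwork(int n)
    {
        this.n = n;

        // Create n neurons and reset each one to the neutral state 0.
        neurons = new List<Neuron>(n);
        for (int i = 0; i < n; i++)
        {
            Neuron neuron = new Neuron();
            neuron.State = 0;
            neurons.Add(neuron);
        }

        T = new int[n, n];   // interconnection matrix
        m = 0;               // number of stored patterns

        // C# already zero-initializes new arrays; this loop only makes it explicit.
        for (int i = 0; i < n; i++)
            for (int j = 0; j < n; j++)
            {
                T[i, j] = 0;
            }
    }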

The AddPattern() and AddRandomPattern() methods add a specified (or randomly generated) pattern to the interconnection matrix:

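    public void AddPattern(List<Neuron> Pattern)
    {
        // Add the product of every pair of pattern components to the matrix;
        // the diagonal stays at zero.
        for (int i = 0; i < n; i++)
            for (int j = 0; j < n; j++)
            {
                if (i == j) T[i, j] = 0;
                else T[i, j] += (Pattern[i].State * Pattern[j].State);
            }
        m++;   // one more pattern stored
    }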
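AddRandomPattern() is mentioned above but its code is not listed. As a rough idea of what such a method has to do, here is a sketch on plain integer arrays; it is an assumption about the approach, not the library's actual implementation, and RandomPatternSketch, RandomPattern and AddToMatrix are hypothetical names.

```csharp
using System;

// Hypothetical helper for illustration; not the library's AddRandomPattern().
public static class RandomPatternSketch
{
    // Generate n spins, each +1 or -1 with equal probability.
    public static int[] RandomPattern(int n, Random rng)
    {
        int[] p = new int[n];
        for (int i = 0; i < n; i++)
            p[i] = rng.Next(2) == 0 ? 1 : -1;
        return p;
    }

    // Add the pattern to the matrix T in the same way AddPattern() does:
    // sum the pairwise products and leave the diagonal at zero.
    public static void AddToMatrix(int[,] T, int[] p)
    {
        int n = p.Length;
        for (int i = 0; i < n; i++)
            for (int j = 0; j < n; j++)
                if (i != j) T[i, j] += p[i] * p[j];
    }
}
```

Passing the Random instance in as a parameter keeps the sketch reproducible: the same seed gives the same pattern.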

The Run() method runs the dynamics of the HNN:

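    public void Run(List<Neuron> initialState)
    {
        this.neurons = initialState;
        int k = 1;   // number of spins flipped during the last sweep
        int h = 0;   // local field acting on the current neuron

        // Sweep over all neurons until a full sweep flips nothing.
        while (k != 0)
        {
            k = 0;
            for (int i = 0; i < n; i++)
            {
                // Local field produced by all the other neurons.
                h = 0;
                for (int j = 0; j < n; j++)
                    h += T[i, j] * (neurons[j].State);

                if (neurons[i].ChangeState(h))
                {
                    k++;
                    CalculateEnergy();
                    OnEnergyChanged(new EnergyEventArgs(e, i));
                }
            }
        }
        CalculateEnergy();
    }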
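Run() calls CalculateEnergy(), whose body is not shown in this article. A minimal sketch of the energy computation, assuming the energy is minus one half of the sum of T[i, j] * s[i] * s[j] over all pairs with i different from j, could look like this; EnergySketch and Energy are hypothetical names, and the library's own method may differ.

```csharp
// Hypothetical helper for illustration; not the library's CalculateEnergy().
public static class EnergySketch
{
    // Energy of state s for interconnection matrix T:
    // E = -1/2 * sum over i != j of T[i, j] * s[i] * s[j].
    public static double Energy(int[,] T, int[] s)
    {
        int n = s.Length;
        long sum = 0;
        for (int i = 0; i < n; i++)
            for (int j = 0; j < n; j++)
                if (i != j) sum += (long)T[i, j] * s[i] * s[j];
        return -0.5 * sum;
    }
}
```

For the single stored pattern (1, -1, 1), the pattern itself has energy -3, while the state (-1, -1, 1) has energy 1, so the stored pattern is the lower-energy state.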

Every time a spin changes its state, the energy of the system changes and the NN raises the EnergyChanged event. This event allows subscribers to track the NN state over time.

Using the code

To use this code in your project, add a reference to HopfieldNeuralNetwork.dll. Then create an instance of the NeuralNetwork class and, optionally, subscribe to the EnergyChanged event:

    NeuralNetwork NN = new NeuralNetwork(100);
    NN.EnergyChanged += new EnergyChangedHandler(NN_EnergyChanged);

    private void NN_EnergyChanged(object sender, EnergyEventArgs e)
    {
        // React to the energy change here.
    }

After that, you need to add some patterns to the interconnection matrix.

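    // new List<Neuron>(100) only reserves capacity, so the list must be filled.
    List<Neuron> pattern = new List<Neuron>(100);
    for (int i = 0; i < 100; i++)
        pattern.Add(new Neuron());   // random state; set State to your own pattern values
    NN.AddPattern(pattern);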

And finally, you can run the dynamics of the network:

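    // The initial state must also contain one neuron per network neuron.
    List<Neuron> initialState = new List<Neuron>(100);
    for (int i = 0; i < 100; i++)
        initialState.Add(new Neuron());   // random state; replace with your input
    NN.Run(initialState);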

Last word

The HNN was proposed in 1982, and it is not the best solution for the pattern recognition problem. It is very sensitive to correlations between patterns. If you try to add some very similar patterns to the matrix (for example, B and C from the ABC folder), they merge and form a new pattern called a chimera. It is also sensitive to the number of patterns stored in the interconnection matrix, which cannot exceed 10-14% of the number of neurons. In spite of these disadvantages, the HNN and its modern modifications are simple and popular algorithms.
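As a rough worked example of the capacity figure quoted above, for the 100-neuron network used in the demo ($N = 100$):

$$M_{\max} \approx (0.10 \text{ to } 0.14) \cdot N = (0.10 \text{ to } 0.14) \cdot 100 = 10 \text{ to } 14 \text{ patterns}$$

This is only an estimate; for strongly correlated patterns such as B and C, the practical limit can be lower.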