Introduction

There are many different research works done in the area of evolution computation, what caused a variety of different evolutional algorithms to appear. Many researchers studied these methods extensively and tried their application to the great range of tasks. It is a known fact, that there are many different problems exist, which impossible to solve precisely within a reasonable period of time using traditional methods. Also there are many problems, which do not have a formalized solution approach, what makes their solution very hard or even impossible using traditional methods. As an example is Traveling Salesman Problem (TSP), where it is required to find a shortest path around specified amount of cities, visiting all of them only once and returning back to the start city in the end. To some of such problems evolution computations may be applied, which in many cases allow to find a good solution within a reasonable period of time. These methods do not guarantee to find the exact solution of particular problem, but they can find a very good solution, which may be very close to the best solution. That is why these methods become rather popular for solving many different problems, which can not be solved (or their solution is too hard) with traditional methods.

In this article, a C# library for evolution computation will be discussed. The library implements several popular evolutional algorithms, like Genetic Algorithms (GA), Genetic Programming (GP) and Gene Expression Programming (GEP), and can be applied for solution of many different problems. The usage of this library is demonstrated on four samples: Function Optimization, Symbolic Regression (Approximation), Time Series Prediction, Traveling Salesman Problem. The main idea of the library was to make it flexible and reusable, what allows to use it for solving different problems. The article does not have the aim of detailed description of evolutional algorithms. Instead of this, the article presents a brief introduction into the idea of these algorithms and presents a list of references, which allows to study these methods in details.

Evolution computations

The history of genetic computations begins somewhere in the 1960-ies, when the first work on the topic was presented by John Holland, who presented the first ideas about Genetic Algorithms (GA) based on the idea of evolution. Since that time, there were a lot of research works done in the area of evolution computations, which cased many different methods to appear [1 ]. Most of these methods were studied extensively and applied to many different problems solving, producing very good performance on great part of them. Despite the rather long history of genetic computations, these methods are still actively studied and there are different new approaches appear, which broaden the amount of problems, which could be solved using these methods.

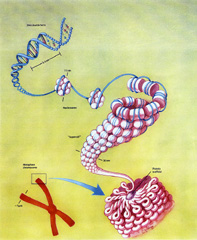

GA is based on the Darwinian principle of reproduction and survival of the fittest and analogs of naturally occurring genetic operations such as crossover (recombination) and mutation. This method operates with a population of individuals (chromosomes), where each of them encodes possible solution of a problem the genetic algorithm was applied to. In GA chromosomes are represented by fixed length strings (arrays of bits, numbers, etc), what makes the implementation of genetic operators very simple. Initially the population is initialized with random chromosomes, but then it starts evolving by applying such genetic operators like crossover, mutation and selection.

The simplest variant of crossover is one point crossover - a random point of two chromosomes is selected and these two chromosomes are exchanged with their parts:

Another popular variant of crossover operator is two point crossover - two random points are selected and chromosomes are exchanged with their parts between these two points. These two variants of crossover are the most popular in GA, but they are not the only existing. Actually there are many different variants of this operator, which vary from problem to problem. There are problems, were these two classical variants of crossover are no applicable at all, so they have their own custom variant specific for the problem.

The mutation operator operates with one chromosome only and it just changes chromosome randomly. The one point mutation changes only one gene in chromosome:

As in case of crossover, mutation operator also has many different variants, which are more specific to certain types of problems.

So, after the initial population is created, each iteration of the GA algorithm consists of the next steps:

- Crossover - random individuals are selected and a crossover operator is applied to them;

- Mutation - random individuals are selected and mutation operator is applied to them;

- Calculate fitness value of each individual;

- Selection - select individuals for the new generation.

The algorithm may be stopped after specified number of iterations or it may be stopped, when a reasonably good solution was found. Calculation of chromosome's fitness value is problem dependent - the value shows how good the chromosome is. The higher the value, the better the chromosome is, what gives it more chances to be selected into the next generation.

There are several selection operators exists [1 ]. The main idea of all of them is to select individuals for the next generation, giving more chances to better individuals - with higher fitness values. One of the most popular methods is called Elitism - certain amount of best chromosomes are selected to the next generations.

In 1992 John Koza proposed a new significantly new approach - Genetic Programming (GP) [2 ]. In GP individual population members (chromosomes) are not fixed length linear character strings that encode possible solution to the problem like in GA, but they are programs that, when executed, provide solution to the problem. These programs are expressed in GP as parse trees of varying sizes and shapes, what makes these methods flexible in their application to the wide range of problems. The difference in chromosomes representation is the main and almost the only difference between this method and GA. The overall Darwinian idea of survival stays the same, but there are changes in mutation and crossover operators and in fitness function calculation. Instead of mutation of an individual gene in a string chromosome, in GP mutation operator may regenerate as a single tree node, as a whole sub tree of the chromosome’s tree. The same happens with crossover – instead of exchanging with chromosome parts of the same length, in GP chromosomes exchange with their sub trees, which may differ as in size, as in shape. However it is always required to do some checking of chromosomes and trim them in case they are growing too long.

To compute fitness value of individual chromosome in GP, the chromosome is not just passed as value to some sort of function, which computes the fitness value. Instead of this, the program, which is represented by the chromosome, is executed and fitness value is calculated depending on the output of the program.

In 2001 Candida Ferreira introduced another one method called Gene Expression Programming (GEP) [3 ]. The method is similar in the idea with both Genetic Programming and Genetic Algorithms. On one hand the method also operates with programs, which after execution provide solution, so this makes it similar to GP. But the representation of these programs differs in GEP. Chromosomes in this method are not represented as trees, but these are linear entities of fixed length as in GA. This change in chromosome representation makes implementation of such genetic operators like mutation and crossover much simpler, but has some small constraints to make this operator safe.

| * + / a b c a |

| (a) | (b) |

| Chromosome representation in GP (a) and GEP (b) |

The above figure shows the difference in chromosome representation in GP and GEP methods. Both chromosomes encode the same program – algebraic expression (a + b) * (c / a). GP represents the chromosome as a parse tree of the expression, but GEP represents it as linear strings, where nodes of the above parse tree are written in the left-right top-down order. The GEP string can be easily transformed back to a parse tree, filling it also from left to right and from top to down, taking into account the information about amount of each function’s parameters.

Using the library

Creating the library, the main idea was to make it flexible and reusable, what allows its usage for solving different problems. The code of the library is not dependent to any particular problem, but implements general concepts of evolution computations and such algorithms like Genetic Algorithms, Genetic Programming and Gene Expression Programming. Such entities of evolution computation like population, chromosomes, selection methods and fitness functions are implemented as separate classes, what makes it easy to combine them together for solving particular problem. In most cases, library user will need only to define a fitness function for his problem and then define type of chromosome, selection algorithm and some other parameters, like population size, mutation and crossover rate, etc. In the case, if particular problem requires some specific variants of chromosomes or genetic operators like mutation and crossover, user can create his own chromosome class implementing IChromosome interface or deriving from one of already existing chromosome's classes. The same refers to selection methods and fitness functions - if somebody requires a custom selection algorithm or fitness function, he need create a class implementing ISelectionMethod or IFitnessFunction interface respectively. After creation of any custom classes, which implement the above defined interfaces, these classes will work with all other classes of the library, extending it and allowing to solve specific problems.

|

To demonstrate the use of this library, four samples will be described, which use different algorithms of evolution computation:

- Function optimization (Genetic Algorithms);

- Symbolic regression (Genetic Programming and Gene Expression Programming);

- Time Series Prediction (Genetic Programming and Gene Expression Programming);

- Traveling Salesman Problem (Genetic Algorithms).

Function Optimization

Function optimization is one of the classical problems for demonstrating Genetic Algorithms. To solve the problem with the help of this library, all you need is to define the optimization function, which is positive on the optimization range, and then create a genetic population, specifying desired parameters of the evolutional algorithm:

public class UserFunction : OptimizationFunction1D

{

public UserFunction( ) :

base( new DoubleRange( 0, 255 ) ) { }

public override double OptimizationFunction( double x )

{

return Math.Cos( x / 23 ) * Math.Sin( x / 50 ) + 2;

}

}

...

Population population = new Population( 40,

new BinaryChromosome( 32 ),

new UserFunction( ),

new EliteSelection( ) );

population.RunEpoch( );

In the above sample, a population of 40 chromosomes is created, where each of them is a binary chromosome of 32 bits length, an elite selection method is used and a fitness function is used, which is aimed for optimization of one-dimensional functions. In the above sample and all other samples, you will no see any clear references to Genetic Algorithms or other evolutional methods. Population creation is the same in all implemented genetic methods. The main thing, which determines the type of method being used, is the chromosome itself, because the chromosome defines the representation of problem solution and the implementation of genetic operators in use.

Symbolic regression (Approximation)

The aim of the symbolic regression problem is to find the best approximation function for the input data. To solve the problem the Genetic Programming or Gene Expression Programming methods could be used. Using both of these methods, it is possible to find an expression, which takes an X value and some constants as parameters and provides an output value, which is to close the real function's Y value.

The code to solve the problem is rather similar to the above code. We will use the same population class and the same selection method's class. The only different parts are the fitness function, what is obvious, because we have another problem, and the chromosome's class. To solve the task we can use as GPTreeChromosome class in case we would like to use Genetic Programming method, or GEPChromosome class in case we would like to use Gen Expression Programming.

double[,] data = new double[5, 2] {

{1, 1}, {2, 3}, {3, 6}, {4, 10}, {5, 15} };

Population population = new Population( 100,

new GPTreeChromosome( new SimpleGeneFunction( 6 ) ),

new SymbolicRegressionFitness( data, new double[] { 1, 2, 3, 5, 7 } ),

new EliteSelection( ),

0.1 );

population.RunEpoch( );

In the above sample, the function, which is going to be approximated, is defined with two-dimensional array of (X, Y) pairs. Another interesting thing of the above sample is the last argument of the Population class's constructor - the value specifies that 10% of new generation will consist of new random chromosomes, but 90% will be the best members of current generation.

Time Series Prediction

The Time Series Prediction problem is aimed to build a model, which predicts future function's values based on the history - on the past function's values. To achieve the task the model is build (trained) on the provided training data samples and, when the model starts producing satisfactory results on the training set, the model is used to predict future function's values.

double[] data = new double[13] { 1, 2, 4, 7, 11, 16, 22, 29, 37, 46, 56, 67, 79 };

double[] constants = new double[10] { 1, 2, 3, 5, 7, 11, 13, 17, 19, 23 };

int windowSize = 5;

Population population = new Population( 100,

new GPTreeChromosome( new SimpleGeneFunction( windowSize + constants.Length ) ),

new TimeSeriesPredictionFitness( data, windowSize, 1, constants ),

new EliteSelection( ) );

population.RunEpoch( );

As it can be seen, the code for time series prediction problem is almost the same as the code for symbolic regression - we only changed the fitness function and some parameters of the algorithm. This fact demonstrates the easy use of the library and its reusability.

Traveling Salesman Problem

The Traveling Salesman Problem is aimed to find the shortest path around the specified amount of cities, visiting each city only once and returning to the start city in the end. The problem is known as NP-hard problem and its solution with traditional methods may take extremely long time in cases of big amount of cities. However, Genetic Algorithms may be used to find a solution of the problem, which can be rather close to the exact solution, within a reasonable period of time.

Population population = new Population( populationSize,

new PermutationChromosome( citiesCount ),

new TSPFitnessFunction( map ),

new EliteSelection( )

);

Taking into account, that PermutationChromosome class is part of the library, we see, that it is required to create only new fitness function (TSPFitnessFunction) for the new problem and use the rest of the existing code to solve the new problem. However, it is possible to improve the performance of the algorithm. The default generic PermutationChromosome class allows to find the solution more or less good solution, but inheriting the class and overriding crossover operator will lead to better performance. The custom TSPChromosome class (see application source codes) implements so called greedy crossover, which allows to find better solution within shorter period of time.

For many years the Genetic Algorithms method have been providing the best results for solving Traveling Salesman Problem. The problem is so popular and actual, that each year a competition is held for the best method to solve the problem. And it is know, that in the end of 1990-ies, another approach was used for the problem solving and it demonstrated better performance. The new method also came from the world of Artificial Intelligence and is based on the idea of Ants Colonies (see Ant System (AS) and Ant Colony System (ACS) algorithms).

Conclusion

The four demonstrated samples show, that the library realized the initial aim - to make it flexible, extendable, reusable and easy to use. Although there are still much work to do, because of current vast range of evolutional computation methods, but still - the library can be used for solution of many different problems and can be easily extended to solve new ones. The work on the library helped me not only to get deeper knowledge of evolutional methods, but the result of it helped me in my research work on the Genetic Programming and Gene Expression Programming algorithms.

References

- Ajith Abraham, Nadia Nedjah and Luiza de Macedo Mourelle, Evolutionary Computation: from Genetic Algorithms to Genetic Programming // Genetic Systems Programming: Theory and Experiences, volume 13 of Studies in Computational Intelligence, pages 1-20. Springer, Germany, 2006.

- John R. Koza, Genetic Programming // Version 2 – Submitted August 18, 1997 for Encyclopedia of Computer Science and Technology.

- Ferreira, C., Gene Expression Programming: A new adaptive algorithm for solving problems // Complex Systems, Vol. 13, No. 2, pp. 87–129, 2001.

History

- [16.10.2006] - Initial publication of the article