Introduction

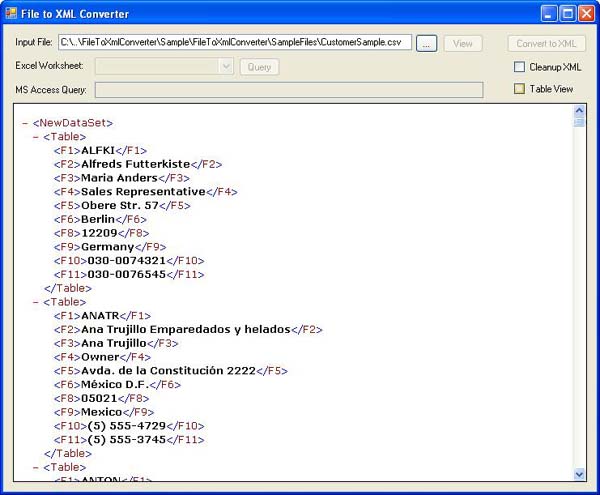

The AnyDataFileToXmlConverter utility is a Windows application that reads and converts data-files of various formats like Access databases, Excel spreadsheets, CSV files, tabbed-delimited files, etc., to the standard DataSet-compatible XML format used in .NET.

Background

A few months ago, I had a project that required data-collection, validation, and processing of data-files from a wide variety of formats. Due to the frequency of how the validation/processing of the data-files would be changing over time, and the need for standardization of the processing format, I decided to use the .NET DataSet-compatible XML format and then later XSLT to process/re-format the data. A number of the file formats could be automatically converted to this file format anyway (like Excel spreadsheets and Microsoft Access databases), but many of the other file formats (like tab-delimited, comma-delimited, pipe-delimited text files) would need to be reprocessed into the .NET DataSet-compatible XML format. The main class and this utility is a result of that work.

Using the Application

The AnyDataFileToXmlConverter utility is quick and easy to use - just open/load the file in the utility and the file will be automatically processed to the .NET XML format. For Excel spreadsheets, the worksheets in the workbook are enumerated, and a worksheet must be selected for processing to XML. In Microsoft Access databases, a query for the specific data to convert to XML must be specified before processing.

Several sample data-files are provided with the utility, which demonstrate the various common data-file formats that can be re-processed to XML. There are additional file-formats that can be processed (like pipe-delimited files), and the class can be easily modified to handle additional formats also. XML files can be loaded into the utility, but no processing is performed since they're already in the end-result format.

Also, there is an optional data-cleanup function that automatically removes any "junk" XML-nodes that are commonly created as a result of processing an Excel spreadsheet that contains empty or cleared rows at the end of a spreadsheet.

You can also change the output/results display from XML to a grid, for easier viewing and sorting.

Data-File Processing Examples

Comma-Separated File

Tab-Delimited File

Excel Spreadsheet

Microsoft Access Database

The AnyDataFileToXmlConverter Engine - How It Works

The main engine/processor of the AnyDataFileToXmlConverter utility is the RawFileConverter class, which uses the file-extension of the loaded file to determine which processing function to use to reprocess the file. For most of the file formats (with text-files being the exception), the Microsoft.Jet.OLEDB provider is used to automatically load the file into a DataSet, and then the XML is retrieved from the DataSet.

For text files, the file contents are evaluated to find the likely character delimiter between the data columns, and then each line of the file is split into columns according to the character delimiter. A DataTable is created and then loaded with all the data in the file, with each line in the file being converted to a DataRow and each field loaded into a column in that DataRow. Once the entire file has been processed to the DataTable, the XML is retrieved from the DataTable's DataSet object.

private static XmlDocument ConvertTextFile(string sFilePath)

{

XmlDocument xmlRaw = null;

StreamReader oSR = null;

try

{

DataSet dsTextFile = new DataSet();

DataTable dtTextFile = new DataTable();

DataRow drRows = null;

sFilePath = PreprocessNonStandardFiles(sFilePath);

char chrDelimiter = GetDelimiterCharacter(sFilePath);

oSR = new StreamReader(sFilePath);

oSR.BaseStream.Seek(0, SeekOrigin.Begin);

string sFirstLine = oSR.ReadLine();

bool bHeaderIsDataRow = false;

string[] sColumns = sFirstLine.Split(chrDelimiter);

if(sColumns.Length > MINIMUM_NUMBER_CSV_COLUMNS)

{

bHeaderIsDataRow = InitializeTableColumns(sColumns,

ref dtTextFile, true);

if (bHeaderIsDataRow == true)

{

oSR.BaseStream.Seek(0, SeekOrigin.Begin);

oSR.Close();

oSR = new StreamReader(sFilePath);

oSR.BaseStream.Seek(0, SeekOrigin.Begin);

}

}

dsTextFile.DataSetName = "NewDataSet";

dsTextFile.Tables.Clear();

dtTextFile.TableName = "Table";

dsTextFile.Tables.Add(dtTextFile);

while (oSR.Peek() > -1)

{

int iFieldIndex = 0;

string sLine = oSR.ReadLine();

string sLineTrimmed = sLine.Trim();

string[] sLineFields = sLine.Split(chrDelimiter);

if ((sLineFields.Length <= 0) ||

(sLineTrimmed.Length < MINIMUM_NUMBER_CSV_COLUMNS))

{

continue;

}

if (sLineFields.Length <= MINIMUM_NUMBER_CSV_COLUMNS)

{

continue;

}

if ((sLineFields.Length > dtTextFile.Columns.Count) &&

(sLineFields.Length > MINIMUM_NUMBER_CSV_COLUMNS))

{

if (dtTextFile.Rows.Count <= 0)

{

InitializeTableColumns(sLineFields, ref dtTextFile, false);

}

}

drRows = dtTextFile.NewRow();

foreach (string strField in sLineFields)

{

string sField = strField.Trim();

sField = sField.Replace("\"", "");

sField = sField.Replace("'", "");

sField = sField.Replace("$", "");

sField = sField.Replace("%", "");

sField = sField.Replace("-0-", "0");

sField = sField.Replace("&", "and");

if (dtTextFile.Columns.Count <= iFieldIndex)

break;

drRows[iFieldIndex] = sField;

iFieldIndex = iFieldIndex + 1;

}

dtTextFile.Rows.Add(drRows);

}

xmlRaw = CleanupRawXml(dsTextFile.GetXml());

}

catch (Exception ex)

{

throw new Exception("Error: ConvertTextFile", ex);

}

finally

{

oSR.Close();

}

return xmlRaw;

}

Conclusion

I hope you find this article and utility useful - it has come in quite handy a number of times, and the core class was recently incorporated into a generic FileDataProvider class for use as another .NET data provider like the SqlDataProvider and OracleDataProvider providers. But that's another article for another day. Enjoy!

License

This article has no explicit license attached to it, but may contain usage terms in the article text or the download files themselves. If in doubt, please contact the author via the discussion board below.

A list of licenses authors might use can be found here.