Introduction

The C++ compiler in Visual C++ 2005 (VC 2005) includes a number of significant enhancements. These should interest even C# developers who interface with libraries written in C++.

The feature I wish to highlight is the interoperability support provided by C++. In Visual C++ .NET 2003 this functionality was known simply as IJW, “It Just Works”. In VC 2005 the “It Just Works” nomenclature still applies. To distinguish the new features from their predecessor, however, the technology has been formally dubbed “C++ Interop”.

This technology lets programmers intermingle managed and unmanaged code and call native functions directly, all without writing any additional glue code. Through the judicious use of #pragma managed and #pragma unmanaged, the developer can decide, function by function, which code is compiled as managed and which as native. Source files containing both managed and native code can be compiled with the /clr compiler switch, which provides access to the .NET platform and enables interoperability support. This degree of flexibility should be enough to entice most programmers, but there is more.

When used correctly, “C++ Interop” can even be considered a performance-enhancement feature.

In many computationally intensive tasks, it may make sense to compile the core functions as native code while the rest of the code is compiled as managed. In practice, native code usually executes faster than equivalent managed code. This is by no means a condemnation of managed code. The CLR provides an abundant set of features, but runtime activities such as garbage collection (GC) consume CPU cycles, and those cycles translate into a performance cost.

Data marshaling is by far one of the most costly aspects of native/managed interoperability. In C#, marshaling is performed implicitly by the CLR whenever a function is called through P/Invoke. At run time, P/Invoke uses a very robust mechanism to ensure proper type transformations.

In the C/C++ spirit of “programming to the metal”, C++ Interop simply copies blittable types across the native/managed boundary. It is up to the programmer to determine whether this is safe and appropriate for the given types. Any custom marshaling must be provided explicitly by the programmer. These decisions can be made on a call-by-call basis, which isn't possible under P/Invoke.

| C++ Type | .NET Framework Type | Blittable |
|---|---|---|
| bool | System.Boolean | N |
| signed char | System.SByte | Y |
| unsigned char | System.Byte | Y |
| wchar_t | System.Char | N |
| double, long double | System.Double | Y |
| float | System.Single | Y |
| int, signed int, long, signed long | System.Int32 | Y |
| unsigned int, unsigned long | System.UInt32 | Y |
| __int64, signed __int64 | System.Int64 | Y |
| unsigned __int64 | System.UInt64 | Y |
| short, signed short | System.Int16 | Y |
| unsigned short | System.UInt16 | Y |
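A blittable type has the same in-memory representation in managed and native code, so it can cross the boundary as a raw byte copy. By contrast, bool and wchar_t may be converted; P/Invoke, for instance, marshals System.Boolean as a 4-byte Win32 BOOL by default.

The sketch below is plain ISO C++, not C++/CLI, and rests on one assumption: it approximates blittability with the `std::is_trivially_copyable` and `std::is_standard_layout` traits, which is not the CLR's own definition. `Sample` and `copy_across_boundary` are hypothetical names.

```cpp
#include <cstdint>
#include <cstring>
#include <type_traits>

// Hypothetical record built only from types the table marks as blittable.
struct Sample {
    std::int32_t  count;  // int            <-> System.Int32
    double        total;  // double         <-> System.Double
    std::uint16_t flags;  // unsigned short <-> System.UInt16
};

// Compile-time checks approximating "blittable" in ISO C++ terms:
// the object can be copied byte-for-byte and has a C-compatible layout.
static_assert(std::is_trivially_copyable<Sample>::value, "Sample must be memcpy-safe");
static_assert(std::is_standard_layout<Sample>::value, "Sample must have a C-compatible layout");

// Simulates a boundary crossing with no per-field conversion: the raw
// bytes are copied into a transfer buffer and back out again.
Sample copy_across_boundary(const Sample& in) {
    unsigned char buffer[sizeof(Sample)];
    std::memcpy(buffer, &in, sizeof(Sample));
    Sample out;
    std::memcpy(&out, buffer, sizeof(Sample));
    return out;
}
```

Copying `Sample{42, 3.5, 7}` through `copy_across_boundary` returns an identical value without touching any individual field.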

You still need to exercise care when using any interoperability technique. Special compiler/linker-generated transition sequences, called “thunks”, execute each time a program crosses between managed and unmanaged code, regardless of the language it was written in. Interfaces should be designed with this fact in mind. Chatty interfaces, in other words loops that repeatedly cross the boundary through thunks, can be very expensive in terms of performance.
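A minimal ISO C++ sketch shows why chatty interfaces hurt, under an obvious simplification. A hypothetical global counter, `g_boundary_crossings`, stands in for the cost of one thunk. `native_add_one` and `native_add_batch` are illustrative functions, not part of any real API. Both caller loops compute the same sum, but the chatty one crosses the "boundary" once per element while the chunky one crosses once per batch.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical counter standing in for the cost of one managed/native
// transition (a thunk). Real thunks are generated by the compiler/linker.
static int g_boundary_crossings = 0;

// "Native" side, chatty: one crossing for every value accumulated.
double native_add_one(double running, double value) {
    ++g_boundary_crossings;
    return running + value;
}

// "Native" side, chunky: one crossing for a whole array of values.
double native_add_batch(const double* values, std::size_t n) {
    ++g_boundary_crossings;
    double sum = 0.0;
    for (std::size_t i = 0; i < n; ++i)
        sum += values[i];
    return sum;
}

// Caller side: same result, very different number of crossings.
double sum_chatty(const std::vector<double>& v) {
    double total = 0.0;
    for (double x : v)
        total = native_add_one(total, x);
    return total;
}

double sum_chunky(const std::vector<double>& v) {
    return native_add_batch(v.data(), v.size());
}
```

Summing 1,000 values costs 1,000 crossings in the chatty form and one in the chunky form. With real thunks, that difference is paid in CPU cycles.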

One effect of compiling with the /clr switch is that the compiler generates two entry points for each managed function in the object module: one managed and one native. Depending on the relationship between caller and callee, your code could be subjected to double thunking.

It is easy to fall into this trap by making a few seemingly innocuous choices while integrating your native and managed libraries. First, you decide to compile all your modules with the /clr switch to make life easier (or so you thought). Second, you keep using dllexport to expose various functions. You have just created an environment perfect for double thunking.

The dllexport mechanism is native by design, so if the caller is managed, a thunk to native is performed. The second, unwanted thunk occurs if the function you are calling in your mixed-mode assembly is itself managed: the code must then thunk back to managed in order to make the call. The lesson: never apply the /clr switch across all modules without considering the caller/callee relationship.

Per Microsoft MSDN:

“Double thunking refers to the loss of performance you can experience when a function call in a managed context calls a Visual C++ managed function and where program execution calls the function's native entry point in order to call the managed function.

By default, when compiling with /clr (not /clr:pure), the definition of a managed function causes the compiler to generate a managed entry point and a native entry point. This allows the managed function to be called from native and managed call sites. However, when a native entry point exists, it can be the entry point for all calls to the function. If a calling function is managed, the native entry point will then call the managed entry point. In effect, two calls are required to invoke the function (hence, double thunking). For example, virtual functions are always called through a native entry point.

One resolution is to tell the compiler not to generate a native entry point for a managed function, that the function will only be called from a managed context, by using the __clrcall calling convention.”
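The call paths described in the MSDN excerpt can be modeled by counting context switches. This is a toy model in ISO C++, not the compiler's actual mechanism. `Context`, `CallTrace` and `thunks_to_call_managed_function` are hypothetical names, and each change between managed and native context counts as one thunk.

```cpp
// Toy model (hypothetical, not the real compiler mechanism) of the call
// paths in the MSDN excerpt. Each change of execution context counts as a thunk.
enum class Context { Managed, Native };

struct CallTrace {
    Context current;
    int thunks = 0;

    explicit CallTrace(Context start) : current(start) {}

    // Transfer control to code running in `target`, counting a thunk
    // whenever the execution context changes.
    void enter(Context target) {
        if (current != target) {
            ++thunks;
            current = target;
        }
    }
};

// Calls a managed function compiled with /clr.
// via_native_entry == true : the call lands on the native entry point,
//                            which then forwards to the managed entry point.
// via_native_entry == false: the call targets the managed entry point
//                            directly, as __clrcall arranges for managed-only use.
int thunks_to_call_managed_function(Context caller, bool via_native_entry) {
    CallTrace trace(caller);
    if (via_native_entry)
        trace.enter(Context::Native);  // native entry point
    trace.enter(Context::Managed);     // managed entry point (the real body)
    return trace.thunks;
}
```

In this model, a managed caller that reaches a managed function through its native entry point pays two transitions. The same call through a managed-only (__clrcall) entry pays none, and a native caller pays one.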

The following example shows how easily and efficiently we can create a mixed (managed/unmanaged) assembly (DLL) and reference it from a C# application. The C++ DLL consists of four classes: Computation, Internal, AutoPtr and Simple.

Computation and Internal are native (unmanaged) classes representing computationally intensive tasks. Simple and its supporting template, AutoPtr, are managed classes that exist to expose various aspects of the computational code to a C# program.

The compiler generates an error if you attempt to declare a native class as a by-value member of a managed class. Declaring a pointer to the native class is permitted, hence the AutoPtr template, which owns that pointer. The template is simply a convenience that ensures I apply the pimpl idiom consistently.
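For readers less familiar with the pimpl idiom, here is a native ISO C++ sketch of the same shape. The public class holds only a pointer to a separately defined implementation, and `std::unique_ptr` plays the owning role that AutoPtr plays for the managed class. `Facade` and `Facade::Impl` are hypothetical names; this illustrates the idiom and is not C++/CLI code.

```cpp
#include <memory>

// Public facade: holds only a pointer to its implementation, the same shape
// as a managed class that may hold a pointer to a native class but not the
// native class itself. (Hypothetical names; native-only sketch.)
class Facade {
public:
    Facade();
    ~Facade();                              // defined where Impl is complete
    Facade(const Facade&) = delete;         // single owner of the implementation
    Facade& operator=(const Facade&) = delete;

    void add(double value);
    double mean() const;

private:
    struct Impl;                            // declared here, defined below
    std::unique_ptr<Impl> impl_;
};

// Implementation details hidden behind the pointer.
struct Facade::Impl {
    double sum = 0.0;
    int count = 0;
};

Facade::Facade() : impl_(std::make_unique<Impl>()) {}
Facade::~Facade() = default;

void Facade::add(double value) {
    impl_->sum += value;
    ++impl_->count;
}

double Facade::mean() const {
    return impl_->count ? impl_->sum / impl_->count : 0.0;
}
```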

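```cpp
// Mixed-mode DLL source, compiled with /clr
#include <vector>
#include <algorithm>
#include <boost/bind.hpp>

namespace CppNative {

#pragma managed

// Managed wrapper that owns a pointer to a native object (pimpl helper)
template<typename T> ref class AutoPtr
{
private:
    T* _t;

public:
    AutoPtr() : _t(new T) {}
    AutoPtr(T* t) : _t(t) {}
    T* operator->() { return _t; }

protected:
    // Destructor: deterministic cleanup (invoked through Dispose)
    ~AutoPtr() { delete _t; _t = 0; }
    // Finalizer: runs during garbage collection if Dispose was never called
    !AutoPtr() { delete _t; _t = 0; }
};
```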

As an aside, C++/CLI now supports deterministic cleanup. CLI types can have real destructors, which map seamlessly onto the CLI Dispose idiom. CLI types can also have finalizers.

Compared with the older Managed Extensions for C++, C++/CLI offers significant improvements and much closer alignment with the ISO C++ Standard. The Managed Extensions are still supported in VC 2005, but only as deprecated functionality.
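AutoPtr's cleanup is written to be safe even if both the destructor and the finalizer try to release the native object: each one deletes the pointer and then resets it to zero. The native ISO C++ sketch below illustrates that idempotent-release pattern under a loose analogy. An explicit `dispose()` stands in for Dispose, and the C++ destructor stands in for the later finalizer. `Tracked` and `OwnedResource` are hypothetical names used only to count live objects.

```cpp
// Hypothetical type that counts how many instances are alive.
struct Tracked {
    static int live;
    Tracked() { ++live; }
    ~Tracked() { --live; }
};
int Tracked::live = 0;

// Loose native analogy of AutoPtr's cleanup: both paths call the same
// idempotent release(), so whichever runs second finds nothing to free.
class OwnedResource {
public:
    OwnedResource() : ptr_(new Tracked) {}
    OwnedResource(const OwnedResource&) = delete;
    OwnedResource& operator=(const OwnedResource&) = delete;

    // Deterministic cleanup, analogous to calling Dispose (the ~AutoPtr body).
    void dispose() { release(); }

    // Safety net, analogous to the finalizer (!AutoPtr) running later.
    ~OwnedResource() { release(); }

private:
    void release() {
        delete ptr_;     // deleting a null pointer is a no-op
        ptr_ = nullptr;  // mirrors `_t = 0` in AutoPtr
    }
    Tracked* ptr_;
};
```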

```cpp
#pragma unmanaged

// Native class: the computationally intensive work
class Computation
{
private:
    double _ans;
    int _cnt;

public:
    Computation() { _ans = 0; _cnt = 0; }
    void accumulate(double amt) { _cnt++; _ans += amt; }
    double mean() { return _ans / _cnt; }
    double ans() { return _ans; }
    int cnt() { return _cnt; }
};

// Native class that drives the computation
class Internal
{
private:
    Computation _algor;

public:
    Internal() {}
    double getMean() { return _algor.mean(); }
    double getAns() { return _algor.ans(); }
    int getCnt() { return _algor.cnt(); }

    void calc()
    {
        std::vector<double> tst;
        for (int x = 1; x <= 10000; x++)
            tst.push_back(x);

        // Feed every value to Computation::accumulate on _algor
        std::for_each(tst.begin(), tst.end(),
                      boost::bind(&Computation::accumulate, &_algor, _1));
    }
};

#pragma managed

// Managed class exposed to C#; reaches the native Internal object via AutoPtr
public ref class Simple
{
private:
    AutoPtr<Internal> _i;

public:
    Simple() {}
    double getMean() { return _i->getMean(); }
    double getAns() { return _i->getAns(); }
    int getCnt() { return _i->getCnt(); }
    void calc() { _i->calc(); }
};

} // namespace CppNative
```
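On a modern compiler, the boost::bind call in `Internal::calc` can be replaced by a C++11 lambda (lambdas are not available in VC 2005). The following is a native, standalone sketch of that alternative. It reproduces the arithmetic of `Computation`, and the free function `calc` is a hypothetical stand-in for the member function.

```cpp
#include <algorithm>
#include <vector>

// Same arithmetic as the native Computation class in the listing.
class Computation {
public:
    void accumulate(double amt) { ++_cnt; _ans += amt; }
    double mean() const { return _ans / _cnt; }
    double ans() const { return _ans; }
    int cnt() const { return _cnt; }
private:
    double _ans = 0.0;
    int _cnt = 0;
};

// Boost-free version of Internal::calc: a lambda replaces
// boost::bind(&Computation::accumulate, &_algor, _1).
void calc(Computation& algor) {
    std::vector<double> tst;
    tst.reserve(10000);
    for (int x = 1; x <= 10000; ++x)
        tst.push_back(x);
    std::for_each(tst.begin(), tst.end(),
                  [&algor](double v) { algor.accumulate(v); });
}
```

For the values 1 to 10,000 this yields cnt() == 10000, ans() == 50005000 and mean() == 5000.5. These are the same results the original listing produces.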

Some facts about CLI reference types (declared with the ref keyword):

- They are not copy-constructible by default: the compiler does not generate a copy constructor. Copies can be made by implementing the virtual Clone function.
- Objects can physically exist only on the garbage-collected (GC) heap.
- They support stack semantics: an object can be declared as if it were instantiated on the stack. It is still allocated on the CLI heap, and a reference to it is stored on the stack.

Accessing the managed objects of our DLL from C# is so simple it seems almost magical. All you need to do is add a reference to the DLL and use it: “It just works”. IntelliSense even lists all the accessible member functions.

```csharp
using CppNative;

class Program
{
    static void Main(string[] args)
    {
        // Simple is the managed ref class exported by the mixed-mode DLL
        Simple sim = new Simple();
        sim.calc();

        int cnt = sim.getCnt();
        double ans = sim.getAns();
        double mean = sim.getMean();
    }
}
```

To recap: at this point I have a mixed-mode DLL and a C# console program that integrates quite nicely with it. What about performance? To get a handle on the performance differences, I first replicate the C# test harness in C++/CLI.

The intent is to use the console programs to examine potential timing differences between managed and unmanaged calls. The tests use blittable and non-blittable parameters, called from both C# and C++/CLI.

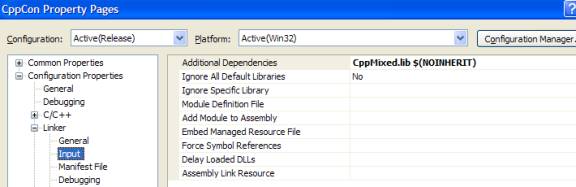

For the implicit (C++ Interop) calls, the mixed-mode DLL's import library must be listed in the linker's Additional Dependencies.

The main function of the C++ console program serves as a simple shell that loops over the managed and native functions under test. One important point to take away is that the relative timings of the C++ code could be optimized for even better results. When dealing with managed and unmanaged types in C++, you can design your code so that marshaling is done only once for any number of calls into the mixed-mode library. This is not possible with P/Invoke. I am not advocating chatty interfaces, but where they are unavoidable, this technique averages the marshaling overhead out across the number of calls being made.
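The convert-once technique can be sketched in plain ISO C++ under stated assumptions. A hypothetical `marshal_to_native` function stands in for marshaling a managed string; here it performs a naive narrowing that assumes ASCII-only text. A counter records how often it runs. If one conversion costs M and the converted value is reused across N calls, the marshaling overhead per call drops to roughly M/N.

```cpp
#include <cstddef>
#include <string>

// Hypothetical counter for how many times a non-blittable value is converted.
static int g_conversions = 0;

// Stand-in for marshaling a managed string to a native char buffer:
// a naive narrowing that assumes ASCII-only wide text.
std::string marshal_to_native(const std::wstring& text) {
    ++g_conversions;
    std::string out;
    out.reserve(text.size());
    for (wchar_t ch : text)
        out.push_back(static_cast<char>(ch));
    return out;
}

// Hypothetical native function that consumes an already-marshaled string.
std::size_t native_length(const std::string& s) { return s.size(); }

// Converts on every call, as a P/Invoke string parameter would.
std::size_t call_converting_each_time(const std::wstring& text, int calls) {
    std::size_t total = 0;
    for (int i = 0; i < calls; ++i)
        total += native_length(marshal_to_native(text));
    return total;
}

// Converts once and reuses the native copy across all calls.
std::size_t call_converting_once(const std::wstring& text, int calls) {
    const std::string native = marshal_to_native(text);
    std::size_t total = 0;
    for (int i = 0; i < calls; ++i)
        total += native_length(native);
    return total;
}
```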

```cpp
// C++/CLI console test harness (compiled with /clr).
// ManagedManaged(), ManagedUnmanaged(), NonBlitManaged() and NonBlitUnmanaged()
// are the timing functions under test; they are not shown here.
using namespace System;

int main(array<System::String ^> ^args)
{
#ifdef INTEROP
    Console::WriteLine("Conversion done using : C++ Interop (Implicit P/Invoke)");
#else
    Console::WriteLine("Conversion done using : Explicit P/Invoke");
#endif

    Console::WriteLine("Blittable data types");
    Console::WriteLine("Ref class calc time, C Func calc time");

    int loop = 10;
    for (int x = 0; x < loop; x++)
    {
        double mmtime = ManagedManaged();
        double mutime = ManagedUnmanaged();
        Console::WriteLine("{0}, {1}", mmtime, mutime);
    }

    Console::WriteLine("Non-blittable data types");
    Console::WriteLine("Ref class calc time, C Func calc time");

    for (int x = 0; x < loop; x++)
    {
        double mmtime = NonBlitManaged();
        double mutime = NonBlitUnmanaged();
        Console::WriteLine("{0}, {1}", mmtime, mutime);
    }

    return 0;
}
```
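The timing functions called by `main` are not shown. A hypothetical helper in the same spirit is sketched below in ISO C++ using `std::chrono::steady_clock`. It returns the average seconds per call, which is an assumption: how the original harness scaled its figures is not shown. `seconds_per_call` is an illustrative name.

```cpp
#include <chrono>

// Hypothetical helper in the spirit of ManagedManaged()/NonBlitUnmanaged():
// runs `fn` `iterations` times and returns the average seconds per call.
template <typename Fn>
double seconds_per_call(Fn&& fn, int iterations) {
    using Clock = std::chrono::steady_clock;
    const auto start = Clock::now();
    for (int i = 0; i < iterations; ++i)
        fn();
    const std::chrono::duration<double> elapsed = Clock::now() - start;
    return elapsed.count() / iterations;
}
```

Usage might look like `double t = seconds_per_call([&] { sim_like_call(); }, 100);`, where `sim_like_call` is whatever function is under test.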

Timing Results:

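The timing results clearly show an advantage to using C++ Interop when calling native C functions with non-blittable parameters. For the tests conducted, C# and C++ yielded similar results when calling functions with only blittable types in their signatures. In the table below, Savings is the reduction in C-Func calc time for C++ relative to C#, computed as (C# − C++) / C#.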

Non-blittable data types:

| Iterations | C# Ref class calc time | C# C-Func calc time | C++ Ref class calc time | C++ C-Func calc time | Savings |
|---|---|---|---|---|---|
| 100 | 6.585E-04 | 4.727E-04 | 6.367E-04 | 3.067E-04 | |
| | 1.050E-04 | 2.824E-03 | 1.087E-04 | 2.319E-04 | 91.79% |
| | 9.415E-05 | 3.246E-04 | 1.176E-04 | 2.349E-04 | 27.62% |
| | 8.493E-05 | 3.224E-04 | 1.006E-04 | 2.324E-04 | 27.90% |
| | 8.912E-05 | 3.199E-04 | 3.009E-03 | 2.190E-04 | 31.53% |
| | 8.968E-05 | 3.498E-04 | 8.716E-05 | 2.271E-04 | 35.06% |
| | 1.882E-02 | 3.294E-04 | 8.660E-05 | 2.319E-04 | 29.60% |
| | 8.912E-05 | 3.213E-04 | 8.716E-05 | 2.280E-04 | 29.04% |
| | 8.660E-05 | 3.199E-04 | 9.023E-05 | 2.277E-04 | 28.82% |
| | 8.828E-05 | 3.227E-04 | 8.744E-05 | 2.310E-04 | 28.40% |
| | | | | Median | 29.04% |
| 10 | 5.414E-04 | 1.564E-04 | 5.202E-04 | 8.970E-05 | |
| | 2.403E-05 | 3.660E-05 | 1.370E-05 | 2.630E-05 | 28.14% |
| | 1.481E-05 | 3.548E-05 | 1.450E-05 | 2.600E-05 | 26.72% |
| | 1.788E-05 | 3.548E-05 | 1.960E-05 | 2.710E-05 | 23.62% |
| | 1.341E-05 | 3.660E-05 | 1.230E-05 | 2.630E-05 | 28.14% |
| | 1.760E-05 | 3.548E-05 | 1.060E-05 | 2.600E-05 | 26.72% |
| | 1.257E-05 | 3.492E-05 | 1.260E-05 | 2.630E-05 | 24.69% |
| | 1.090E-05 | 3.492E-05 | 1.090E-05 | 2.600E-05 | 25.55% |
| | 1.117E-05 | 4.889E-05 | 1.060E-05 | 2.600E-05 | 46.82% |
| | 1.117E-05 | 3.492E-05 | 1.090E-05 | 2.630E-05 | 24.69% |
| | | | | Median | 26.72% |
| 1 | 5.272E-04 | 1.221E-04 | 5.272E-04 | 7.180E-05 | |
| | 4.470E-06 | 7.822E-06 | 4.190E-06 | 6.146E-06 | 21.43% |
| | 3.911E-06 | 6.984E-06 | 3.632E-06 | 5.587E-06 | 20.00% |
| | 3.632E-06 | 6.984E-06 | 4.190E-06 | 6.146E-06 | 12.00% |
| | 4.470E-06 | 7.822E-06 | 3.632E-06 | 5.587E-06 | 28.57% |
| | 3.632E-06 | 6.984E-06 | 3.352E-06 | 5.587E-06 | 20.00% |
| | 3.632E-06 | 6.705E-06 | 3.352E-06 | 5.308E-06 | 20.83% |
| | 3.632E-06 | 6.705E-06 | 1.341E-05 | 5.587E-06 | 16.67% |
| | 3.632E-06 | 6.705E-06 | 3.632E-06 | 5.308E-06 | 20.83% |
| | 3.632E-06 | 6.984E-06 | 3.352E-06 | 5.308E-06 | 24.00% |
| | | | | Median | 20.83% |
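The Savings column and its medians can be recomputed from the table. The ISO C++ sketch below applies Savings = (C# − C++) / C# to the C-Func calc times of the nine Iterations = 100 rows that carry a Savings value, then takes the median. Because it works from the rounded values shown, individual savings can differ from the table in the last digit. The median still comes out at about 29.04%, matching the table. `savings`, `median` and `median_savings_100` are hypothetical helper names.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Savings as used in the table: fractional reduction in C-Func calc time
// of C++ relative to C#, i.e. (csharp - cpp) / csharp.
double savings(double csharp_time, double cpp_time) {
    return (csharp_time - cpp_time) / csharp_time;
}

// Median of a sample; the argument is a copy, so sorting leaves the input untouched.
double median(std::vector<double> v) {
    std::sort(v.begin(), v.end());
    const std::size_t n = v.size();
    return n % 2 ? v[n / 2] : (v[n / 2 - 1] + v[n / 2]) / 2.0;
}

// C-Func calc times from the "Iterations = 100" rows that have a Savings value.
const std::vector<double> kCSharpCFunc = {2.824E-03, 3.246E-04, 3.224E-04, 3.199E-04, 3.498E-04,
                                          3.294E-04, 3.213E-04, 3.199E-04, 3.227E-04};
const std::vector<double> kCppCFunc    = {2.319E-04, 2.349E-04, 2.324E-04, 2.190E-04, 2.271E-04,
                                          2.319E-04, 2.280E-04, 2.277E-04, 2.310E-04};

double median_savings_100() {
    std::vector<double> s;
    for (std::size_t i = 0; i < kCSharpCFunc.size(); ++i)
        s.push_back(savings(kCSharpCFunc[i], kCppCFunc[i]));
    return median(s);
}
```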

Conclusion

C++ Interop is an extremely powerful tool that C++ and C# developers alike should understand, especially when projects need to mix performance-tuned C++ with other .NET code. C++/CLI is the only Microsoft .NET language that supports the creation of mixed-mode assemblies. Its optimizer generally produces faster MSIL than the other Microsoft .NET language compilers. C++/CLI is also the only Microsoft .NET language to offer parallelism through OpenMP.

The complexity and performance demands of today's systems necessitate blending the capabilities of various languages. C++/CLI is a first-class .NET language that retains all of its native capabilities.