Introduction

As many of you probably already know, .NET apps are not distributed as native binary code but in a specialized format called 'MSIL', short for Microsoft Intermediate Language. The idea behind MSIL is to provide a machine-independent distribution format that lets .NET apps run across platforms. This is made possible by the CLR (the .NET runtime engine), specifically by its 'just in time' compiler (a.k.a. the jitter). The CLR hands the MSIL code to the jitter, which compiles it to native code for the host machine, and the native code is then executed by the CPU. Unfortunately, this important virtue also makes .NET apps vulnerable to malicious reverse engineering. In fact, tampering is so easy that one might question whether the advantages of the framework are worth the risk of exposing the code to a potential hacker. To demonstrate how easy it is to tamper with the code, we will look at an example.

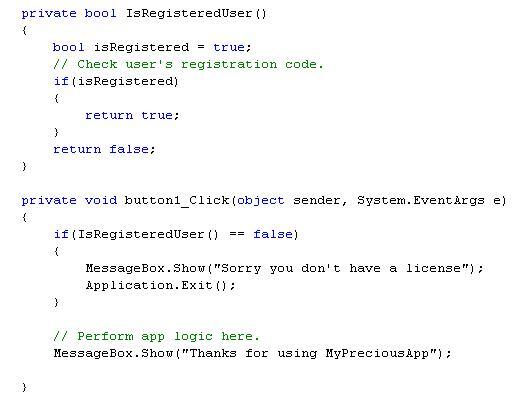

Let's say you are developing a WinForms application called 'MyPreciousApp'. After spending months developing it, you are now ready to deploy it. Knowing that software pirates await you, you have decided to protect your app using your favourite licensing management component. Your code might look like this:

Readers might find it surprising that disarming this protection scheme takes a hacker no more than a few minutes. In fact, you don't need to be a hacker at all. You only need two simple utilities provided by Microsoft. The first is ILDasm, Microsoft's IL disassembler, which exposes the IL instructions used by your program.

Now, to disarm the code protection, you edit the IL code and simply remove the call to the 'IsRegisteredUser' method. This is done by removing the code marked by a rounded rectangle.

To complete the process, you use ILAsm, another tool provided by Microsoft, which assembles a .NET app from MSIL code.

Finally, your application has been tampered with!

Breaking the misconception around strong name assemblies

Given the example shown, I would like to discuss three means that I've come across trying to overcome this problem, a problem I would like to call 'the unbearable lightness of tampering .NET apps'.

First, I would like to discuss 'strong name assemblies'. There is a lot of confusion around this topic that I would like to clear up. This facility allows you to uniquely identify an assembly by giving it a strong name. The name consists of the information used to identify the assembly: its text name, four-part version number, culture information (if provided), a public key, and a digital signature stored in the assembly's manifest. The digital signature is created during compilation by running the content of the assembly through a hashing algorithm; the resulting hash is encoded using the distributor's private key and stored in the assembly's manifest.

Once the assembly is ready to be loaded into memory, the CLR initiates a verification process. The CLR takes the assembly and runs it through the same hashing algorithm that was used at compile time. To decipher the signature stored in the assembly (the one written during the compilation phase), the CLR uses the assembly's public key. The freshly computed hash is then compared with the deciphered one; if the two differ, the assembly has been modified since it was signed.
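The sign-then-verify round trip described above can be sketched in a few lines of Python. This is a toy model under stated assumptions, not the CLR's implementation: the key pair is built from two well-known Mersenne primes, SHA-256 stands in for whatever hashing algorithm is actually used, and the names digest, sign and verify are made up for illustration.

```python
import hashlib

# Toy RSA key pair (assumption: illustration only, never use such keys).
P = 2**61 - 1                      # Mersenne prime
Q = 2**89 - 1                      # Mersenne prime
N = P * Q                          # public modulus
E = 65537                          # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent (needs Python 3.8+)


def digest(content: bytes) -> int:
    """Hash the content and map the hash to an integer smaller than N."""
    return int.from_bytes(hashlib.sha256(content).digest(), "big") % N


def sign(content: bytes) -> int:
    """Publisher side: encode the hash with the private key."""
    return pow(digest(content), D, N)


def verify(content: bytes, signature: int) -> bool:
    """Loader side: decode the signature with the public key and compare."""
    return pow(signature, E, N) == digest(content)


assembly = b"MSIL bytes of MyPreciousApp"
signature = sign(assembly)
print(verify(assembly, signature))          # True: content is untouched
print(verify(assembly + b"!", signature))   # False: content was modified
```

Note what the sketch does not cover: the check only runs if the signature and public key are still present, which is exactly the gap discussed in the following paragraphs.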

The introduction of 'strong name assemblies' ensures that the same assembly your program was compiled against is the one loaded into memory at runtime. This solves a problem known as 'DLL hell', a situation that arises when a component is updated, potentially breaking other apps that depend on it. Moreover, strong name assemblies form a publisher's identity that the user can rely on to define the publisher's code access permissions. This means, for example, that the user may disallow a certain publisher from accessing files on the user's hard disk based on that identity.

Some developers are tempted to think that since strong names uniquely identify an assembly, they can be used as a code protection tool. They simply trust that if the assembly is tampered with, the CLR will refuse to load it. This is true as far as it goes, but they fail to realize that strong names were not designed as a tamper resistance facility. Therefore, it's not surprising that the strong name itself can be stripped from an assembly by removing a few lines of MSIL code, in the same way as was illustrated in the previous section.

Going back to the 'MyPreciousApp' example, we can see that it has been given a strong name. That is evident from the .publickey attribute shown in the figure below. Removing this attribute from the MSIL code and reconstructing the assembly using the ILAsm tool breaks the assembly's strong name, effectively turning it into a private assembly. Breaking all assemblies belonging to an application in the manner depicted above results in a program that is completely vulnerable to reverse engineering attacks.

Obfuscation and its drawbacks

A popular code protection technique that is used in the industry today is code obfuscation. Code obfuscators transform an application into one that is functionally identical but is much harder to understand. This is done by renaming meaningful symbol names such as variables, fields, and method names to non-meaningful ones. Code blocks are rearranged, thus making it harder to infer program logic.

While code obfuscators raise the bar for understanding program logic, they have some major drawbacks. First, they don't rename public method names since such methods can be called from other assemblies referencing the obfuscated assembly. This means that inter-assembly calls can be traced easily when looking at the MSIL code of an obfuscated assembly, since the original method names are in use. The following example illustrates exactly that:

The obfuscated code clearly shows that an external call to the System.Windows.Forms assembly is initiated by the main method. Since many programs rely on an external library component to manage authorization and authentication, this is a major weakness. By simply looking at the MSIL code, these calls can be located and removed.

A second drawback concerns the problems obfuscation tools introduce when Reflection is used. Method calls performed through Reflection are likely to fail once the application has been obfuscated. This happens because the method has been renamed by the obfuscator while the call site still refers to it by its original name, which is held in a string.

Sure, many obfuscators allow the user to list the methods that shouldn't be renamed, but this adds overhead for the R&D team as well as for the QA team, which now has to cope with bugs introduced as a result of obfuscating the code.
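The Reflection problem, and the exclusion-list workaround, can be reproduced in miniature. The following Python sketch is an analogy rather than a .NET obfuscator: obfuscate, call_by_name and LicenseManager are hypothetical names, and getattr plays the role of a Reflection lookup by method name.

```python
class LicenseManager:
    def is_registered_user(self) -> bool:
        return True


def obfuscate(cls, keep=()):
    """Toy obfuscator: copy cls, renaming its members to a, b, c, ..."""
    short_names = iter("abcdefghijklmnopqrstuvwxyz")
    members = {}
    for name, value in vars(cls).items():
        if name.startswith("__"):
            continue  # leave interpreter-managed attributes alone
        members[name if name in keep else next(short_names)] = value
    return type(cls.__name__, cls.__bases__, members)


def call_by_name(obj, method_name: str):
    """Reflection-style call: the target is resolved from a string at run time."""
    return getattr(obj, method_name)()


Renamed = obfuscate(LicenseManager)
Excluded = obfuscate(LicenseManager, keep=("is_registered_user",))

print(Renamed().a())  # True: the method still works under its new name
try:
    call_by_name(Renamed(), "is_registered_user")
except AttributeError:
    print("reflective lookup failed")  # the string still holds the old name
print(call_by_name(Excluded(), "is_registered_user"))  # True: name was kept
```

The excluded method keeps working, but it also keeps its readable name, so every exclusion gives back some of the protection the obfuscator was supposed to provide.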

A third drawback is the reduced ability to trace bugs once they are reported from the field. Recovering stack dump information through the System.Exception.StackTrace property is essential for tracking down the source of bugs once the application has been deployed. Imagine that a user sends you a bug report saying he is having problems using your application. The stack dump information reads as follows:

System.NullReferenceException: Object reference not set to an instance of an object.
   at a.a()
   at a.b()
   at a.c()
   at a.d()
   at a.a(Object A_0, EventArgs A_1)
   at System.Windows.Forms.Control.OnClick(EventArgs e)

This tells very little about the source of the problem, and can make R&D response in this case sluggish.

Code encryption based solution

In an effort to limit the visibility of MSIL to the prying eye, code encryption techniques are used to prevent a potential hacker from reverse engineering the code. Code encryption makes use of standard cryptographic algorithms to cipher the MSIL code, thus making it completely unreadable to humans or a disassembler. In that respect, obfuscation techniques fall short compared to code encryption techniques.

ILDASM or other disassemblers can't dump the content of the assembly simply because it no longer contains MSIL instructions. You might be wondering now 'how come the CLR reads the assembly contents and compiles it to native assembly instructions, given that the assembly doesn't contain any MSIL instructions?'.

The answer is quite simple: since the CLR can't cope with the encrypted version of the code, the code has to be deciphered before the CLR can compile it. This raises an important issue that many of the encryption based code protection tools I've worked with fail to address. Once the code is deciphered, it is completely exposed in memory to the prying eyes of a potential hacker, because the entire assembly is loaded into memory in its MSIL form. This poses a security threat, since once the assembly is loaded it can be dumped to a file using standard memory dump tools.

You can actually build your own memory dump tool using a few Win32 API functions. First, you have to retrieve a process handle. This can be done using the OpenProcess API function:
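The weakness can be modeled without any Win32 code. In the Python sketch below, a repeating-key XOR stands in for a real cipher, and ProtectedModule, dump and KEY are hypothetical names chosen for illustration; the point is only that the loader must hold the complete plaintext in memory before anything can run.

```python
from itertools import cycle

KEY = b"hypothetical-key"  # assumption: the key is available to the loader


def xor_bytes(data: bytes, key: bytes) -> bytes:
    """Toy cipher: XOR each byte with a repeating key (encrypts and decrypts)."""
    return bytes(b ^ k for b, k in zip(data, cycle(key)))


class ProtectedModule:
    """Models an encrypted assembly once the runtime has loaded it."""

    def __init__(self, blob: bytes, key: bytes):
        # The runtime cannot execute ciphertext, so the whole body is
        # deciphered first and stays resident while the code runs.
        self.image = xor_bytes(blob, key)
        self.namespace = {}
        exec(compile(self.image, "<protected>", "exec"), self.namespace)


def dump(module: ProtectedModule) -> bytes:
    """Stand-in for a memory dump tool: copy the resident plaintext."""
    return bytes(module.image)


SOURCE = b"def is_registered_user():\n    return False\n"
on_disk = xor_bytes(SOURCE, KEY)        # what a disassembler would see
loaded = ProtectedModule(on_disk, KEY)

print(on_disk != SOURCE)                         # True: the file is unreadable
print(loaded.namespace["is_registered_user"]())  # False: yet the code runs
print(dump(loaded) == SOURCE)                    # True: the dump recovers it all
```

Encrypting the file on disk changed nothing about what sits in memory at run time, which is where the dump is taken.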

HANDLE ph = OpenProcess(PROCESS_QUERY_INFORMATION | PROCESS_VM_READ, FALSE, processId);

'OpenProcess' takes the desired access rights, a flag that controls handle inheritance, and the process ID; the returned value serves as the process handle. Next, you have to locate the specific assembly whose contents you would like to dump to a file. Since a process potentially loads more than one assembly, we use the 'EnumProcessModules' API function to retrieve the module handles of all the images loaded by the process.

HMODULE modules[1000];
DWORD cbNeeded; // receives the number of bytes needed to store all module handles

EnumProcessModules(ph, modules, sizeof(modules), &cbNeeded);

Then, you have to identify the specific assembly file you are interested in. 'GetModuleFileNameEx' together with 'GetModuleInformation' can be used to identify that file and to retrieve its memory location.

TCHAR fileName[MAX_PATH];
GetModuleFileNameEx(ph, modules[0], fileName, MAX_PATH);

All that is left to complete the process is to read the assembly content. To do that, we use 'ReadProcessMemory':

ReadProcessMemory(ph, lpAssemblyBaseAddress, destBuffer, dwBytesToRead, &dwBytesRead);

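ReadProcessMemory takes the assembly base address that was retrieved using 'GetModuleInformation' and a buffer into which the memory is copied. Both the base address and the number of bytes to read are available from the MODULEINFO structure that 'GetModuleInformation' fills in (its lpBaseOfDll and SizeOfImage fields).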

The process described shows that keeping MSIL code in its encoded form at all times is key to maintaining a reliable tamper-resistant solution. This prevents a potential hacker from dumping an assembly's content using the technique illustrated above. Keeping the MSIL code protected at all times is what the guys at SecureTeam have been working on for quite some time.