Introduction

If we use common synchronization primitives like mutexes and critical sections, then the following sequence of events occur between two threads that are looking to acquire a lock:

- Thread 1 acquires lock L and executes.

- T2 tries to acquire lock L, but it's already held and therefore blocks incurring a context switch.

- T1 releases the lock L. This signals T2 and at lower level, this involves some sort of kernel transition.

- T2 wakes up and acquires the lock L incurring another context switch.

So there are always at least two context switches when primitive synchronization objects are used. A spin lock can get away with expensive context switches and kernel transition.

Most modern hardware supports atomic instructions and one of them is called 'compare and swap' (CAS). On Win32 systems, they are called interlocked operations. Using these interlocked functions, an application can compare and store a value in an atomic uninterruptible operation. With interlocked functions, it is possible to achieve lock freedom to save expensive context switches and kernel transitions which can be a bottleneck in a low latency application. On a multiprocessor machine, a spin lock (a kind of busy waiting) can avoid both of the above issues to save thousands of CPU cycles in context switches. However, the downside of using spin locks is that they become wasteful if held for a longer period of time, in which case they can prevent other threads from acquiring the lock and progressing. The implementation shown in this article is an effort to develop a general purpose spin lock.

Algorithm

A typical (or basic) spin lock acquire and release function would look something like below:

class Lock

{

volatile int dest = 0;

int exchange = 100;

int compare = 0;

void acquire()

{

While(true)

{

if(interlockedCompareExchange(&dest, exchange, compare) == 0)

{

break;

}

}

}

Void release()

{

dest = 0;

}

};

.......

Here, thread T1 acquires the lock by calling the function acquire(). In this case, the value of dest would become 100. When thread T2 tries to acquire the lock, it will loop continuously (a.k.a. busy waiting) as the values of dest and compare are different and therefore the function InterlockedCompareExchange will fail. When T1 calls release(), it sets the value of dest to 0 and therefore allows T2 to acquire the lock. Because only those threads that acquire() will call release(), mutual exclusion is guaranteed.

Above is a simple implementation of a spin lock. However, this implementation alone is not production fit because spinning consumes CPU cycles without doing any useful work, meaning that the thread spinning will still be scheduled on the processor until it is pre-empted. Another downside of spinning is that it will continuously access memory to re-evaluate the value of dest in the function Interlockedxxx and this also puts the pressure on bus communication.

On a single processor machine, spin wait would be a total waste of CPU as another thread T2 wouldn't even get scheduled until the spinning thread is switched by the kernel.

So far this implementation isn't good enough. A general purpose spin lock requires a bit more work in terms of falling back to true waiting in a worst case scenario when it spins for a longer period. Here are some of the points which must be considered:

Yield Processor

The Win32 function YieldProcessor() emits a 'no operation' instruction on processors. This makes the processor aware that the code is currently performing spin waits and will make the processor available to other logical processors in a hyper threading enabled processor so that the other logical processors can make progress.

Switch to Another Thread

Sometimes it is useful to force a context switch when a spinning thread has already consumed enough time spinning equivalent to its thread time slice allocated by the kernel. Here, it makes good sense to allow another thread to do useful work instead. The function SwitchToThread() relinquishes the calling thread's time slice and runs another thread in the ready state. It returns true when a switch occurs, otherwise false.

Sleeping

SwitchToThread() may not consider all threads on the system for execution, therefore it may be wise to sometimes call Sleep() or Sleepex(). Calling Sleep() with an argument of 0 is a good approach as it does not result in a context switch if there are no threads of equal priority in the ready state. Sleep(0) will result in a context switch if a higher priority thread is in ready state.

Other Considerations

A pure spin lock is only good enough when the lock is held for a very short period of time. Here the critical region may have not more than 10 instructions and practically even simple memory allocation or virtual calls or file I/O can take more than 10 instructions.

Secondly, as mentioned above, it would wasteful to use spin locks when an application runs on a single processor.

Sample Project and Implementation

The sample project in C++ consists of a spin lock implementation considering the points stated above. It also has an implementation of Stack, Queue, and a thin Producer-Consumer class. I'll only focus on then Spin Lock implementation here as the rest of it is easy to follow.

The file SpinLock.h defines these constants:

YIELD_ITERATION set to 30 - What this means is that the thread spinning will spin for 30 iterations waiting for the lock to acquire before it calls sleep(0) to give an opportunity to other threads to progress.MAX_SLEEP_ITERATION set to 40 - This means when the total iteration (or spin) count reaches 40, then it would force a context switch using the function SwitchToThread() in case another thread is in ready state.

The struct tSpinLock acts as a lock object which is declared in the class whose objects are being synchronized. This object is then passed in the constructor to the object of tScopedLock which initializes (references) the lock object passed to it. The tScopedLock() constructor locks the object using the member function of the class tSpinWait. The destructor ~tScopedLock() releases the lock.

The Lock() function in the class tSpinWait has got a nested while loop. This is done on purpose. So if a thread is spinning to acquire the lock, it wouldn't call interlockedxxx() with every iteration, rather it would be looping in the inner while loop. This hack avoids the system memory bus being overly busy due to continuous calls to the interlockedxx function.

while(LockObj.dest != LockObj.compare) {

if(HasThreasholdReached())

{

if(m_iterations + YIELD_ITERATION >= MAX_SLEEP_ITERATION)

Sleep(0);

if(m_iterations >= YIELD_ITERATION && m_iterations < MAX_SLEEP_ITERATION)

SwitchToThread();

}

m_iterations++; if(Helper::GetNumberOfProcessors() > 1)

{

YieldProcessor();

}

else { SwitchToThread(); }

}

The inner while loop just compares the value of dest and compare and if they are not equal, then it tries to acquire them using interlockedxxx. Depending on the iteration count, the thread is either put to sleep or switched. When the application is running on a single CPU, then it always forces a context switch.

Test Results

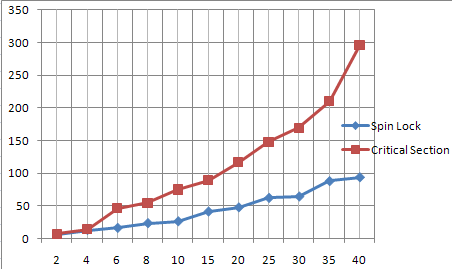

I tested the performance of this Spin Lock implementation by inserting 10000 integers into a queue from multiple threads (each thread inserting 10000 integers into the queue). I then replaced SpinLock with a Critical Section synchronization primitive in the code and ran the same tests. I ran all the tests on an Intel Core DUO CPU T9600 @ 2.80 GHz.

The x-axis is the number of threads and y-axis is the time taken in milliseconds. Both synchronization methods (spinlock and CS) showed close performance when the number of threads were 2 and 4. As the number of threads increased, critical section locking took more than double the time as compared to spin locks. Spin lock seemed to have scaled a lot better when contention increased due to the high number of threads. The time taken is calculated using QueryPerformanceCounter Win32 methods. However, I would suggest performing your own testing on the platform you intend to use.

Here is the table with the results:

| No. of Threads | Time taken (Spin lock) | Time taken (Critical Section) |

| 2 | | 6.6 | 7.14 |

| 4 | | 12.81 | 14.09 |

| 6 | | 16.01 | 46.37 |

| 8 | | 23.32 | 54.34 |

| 10 | | 26.21 | 74.76 |

| 15 | | 41.17 | 89.05 |

| 20 | | 47.63 | 116.82 |

| 25 | | 62.25 | 147.68 |

| 30 | | 64.37 | 169.17 |

| 35 | | 88.02 | 210.07 |

| 40 | | 93.99 | 296.32 |

Future Work

- Profiling the code on different platforms.

- Adding a couple more data structures to the project like associated arrays and hashtable.

Conclusion

This was an effort to develop a general purpose spin lock implementation. Pure spin locking isn't a good option in all scenarios and therefore there is a need for an implementation which allows the spinning thread to be suspended by the kernel.

History

- First draft.

- Revision 1 - Fixed a couple of typos.

- Revision 2 - Code is now re-entrant safe.

- Revision 3 - Lock release now uses

interlockedexchange. - Revision 4 - Added test results.