In the fall of 2006, I created a simple synthesizer using the C# programming language. This was a proof-of-concept project commissioned by James Squire, an associate professor at the Virginia Military Institute. I had a blast working with James on this project and got bitten by the "softsynth" bug. This led me to begin writing a more elaborate "toolkit" for creating software-based synthesizers using C#. Special thanks to James for giving me permission to reuse the code from our project here at Code Project.

This is Part I of a planned three-part article. Here, I will give an overview of the toolkit. Part II will dig deeper into the toolkit by showing you how to create a simple synthesizer. Part III will demonstrate how to create a more sophisticated synthesizer.

If you are familiar with softsynths, the first question you may be asking is whether this toolkit is VST compatible. It is not. However, I have heard of efforts being made to create a .NET/VST host. If such a host becomes available (it may already exist), it shouldn't be hard to adapt most of the toolkit to work with it.

This is the second major version of the toolkit. The main change from the first version is the removal of MDX (Managed DirectX) for waveform output. In its place is a custom library I have written. The latency is slightly longer but playback is more stable. In addition, the second version supports functionality for creating your own effects. The toolkit comes with two effects: chorus and echo; I hope to add more in the future. Also, version two supports recording the output of the synthesizer to a wave file.

A synthesizer is a software or hardware (or some combination of the two) device for synthesizing sounds. There are a vast number of approaches to synthesizing sounds, but regardless of the approach most synthesizers use the same architectural structure. Typically, a synthesizer has a limited number of "voices" to play notes. A voice is responsible for synthesizing the note assigned to it. When a note is played, the synthesizer assigns a voice that is not currently playing to play the note. If all of the voices are already playing, the synthesizer "steals" a voice by reassigning it to play the new note. There are many voice stealing algorithms, but one of the most common is to steal the voice that has been playing the longest. As the voices play their assigned notes, the synthesizer mixes their output and sends it to its main output. From there, it goes to your speakers or sound card.
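The voice assignment described above can be sketched in a few lines. This is only an illustration of the "steal the voice that has been playing the longest" policy, not the toolkit's own code; `VoiceSlot`, `VoiceAllocator`, `NoteOn`, and `NoteOff` are hypothetical names made up for this example.

```csharp
using System;

// Hypothetical bookkeeping for one voice; not a toolkit class.
public sealed class VoiceSlot
{
    public bool IsPlaying;
    public int Note;
    public long StartedAt; // allocator counter value when the note began
}

// Hypothetical allocator: use a free voice if there is one,
// otherwise steal the voice that started playing earliest.
public sealed class VoiceAllocator
{
    private readonly VoiceSlot[] voices;
    private long counter;

    public VoiceAllocator(int voiceCount)
    {
        if (voiceCount < 1)
        {
            throw new ArgumentOutOfRangeException("voiceCount");
        }

        voices = new VoiceSlot[voiceCount];
        for (int i = 0; i < voiceCount; i++)
        {
            voices[i] = new VoiceSlot();
        }
    }

    // Returns the index of the voice assigned to the note.
    public int NoteOn(int note)
    {
        int chosen = -1;

        // Prefer a voice that is not currently playing.
        for (int i = 0; i < voices.Length; i++)
        {
            if (!voices[i].IsPlaying)
            {
                chosen = i;
                break;
            }
        }

        // All voices are busy: steal the one that has played longest.
        if (chosen < 0)
        {
            chosen = 0;
            for (int i = 1; i < voices.Length; i++)
            {
                if (voices[i].StartedAt < voices[chosen].StartedAt)
                {
                    chosen = i;
                }
            }
        }

        voices[chosen].IsPlaying = true;
        voices[chosen].Note = note;
        voices[chosen].StartedAt = counter++;
        return chosen;
    }

    // Marks every voice playing the given note as free again.
    public void NoteOff(int note)
    {
        foreach (VoiceSlot voice in voices)
        {
            if (voice.IsPlaying && voice.Note == note)
            {
                voice.IsPlaying = false;
            }
        }
    }
}
```

With a two-voice allocator, a third `NoteOn` call made while both voices are busy reuses the voice that received the first note.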

A voice is made up of a number of components. Some create the actual sound a voice produces, such as an oscillator. An oscillator synthesizes a repeating waveform with a frequency within the range of human hearing. Some components do not produce a sound directly but are meant to modulate other components. For example, a common synthesizer component is the LFO (low frequency oscillator). It produces a repeating waveform below the range of human hearing. A typical example of how an LFO is used is to modulate the frequency of an oscillator to create a vibrato effect. An ADSR (attack, decay, sustain, and release) envelope is another common synth component. It is used, among other things, to modulate the overall amplitude of the sound. For example, by setting the envelope to have an instant attack, a slow decay, and no sustain, you can mimic the amplitude characteristics of a plucked instrument, such as the guitar or harp. Together, these components synthesize a voice's output. Below is an illustration showing a four voice synthesizer:

Early analog synthesizers were built out of modules, an earlier name for synthesizer components. Each module was dedicated to doing one thing. By connecting several modules together with patch cords, you could create and configure your own synthesizer architecture. This created a lot of sonic possibilities.

When digital synthesizers came on the scene in the early 80s, many of them were not as configurable as their earlier analog counterparts. However, it was not unusual to find digital representations of analog modules such as oscillators, envelopes, filters, LFOs, etc.

Software-based synthesizers began hitting the scene in the 90s and have remained popular to this day. They are synthesizers that run on your personal computer. Because of the versatility of the PC, many software synthesizers have returned to the modular approach of early analog synthesizers. This has allowed musicians to use the power of analog synthesizers within a stable digital environment.

The output of a synthesizer is a continuous waveform. One way to simulate this in software is to use a circular buffer. This buffer is divided into smaller buffers that hold waveform data and are played one after the other. The software synthesizer first synthesizes two buffers of waveform data. These buffers are written to the circular buffer. Then the circular buffer begins playing. As it plays, it notifies the synthesizer when it has finished playing a buffer. The synthesizer in turn synthesizes another buffer of waveform data, which is then written to the circular buffer. In this way, the synthesizer stays ahead of the circular buffer's playback position by one buffer. This process continues until the synthesizer is stopped. The result is seamless playback of the synthesizer's output.
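To make the buffering scheme more concrete, here is a minimal sketch of a circular buffer divided into smaller blocks. It does not talk to any audio device and is not the toolkit's output library; `BlockRing`, `Write`, `Play`, and `Filled` are hypothetical names, and "playing" a block is simulated by simply handing it back to the caller.

```csharp
using System;

// Hypothetical circular buffer made of fixed-size blocks.
public sealed class BlockRing
{
    private readonly float[][] blocks;
    private int writeIndex; // next block the synthesizer will fill
    private int playIndex;  // next block the output will play
    private int filled;     // blocks written but not yet played

    public BlockRing(int blockCount, int blockSize)
    {
        blocks = new float[blockCount][];
        for (int i = 0; i < blockCount; i++)
        {
            blocks[i] = new float[blockSize];
        }
    }

    public int Filled
    {
        get { return filled; }
    }

    // Lets the synthesizer fill the next free block.
    // Returns false if every block is still waiting to be played.
    public bool Write(Action<float[]> synthesize)
    {
        if (filled == blocks.Length)
        {
            return false;
        }

        synthesize(blocks[writeIndex]);
        writeIndex = (writeIndex + 1) % blocks.Length;
        filled++;
        return true;
    }

    // Simulates the output finishing one block.
    // Returns null on underrun, which is what you hear as a glitch.
    public float[] Play()
    {
        if (filled == 0)
        {
            return null;
        }

        float[] block = blocks[playIndex];
        playIndex = (playIndex + 1) % blocks.Length;
        filled--;
        return block;
    }
}
```

A caller would prime the ring with two `Write` calls, then call `Write` once each time `Play` reports that a block has finished, so that one synthesized block is always queued behind the one being played.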

Because it is necessary for the synthesizer to stay one buffer ahead of the current playback position, there is a latency equal to the length of one buffer. The larger the buffers, the longer the latency. This can be noticeable when playing a software synthesizer from a MIDI keyboard. As you play the keyboard, you will notice a delay before you hear the synthesizer respond. It is desirable then to use the smallest buffers possible. A problem you can run into, however, is that if you make the buffers too small, the synthesizer will not be able to keep up; it will lag behind. The result is a glitching effect. The key is to choose a buffer size that minimizes latency while allowing the synthesizer time to synthesize sound while staying ahead of the playback position.
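The relationship between buffer size and latency is simple arithmetic: one buffer's worth of samples divided by the sample rate. The helper below is a hypothetical illustration (`Latency.Milliseconds` is not part of the toolkit), and the buffer sizes and the 44,100 Hz sample rate in the comments are assumed values chosen only for the example.

```csharp
using System;

// Hypothetical helper; not part of the toolkit.
public static class Latency
{
    // Latency of one buffer, in milliseconds.
    public static double Milliseconds(int samplesPerBuffer, int samplesPerSecond)
    {
        return 1000.0 * samplesPerBuffer / samplesPerSecond;
    }
}

// Assuming a 44,100 Hz sample rate:
//   Latency.Milliseconds(2048, 44100) is about 46.4 ms
//   Latency.Milliseconds(512, 44100) is about 11.6 ms
```

Halving the buffer size halves the latency, but it also halves the time the synthesizer has to produce each buffer.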

The key challenge in designing this toolkit has been to decide what classes to create and to design them in such a way that they can work together to simulate the functionality of a typical synthesizer. Moreover, I wanted to make it easy for you to create your own synthesizers by simply plugging in components that you create. This took quite a bit of thought and some trial and error, but I think I have arrived at a design that meets my goals. Below I describe some of the toolkit's classes. There are a lot of classes in the toolkit, but the ones below are the most important.

The Component class is an abstract class representing functionality common to all effect and synthesizer components. Both the SynthComponent class and the EffectComponent class are derived from the Component class.

The Component class has the following properties:

- Name
- SamplesPerSecond

The Name property is simply the name of the Component. You can give your Component a name when you create it. For example, you may want to name one of your oscillators "Oscillator 1." The SamplesPerSecond is a protected property and provides derived classes with the sample rate value.

The SynthComponent class is an abstract class representing functionality common to all synthesizer components. A toolkit synthesizer component is very much analogous to the modules used in analog synthesizers. Oscillators, ADSR envelopes, LFOs, filters, etc. are all examples of synthesizer components.

The SynthComponent class has the following methods and properties:

- Methods
  - Synthesize
  - Trigger
  - Release
- Properties
  - Ordinal
  - SynthesizeReplaceEnabled

The Synthesize method causes the synthesizer component to synthesize its output. The output is placed in a buffer and can be retrieved later. The Trigger method triggers the component based on a MIDI note; it tells the component which note has triggered it to synthesize its output. The Release method tells the component when the note that previously triggered it has been released. All of these methods are abstract; you must override them in your derived class.

The Ordinal property represents the ordinal value of the component. That doesn't tell you very much. I will have more to say about the Ordinal property later. The SynthesizeReplaceEnabled property gets a value indicating whether the synthesizer component overwrites its buffer when it synthesizes its output. In some cases, you will want your component to overwrite its buffer. However, in other cases when a component shares its buffer with other components, it can be useful for the component to simply add its output to the buffer rather than overwrite it.

The toolkit comes with a collection of SynthComponent derived classes, enough to create a basic subtractive synthesizer. These components should be treated as a starting point for components you write.

There are two classes that are derived from the SynthComponent class: MonoSynthComponent and StereoSynthComponent. These are the classes that you will derive your synth components from. They represent synthesizer components with monophonic output and stereophonic output respectively. Both classes have a GetBuffer method. In the case of the MonoSynthComponent class, the GetBuffer method returns a single dimensional array of type float. The StereoSynthComponent's GetBuffer method returns a two dimensional array of type float. In both cases the array is the underlying buffer that the synth component writes its output to.
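To give a feel for how these classes fit together, here is a rough sketch of a monophonic component. The classes are stand-ins with a `Sketch` prefix, not the toolkit's classes, and every signature in them (constructor arguments, the parameters of `Synthesize` and `Trigger`, the settable `SynthesizeReplaceEnabled`) is an assumption made for illustration. The point is the difference between overwriting a buffer and adding to a shared one.

```csharp
using System;

// Stand-in for Component: a name plus the sample rate for derived classes.
public abstract class SketchComponent
{
    private readonly string name;
    private readonly int samplesPerSecond;

    protected SketchComponent(string name, int samplesPerSecond)
    {
        this.name = name;
        this.samplesPerSecond = samplesPerSecond;
    }

    public string Name { get { return name; } }
    protected int SamplesPerSecond { get { return samplesPerSecond; } }
}

// Stand-in for SynthComponent; all signatures here are assumed.
public abstract class SketchSynthComponent : SketchComponent
{
    protected SketchSynthComponent(string name, int samplesPerSecond)
        : base(name, samplesPerSecond)
    {
        SynthesizeReplaceEnabled = true;
    }

    public abstract int Ordinal { get; }
    public bool SynthesizeReplaceEnabled { get; set; }

    public abstract void Synthesize(int offset, int count);
    public abstract void Trigger(int note);
    public abstract void Release();
}

// A mono sine oscillator that writes into a buffer it is given.
public sealed class SketchSineOscillator : SketchSynthComponent
{
    private readonly float[] buffer;
    private double phase;
    private double increment;
    private bool playing;

    public SketchSineOscillator(string name, int samplesPerSecond, float[] buffer)
        : base(name, samplesPerSecond)
    {
        this.buffer = buffer;
    }

    // No inputs, so the ordinal is 1.
    public override int Ordinal { get { return 1; } }

    public float[] GetBuffer() { return buffer; }

    public override void Trigger(int note)
    {
        // Equal temperament: MIDI note 69 is A above middle C, 440 Hz.
        double frequency = 440.0 * Math.Pow(2.0, (note - 69) / 12.0);
        increment = 2.0 * Math.PI * frequency / SamplesPerSecond;
        phase = 0.0;
        playing = true;
    }

    public override void Release() { playing = false; }

    public override void Synthesize(int offset, int count)
    {
        for (int i = offset; i < offset + count; i++)
        {
            float sample = playing ? (float)Math.Sin(phase) : 0f;

            if (SynthesizeReplaceEnabled)
            {
                buffer[i] = sample;  // overwrite the buffer
            }
            else
            {
                buffer[i] += sample; // mix into the shared buffer
            }

            if (playing) phase += increment;
        }
    }
}
```

If two such oscillators share one array, the first can run with `SynthesizeReplaceEnabled` set to true and the second with it set to false, so the array ends up holding the sum of both. A stereo component would follow the same pattern with a two-dimensional array.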

The EffectComponent class is an abstract class representing functionality common to all effect components. Effects reside at the global level; they process the output of all of the Voices currently playing. At this time, the toolkit comes with two EffectComponent derived classes: Chorus and Echo.

The EffectComponent class has the following methods and properties:

- Methods
  - Process
  - Reset
- Properties
  - Buffer

The Process method causes the effect to process its input. In other words, Process causes it to apply its effect algorithm to its input. The Reset method causes the effect to reset itself. For example, Reset would cause an Echo effect to clear its delay lines. The Buffer property represents the buffer the effect uses for its input and output. An effect should read from its buffer, apply its algorithm, and write the result back to the buffer.
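As an illustration of the Process/Reset/Buffer pattern, here is a small feedback echo. It is a sketch under assumptions, not the toolkit's Echo class: `SketchEffect` and `SketchEcho` are hypothetical names, the buffer is mono for simplicity, and the constructor parameters are invented for the example.

```csharp
using System;

// Stand-in for EffectComponent; member shapes are assumed.
public abstract class SketchEffect
{
    public float[] Buffer { get; set; }

    public abstract void Process();
    public abstract void Reset();
}

// A simple feedback echo: output = input + feedback * delayed output.
public sealed class SketchEcho : SketchEffect
{
    private readonly float[] delayLine;
    private readonly float feedback;
    private int position;

    public SketchEcho(int delayInSamples, float feedback)
    {
        if (delayInSamples < 1)
        {
            throw new ArgumentOutOfRangeException("delayInSamples");
        }

        delayLine = new float[delayInSamples];
        this.feedback = feedback;
    }

    // Reads each sample from Buffer, adds the delayed signal,
    // and writes the result back to the same position.
    public override void Process()
    {
        float[] buffer = Buffer;

        for (int i = 0; i < buffer.Length; i++)
        {
            float output = buffer[i] + feedback * delayLine[position];
            delayLine[position] = output;
            buffer[i] = output;
            position = (position + 1) % delayLine.Length;
        }
    }

    // Clears the delay line so no old echoes remain.
    public override void Reset()
    {
        Array.Clear(delayLine, 0, delayLine.Length);
        position = 0;
    }
}
```

Because the delay line survives between calls to `Process`, echoes carry over from one buffer to the next. Calling `Reset` is what silences them, for example when the synthesizer is stopped.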

Voice Class

The Voice class is an abstract class that represents a voice within a synthesizer. You will derive your own voice classes from this class. The Voice class is derived from the StereoSynthComponent class, and overrides and implements the SynthComponent's abstract members.

The Synthesizer class is the heart and soul of the toolkit. It drives synthesizer output by periodically writing the output from its voices to the main buffer. It also provides file management for a synthesizer's parameters.

The SynthHostForm class provides an environment for running your synthesizer. Typically, what you will do is create a System.Windows.Forms based application. After adding the necessary references to the required assemblies, you derive your main Form from the SynthHostForm class. It has the following members you must override:

- Methods
  - CreateSynthesizer
  - CreateEditor
- Properties
  - HasEditor

The CreateSynthesizer method returns a synthesizer initialized with a delegate that creates your custom Voices. This will become clearer in Part II. The CreateEditor method returns a Form capable of editing your synthesizer's parameters, and the HasEditor property gets a value indicating whether you can provide an editor Form. Providing an editor Form is optional. If you don't want to create an editor, you can rely on the SynthHostForm to provide a default editor. If no editor is available, CreateEditor should throw a NotSupportedException.

The Voice class treats its synthesizer components as nodes in a directed acyclic graph. Each component has an Ordinal property (as mentioned above). The value of this property is one plus the sum of all of the Ordinal values of the components connected to it. For example, an LFO component with no inputs has an Ordinal value of 1. An oscillator component with two inputs for frequency modulation would have an Ordinal value of 1 plus the sum of the Ordinal values of the two FM inputs. Below is a graph of a typical synthesizer architecture. Each component is marked with its Ordinal value:

When you create your own voice class, you derive it from the abstract Voice base class. You add components to the Voice with a call to its AddComponent method. The Voice adds components to a collection and sorts it by the Ordinal value of each component. As the Synthesizer is running, it periodically calls Synthesize on all of its voices. If a voice is currently playing, it iterates through its collection of components calling Synthesize on each one.

Because the components are sorted by their Ordinal value, the order in which a component synthesizes its output is in sync with the other components. In the example above Oscillator 1's frequency is being modulated by LFO 1 and Envelope 1. The outputs of both LFO 1 and Envelope 1 need to be synthesized before that of Oscillator 1; Oscillator 1 uses the outputs of both LFO 1 and Envelope 1 to modulate its frequency. Sorting by Ordinal value ensures that components work together in the correct order.
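The Ordinal rule and the sort can be sketched as follows. This is not the Voice class's actual implementation; `Node` and `Ordering.SortByOrdinal` are hypothetical names used only to show why sorting by Ordinal yields a valid processing order.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical graph node standing in for a synthesizer component.
public sealed class Node
{
    private readonly List<Node> inputs = new List<Node>();

    public Node(string name, params Node[] inputs)
    {
        Name = name;
        this.inputs.AddRange(inputs);
    }

    public string Name { get; private set; }

    // One plus the sum of the Ordinal values of all connected inputs.
    public int Ordinal
    {
        get
        {
            int sum = 1;
            foreach (Node input in inputs)
            {
                sum += input.Ordinal;
            }
            return sum;
        }
    }
}

public static class Ordering
{
    // Returns the nodes in ascending Ordinal order.
    public static List<Node> SortByOrdinal(IEnumerable<Node> nodes)
    {
        List<Node> sorted = new List<Node>(nodes);
        sorted.Sort((a, b) => a.Ordinal.CompareTo(b.Ordinal));
        return sorted;
    }
}
```

Every Ordinal is at least 1, so a component's Ordinal is always strictly greater than that of each of its inputs. Sorting in ascending order therefore places every input ahead of the component that reads it. Components with equal Ordinal values cannot feed one another, so their relative order does not matter. In this sketch, an oscillator fed by an LFO and an envelope gets an Ordinal of 3 and is sorted after both.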

This approach to organizing and implementing signal flow through a synthesizer was very much inspired by J. Paul Morrison's website on flow-based programming. The idea is that you have a collection of components connected in some way with data flowing through them. It is easy to change and rearrange components to create new configurations. I'm a strong believer in this approach.

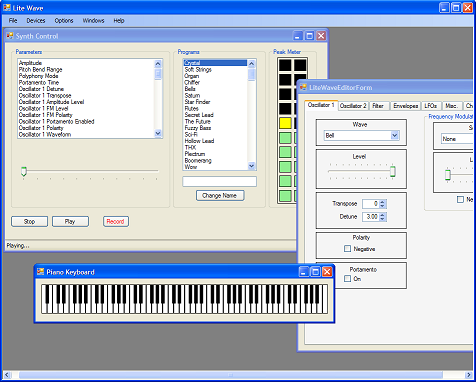

The downloadable Visual Studio solution for this part is the same one used for Parts II and III. It includes the toolkit plus two demo projects. One is for a simple synthesizer. The other is for a "Lite Wave" synthesizer that is quite a bit more complex.

The toolkit is dependent on my MIDI toolkit for MIDI functionality. The MIDI toolkit is in turn dependent on several of my other projects. I have included and linked the proper assemblies in the download, so the Solution should compile out of the box.

This has been a brief overview of the synth toolkit. Hopefully, I will be able to improve this article over time as questions come up. It has been challenging to write because there is a lot of information to cover. On the one hand, I have wanted to give a useful overview of the toolkit. On the other hand, I did not want to get bogged down in details. Time will tell if I have struck the right balance.

If you have an interest in softsynths, I hope I've piqued your interest enough for you to continue on to Part II. It provides a more in-depth look by showing you how to create a simple synthesizer using the toolkit.

- July 16, 2007 - First version released

- July 20, 2007 - Added an MP3 file demo of one of the sounds produced by the Lite Wave synthesizer to the download section

- August 16, 2007 - Second version released. DirectX removed and waveform recording added