With the rise of cloud-hosted solutions offered under as-a-service models, organizations purchase subscriptions for their modern applications to support the high-end needs of clients and users. This lets them avoid the management, development, and operational costs associated with running such services themselves. Azure Cognitive Services is one such service: it offers state-of-the-art solutions for machine learning (ML) and enables developers to quickly add smart components to their applications. In this article, I will demonstrate how to integrate the Cognitive Services SDKs in a .NET Core based application and explore how real-world scenarios can be tackled using ML services offered by Microsoft.

Cognitive Services are made available as PaaS components that you can build your own applications on top of. They include an Emotion API that detects the emotion of the person in an image and labels it with one of several known emotions, such as happy, sad, or angry. Cognitive Services are a set of ML/AI models trained by Microsoft; Azure uses these models to process your input, then returns a response with an emotion and an indication of confidence in that emotion. The confidence shows how accurate Cognitive Services thinks the result is. Thus, instead of training and publishing your own ML models, you can use the models trained and published by Microsoft in Azure Cognitive Services.

Currently, this service is being incorporated into the Face API and the SDKs will be updated for the Face API to support emotion detection, too. I will be using the Face API to detect emotions in photos and will demonstrate how you can extend the SDK itself to write your own helper functions to parse the response and generate a UI.

Setting up Environment

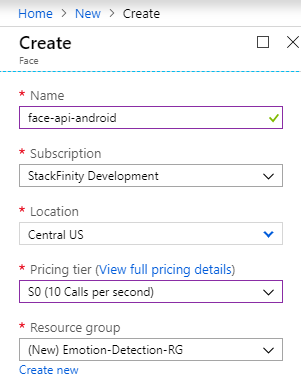

I assume that you already have an Azure subscription and that you have installed and configured .NET Core, as well as Xamarin if you want to explore the Android sample. In the Azure portal, search for "Face" and select the "Face" solution by Microsoft under the AI category. On the Create tab, enter a name, then select the subscription and pricing tier. Since we are merely testing, you can select any location (for production purposes, read the Conclusion section). For the resource group, use the name "Emotion-Detection-RG".

You can also use Docker containers for Azure Cognitive Services and host these instances yourself. For a quick start, I recommend using Azure as the hosting service rather than the Docker alternative.

Integrating with .NET Core

First, I’ll demonstrate how you can utilize the Face API SDK in a common .NET Core application. This will help you continue to the Xamarin application and integrate the SDK there.

Create a new .NET Core application either through the terminal or using your favorite IDE.

Next, install the NuGet package "Microsoft.Azure.CognitiveServices.Vision.Face" into the project. For example, you could use the following command in NuGet Package Manager Console for that:

    PM> Install-Package Microsoft.Azure.CognitiveServices.Vision.Face -Version 2.2.0-preview

For this part, I will use an offline image file and detect the emotion in that picture. The code to use is quite simple:

    // Requires the namespaces System, System.IO, System.Threading.Tasks,
    // Newtonsoft.Json, and Microsoft.Azure.CognitiveServices.Vision.Face.
    static async Task detectEmotion(string filepath)
    {
        // Authenticate against the Face API with the subscription key.
        using (var client = new FaceClient(
            new ApiKeyServiceClientCredentials("<your-face-api-key-here>"),
            new System.Net.Http.DelegatingHandler[] { }))
        {
            client.Endpoint = faceEndpoint;

            // Send the image file to Azure as a stream.
            using (var filestream = File.OpenRead(filepath))
            {
                var detectionResult = await client.Face.DetectWithStreamAsync(
                    filestream,
                    returnFaceId: true,
                    returnFaceAttributes: emotionAttribute,
                    returnFaceLandmarks: true);

                // The result contains one entry per detected face.
                foreach (var face in detectionResult)
                {
                    Console.WriteLine(JsonConvert.SerializeObject(face.FaceAttributes.Emotion));

                    var highestEmotion = getEmotion(face.FaceAttributes.Emotion);
                    Console.WriteLine($"This face has emotional traits of {highestEmotion.Emotion} ({highestEmotion.Value} confidence).");
                }
            }
        }
    }

In this code, I pass in the file path, and the program reads the file and sends the file as a stream to Azure, where it is processed and analyzed. Notice that most of these objects are .NET Core native types and support native .NET Framework features such as async/await.

I left the faceEndpoint and emotionAttribute variables out, because they are accessible in the code and quite self-explanatory.
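
For readers following along, the two omitted values might be declared roughly as in the sketch below. It assumes the 2.2.0-preview package installed earlier, where the attribute list is a list of `FaceAttributeType` values; newer package versions may differ. The `FaceSettings` class name is hypothetical, and the region in the URL is a placeholder that you replace with the endpoint shown for your own resource in the Azure portal.

```csharp
using System.Collections.Generic;
using Microsoft.Azure.CognitiveServices.Vision.Face.Models;

// Hypothetical holder class, used here only so the sketch compiles on its own.
static class FaceSettings
{
    // Placeholder endpoint: substitute the region of your own Face resource.
    public const string faceEndpoint =
        "https://<your-region>.api.cognitive.microsoft.com";

    // Ask the service to return only the emotion attribute for each face.
    public static readonly IList<FaceAttributeType> emotionAttribute =
        new List<FaceAttributeType> { FaceAttributeType.Emotion };
}
```

In the console program, these would more likely sit as static fields next to detectEmotion, so that the method can reference them without a class prefix.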

Since the SDK does not provide a built-in method to know which emotion was the highest, I wrote a helper function to detect the value for the highest emotion:

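    // Requires Microsoft.Azure.CognitiveServices.Vision.Face.Models for the Emotion type.
    static (string Emotion, double Value) getEmotion(Emotion emotion)
    {
        // Each emotion (Anger, Happiness, ...) is exposed as a property.
        var emotionProperties = emotion.GetType().GetProperties();

        // Start with Anger as the current maximum.
        (string Emotion, double Value) highestEmotion = ("Anger", emotion.Anger);

        foreach (var e in emotionProperties)
        {
            // Keep the property with the highest confidence value seen so far.
            if (((double)e.GetValue(emotion, null)) > highestEmotion.Value)
            {
                highestEmotion.Emotion = e.Name;
                highestEmotion.Value = (double)e.GetValue(emotion, null);
            }
        }

        return highestEmotion;
    }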

In this code, I am using the C# tuples to return a maximum emotion and the confidence value that is associated with it. Utilizing reflection (since the JSON response is now in the form of a runtime object), I am reading the list of emotion values and capturing the maximum of these values. This maximum value is then used to render the output. I will use this function in Xamarin app later on as well.
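
To try the reflection idea without calling Azure, the same lookup can be sketched against a stand-in class. Everything named here is an assumption for illustration: `EmotionScores` is a hypothetical class that mirrors the property names of the SDK's emotion model, and `EmotionHelper.GetHighest` is a LINQ variant of the helper above rather than part of the SDK.

```csharp
using System;
using System.Linq;

// Hypothetical stand-in that mirrors the SDK's emotion model.
public class EmotionScores
{
    public double Anger { get; set; }
    public double Contempt { get; set; }
    public double Disgust { get; set; }
    public double Fear { get; set; }
    public double Happiness { get; set; }
    public double Neutral { get; set; }
    public double Sadness { get; set; }
    public double Surprise { get; set; }
}

public static class EmotionHelper
{
    // Returns the name and value of the highest-scoring double property.
    // Throws InvalidOperationException if the object has no double properties.
    public static (string Emotion, double Value) GetHighest(object scores)
    {
        return scores.GetType()
            .GetProperties()
            .Where(p => p.PropertyType == typeof(double))
            .Select(p => (Emotion: p.Name, Value: (double)p.GetValue(scores)))
            .OrderByDescending(t => t.Value)
            .First();
    }
}
```

Calling `EmotionHelper.GetHighest` on an `EmotionScores` instance whose `Happiness` is the largest value returns a tuple whose `Emotion` is "Happiness". Filtering on `double` properties keeps the cast safe if the model ever gains a property of another type.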

The code inside the main function only calls this function and waits for a key input to continue, as shown below:
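
The original listing for the main function is not reproduced here, so the following is only a minimal sketch of what the description implies. The class name `EmotionConsoleApp`, the default file name `sample.jpg`, and the stub body of `detectEmotion` are assumptions; in the real program, `detectEmotion` is the method shown earlier.

```csharp
using System;
using System.Threading.Tasks;

public static class EmotionConsoleApp
{
    // Stub standing in for the article's detectEmotion method, so that
    // this sketch compiles without the Face SDK.
    public static async Task detectEmotion(string filepath)
    {
        await Task.Yield();
        Console.WriteLine($"Analyzing {filepath}...");
    }

    public static void Main(string[] args)
    {
        // Hypothetical default path; pass a real image path as the first argument.
        string path = args.Length > 0 ? args[0] : "sample.jpg";

        // Block until the asynchronous call completes.
        detectEmotion(path).GetAwaiter().GetResult();

        // Wait for a key press before the console window closes.
        Console.WriteLine("Press any key to continue...");
        Console.ReadKey();
    }
}
```

Blocking with `GetAwaiter().GetResult()` avoids requiring an `async` Main, which needs C# 7.1 or later.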

Now, let’s add this module to a Xamarin application to detect the emotions of captured photos.

Adding Emotion Capabilities to a Native Xamarin App

In the Xamarin.Forms application, I created a single page that allows users to capture a picture. Xam.Plugin.Media is an open source plugin that supports cross-platform camera capture. You can follow this GitHub guide and integrate the package in your own Xamarin app.

Once integrated, all you need to do is:

- Copy the code from the .NET Core application for Face API integration.

- Write the code to capture the image and forward it to the Face API objects to detect emotion.

To keep things simple, and to roughly follow the single-responsibility principle, I created a separate class that handles the Face API calls. Its code is essentially the same as what is shown above, so I won't repeat it here. On the main page, here is the code that captures the image and then forwards it to Azure for analysis:

    private async Task captureBtn_Clicked(object sender, EventArgs e)
    {
        // Initialize the media plugin before touching the camera.
        if (await CrossMedia.Current.Initialize())
        {
            if (CrossMedia.Current.IsCameraAvailable)
            {
                // Capture a photo under a unique file name, compressed to 50% quality.
                var file = await CrossMedia.Current.TakePhotoAsync(
                    new Plugin.Media.Abstractions.StoreCameraMediaOptions
                    {
                        Name = Guid.NewGuid().ToString(),
                        CompressionQuality = 50
                    });

                if (file == null)
                {
                    await DisplayAlert("Cannot capture",
                        "We cannot store the file in the system, possibly permission issues.",
                        "Okay");
                }
                else
                {
                    // Show the captured photo, then send it to Azure for analysis.
                    emotionResults.Text = "Processing the image...";
                    imageView.Source = ImageSource.FromStream(() =>
                    {
                        return file.GetStream();
                    });
                    await processImage(file);
                }
            }
            else
            {
                await DisplayAlert("Cannot select picture",
                    "Your device needs to have a camera, or needs to support photo selection.",
                    "Okay");
            }
        }
        else
        {
            await DisplayAlert("Problem",
                "Cannot initialize the low-level APIs.", "Okay");
        }
    }

Upon successful build, and running on an Android device, the following result was achieved:

You can capture and send the images to the server to analyze and detect emotion of the user in the image stream — or you can follow an even more advanced path and use the streaming service for Azure Cognitive Services to analyze the image stream, which has a benefit of almost zero latency.

Cleaning Up Resources

Once you are done, it is good practice to delete the resources you created on Azure so that you do not incur unnecessary charges. If you followed the article, you have a single resource group named "Emotion-Detection-RG"; find and delete that resource group to quickly remove everything that was created in this article.

Conclusion

In this article, I discussed how quick and easy it is to incorporate Azure Cognitive Services in applications to add state-of-the-art machine learning emotion sensing features. I showed how you can use the Face API of Azure Cognitive Services to add emotion detection capabilities to Xamarin applications or, more generally, to .NET Core based applications.

There are some best practices that you should consider while developing production software based on Cognitive Services:

- Your endpoint keys must not be exposed to external users. Keep them safe at all times, for example in Azure Key Vault, or by using a proxy application as middleware.

- Your Cognitive Services resource might have a rate or quota limit, so keep the calling code inside a try…catch or a similar block to handle situations where Azure rejects your request for quota violations.

- Since Azure Cognitive Services is available in different regions across the globe, consider using the instance closest to your users to reduce latency.
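
The try…catch advice above can be sketched as a small retry helper with exponential backoff. This is an illustration under assumptions, not the SDK's own mechanism: `RateLimitException` is a hypothetical stand-in for whatever error your client raises when Azure rejects a request for exceeding its quota, and `RetryHelper.WithRetryAsync` is a name made up for this example.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical stand-in for the error raised when the quota is exceeded.
public class RateLimitException : Exception
{
    public RateLimitException(string message) : base(message) { }
}

public static class RetryHelper
{
    // Runs the operation, retrying on RateLimitException with a delay that
    // doubles after each failed attempt. The exception from the final
    // attempt is not caught and propagates to the caller.
    public static async Task<T> WithRetryAsync<T>(
        Func<Task<T>> operation, int maxAttempts = 3, int initialDelayMs = 500)
    {
        int delayMs = initialDelayMs;
        for (int attempt = 1; ; attempt++)
        {
            try
            {
                return await operation();
            }
            catch (RateLimitException) when (attempt < maxAttempts)
            {
                await Task.Delay(delayMs);
                delayMs *= 2;
            }
        }
    }
}
```

In a real application, the lambda passed to `WithRetryAsync` would wrap the Face API call and translate the client's quota error into the exception type being retried. Errors other than quota violations are deliberately not retried here.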

On Microsoft Azure, you own your data, and to follow the GDPR rules in Europe, you can request Microsoft to delete the data that your users have generated while using the service.

History

- 15th April, 2019: Initial version