
There are plenty of online resources about LINQ and DLinq (later renamed LINQ to SQL). However, at the time of writing, I could only find examples that demonstrate direct interaction between the UI and the database using queries embedded in the code-behind file. In this article and the accompanying code, I demonstrate DLinq in more advanced enterprise application scenarios. I don't intend to provide a catalog of possible patterns or another DLinq tutorial; a good overview of DLinq is available on Scott Guthrie's blog. My focus is on two popular architectural techniques: the classic three-tier architecture and the new kid on the block, the MVP (Model View Presenter) architecture. Along the way, I cover more advanced concepts in ASP.NET and DLinq.

The accompanying code consists of multiple layers of infrastructure components, along with two websites. The first website demonstrates a three-tier enterprise application with CRUD functionality that uses DLinq in the data access layer. The customer listing page in this demo website uses a RAD approach by binding a gridview to an object datasource control. The details/edit and insert web pages use the code behind model along with UI controls such as FormView to implement DML operations on the Customer entity, as well as report the five recent order statistics of that customer. Section A of this article describes the design in detail.

The second website (aptly named MVPWeb) incorporates the MVP pattern into its architecture. More specifically it uses a variant of MVP, namely, supervising controller. More on this is in Section B of this article. The flow comprises multiple layers including the UI, presentation, service, business, DAO and data layers using data transfer objects (DTO) as shared data structures between the layers. This website also uses DLinq in data access and transfer object layers.

Another point that I address is optimization. The attached "Tests" solution measures the load time of the DLinq DataContext when it is instantiated using the typical pattern that is cited in most online reference articles. I suggest an optimization for this within the constraints of the unit of work pattern.

I used the March 2007 CTP Orcas VPC image to develop the attached solution. With SQL Server 2005, Microsoft has deprecated good old Northwind in favor of the new AdventureWorks database. Hence, I've attached a 2005 version of my favorite Northwind database, which is a requirement for my code to run. The attached "Tests" solution requires a few stored procedures that I have packaged into the Northwind_custom_SPs.sql script.

Update - A Visual Studio 2008 beta 2 upgrade of the original Orcas beta 1 version of the source code for "demo solution" is now available for download.

Most DLinq examples available online suggest creating a new DataContext instance for each operation. This follows the unit of work pattern, as used by NHibernate's ISession (obtained from an ISessionFactory), which is basically a mechanism for tracking changes to objects during its lifetime. According to Martin Fowler, an object that implements the unit of work pattern keeps track of state changes within a transaction and fires DML queries in a batch at the very end to synchronize that state with the database. DLinq implements this through a change tracking service that exists in the scope of a DataContext instance. However, the mapping source file generated by SqlMetal can be huge for enterprise databases, and if every new DataContext reads it again, creating one per unit of work can degrade performance. I have optimized this scenario by creating a singleton MappingSource instance within a context factory. Each unit of work (DataContext) begins with a call to ContextFactory.CreateDataContext(), and the factory ensures that the mapping source is read only once during the AppDomain's lifetime.
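The caching idea can be sketched independently of the DLinq API. The following is a minimal illustration, not the code from the accompanying solution: FakeMappingSource, FakeContext and ContextFactorySketch are hypothetical stand-ins, and Lazy<T> assumes .NET 4 or later (a static field initializer would serve the same purpose on earlier runtimes).

```csharp
using System;

// Hypothetical stand-in for an expensive-to-build mapping
// (this is NOT the DLinq MappingSource type).
public sealed class FakeMappingSource
{
    // Counts how many times the mapping has been built.
    public static int LoadCount;

    public static FakeMappingSource Load()
    {
        // A real factory would parse the embedded XML mapping here.
        LoadCount++;
        return new FakeMappingSource();
    }
}

// Hypothetical stand-in for a cheap, short-lived unit of work.
public sealed class FakeContext
{
    public FakeMappingSource Mapping { get; private set; }

    public FakeContext(FakeMappingSource mapping)
    {
        Mapping = mapping;
    }
}

public static class ContextFactorySketch
{
    // Lazy<T> builds the value once, on first access, and is
    // thread-safe by default.
    private static readonly Lazy<FakeMappingSource> SharedMapping =
        new Lazy<FakeMappingSource>(() => FakeMappingSource.Load());

    // Every call returns a new context that reuses the shared mapping.
    public static FakeContext CreateContext()
    {
        return new FakeContext(SharedMapping.Value);
    }
}
```

Each call to CreateContext() yields a distinct context object, while the mapping is built a single time no matter how many contexts are created.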

Another aspect of performance that I was interested in was DML translation. DLinq translates its state changes into parameterized SQL statements using sp_executesql. By default, DLinq ORM mapping relates object properties to table columns. This default mapping results in the generation of DML CRUD statements (insert, update, delete, select). Alternatively, the Orcas designer allows for stored procedure mapping. This can be done in the DBML file by right clicking the entity in the designer and choosing the "Configure Behaviour" selection. Interestingly, the DBML designer will only let you map insert, update and delete entity actions to custom stored procedures.

With default ORM binding, DML statements are generated and fired using sp_executesql. When stored procedure binding is defined, the mapped stored procedures are executed using sp_executesql. Now SQL Server caches execution plans for parameterized queries. The appendix lists the generated queries that were captured in SQL profiler. It may be noted that the generated DML statements do not specify a DBO schema.

The attached Excel file contains two worksheets. The first sheet compares load times using the regular/typical approach versus the optimized approach. The second sheet compares execution times for CRUD operations using the two modes of ORM mapping: entity to table and entity behaviour to stored procedure. It is observed that the latter method of ORM mapping (using stored procedure) is more performant.

I will begin with an overview of the website that uses a three-tier architecture incorporating DLinq, followed by a dive into the other website that implements an N-tier design using the MVP pattern and DLinq. Both architectures incorporate DLinq in the data layer. When the original draft of this article was published, I received enquiries from some readers about my pattern of using DLinq entities across the layers.

One aspect of my architecture is the segregation of the generated DataContext and DLinq entity classes into two separate layers. DLinq plugs into the data layer in both the regular and MVP website versions. A detailed explanation of the layers that constitute the call stack follows in Section B of this article, which delves into the MVP website. For now, it suffices to say that the DLinq classes generated by SqlMetal are used in two layers: Northwind.Data (Data Access layer) and Northwind.Data.DTO (Transfer Object layer). I have embedded the mapping file along with the entity classes in the Transfer Objects assembly.

The idea is that the UI layer references the transfer objects assembly (Northwind.Data.DTO.dll) and does not have direct access to the DLinq DataContext. The DAL (Northwind.Data.dll) contains the derived DataContext, DAO classes and ContextFactory. DAO classes act as the gateway and have a direct reference to the DataContext. In both websites, DLinq entities constituting the transfer objects layer move across the layers all the way up to the UI layer, where they are used for data binding. In the next section I touch upon the pros and cons of this approach as well as the scope of this article.

As described earlier in the section on performance, I have created a ContextFactory that resides in the Data Access layer and is called by the Data Access object (DAO) classes. This factory optimizes DataContext loading by using a singleton mapping source that is built by reading an embedded mapping file resource from the assembly's resource stream. The assembly Northwind.Data.DTO contains entity classes, as well as the embedded ORM mapping resource file.

static MappingSource CreateMappingSource()

{

string map = ConfigurationManager.AppSettings[Constants.MappingSourceKey];

if (String.IsNullOrEmpty(map)) map = Constants.DefaultMappingSource;

Assembly a = Assembly.Load(Constants.MappingAssembly);

Stream s = null;

if(a != null) s = a.GetManifestResourceStream(map);

if (s == null) s =

a.GetManifestResourceStream(Constants.DefaultMappingSource);

if(s == null)

throw new InvalidOperationException(

String.Format(@"The XML mapping file [{0}]

must be an embedded resource in the TransferObjects project.",

map));

return XmlMappingSource.FromStream(s);

}

Some readers were interested in the practicality of my architecture in multi tier paradigms such as SOA. SOA is not within the scope of this article. However, the issues of serialization, state maintenance and optimistic concurrency which I have talked about in this article are also present in SOA and multi tier environments with disconnected data. My design of packaging DLinq entities into a transfer objects layer that is shared across the layers is ideal when UI and middleware layers exist on the same physical tier. In circumstances that involve access control constraints on the data object that is returned to the UI or when middleware (or web service) exists on a different tier, one may consider alternatives such as serializable data transfer objects that are constructed by assemblers or plain XML.

Barring circular references, DLinq entity serialization is supported in an SOA scenario. The VS designer allows generation of [DataContract] classes for serialization. XmlSerializer can also handle serialization of DLinq classes. However, at the time of writing this article, DLinq entity classes are not marked [Serializable] and cannot be serialized using BinaryFormatter.

Section A: Typical 3-tier Architecture using DLinq

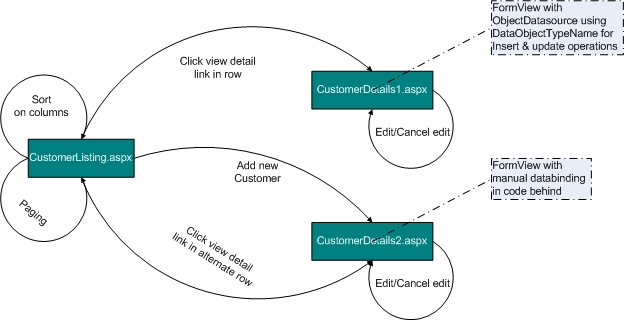

The generic three-tier architecture consists of UI, business and data layers along with a data transfer object (DTO) that moves across the layers. DTO may have different connotations in Java, Microsoft and the Martin Fowler world. In Martin Fowler's context, a DTO works in conjunction with an assembler and serves to reduce the chattiness of client-server RPC. However, I tend to align myself more with Sun's "Transfer Object" pattern, which essentially describes a data structure that is shared across the layers. The page flow is described in the following state transition diagram.

For the sake of demonstration, I have two versions of the CustomerDetails page. CustomerDetails1.aspx consists of a FormView bound to an ObjectDatasource control for select and update operations. Customer DTO is passed as an argument to the update method in CustomerBO. The DataObjectTypeName property of the ObjectDatasource control points to the Customer type. CustomerDetails2.aspx, on the other hand, overrides ItemInserting, ItemUpdating and ModeChanging events of FormView to call methods on the business object (CustomerBO) and to do data binding. The following is a code snippet for updates.

protected void fvCust_ItemUpdating(object sender, FormViewUpdateEventArgs e)

{

Customer c = Util.MarshalObject<Customer>(fvCust);

CustomerBO cbo = new CustomerBO(Util.CreateFactoryInstance());

if (c != null) cbo.UpdateCustomer(c);

SwitchMode(FormViewMode.ReadOnly);

}

IDataSource in the sequence diagram represents an ObjectDataSource control that binds data to the GridView. Setting the ObjectDataSource's TypeName property to Northwind.Business.CustomerBO is not sufficient to instantiate the type, as CustomerBO does not have a default constructor. Hence, the code-behind handles the ObjectCreating event of the ObjectDataSource control, infers the type of DAOFactory from the application configuration file and passes an instance of it to the overloaded constructor of CustomerBO. The business object talks to a DAO (Data Access Object). BaseBO (the base entity component) holds a reference to a DAOFactory that is responsible for instantiating a DAO. The sequence diagram above describes the flow in detail.

protected void CustomersDS_ObjectCreating(object sender,

ObjectDataSourceEventArgs e)

{

CustomerBO bo = new CustomerBO(Util.CreateFactoryInstance());

e.ObjectInstance = bo;

}

The entities described in the ORM mapping (generated by SqlMetal.exe, in my case) are being used in a Transfer Object pattern. So, they are being used as shared data structures across the layers. These entities which map to the Northwind database schema were generated using the following command.

sqlmetal /server:. /database:northwind /map:c:\northwindMapping.xml /pluralize /code:c:\NorthwindDTOs.cs /language:csharp /namespace:Northwind.Data.DTO

Paging and sorting using ObjectDatasource is as simple as setting AllowPaging and AllowSorting to true on the GridView and configuring the ObjectDatasource properties of SelectCountMethod, SortParameterName, MaximumRowsParameterName and EnablePaging. CustomerBO's GetCustomersByPage method (configured in the TypeName and SelectMethod properties of ObjectDatasource) invokes the corresponding method on the DAO. For details, please refer to CustomerListing.aspx in the accompanying "Web" project. The business object (BO) obtains a reference to the corresponding DAO through a DAOFactory using the abstract factory pattern.

Control flow at the level of business to data layers in the call stack remains the same for both regular and MVP website versions. The difference lies in how it gets to the business layer. More on this is in Section B of the article. GridView bound to an ObjectDatasource control automatically flips sort expressions when the user clicks on column headers. The DAO translates this into DLinq queries that return an enumerable set of DTO objects, as shown in the following snippet.

public IEnumerable<Customer> GetCustomersByPage(int startRowIndex,

int pageSize, string sortParam)

{

NorthwindContext c = ContextFactory.CreateDataContext();

IOrderedQueryable<Customer> qry = null;

switch (sortParam)

{

case "ContactName DESC":

qry = c.Customers.OrderByDescending(cust => cust.ContactName);

break;

case "ContactName":

qry = c.Customers.OrderBy(cust => cust.ContactName);

break;

case "ContactTitle DESC":

qry = c.Customers.OrderByDescending(cust => cust.ContactTitle);

break;

case "ContactTitle":

qry = c.Customers.OrderBy(cust => cust.ContactTitle);

break;

case "CompanyName DESC":

qry = c.Customers.OrderByDescending(cust => cust.CompanyName);

break;

case "CompanyName":

qry = c.Customers.OrderBy(cust => cust.CompanyName);

break;

case "Country DESC":

qry = c.Customers.OrderByDescending(cust => cust.Country);

break;

case "Country":

qry = c.Customers.OrderBy(cust => cust.Country);

break;

case "PostalCode DESC":

qry = c.Customers.OrderByDescending(cust => cust.PostalCode);

break;

case "PostalCode":

qry = c.Customers.OrderBy(cust => cust.PostalCode);

break;

case "City DESC":

qry = c.Customers.OrderByDescending(cust => cust.City);

break;

case "City":

qry = c.Customers.OrderBy(cust => cust.City);

break;

}

return qry == null

? c.Customers.Skip(startRowIndex).Take(pageSize)

: qry.Skip(startRowIndex).Take(pageSize);

}
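The switch statement above grows by two cases for every sortable column. One possible alternative, shown here only as a sketch, is a lookup table that maps column names to key selector expressions. The CustomerRow class and SortSketch helper are hypothetical, and the example runs against an in-memory IQueryable rather than a DataContext. It assumes the sort parameter has the same "Column" or "Column DESC" shape that the switch above handles.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Linq.Expressions;

// Hypothetical entity used only for this illustration.
public sealed class CustomerRow
{
    public string ContactName { get; set; }
    public string City { get; set; }
}

public static class SortSketch
{
    // Maps a sortable column name to its key selector expression.
    private static readonly Dictionary<string, Expression<Func<CustomerRow, string>>> Keys =
        new Dictionary<string, Expression<Func<CustomerRow, string>>>
        {
            { "ContactName", c => c.ContactName },
            { "City", c => c.City }
        };

    public static IQueryable<CustomerRow> Page(IQueryable<CustomerRow> source,
        int startRowIndex, int pageSize, string sortParam)
    {
        IQueryable<CustomerRow> qry = source;
        if (!String.IsNullOrEmpty(sortParam))
        {
            // A trailing " DESC" selects descending order.
            bool descending = sortParam.EndsWith(" DESC", StringComparison.Ordinal);
            string column = descending
                ? sortParam.Substring(0, sortParam.Length - 5)
                : sortParam;

            Expression<Func<CustomerRow, string>> key;
            if (Keys.TryGetValue(column, out key))
                qry = descending ? qry.OrderByDescending(key) : qry.OrderBy(key);
        }
        // Unknown or empty sort parameters fall through to unsorted paging.
        return qry.Skip(startRowIndex).Take(pageSize);
    }
}
```

Because the selectors are expression trees rather than compiled delegates, the same table could in principle be handed to a query provider for translation, although that has not been verified against DLinq here.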

A report of the number of items, amount spent and other details of the five most recent orders placed by a customer is generated by the following LINQ query.

var dql = (from o in ctx.Orders

where o.CustomerID == CustomerId

join od in ctx.OrderDetails on o.OrderID equals od.OrderID

orderby o.OrderDate descending select new{

OrderID = o.OrderID,

OrderItems = o.OrderDetails.Count,

AmountSpent =

o.OrderDetails.Sum(ord => ord.UnitPrice * ord.Quantity),

ProductId = od.ProductID,

ShipCity = o.ShipCity,

ShipCountry = o.ShipCountry

}

).Take(5);

I am not going into the details of DLinq expressions (Abstract Syntax Trees) to SQL query transformation in this article. As stated earlier, I assume that the reader is familiar with DLinq basics. A great resource on SQL transformation and other DLinq aspects can be found here on MSDN.

As described earlier in the state transition diagram, there are two implementations of the customer details page. CustomerDetails1.aspx uses a RAD approach by binding the FormView to an ObjectDatasource.

<asp:ObjectDataSource ID="CustomerDS" runat="server"

SelectMethod="GetCustomer"

TypeName="Northwind.Business.CustomerBO"

DataObjectTypeName="Northwind.Data.DTO.Customer"

UpdateMethod="UpdateCustomer" InsertMethod="InsertCustomer"

onobjectcreating="CustomerDS_ObjectCreating"

ConflictDetection="CompareAllValues"

OldValuesParameterFormatString="cachedCustData"

>

<selectparameters>

<asp:querystringparameter DefaultValue="0"

Name="id" QueryStringField="custid"

Type="String" />

</selectparameters>

</asp:ObjectDataSource>

The same interface implements create, update and read operations on a customer. In CustomerDetails2.aspx, I use an alternative manual implementation, as ObjectDatasource is not a viable enterprise scale application tool for all cases. In both page flows, CustomerDAO takes the Customer DTO as a parameter for write operations and a customer ID parameter for the read operation.

CustomerDAO exposes a GetCustomerEnumerable method that returns IEnumerable<Customer> to make it bindable to BaseDataBoundControl (which expects an enumerable instance). The two versions of the function used by the corresponding customer details pages are shown below.

public Customer GetCustomer(string id)

{

NorthwindContext ctx = ContextFactory.CreateDataContext();

return (from c in ctx.Customers where c.CustomerID == id select c)

.SingleOrDefault();

}

public IEnumerable<Customer> GetCustomerEnumerable(string id)

{

NorthwindContext ctx = ContextFactory.CreateDataContext();

return (from c in ctx.Customers where c.CustomerID == id select c)

.ToList();

}

The following code inserts a new customer.

public string InsertCustomer(Customer cust)

{

if (cust == null) return null;

NorthwindContext c = ContextFactory.CreateDataContext();

c.Customers.Add(cust);

c.SubmitChanges();

return cust.CustomerID;

}

The biggest advantage of the change tracking mechanism in DLinq is that it infers dirty data and issues an update to only changed fields, obviating the need to do the classic IsDirty checks in the domain object. In disconnected data operations which are typical in client server architectures, update operations involve a little more work. This is because DLinq's identity-cache that is associated with a new DataContext is empty.

DLinq supports multiple ways of doing write operations with or without optimistic concurrency. Keith Farmer of Microsoft has summarized the API in the following discussion. I will briefly touch upon some typical optimistic concurrency patterns that are used in database programming and that DLinq supports.

1. Checking all columns, or some of them, against the snapshot: By default, UpdateCheck is turned on for all columns, so they all participate in concurrency conflict detection. Alternatively, only a few columns of interest can be marked for concurrency checking by specifying UpdateCheck="Never" in the mapping file for the other columns, which excludes them from the where clause of the generated SQL.

2. Timestamp: One may use a timestamp column instead of UpdateCheck for optimistic concurrency checks. Using a timestamp has drawbacks, such as having to change the design of a table that does not already have a timestamp column and the overhead of an extra select statement to retrieve the new timestamp value after a write operation (although the overhead is minimal, since the select is batched in the same call).

3. Changed columns only: An interesting pattern of conflict detection is to check the original values of only those columns that the user has modified.

update customers set name=@name where id=@id and name=@original_name

DLinq supports this pattern with the UpdateCheck="WhenChanged" column attribute definition.

I tend to think of #3 as the most "optimistic" of the three patterns. The granularity of #1 (all columns) and #2 (timestamp) is the entire row. If one session modifies column A and another session modifies column B before the first one commits its changes, the result is an optimistic concurrency conflict in patterns #1 and #2. However, pattern #3 allows both updates to take place, as there is no real conflict between sessions 1 and 2 in this case. Pattern #3 is also more efficient in data transmission, as a column is included in the where clause only if it is modified by the current session. However, if the current session left a column untouched, intending it to stay unchanged, and another session has modified its database value in the meantime, the concurrency conflict would not be detected.
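To make the difference between the three patterns concrete, the sketch below computes which columns would appear in the where clause of an update under each pattern. It is a simplified model, not DLinq's implementation: CheckPattern, ConcurrencySketch and the "RowVersion" column name are hypothetical, the primary key (which is always part of the where clause) is left out, and both dictionaries are assumed to contain the same keys.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical names; these are not DLinq types.
public enum CheckPattern { AllColumns, Timestamp, ChangedColumnsOnly }

public static class ConcurrencySketch
{
    // Assumed name of the timestamp column in this illustration.
    public const string TimestampColumn = "RowVersion";

    // Returns the columns whose original values would be compared in the
    // where clause (in addition to the primary key, which is omitted here).
    public static List<string> ColumnsInWhereClause(
        IDictionary<string, object> original,
        IDictionary<string, object> current,
        CheckPattern pattern)
    {
        IEnumerable<string> dataColumns =
            original.Keys.Where(k => k != TimestampColumn);

        IEnumerable<string> chosen;
        switch (pattern)
        {
            case CheckPattern.AllColumns:
                // Pattern 1: every data column takes part in the check.
                chosen = dataColumns;
                break;
            case CheckPattern.Timestamp:
                // Pattern 2: only the timestamp column is compared.
                chosen = new[] { TimestampColumn };
                break;
            default:
                // Pattern 3: only columns modified by the current session.
                chosen = dataColumns.Where(
                    k => !object.Equals(original[k], current[k]));
                break;
        }
        return chosen.OrderBy(k => k, StringComparer.Ordinal).ToList();
    }
}
```

With an original snapshot of City = "Berlin", Name = "Ann" and a current instance that only changes City, pattern 3 checks City alone, which is why a concurrent change to Name by another session goes unnoticed.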

Apart from UpdateCheck metadata, one may proactively define concurrency resolution behavior by specifying RefreshMode on the DataContext as follows. This defines an overwrite behavior before submitting the action query. The new DataContext has no way of determining the original state of the database from the disconnected custData instance. The call to DataContext.Refresh pulls the latest database snapshot and merges it with the custData instance resulting in a refresh of the instance.

public void UpdateCustomer(Customer custData)

{

if (custData == null) return;

NorthwindContext c = ContextFactory.CreateDataContext();

c.Customers.Attach(custData);

c.Refresh(RefreshMode.KeepCurrentValues, custData);

c.SubmitChanges();

}

Unlike the above stateless scenario, it is easy to maintain old state in a web environment. I have demonstrated old state maintenance in two ways: CustomerDetails1.aspx maintains the previous state on the client side, whereas CustomerDetails2.aspx stores it on the server side within the scope of a user's session. ObjectDataSource automatically maintains the client-side snapshot when ConflictDetection="CompareAllValues".

<asp:ObjectDataSource ID="CustomerDS" runat="server"

TypeName="Northwind.Business.CustomerBO"

DataObjectTypeName="Northwind.Data.DTO.Customer"

UpdateMethod="UpdateCustomer"

ConflictDetection="CompareAllValues"

OldValuesParameterFormatString="cachedCustData" />

An update to the FormView calls the UpdateCustomer method on CustomerBO. The OldValuesParameterFormatString="cachedCustData" property setting automatically invokes the corresponding method on CustomerBO that takes an old value parameter with the name "cachedCustData".

Both variants of the CustomerDetails implementation eventually call the UpdateCustomer method in CustomerDAO, which takes two parameters of type Customer. The old snapshot is compared to the current instance, and only attributes that were modified find their way into the set clause of the update SQL statement. Attributes in the old snapshot that participate in update checks are placed in the where clause. When a concurrency conflict is detected, RefreshMode.KeepCurrentValues tells DLinq to retain the changes made in the current session. Conflicting changes made by other sessions after the old snapshot was taken are overwritten.

public void UpdateCustomer(Customer custData, Customer cachedCustData)

{

if (custData == null) return;

NorthwindContext c = ContextFactory.CreateDataContext();

c.Customers.Attach(custData, cachedCustData);

try {

c.SubmitChanges(ConflictMode.ContinueOnConflict);

}

catch (ChangeConflictException) {

c.ChangeConflicts.ResolveAll(RefreshMode.KeepCurrentValues);

c.SubmitChanges();

}

}

Section B: N-tier MVP Architecture using DLinq

The Model View Presenter (MVP) pattern is a technique to loosely couple interaction between layers in an application. The technique is based on setter and constructor based dependency injection which makes it possible to test the code in isolation, without dependency or impact on the runtime host environment. More information on MVP can be found in this MSDN article.

Martin Fowler has classified MVP into sub-patterns, namely, Supervising Controller, Passive View and Presentation Model. The flavor of MVP that I have used in my website tends towards the supervising controller variant. This pattern takes advantage of data binding and basic infrastructure support of the ASP.NET framework for the view implementation. Any UI logic that is non-trivial is handled by the presenter. In this pattern, as opposed to the Passive view variant, the view is aware of the domain object and data binding introduces a directed dependency from the view to the domain layer. Another point to note is testability of the view. This pattern overlooks testing of view to model data binding and view synchronization. It emphasizes testing only for more involved UI logic that is contained in the presenter. In my opinion, it is the best of both worlds, as we may safely avoid testing ASP.NET infrastructure and instead use our time more productively!

While the rest is obvious from the diagram, the points of interest are the presenter and business assemblies. To allow for unit testing of the presenter, service and business layers, a typical pattern for dependency injection is the separated interfaces pattern. The presenter assembly exposes view interfaces which are implemented in the web assembly (view interfaces may be mocked or stubbed for unit testing presenters). Presenters talk to view implementations through the view interfaces. Similarly, the business layer defines a set of data interfaces and talks to the data layer via these interfaces. This is also an example of the gateway pattern, as it involves an external resource. So, interestingly, this becomes a case of inversion of control where the data access layer has a reference to the business layer and implements the data interfaces defined in the latter.
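The separated interface arrangement between the business and data layers can be illustrated with a small sketch. All names here (ICustomerGateway, CustomerService, InMemoryCustomerGateway) are hypothetical and do not come from the accompanying solution. The point is only the direction of the dependency: the interface lives with its consumer, and the implementation references it.

```csharp
using System;
using System.Collections.Generic;

// Declared alongside the business layer: the consumer owns the contract.
public interface ICustomerGateway
{
    IList<string> GetCustomerIds();
}

// Business-layer class that depends only on the interface.
public sealed class CustomerService
{
    private readonly ICustomerGateway _gateway;

    public CustomerService(ICustomerGateway gateway)
    {
        if (gateway == null) throw new ArgumentNullException("gateway");
        _gateway = gateway;
    }

    public int CountCustomers()
    {
        return _gateway.GetCustomerIds().Count;
    }
}

// Would live in the data layer, which references the business layer.
// An in-memory list stands in for real data access, so the same class
// also works as a stub in a unit test.
public sealed class InMemoryCustomerGateway : ICustomerGateway
{
    public IList<string> GetCustomerIds()
    {
        return new List<string> { "ALFKI", "ANATR" };
    }
}
```

Because CustomerService never names a concrete gateway type, a test can pass in the in-memory implementation (or a mock) without touching a database.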

I have designed a slightly complex but reusable pattern for the initialization behavior across views and presenters. BasePage (shown above) is an abstract view initializer. It contains a DaoFactory property that returns a concrete IDaoFactory instance created using configuration metadata.

public static IDaoFactory CreateFactoryInstance()

{

string typeStr =

ConfigurationManager.AppSettings[Constants.DAO_FACTORY_KEY];

if (String.IsNullOrEmpty(typeStr)) typeStr =

Constants.DEFAULT_FACTORY_TYPE;

IDaoFactory f = null;

try

{

Assembly a = Assembly.Load(Constants.FACTORY_ASSEMBLY);

if (a != null)

{

Type fType = a.GetType(typeStr);

if (fType == null) fType = a.GetType(String.Format("{0}.{1}",

Constants.FACTORY_ASSEMBLY, typeStr));

f = Activator.CreateInstance(fType) as IDaoFactory;

}

}

catch (Exception ex)

{

throw new Exception(String.Format(

"Unable to load {0} from Northwind.Data", typeStr), ex);

}

return f;

}

Any derived view initializer must implement two abstract methods: CreatePresenters() and InitializeViews(). These two methods are invoked in the Init phase of the page lifecycle. CustomerListing.aspx in my demo website acts as a view and also a view initializer. It creates a ListCustomersPresenter and initializes the widgets on the view by wiring up delegates to methods in the presenter. As you may have noticed, I have implemented delegates as anonymous methods within the InitializeViews() method.

protected override void CreatePresenters()

{

_presenter = new ListCustomersPresenter(this, base.DaoFactory);

}

protected override void InitializeViews()

{

CommandEventHandler hnd = delegate(object sender, CommandEventArgs e)

{

int curr = this.CurrentPageIndex;

PageIndexChanged = true;

switch (e.CommandName)

{

case "first":

this.CurrentPageIndex = 0;

break;

case "prev":

CurrentPageIndex = (--curr < 0) ? (0) : curr;

break;

case "next":

CurrentPageIndex = (++curr == TotalPages) ? (curr - 1) : curr;

break;

case "last":

this.CurrentPageIndex = TotalPages - 1;

break;

}

_presenter.ShowCurrentPage();

};

imgF.Command += hnd;

imgL.Command += hnd;

imgN.Command += hnd;

imgP.Command += hnd;

grd.PageIndexChanging += delegate(object sender, GridViewPageEventArgs e)

{

this.CurrentPageIndex = e.NewPageIndex;

_presenter.ShowCurrentPage();

};

grd.Sorting += delegate(object sender, GridViewSortEventArgs e)

{

this.SortPropertyName = e.SortExpression;

_presenter.ShowCurrentPage();

};

}

When a presenter is instantiated in a view initializer's CreatePresenters implementation, the chain of internal method calls ensures that the presenter and the view hold a strongly typed reference to each other. The view-presenter initialization pattern that I designed ensures this initialization sequence with a series of method calls across BasePage, BasePresenter, View and generic parameters. The following elaborates the call sequence. The OnLoad override of the BasePage view initializer is as follows.

protected override void OnLoad(EventArgs e)

{

base.OnLoad(e);

if(!IsPostBack) foreach (IBaseView view in Views) view.InitializeView();

}

The abstract "Views" property is an interesting part of my design. Any derived view initializer implements this property to return a list of Views that it contains. Typically, a view initializer may contain one or more views (implemented as user control widgets) and one or more presenters. Since CustomerListing.aspx is a view by itself, the "Views" property implementation returns a List<IBaseView> that has a reference to itself. The view's implementation of InitializeView(), which is being called from the BasePage OnLoad override shown above, is the following.

public void InitializeView()

{

CurrentPageIndex = 0;

Presenter.InitializeView();

}

Since CustomerListing is also a view, "Presenter" refers to the abstract Presenter property defined in IView<P, DTO>. The view interface hierarchy is defined as follows.

public interface IBaseView

{

void InitializeView();

}

public interface IView<P, DTO> : IBaseView

where P : BasePresenter<P, DTO>

{

P Presenter { get; set; }

bool PageIndexChanged { get; set; }

void DisplayData(IEnumerable<DTO> dataSource);

void DisplayData(DTO dataSource);

}

As shown in the class diagram above, IViewCustomerListing inherits from IView<ListCustomersPresenter, Customer>.

public interface IViewCustomerListing : IView<ListCustomersPresenter,

Customer>

It is interesting to note that BasePresenter is defined with a generic type argument that points to the derived presenter! This unusual design is a consequence of generic type conversion rules: a constructed generic type is not convertible to another construction of the same generic type, even when a conversion exists between their type arguments. For example, List<object> lo = new List<string>() does not compile for the same reason.
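A stripped-down sketch of this self-referencing generic arrangement is shown below. The type names (IViewSketch, PresenterBase, ListPresenter, ListViewSketch) are hypothetical simplifications of the article's IView and BasePresenter, with the DTO parameter dropped to keep the example short.

```csharp
using System;

// The view exposes a presenter of the concrete type P.
public interface IViewSketch<P> where P : PresenterBase<P>
{
    P Presenter { get; set; }
}

// The base class is parameterized by its own derived type.
public abstract class PresenterBase<P> where P : PresenterBase<P>
{
    protected PresenterBase(IViewSketch<P> view)
    {
        // The cast succeeds when the derived class passes itself as P.
        view.Presenter = (P)this;
    }
}

public sealed class ListPresenter : PresenterBase<ListPresenter>
{
    public ListPresenter(IViewSketch<ListPresenter> view) : base(view) { }

    // A member that exists only on the derived presenter.
    public string Describe()
    {
        return "list presenter";
    }
}

public sealed class ListViewSketch : IViewSketch<ListPresenter>
{
    public ListPresenter Presenter { get; set; }
}
```

After new ListPresenter(view) runs, view.Presenter is typed as ListPresenter, so the view can call Describe() without a cast.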

The BasePresenter's implementation of InitializeView is as follows. This takes care of automatically initializing the view that is handled by the presenter on a GET request. If you remember, the call to InitializeView() is initiated by the abstract BasePage view initializer for GET requests.

public virtual void InitializeView()

{

IEnumerable<DTO> dataSource = GetInitialViewData();

if (dataSource != null ) View.DisplayData(dataSource);

}

When a presenter is instantiated in the view initializer's CreatePresenters method, the BasePresenter implementation ensures that the view and presenter get a strongly typed reference to each other.

public BasePresenter(IView<P, DTO> view, IDaoFactory daoFactory)

{

View = view;

_daoFactory = daoFactory;

}

BasePresenter's View setter initializes the underlying view's presenter to itself. Here BasePresenter<P, DTO> is cast to P so that the view holds a strongly typed reference to the derived presenter.

protected virtual IView<P, DTO> View

{

get { return _view; }

set

{

_view = value;

_view.Presenter = (P) this;

}

}

ListCustomersPresenter (the derived implementation of BasePresenter) hides the BasePresenter's View property with the new modifier to expose a strongly typed reference to IViewCustomerListing for convenience.

protected new IViewCustomerListing View

{

get { return (IViewCustomerListing)base.View; }

set { base.View = (IView<ListCustomersPresenter, Customer>)value; }

}

The role of a business layer in MVP is questionable. Why have business layers on top of a service layer? In my sample website, I have used the business layer as a gateway for the service layer. That is because I have not introduced business rules into my sample to keep it simple enough for demonstration. In my opinion, the business layer can encapsulate business rules corresponding to an entity. One way of business rule processing in an enterprise application would be for the presenter to access business objects for rule processing and the service object would be a gateway for data access and manipulation. Another pattern for business rule processing would be to have a rule processing engine implemented using the chain of responsibility pattern.

Business rule violations may be handled in the following pattern. The business or rule object notifies the presenter of business rules violation and the presenter sets a view's property to an invalid state in response. The view's implementation of the property sets the "IsValid" property of a validator control, thus notifying the user of the business rule exception. It is a matter of personal opinion, but I would favor a design where it is the job of the presenter to check business rules and the service layer does not have a dependency on the business or rule objects. I would think that it is the job of the presenter to use the rules processor for business rules checking and the service layer essentially acts as a gateway to access data using the data layer.
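The notification flow described above might look like the following sketch. Everything in it is hypothetical: the ICustomerEditView interface, the CustomerRules class and the "company name must not be blank" rule are invented for illustration, and a real view would forward IsCompanyNameValid to the IsValid property of a validator control.

```csharp
using System;

// View contract owned by the presenter layer.
public interface ICustomerEditView
{
    string CompanyName { get; }
    bool IsCompanyNameValid { set; }
}

// Stand-in for a business or rule object.
public static class CustomerRules
{
    // Illustrative rule: the company name must not be blank.
    public static bool CompanyNameIsAcceptable(string name)
    {
        return !String.IsNullOrEmpty(name) && name.Trim().Length > 0;
    }
}

public sealed class CustomerEditPresenter
{
    private readonly ICustomerEditView _view;

    public CustomerEditPresenter(ICustomerEditView view)
    {
        _view = view;
    }

    // Checks the rule, reports the outcome to the view and
    // returns true when the save may proceed.
    public bool TrySave()
    {
        bool ok = CustomerRules.CompanyNameIsAcceptable(_view.CompanyName);
        _view.IsCompanyNameValid = ok;
        return ok;
    }
}

// Stub view for exercising the presenter without ASP.NET.
public sealed class StubEditView : ICustomerEditView
{
    public string CompanyName { get; set; }
    public bool IsCompanyNameValid { get; set; }
}
```

In this arrangement the service layer never sees the rule object; only the presenter does, which matches the preference stated above.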

Typically, the business/service layer uses a DAO in a gateway pattern for data access. The abstract factory pattern is used for unit testing the business/service layer in isolation by injecting mocks or stubs as gateway dependencies in the business object. My business object design uses constructor-based dependency injection rather than using setters.

public CustomerBO(IDaoFactory daoFactory) : base(daoFactory)

{

_dao = base.daoFactory.CreateCustomerDAO();

}

An example of a unit test for the GetAllCustomers() method of the CustomerBO class would be the following (using Rhino Mocks).

[Test]

public void TestCustomerBO()

{

MockRepository rep = new MockRepository();

ICustomerDAO mockCustDAO = rep.CreateMock<ICustomerDAO>();

IDaoFactory mockDAOFactory = rep.CreateMock<IDaoFactory>();

List<Customer> stubList = new List<Customer>();

// Declared outside the playback block so the assertion below can see it.

IEnumerable<Customer> custList = null;

using (rep.Record())

{

Expect.Call(mockDAOFactory.CreateCustomerDAO()).Return(mockCustDAO);

Expect.Call(mockCustDAO.GetAllCustomers()).Return(stubList);

}

using (rep.Playback())

{

CustomerBO c = new CustomerBO(mockDAOFactory);

custList = c.GetAllCustomers();

}

Assert.IsNotNull(custList);

}

I have packaged the entity classes generated from SqlMetal into the Northwind.Data.DTO namespace.

I have addressed some aspects of architecture, design and performance when using DLinq in enterprise application scenarios. The coverage is by no means exhaustive, and there are other ways of achieving the same end result. Rico Mariani's blog is an excellent resource if you are looking for more performance tips on DLinq. Although this article is about DLinq, it may be worth mentioning that competitors include NHibernate and the ADO.NET Entity Framework. Currently, LINQ is still a CTP, unlike NHibernate. In my opinion, Visual Studio Orcas's built-in support for LINQ and query optimization make a compelling case for using DLinq over NHibernate in the near future. Oren Eini, the developer of Rhino Mocks, has released an alpha version of LINQ for NHibernate that aims to put NHibernate back on the map. The ADO.NET Entity Framework, queried through LINQ to Entities, provides an extra layer of abstraction between the entity object model and the underlying relational database, making it a lot more loosely coupled to the database than DLinq.