Introduction

This article explains the concepts behind implementing multi-threaded applications in .NET through a working code example. It briefly covers the following topics:

- Concepts of threading

- How to implement multi-threading in .NET

- Concepts behind implementing Thread Safe applications

- Deadlocks

What is a Process?

A process is an Operating System context in which an executable runs. It is used to segregate virtual address space, threads, object handles (pointers to resources such as files), and environment variables. Processes have attributes such as base priority class and maximum memory consumption.

Meaning…

- A process is a memory slice that contains resources

- An isolated task performed by the Operating System

- An application that is being run

- A process owns one or more Operating System threads

Technically, on 32-bit Windows each process gets its own virtual address space of 4 GB (64-bit processes get far more). This memory is private to the process and cannot be accessed directly by other processes.

What is a Thread?

A thread is an instruction stream executing within a process. All threads execute within a process and a process can have multiple threads. All threads of a process use their process’ virtual address space. The thread is a unit of Operating System scheduling. The context of the thread is saved / restored as the Operating System switches execution between threads.

Meaning…

- A thread is an instruction stream executing within a process.

- All threads execute within a process and a process can have multiple threads.

- All threads of a process use their process’ virtual address space.

What is Multi-Threading?

Multi-threading is when a process has multiple threads active at the same time. This allows for either the appearance of simultaneous thread execution (through time slicing) or actual simultaneous thread execution on hyper-threaded and multi-processor systems.

Multi-Threading – Why and Why Not

Why multi-thread:

- To keep the UI responsive.

- To improve performance (for example, concurrent operation of CPU bound and I/O bound activities).

Why not multi-thread:

- Overhead can reduce actual performance.

- Complicates the code, increases design time, and raises the risk of bugs.

Thread Pool

The thread pool provides your application with a pool of worker threads that are managed by the system. The threads in the managed thread pool are background threads, so a ThreadPool thread will not keep an application running after all foreground threads have exited. There is one thread pool per process. In early versions of the .NET Framework the default maximum was 25 worker threads per available processor; later versions use a much larger default that depends on the platform. The maximum can be changed with the SetMaxThreads method. Each thread uses the default stack size and runs at the default priority.
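Because the default limits vary between .NET versions, it is easiest to ask the runtime. The following is a minimal console sketch (the class and method names are made up for illustration) that prints the current maximums and confirms that a pool thread is a background thread:

```csharp
using System;
using System.Threading;

static class ThreadPoolInfoDemo
{
    // Queues one work item and reports whether it ran on a
    // background thread that belongs to the managed thread pool.
    public static bool RunsOnBackgroundPoolThread()
    {
        bool result = false;
        using (var done = new ManualResetEvent(false))
        {
            ThreadPool.QueueUserWorkItem(_ =>
            {
                Thread t = Thread.CurrentThread;
                result = t.IsThreadPoolThread && t.IsBackground;
                done.Set(); // signal the caller that the check has finished
            });
            done.WaitOne(); // block until the work item has run
        }
        return result;
    }

    static void Main()
    {
        int workers, io;
        ThreadPool.GetMaxThreads(out workers, out io);
        Console.WriteLine("Max worker threads: {0}, max I/O threads: {1}", workers, io);
        Console.WriteLine("Pool thread is background: {0}", RunsOnBackgroundPoolThread());
    }
}
```

The numbers printed by GetMaxThreads depend on the runtime and the machine, so no particular values should be expected.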

Threading in .NET

In .NET, threading is achieved by the following methods:

- Thread class

- Delegates

- Background Worker

- ThreadPool

- Task

- Parallel

In the sections below, we will see how threading can be implemented by each of these methods.

In a nutshell, multi-threading is a technology by which any application can be made to run multiple tasks concurrently, thereby utilizing the maximum computing power of the processor and keeping the UI responsive. An example of this can be expressed by the block diagram below:

The code

The project is a simple WinForms application which demonstrates the use of threading in .NET by three methods:

- Delegates

- Thread class

- Background Worker

The application executes a heavy operation asynchronously so that the UI is not blocked. The same heavy operation is implemented in each of the three ways listed above so that they can be compared.

The “Heavy” Operation

In the real world, a heavy operation can be anything from polling a database to streaming a media file. For this example, we have simulated a heavy operation by appending values to a string. Because strings are immutable, every append creates a new string object and discards the old one. (This is handled by the CLR.) If done a huge number of times, this can consume a lot of resources (which is why we normally use StringBuilder.Append instead). In the above UI screen, set the up-down counter to specify the number of times the string is going to be appended.

We have a Utility class in the backend, which has a LoadData() method. It also has a delegate with signature similar to that of LoadData().

class Utility

{

public delegate string delLoadData(int number);

public static delLoadData dLoadData;

public Utility()

{

}

public static string LoadData(int max)

{

string str = string.Empty;

for (int i = 0; i < max; i++)

{

str += i.ToString();

}

return str;

}

}
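For comparison, the StringBuilder alternative mentioned above could look like the sketch below. It is not part of the sample project; the class name StringBuilderDemo is hypothetical, and the method mirrors the signature of Utility.LoadData().

```csharp
using System;
using System.Text;

static class StringBuilderDemo
{
    // Same result as Utility.LoadData(), but appends into one
    // growing buffer instead of allocating a new string per iteration.
    public static string LoadData(int max)
    {
        var sb = new StringBuilder();
        for (int i = 0; i < max; i++)
        {
            sb.Append(i);
        }
        return sb.ToString();
    }

    static void Main()
    {
        Console.WriteLine(LoadData(5)); // prints 01234
    }
}
```

The sample project keeps the slow string concatenation on purpose, since the goal is to have an operation that takes noticeable time.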

The Synchronous Call

When you click the “Get Data Sync” button, the operation is run in the same thread as that of the UI thread (blocking call). Hence, for the time the operation is running, the UI will remain unresponsive.

private void btnSync_Click(object sender, EventArgs e)

{

this.Cursor = Cursors.WaitCursor;

this.txtContents.Text = Utility.LoadData(upCount);

this.Cursor = Cursors.Default;

}

The Asynchronous Call

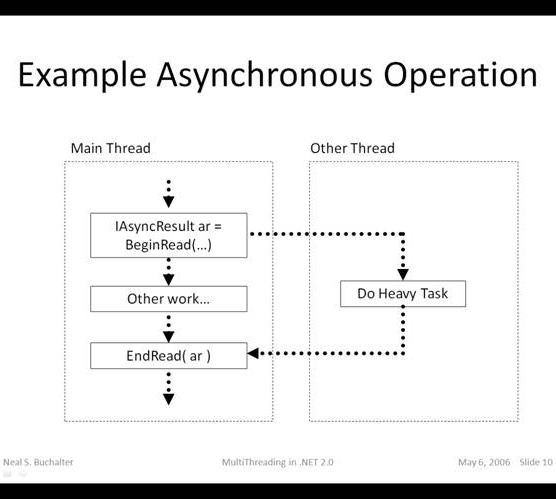

Using Delegates (Asynchronous Programming Model)

If you choose the radio button “Delegates”, the LoadData() method is called asynchronously using delegates. We first initialize the delegate type delLoadData with the address of Utility.LoadData(). Then we call the BeginInvoke() method of the delegate. By convention in .NET, a pair of methods named BeginXXX and EndXXX is asynchronous. For example, delegate.Invoke() calls a method on the same thread, while delegate.BeginInvoke() calls the method on a separate thread. (Note that delegate BeginInvoke() is available on the .NET Framework only; on .NET Core and later it throws a PlatformNotSupportedException.)

The BeginInvoke() takes three arguments:

- Parameter to be passed to the Utility.LoadData() method

- Address of the callback method

- State of the object

Utility.dLoadData = new Utility.delLoadData(Utility.LoadData);

Utility.dLoadData.BeginInvoke(upCount, CallBack, null);

The Callback

Once we spawn an operation in a thread, we have to know what is happening in that operation. In other words, we should be notified when it has completed its operation. There are three ways of knowing whether the operation has completed:

- Callback

- Polling

- Wait until done

In our project, we use a callback method to trap the completion of the operation. This is simply the method that you passed when calling BeginInvoke(). It tells the thread to come back and invoke that method once it has finished its work.

Once a method is fired in a separate thread, you might or might not be interested to know what that method returns. If the method does not return anything, then it will be a “fire and forget call”. In such a case, you would not be interested in the callback and would pass the callback parameter as null.

Utility.dLoadData.BeginInvoke(upCount, CallBack, null);

In our case, we need a callback method and hence we have passed the name of our callback method, which is coincidentally CallBack().

private void CallBack(IAsyncResult asyncResult)

{

string result= string.Empty;

if (this.cancelled)

result = "Operation Cancelled";

else

result = Utility.dLoadData.EndInvoke(asyncResult);

object[] args = { this.cancelled, result };

this.BeginInvoke(dUpdateUI, args);

}

The signature of a callback method is – void MethodName(IAsyncResult asyncResult).

The IAsyncResult contains the necessary information about the asynchronous operation. The returned data can be retrieved as follows:

result = Utility.dLoadData.EndInvoke(asyncResult);

The polling method (not used in this project) is like the following:

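IAsyncResult r = Utility.dLoadData.BeginInvoke(upCount, null, null);

while (!r.IsCompleted)

{

Thread.Sleep(100); // do other work here instead of spinning in a tight loop

}

result = Utility.dLoadData.EndInvoke(r); // pass the IAsyncResult returned by BeginInvoke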

The wait-until-done, as the name suggests, is to wait until the operation is completed.

IAsyncResult r = Utility.dLoadData.BeginInvoke(upCount, null, null);

result = Utility.dLoadData.EndInvoke(r); // blocks until the operation completes

Updating the UI

Now that we have trapped the end of the operation and retrieved the result that LoadData() returned, we need to update the UI with that result. But there is a problem. The text box which needs to be updated belongs to the UI thread, whereas the result has been returned in the callback. The callback runs on the same worker thread that executed the operation, so the callback thread is different from the UI thread. In other words, the text box cannot be updated with the result as shown below:

this.txtContents.Text = text;

Executing this line in the callback method will result in an InvalidOperationException (cross-thread operation not valid). We have to form a bridge between the UI thread and the background thread to update the result in the text box. That is done using the Invoke() or BeginInvoke() methods of the form.

I have defined a method which will update the UI:

private void UpdateUI(bool cancelled, string text)

{

this.btnAsync.Enabled = true;

this.btnCancel.Enabled = false;

this.txtContents.Text = text;

}

Define a delegate to the above method:

private delegate void delUpdateUI(bool value, string text);

dUpdateUI = new delUpdateUI(UpdateUI);

Call the BeginInvoke() method of the form:

object[] args = { this.cancelled, result };

this.BeginInvoke(dUpdateUI, args);

One thing to note here is that once a thread is spawned using a delegate, it cannot be cancelled, suspended, or aborted. We have no control over that thread.

Using the Thread Class

The same operation can be achieved using the Thread class. The advantage is that the Thread class gives you more power over suspending and cancelling the operation. The Thread class resides in the namespace System.Threading.

We have a private method LoadData() which is a wrapper to our Utility.LoadData().

private void LoadData()

{

string result = Utility.LoadData(upCount);

object[] args = { this.cancelled, result };

this.BeginInvoke(dUpdateUI, args);

}

We need this wrapper because Utility.LoadData() requires an argument, whereas the ThreadStart delegate used to initialize the thread takes none.

doWork = new Thread(new ThreadStart(this.LoadData));

doWork.Start();

The ThreadStart delegate takes no parameters and returns void. If we need to pass an argument, we have to use a ParameterizedThreadStart delegate instead. Unfortunately, that delegate accepts only a single parameter of type object, so the target method must have the signature void LoadData(object). Since we need an int, the method has to cast the parameter back.

doWork = new Thread(new ParameterizedThreadStart(this.LoadData));

doWork.Start(parameter);
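The cast mentioned above can be sketched in a small console program. The class name, the static result field, and the Run() helper are assumptions made for illustration and are not part of the sample project:

```csharp
using System;
using System.Threading;

static class ParameterizedThreadDemo
{
    static string result;

    // Matches the ParameterizedThreadStart signature: void (object).
    static void LoadData(object state)
    {
        int max = (int)state; // cast the boxed argument back to int
        string s = string.Empty;
        for (int i = 0; i < max; i++)
        {
            s += i.ToString();
        }
        result = s;
    }

    public static string Run(int max)
    {
        var worker = new Thread(new ParameterizedThreadStart(LoadData));
        worker.Start(max); // max is boxed and handed to LoadData
        worker.Join();     // wait for the worker so that result is ready
        return result;
    }

    static void Main()
    {
        Console.WriteLine(Run(5)); // prints 01234
    }
}
```

Passing the wrong type to Start() compiles without complaint and only fails with an InvalidCastException at run time, which is the main drawback of this approach.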

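The Thread class gives a lot of control over the thread through members such as Suspend, Abort, Interrupt, and ThreadState. Note that Suspend and Abort are deprecated in current versions of .NET, where cooperative cancellation is preferred.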

Using BackgroundWorker

The BackgroundWorker is a component which helps to make threading simple. Its main feature is that it can report progress asynchronously, which can be used to update a status bar and keep the user informed about the progress of the operation in a visual way.

To do this, we need to set the following properties to true. They are false by default.

- WorkerReportsProgress

- WorkerSupportsCancellation

The component has three main events: DoWork, ProgressChanged, and RunWorkerCompleted. We need to register handlers for these events during initialization:

this.bgCount.DoWork += new DoWorkEventHandler(bgCount_DoWork);

this.bgCount.ProgressChanged +=

new ProgressChangedEventHandler(bgCount_ProgressChanged);

this.bgCount.RunWorkerCompleted +=

new RunWorkerCompletedEventHandler(bgCount_RunWorkerCompleted);

The operation can be started by invoking the RunWorkerAsync() method as shown below:

this.bgCount.RunWorkerAsync();

Once this is invoked, the following method is invoked for processing the operation:

void bgCount_DoWork(object sender, DoWorkEventArgs e)

{

string result = string.Empty;

for (int i = 0; i < this.upCount; i++)

{

// Check for cancellation on every iteration, not just once before the loop.

if (this.bgCount.CancellationPending)

{

e.Cancel = true;

return;

}

result += i.ToString();

// Multiply before dividing: (i / upCount) is integer division and always yields 0.

this.bgCount.ReportProgress((i * 100) / this.upCount);

}

e.Result = result;

}
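When a percentage is computed from two int values, the order of the operations matters, because integer division truncates. A minimal sketch with hypothetical helper names:

```csharp
using System;

static class ProgressDemo
{
    // Divides first: for any i < total the quotient is 0, so the result is 0.
    public static int DivideFirst(int i, int total)
    {
        return (i / total) * 100;
    }

    // Multiplies first, so the fractional part survives until the division.
    public static int MultiplyFirst(int i, int total)
    {
        return (i * 100) / total;
    }

    static void Main()
    {
        Console.WriteLine(DivideFirst(50, 200));   // prints 0
        Console.WriteLine(MultiplyFirst(50, 200)); // prints 25
    }
}
```

For very large counters, i * 100 can overflow an int; casting to long before multiplying avoids that.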

The CancellationPending property should be checked periodically while the work is running to see whether cancellation has been requested. Cancellation is requested by calling:

this.bgCount.CancelAsync();

The below line reports the percentage progress:

this.bgCount.ReportProgress((i * 100) / this.upCount);

Once this is called, the below method is invoked to update the UI:

void bgCount_ProgressChanged(object sender, ProgressChangedEventArgs e)

{

if (this.bgCount.CancellationPending)

this.txtContents.Text = "Cancelling....";

else

this.progressBar.Value = e.ProgressPercentage;

}

Finally, the bgCount_RunWorkerCompleted method is called to complete the operation:

void bgCount_RunWorkerCompleted(object sender, RunWorkerCompletedEventArgs e)

{

this.btnAsync.Enabled = true;

this.btnCancel.Enabled = false;

this.txtContents.Text = e.Cancelled ? "Operation Cancelled" : e.Result.ToString(); // e.Result throws if the operation was cancelled

}

Thread Pools

It is not recommended that programmers create as many threads as possible on their own. Creating threads is an expensive operation. There are overheads involved in terms of memory and computing. Also, the computer can only work on one thread at a given time per CPU core. So if there are multiple threads on a single-core system, the computer will only be able to cater to one thread at a time. It does so by allocating time "slices" to each thread and working on the available threads in a round-robin manner (which also depends on their priority). Switching from one thread to another is called context switching, which in itself is another overhead. So if we have too many threads doing practically nothing or sitting idle, we only incur overheads in terms of memory consumption, context switching, etc., without any net gain. As developers we need to be cautious when creating threads and be diligent about the number of existing threads we are working with.

Fortunately, the CLR has a managed code library that does this for us. This is the ThreadPool class. This class manages a number of threads in its pool and decides when to create or destroy threads based on the needs of our application. The thread pool has no threads to start with. As requests start queuing up, it starts creating threads. If we call the SetMinThreads method, the thread pool quickly creates up to that many threads as work items start queuing up. When the thread pool finds that threads have been idle for a long time, it retires them.

So, Thread pools are a great way to tap into the pool of background threads that is maintained by our computer. The ThreadPool class allows us to queue a work item which is then delegated to a background thread.

WaitCallback threadCallback = new WaitCallback(HeavyOperation);

for (int i = 0; i < 3; i++)

{

System.Threading.ThreadPool.QueueUserWorkItem(threadCallback, i);

}

The heavy operation is defined as :

private static void HeavyOperation(object WorkItem)

{

System.Threading.Thread.Sleep(5000);

Console.WriteLine("Executed work Item {0}", (int)WorkItem);

}

Notice the signature of the WaitCallback delegate. It must take an object as a method parameter. This is generally used to pass state information between threads.
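In a console application the pool threads are background threads, so the process may exit before the queued items run. The following sketch passes state to each work item and waits for all of them with a CountdownEvent. The class name and the RunItems() helper are hypothetical, and CountdownEvent is just one of several ways to wait:

```csharp
using System;
using System.Threading;

static class QueueWorkDemo
{
    // Queues 'count' work items and returns how many of them completed.
    public static int RunItems(int count)
    {
        int completed = 0;
        using (var pending = new CountdownEvent(count))
        {
            for (int i = 0; i < count; i++)
            {
                // The second argument is boxed and arrives as the 'state' object.
                ThreadPool.QueueUserWorkItem(state =>
                {
                    int id = (int)state;
                    Console.WriteLine("Executed work item {0}", id);
                    Interlocked.Increment(ref completed);
                    pending.Signal(); // one item fewer to wait for
                }, i);
            }
            pending.Wait(); // returns once every item has signalled
        }
        return completed;
    }

    static void Main()
    {
        Console.WriteLine("Completed: {0}", RunItems(3));
    }
}
```

The order in which the three "Executed work item" lines appear is not guaranteed.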

Now that we know how to delegate work to a background thread using ThreadPool, we must explore the techniques for finding out when that work has completed. One option is to signal completion through a WaitHandle. WaitHandle is the base class of several synchronization types; two of them, AutoResetEvent and ManualResetEvent (both derived from EventWaitHandle), are discussed here.

public static void Demo_ResetEvent()

{

Server s = new Server();

ThreadPool.QueueUserWorkItem(new WaitCallback((o) =>

{

s.DoWork();

}));

((AutoResetEvent)Global.GetHandle(Handles.AutoResetEvent)).WaitOne();

Console.WriteLine("Work complete signal received");

}

Here we have a Global class which maintains a singleton instance for the WaitHandles.

public static class Global

{

static WaitHandle w = null;

static AutoResetEvent ae = new AutoResetEvent(false);

static ManualResetEvent me = new ManualResetEvent(false);

public static WaitHandle GetHandle(Handles Type)

{

switch (Type)

{

case Handles.ManualResetEvent:

w = me;

break;

case Handles.AutoResetEvent:

w = ae;

break;

default:

break;

}

return w;

}

}

The WaitOne method blocks code execution until the WaitHandle is signaled (set) from the background thread.

public void DoWork()

{

Console.WriteLine("Work Starting ...");

Thread.Sleep(5000);

Console.WriteLine("Work Ended ...");

((AutoResetEvent)Global.GetHandle(Handles.AutoResetEvent)).Set();

}

The AutoResetEvent resets itself after being set automatically. It is analogous to a toll gate on an expressway where two or more lanes merge so that vehicles can only pass one at a time. When a vehicle approaches, the gate is set allowing it to pass through and then immediately resets automatically for the other vehicle.
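The toll-gate behaviour can be observed on a single thread by probing the event with a zero timeout. This is a minimal sketch; the class name and the Probe() helper are made up for illustration:

```csharp
using System;
using System.Threading;

static class AutoResetDemo
{
    // Sets the event once and then tries to pass the gate twice.
    public static bool[] Probe()
    {
        using (var gate = new AutoResetEvent(false))
        {
            gate.Set();                    // open the gate for one "vehicle"
            bool first = gate.WaitOne(0);  // passes, and the gate closes again
            bool second = gate.WaitOne(0); // blocked: the signal was consumed
            return new[] { first, second };
        }
    }

    static void Main()
    {
        bool[] r = Probe();
        Console.WriteLine("{0}, {1}", r[0], r[1]); // prints True, False
    }
}
```

WaitOne(0) returns immediately instead of blocking, which makes it convenient for inspecting the state of the event.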

The following example elaborates on the AutoResetEvent. Consider, we have a server with a method DoWork(). This method is a heavy operation and the application needs to update the log file after calling this method. Consider that several threads access this method asynchronously. Hence, we must make sure that the update log is thread safe or is only available to one thread at a time.

public void DoWork(int threadID, int waitSignal)

{

Thread.Sleep(waitSignal);

Console.WriteLine("Work Complete by Thread : {0} @ {1}", threadID, DateTime.Now.ToString("hh:mm:ss"));

((AutoResetEvent)Global.GetHandle(Handles.AutoResetEvent)).Set();

}

public void UpdateLog(int threadID)

{

if(((AutoResetEvent)Global.GetHandle(Handles.AutoResetEvent)).WaitOne(5000))

Console.WriteLine("Update Log File by thread : {0} @ {1}", threadID, DateTime.Now.ToString("hh:mm:ss"));

else

Console.WriteLine("Time out");

}

We queue two work items that run the DoWork() method concurrently, and then call UpdateLog() twice. Each UpdateLog() call waits for a signal from a completed DoWork() call before updating the log.

public static void Demo_AutoResetEvent()

{

Console.WriteLine("Demo Autoreset event...");

Server s = new Server();

Console.WriteLine("Start Thread 1..");

ThreadPool.QueueUserWorkItem(new WaitCallback((o) =>

{

s.DoWork(1, 4000);

}));

Console.WriteLine("Start Thread 2..");

ThreadPool.QueueUserWorkItem(new WaitCallback((o) =>

{

s.DoWork(2, 4000);

}));

s.UpdateLog(1);

s.UpdateLog(2);

}

The ManualResetEvent differs from the AutoResetEvent in that it does not reset automatically: once set, it stays signaled and lets every waiting thread through until Reset() is called explicitly. Consider a server which sends messages continuously in a background thread. The server runs a loop that waits for the signal to send messages. When the event is set, the server starts sending messages. When the wait handle is reset, the server stops, and the process can be repeated.

public void SendMessages(bool monitorSignal)

{

int counter=1;

while (monitorSignal)

{

if (((ManualResetEvent)Global.GetHandle(Handles.ManualResetEvent)).WaitOne())

{

Console.WriteLine("Sending message {0}", counter);

Thread.Sleep(3000);

counter += 1;

}

}

}

public static void Demo_ManualResetEvent()

{

Console.WriteLine("Demo ManualResetEvent...");

Server s = new Server();

ThreadPool.QueueUserWorkItem(new WaitCallback((o) =>

{

s.SendMessages(true);

}));

Console.WriteLine("Press 1 to send messages");

Console.WriteLine("Press 2 to stop messages");

while (true)

{

int input = Convert.ToInt16(Console.ReadLine());

switch (input)

{

case 1:

Console.WriteLine("Starting to send message ...");

((ManualResetEvent)Global.GetHandle(Handles.ManualResetEvent)).Set();

break;

case 2:

((ManualResetEvent)Global.GetHandle(Handles.ManualResetEvent)).Reset();

Console.WriteLine("Message Stopped ...");

break;

default:

Console.WriteLine("Invalid Input");

break;

}

}

}
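The difference from AutoResetEvent can be checked without any user input by probing a ManualResetEvent with a zero timeout. As before, this is only a sketch, and the class name and the Probe() helper are hypothetical:

```csharp
using System;
using System.Threading;

static class ManualResetDemo
{
    // Returns the outcome of three non-blocking waits:
    // two after Set() and one after Reset().
    public static bool[] Probe()
    {
        using (var gate = new ManualResetEvent(false))
        {
            gate.Set();
            bool first = gate.WaitOne(0);      // passes
            bool second = gate.WaitOne(0);     // still passes: no automatic reset
            gate.Reset();
            bool afterReset = gate.WaitOne(0); // blocked until Set() is called again
            return new[] { first, second, afterReset };
        }
    }

    static void Main()
    {
        bool[] r = Probe();
        Console.WriteLine("{0}, {1}, {2}", r[0], r[1], r[2]); // prints True, True, False
    }
}
```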

The Task Class

.NET 4.0 introduced the Task class, which builds on the thread pool. The concept remains much the same, except that we can cancel a task, wait on a task, and check the task's status from time to time. Consider the following example where we have three methods:

static void DoHeavyWork(CancellationToken ct)

{

try

{

while (true)

{

ct.ThrowIfCancellationRequested();

Console.WriteLine("Background thread working for task 3..");

Thread.Sleep(2000);

if (ct.IsCancellationRequested)

{

ct.ThrowIfCancellationRequested();

}

}

}

catch (OperationCanceledException ex)

{

Console.WriteLine("Exception :" + ex.Message);

}

catch (Exception ex)

{

Console.WriteLine("Exception : {0}", ex.Message);

}

}

static void DoHeavyWork(int n)

{

Thread.Sleep(5000);

Console.WriteLine("Operation complete for thread {0}", Thread.CurrentThread.ManagedThreadId);

}

static int DoHeavyWorkWithResult(int num)

{

Thread.Sleep(5000);

Console.WriteLine("Operation complete for thread {0}", Thread.CurrentThread.ManagedThreadId);

return num;

}

We have three tasks designed to run these three methods. The first task completes without returning a result. The second task completes and returns a result, while the third is cancelled before completion.

try

{

Console.WriteLine(DateTime.Now);

CancellationTokenSource cts1 = new CancellationTokenSource();

CancellationTokenSource cts2 = new CancellationTokenSource();

CancellationTokenSource cts3 = new CancellationTokenSource();

Task t1 = new Task(() => DoHeavyWork(2), cts1.Token); // parameterless lambda, so the token is used for cancellation

Console.WriteLine("Starting Task 1");

Console.WriteLine("Thread1 state {0}", t1.Status);

t1.Start();

Console.WriteLine("Starting Task 2");

Task<int> t2 = Task<int>.Factory.StartNew(() => DoHeavyWorkWithResult(2), cts2.Token);

Console.WriteLine("Starting Task 3");

Task t3 = new Task(() => DoHeavyWork(cts3.Token), cts3.Token);

t3.Start();

Console.WriteLine("Thread1 state {0}", t1.Status);

Console.WriteLine("Thread2 state {0}", t2.Status);

Console.WriteLine("Thread3 state {0}", t3.Status);

t1.Wait();

Console.WriteLine("Task 1 complete");

Console.WriteLine("Thread1 state {0}", t1.Status);

Console.WriteLine("Thread2 state {0}", t2.Status);

Console.WriteLine("Thread3 state {0}", t3.Status);

Console.WriteLine("Task 3 is : {0} and cancelling...", t3.Status);

cts3.Cancel();

t2.Wait();

Console.WriteLine("Task 2 complete");

Console.WriteLine("Thread1 state {0}", t1.Status);

Console.WriteLine("Thread2 state {0}", t2.Status);

Console.WriteLine("Thread3 state {0}", t3.Status);

Console.WriteLine("Result {0}", t2.Result);

Console.WriteLine(DateTime.Now);

t3.Wait();

Console.WriteLine("Task 3 complete");

Console.WriteLine(DateTime.Now);

}

catch (Exception ex)

{

Console.WriteLine("Exception : " + ex.Message.ToString());

}

finally

{

Console.Read();

}
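In the example above, DoHeavyWork() catches the OperationCanceledException itself, so the third task simply runs to completion. If the exception is allowed to propagate and the same token was passed when the task was created, the task ends in the Canceled state instead. A minimal sketch of that variation follows; the class name and the RunAndCancel() helper are assumptions made for illustration:

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

static class TaskCancelDemo
{
    // Starts a task, cancels it once it is running, and returns its final status.
    public static TaskStatus RunAndCancel()
    {
        using (var cts = new CancellationTokenSource())
        using (var started = new ManualResetEventSlim(false))
        {
            CancellationToken token = cts.Token;
            Task t = Task.Factory.StartNew(() =>
            {
                started.Set(); // tell the caller that the task is running
                for (int i = 0; i < 1000; i++)
                {
                    // Not caught here: the exception marks the task as cancelled.
                    token.ThrowIfCancellationRequested();
                    Thread.Sleep(10);
                }
            }, token);

            started.Wait();
            cts.Cancel();
            try
            {
                t.Wait();
            }
            catch (AggregateException)
            {
                // Expected: Wait() reports the cancellation as an AggregateException.
            }
            return t.Status;
        }
    }

    static void Main()
    {
        Console.WriteLine(RunAndCancel()); // prints Canceled
    }
}
```

Which of the two behaviours is preferable depends on whether the caller needs to distinguish a cancelled task from one that finished normally.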

Parallel Programming with .NET 4.0 (Time Slicing)

.NET 4.0 also introduced the Parallel class for parallel processing. Most of the threading examples above delegated individual jobs to background threads by hand. The Parallel class goes a step further: it partitions a loop or a collection into work items and schedules them across the available cores for us, so that on a multi-core machine several items are processed truly simultaneously.

Consider an Employee class which has a heavy operation, ProcessEmployeeInformation:

class Employee

{

public Employee(){}

public int EmployeeID {get;set;}

public void ProcessEmployeeInformation()

{

Thread.Sleep(5000);

Console.WriteLine("Processed Information for Employee {0}",EmployeeID);

}

}

We create 8 instances and fire parallel requests. On a 4-core processor, typically about 4 of the requests will be processed simultaneously and the rest will be queued, waiting for a thread to free up.

List<Employee> empList = new List<Employee>()

{

new Employee(){EmployeeID=1},

new Employee(){EmployeeID=2},

new Employee(){EmployeeID=3},

new Employee(){EmployeeID=4},

new Employee(){EmployeeID=5},

new Employee(){EmployeeID=6},

new Employee(){EmployeeID=7},

new Employee(){EmployeeID=8},

};

Console.WriteLine("Start Operation {0}", DateTime.Now);

System.Threading.Tasks.Parallel.ForEach(empList, (e) =>e.ProcessEmployeeInformation());


We can control or limit the number of concurrent tasks by using the MaxDegreeOfParallelism property. If it is set to -1, there is no limit.

System.Threading.Tasks.Parallel.For(0, 8, new ParallelOptions() { MaxDegreeOfParallelism = 4 }, (o) =>

{

Thread.Sleep(5000);

Console.WriteLine("Thread ID - {0}", Thread.CurrentThread.ManagedThreadId);

});
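One way to convince yourself that the limit is respected is to track how many loop bodies are running at once. The sketch below does that with a counter protected by a lock; the class name and the PeakConcurrency() helper are hypothetical:

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

static class MaxDopDemo
{
    // Runs 'items' iterations and returns the highest number
    // of iterations that were observed running at the same time.
    public static int PeakConcurrency(int items, int limit)
    {
        int current = 0, peak = 0;
        object sync = new object();
        var options = new ParallelOptions { MaxDegreeOfParallelism = limit };

        Parallel.For(0, items, options, i =>
        {
            lock (sync)
            {
                current++;
                if (current > peak) peak = current;
            }
            Thread.Sleep(100); // simulate work
            lock (sync)
            {
                current--;
            }
        });
        return peak;
    }

    static void Main()
    {
        Console.WriteLine("Peak concurrency: {0}", PeakConcurrency(8, 2));
    }
}
```

The reported peak never exceeds the limit, but it may be lower on a busy machine, because MaxDegreeOfParallelism is an upper bound rather than a guarantee.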

The problem with parallelism is that if we fire a set of requests, we have no guarantee that the responses will arrive in the same order. The order in which the items get processed is non-deterministic. The AsOrdered() method of PLINQ helps here: the inputs can be processed in any order, but the output is delivered in the order of the source.

Console.WriteLine("Start Operation {0}", DateTime.Now);

var q = from e in empList.AsParallel().AsOrdered()

select new { ID = e.EmployeeID };

foreach (var item in q)

{

Console.WriteLine(item.ID);

}

Console.WriteLine("End Operation {0}", DateTime.Now);
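The ordering guarantee is easy to verify with plain numbers. The following sketch squares a range of integers in parallel; the class name and the OrderedSquares() helper are made up for illustration:

```csharp
using System;
using System.Linq;

static class AsOrderedDemo
{
    // The squares may be computed in any order, but AsOrdered()
    // makes the results come out in the order of the source.
    public static int[] OrderedSquares(int count)
    {
        return Enumerable.Range(1, count)
                         .AsParallel()
                         .AsOrdered()
                         .Select(n => n * n)
                         .ToArray();
    }

    static void Main()
    {
        Console.WriteLine(string.Join(", ", OrderedSquares(8)));
        // prints 1, 4, 9, 16, 25, 36, 49, 64
    }
}
```

Preserving order has a cost, since results have to be buffered and put back in sequence, so it is worth using only when the order actually matters.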

Web Applications

In ASP.NET web applications, a similar non-blocking effect can be achieved by sending an AJAX request from the client to the server. The client requests data from the server without blocking the UI. When the data is ready, the client is notified via a callback and only the relevant part of the page is updated, keeping the client responsive.


The most common way to achieve this is with ICallbackEventHandler. Refer to the project Demo.Threading.Web. The page has the same interface as the Windows application, with a text box to enter a number and a text box to show the data. The Load Data button performs the previously discussed “heavy” operation.

<div>

<asp:Label runat="server" >Enter Number</asp:Label>

<input type="text" id="inputText" /><br /><br />

<asp:TextBox ID="txtContentText" runat="server" TextMode="MultiLine" /><br /><br />

<input type="button" id="LoadData" title="LoadData"

onclick="LoadHeavyData()" value="LoadData" />

</div>

I have a JavaScript function LoadHeavyData() which is called on the click event of the button. This function calls the function CallServer with parameters.

<script type="text/ecmascript">

function LoadHeavyData() {

var lb = document.getElementById("inputText");

CallServer(lb.value.toString(), "");

}

function ReceiveServerData(rValue) {

document.getElementById("txtContentText").innerHTML = rValue;

}

</script>

The CallServer function is registered with the server in the script that is defined at the page load event of the page:

protected void Page_Load(object sender, EventArgs e)

{

String cbReference = Page.ClientScript.GetCallbackEventReference(this,

"arg", "ReceiveServerData", "context");

String callbackScript;

callbackScript = "function CallServer(arg, context)" +

"{ " + cbReference + ";}";

Page.ClientScript.RegisterClientScriptBlock(this.GetType(),

"CallServer", callbackScript, true);

}

The above script defines and registers a CallServer function. On calling the CallServer function, the RaiseCallbackEvent method of ICallbackEventHandler is invoked. This method invokes the LoadData() method, which performs the heavy operation and returns the data.

public void RaiseCallbackEvent(string eventArgument)

{

if (eventArgument!=null)

{

Result = this.LoadData(Convert.ToUInt16(eventArgument));

}

}

private string LoadData(int num)

{

return Utility.LoadData(num);

}

Once LoadData() is executed, the GetCallbackResult() method of ICallbackEventHandler is executed, which returns the data:

public string GetCallbackResult()

{

return Result;

}

Finally, the ReceiveServerData() function is called to update the UI. The ReceiveServerData function is registered as the callback for the CallServer() function in the page load event.

function ReceiveServerData(rValue) {

document.getElementById("txtContentText").innerHTML = rValue;

}

WPF

Typically WPF applications start with two threads -

- Rendering Thread - Runs in the background handling low level tasks.

- UI Thread - Receives input, handles events, paints the screen and runs application code.

Threading in WPF is achieved in the same way as in WinForms, except that we use the Dispatcher object to pass UI updates from a background thread to the UI thread. The UI thread queues work items inside an object called the Dispatcher. The Dispatcher selects work items on a priority basis and runs each one to completion. Every UI thread has one Dispatcher, and each Dispatcher executes its items on one thread. When expensive work is completed in a background thread and the UI needs to be updated with the result, we use the Dispatcher to queue the update in the work list of the UI thread.

Consider the following example where we have a Grid split into two parts. On the 1st part we have a property called ViewModelProperty bound to the view Model and on the 2nd part we have a bound collection ViewModelCollection. We also have a button which updates these properties. To simulate a "heavy work" we put the thread to sleep before updating the properties.

<DockPanel>

<TextBlock Text="View Model Property: " DockPanel.Dock="Left"/>

<TextBlock Text="{Binding ViewModelProperty}" DockPanel.Dock="Right"/>

</DockPanel>

<ListBox Grid.Row="1" ItemsSource="{Binding ViewModelCollection}"/>

<Button Grid.Row="2" Content="Change Property" Width="100" Command="{Binding ChangePropertyCommand}"/>

Here is the view model. Notice the method DoWork(), which runs its work on a background thread. As discussed, we have two properties: ViewModelProperty and ViewModelCollection. ViewModelProperty raises the PropertyChanged event (INotifyPropertyChanged), ViewModelCollection is an ObservableCollection (which implements INotifyCollectionChanged), and the view model itself inherits from DispatcherObject. The main purpose of this example is to show how a data change from a background thread is passed on to the UI. In the DoWork() method, the change in the property ViewModelProperty is handled automatically, but an addition to the collection is queued into the UI thread from the background thread via the Dispatcher object. The key point to note here is that while the WPF runtime takes care of the property changed notification from a background thread, the notification from a change in a collection has to be handled by the programmer.

public ViewModel()

{

ChangePropertyCommand = new MVVMCommand((o) => DoWork(), (o)=> DoWorkCanExecute());

ViewModelCollection = new ObservableCollection<string>();

ViewModelCollection.CollectionChanged +=

new System.Collections.Specialized.NotifyCollectionChangedEventHandler(ViewModelCollection_CollectionChanged);

}

public ICommand ChangePropertyCommand { get; set; }

private string viewModelProperty;

public string ViewModelProperty

{

get { return viewModelProperty; }

set

{

if (value!=viewModelProperty)

{

viewModelProperty = value;

OnPropertyChanged("ViewModelProperty");

}

}

}

private ObservableCollection<string> viewModelCollection;

public ObservableCollection<string> ViewModelCollection

{

get { return viewModelCollection; }

set

{

if (value!= viewModelCollection)

{

viewModelCollection = value;

}

}

}

public void DoWork()

{

ThreadPool.QueueUserWorkItem((o) =>

{

Thread.Sleep(5000);

ViewModelProperty = "New VM Property";

Dispatcher.Invoke(DispatcherPriority.Background,

(SendOrPostCallback)delegate

{

ViewModelCollection.Add("New Collection Item");

},null);

});

}

private bool DoWorkCanExecute()

{

return true;

}

public event PropertyChangedEventHandler PropertyChanged;

private void OnPropertyChanged(string PropertyName)

{

if (PropertyChanged!=null)

{

PropertyChanged(this, new PropertyChangedEventArgs(PropertyName));

}

}

}

Thread Safety

A talk on threads is never complete without talking about thread safety. Consider a resource that is shared and modified by multiple threads at the same time. Without coordination, the resource behaves in a non-deterministic way and the results become unpredictable. That is why we need to implement “thread safe” applications, so that a shared resource is modified by only one thread at any point in time.
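The problem is easy to reproduce. In the sketch below, several parallel workers increment two counters: one with a plain ++ and one with Interlocked.Increment, which is one of the techniques listed further down. The class name and the Count() helper are hypothetical:

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

static class RaceDemo
{
    // Returns { unsafeCount, safeCount } after 'workers' parallel
    // workers have each incremented both counters 'perWorker' times.
    public static int[] Count(int workers, int perWorker)
    {
        int unsafeCount = 0, safeCount = 0;
        Parallel.For(0, workers, _ =>
        {
            for (int i = 0; i < perWorker; i++)
            {
                unsafeCount++;                        // read-modify-write, updates can be lost
                Interlocked.Increment(ref safeCount); // atomic, never loses an update
            }
        });
        return new[] { unsafeCount, safeCount };
    }

    static void Main()
    {
        int[] r = Count(4, 100000);
        Console.WriteLine("Unsafe: {0}, safe: {1}", r[0], r[1]);
    }
}
```

The safe counter always ends at 400000. The unsafe counter is usually lower on a multi-core machine, and its exact value changes from run to run.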

The following are the ways of implementing thread safety in .NET:

- Interlocked – The Interlocked class performs an operation atomically. For example, a simple increment or decrement is a three-step operation inside the processor: read the value, change it, and write it back. When multiple threads apply these operations to the same variable, the results can get confusing because one thread can be preempted after executing the first two steps. Another thread can then execute all three steps. When the first thread resumes execution, it overwrites the value in the variable, and the effect of the operation performed by the second thread is lost. Hence we need to use the Interlocked class, which treats these operations as atomic, making them thread safe. E.g.: Increment, Decrement, Add, Read, Exchange, CompareExchange.

System.Threading.Interlocked.Increment(ref counter);
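The lost-update problem described above can be sketched as follows. This is a minimal illustration, not code from the original article: the class name InterlockedDemo, the Run method, and the thread and iteration counts are all assumptions chosen for the demonstration.

```csharp
using System;
using System.Threading;

public static class InterlockedDemo
{
    private static int unsafeCounter; // updated with ++ (not atomic)
    private static int safeCounter;   // updated with Interlocked.Increment

    // Starts threadCount threads; each increments both counters `iterations` times.
    // Returns the value of the Interlocked counter once all threads have finished.
    public static int Run(int threadCount, int iterations)
    {
        unsafeCounter = 0;
        safeCounter = 0;

        var threads = new Thread[threadCount];
        for (int t = 0; t < threadCount; t++)
        {
            threads[t] = new Thread(() =>
            {
                for (int i = 0; i < iterations; i++)
                {
                    unsafeCounter++;                        // read, add, write: updates can be lost
                    Interlocked.Increment(ref safeCounter); // one atomic operation
                }
            });
            threads[t].Start();
        }

        foreach (var thread in threads)
        {
            thread.Join();
        }
        return safeCounter;
    }

    public static void Main()
    {
        int safe = Run(4, 100000);
        Console.WriteLine("Interlocked counter: {0}", safe);
        Console.WriteLine("Plain ++ counter:    {0}", unsafeCounter);
    }
}
```

The Interlocked counter always ends at threadCount * iterations. The plain counter may end lower on a multi-core machine, although any single run may happen to lose no updates.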

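- Monitor – The Monitor class locks an object so that only one thread at a time can execute the code guarded by that lock. This protects an object that is vulnerable to multiple threads accessing it concurrently.

if (Monitor.TryEnter(this, 300)) {
    try {
        // Critical section: access the shared resource here.
    }
    finally {
        // Always release the lock, even if the critical section throws.
        Monitor.Exit(this);
    }
}
else {
    // The lock could not be acquired within 300 ms.
}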

- Locks - The lock statement is C# shorthand for the Monitor. It acquires the monitor when the block is entered and releases it when the block exits, even if an exception is thrown, so there is no explicit call to exit as is the case with the Monitor. The most popular example is that of the GetInstance() method of the Singleton class. Here the method can be called by various modules concurrently. Thread safety is implemented by locking that block of code with an object, syncLock. The object used to lock is similar to the key of a real world lock: any code that can get hold of the same object can lock on it too, and so block the threads that depend on it. Hence we need to make sure that the key (or the object in this case) is never shared outside the class. It is best to have the object as a private member of the class.

private static readonly object syncLock = new object();
private static LoadBalancer _instance;

public static LoadBalancer GetInstance()
{
    // First check avoids taking the lock once the instance exists.
    if (_instance == null)
    {
        lock (syncLock)
        {
            // Second check: another thread may have created the instance while we waited.
            if (_instance == null)
            {
                _instance = new LoadBalancer();
            }
        }
    }
    return _instance;
}

- Reader-Writer Lock - The lock can be acquired by an unlimited number of concurrent readers, or exclusively by a single writer. This can provide better performance than a Monitor if most accesses are reads while writes are infrequent and of short duration. At any point in time readers and writers queue up separately. When a writer thread has the lock, the readers queue up and wait for the writer to finish. When the readers have the lock, all writing threads queue up separately. Readers and writers alternate to get the job done. The code below shows this with two methods, ReadFromCollection and WriteToCollection, which read from and write to a collection respectively. Note the use of the methods AcquireReaderLock and AcquireWriterLock. Each blocks the calling thread until the lock is granted or the timeout (5000 ms here) elapses, in which case an ApplicationException is thrown.

static ReaderWriterLock rwLock = new ReaderWriterLock();
static List<int> myCollection = new List<int>();

static void Main(string[] args)
{
    new Thread(new ThreadStart(() =>
    {
        WriteToCollection(new int[] { 1, 2, 3 });
    })).Start();

    new Thread(new ThreadStart(() =>
    {
        ReadFromCollection();
    })).Start();

    new Thread(new ThreadStart(() =>
    {
        WriteToCollection(new int[] { 4, 5, 6 });
    })).Start();

    new Thread(new ThreadStart(() =>
    {
        ReadFromCollection();
    })).Start();

    Console.ReadLine();
}

static void ReadFromCollection()
{
    rwLock.AcquireReaderLock(5000);
    try
    {
        Console.WriteLine("Read Lock acquired by thread : {0} @ {1}", Thread.CurrentThread.ManagedThreadId, DateTime.Now.ToString("hh:mm:ss"));
        Console.Write("Collection : ");
        foreach (int item in myCollection)
        {
            Console.Write(item + ", ");
        }
        Console.Write("\n");
    }
    catch (Exception ex)
    {
        Console.WriteLine("Exception : " + ex.Message);
    }
    finally
    {
        Console.WriteLine("Read Lock released by thread : {0} @ {1}", Thread.CurrentThread.ManagedThreadId, DateTime.Now.ToString("hh:mm:ss"));
        rwLock.ReleaseReaderLock();
    }
}

static void WriteToCollection(int[] num)
{
    rwLock.AcquireWriterLock(5000);
    try
    {
        Console.WriteLine("Write Lock acquired by thread : {0} @ {1}", Thread.CurrentThread.ManagedThreadId, DateTime.Now.ToString("hh:mm:ss"));
        myCollection.AddRange(num);
        Console.WriteLine("Written to collection ............: {0}", DateTime.Now.ToString("hh:mm:ss"));
    }
    catch (Exception ex)
    {
        Console.WriteLine("Exception : " + ex.Message);
    }
    finally
    {
        Console.WriteLine("Write Lock released by thread : {0} @ {1}", Thread.CurrentThread.ManagedThreadId, DateTime.Now.ToString("hh:mm:ss"));
        rwLock.ReleaseWriterLock();
    }
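}

- Mutex - A Mutex is used to share resources across the Operating System. Unlike a Monitor, a named Mutex is visible to other processes, so it can synchronize threads in different applications. A good example is detecting whether multiple instances of the same application are running concurrently.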
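The single-instance check mentioned above might look like the following sketch. The class name SingleInstanceDemo, the Acquire helper, and the mutex name are hypothetical and chosen only for illustration. The sketch relies on the Mutex constructor that reports, through an out parameter, whether the named mutex was newly created.

```csharp
using System;
using System.Threading;

public static class SingleInstanceDemo
{
    // Hypothetical name: any string unique to the application will do.
    private const string MutexName = "MyCompany.MyApp.SingleInstance";

    // Opens or creates the named mutex. isFirstInstance is true only when
    // this call created the mutex, i.e. no one else currently holds a handle to it.
    public static Mutex Acquire(out bool isFirstInstance)
    {
        return new Mutex(true, MutexName, out isFirstInstance);
    }

    public static void Main()
    {
        bool isFirstInstance;
        using (Mutex mutex = Acquire(out isFirstInstance))
        {
            if (!isFirstInstance)
            {
                Console.WriteLine("Another instance is already running.");
                return;
            }

            Console.WriteLine("Running. Press Enter to exit.");
            Console.ReadLine();
            mutex.ReleaseMutex(); // we own the mutex because we created it with initiallyOwned = true
        }
    }
}
```

Starting a second copy of the program while the first is waiting for input should print the "already running" message, because the second process finds that the named mutex already exists.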

There are other ways of implementing thread safety. Please refer to MSDN for further information.

Deadlock

A discussion on how to create a thread safe application can never be complete without touching on the concept of deadlocks. Let’s look at what that is.

A deadlock is a situation in which two or more threads each hold a lock on one resource while waiting for a resource locked by another, so that none of them can proceed. Such a situation will result in the operation being stuck indefinitely. Deadlocks can be avoided by careful programming.

Example:

- Thread A locks object A

- Thread B locks object B

- Thread A tries to lock object B

- Thread B tries to lock object A

Thread A waits for Thread B to release object B and Thread B waits for Thread A to release object A. Consider the below example where we have a class DeadLock. We have two methods with nested locking of two objects - OperationA and OperationB. We will have a deadlock situation when we fire two threads running operation A and operation B simultaneously.

public class DeadLock
{
    static object lockA = new object();
    static object lockB = new object();

    public void OperationA()
    {
        lock (lockA)
        {
            Console.WriteLine("Thread {0} has locked Object A", Thread.CurrentThread.ManagedThreadId);
            lock (lockB)
            {
                Console.WriteLine("Thread {0} has locked Object B", Thread.CurrentThread.ManagedThreadId);
            }
            Console.WriteLine("Thread {0} has released Object B", Thread.CurrentThread.ManagedThreadId);
        }
        Console.WriteLine("Thread {0} has released Object A", Thread.CurrentThread.ManagedThreadId);
    }

    public void OperationB()
    {
        lock (lockB)
        {
            Console.WriteLine("Thread {0} has locked Object B", Thread.CurrentThread.ManagedThreadId);
            lock (lockA)
            {
                Console.WriteLine("Thread {0} has locked Object A", Thread.CurrentThread.ManagedThreadId);
            }
            Console.WriteLine("Thread {0} has released Object A", Thread.CurrentThread.ManagedThreadId);
        }
        Console.WriteLine("Thread {0} has released Object B", Thread.CurrentThread.ManagedThreadId);
    }
}

DeadLock deadLock = new DeadLock();
Thread tA = new Thread(new ThreadStart(deadLock.OperationA));
Thread tB = new Thread(new ThreadStart(deadLock.OperationB));

Console.WriteLine("Starting Thread A");
tA.Start();

Console.WriteLine("Starting Thread B");
tB.Start();
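One common way to avoid the deadlock above is to make every code path acquire the locks in the same order. The following is a minimal sketch of that idea, not code from the original article: the class name OrderedLocks, the Completed counter, the RunBoth helper, and the 50 ms sleep (added only to make the two threads overlap) are all assumptions.

```csharp
using System;
using System.Threading;

public class OrderedLocks
{
    private static readonly object lockA = new object();
    private static readonly object lockB = new object();

    // Counts how many operations ran to completion.
    public static int Completed;

    public void OperationA()
    {
        lock (lockA)
        {
            Thread.Sleep(50); // give the other thread time to start
            lock (lockB)
            {
                Interlocked.Increment(ref Completed);
            }
        }
    }

    public void OperationB()
    {
        // Same order as OperationA: A first, then B. Whichever thread takes
        // lockA first can always go on to take lockB, so no cycle can form.
        lock (lockA)
        {
            Thread.Sleep(50);
            lock (lockB)
            {
                Interlocked.Increment(ref Completed);
            }
        }
    }

    // Runs both operations on separate threads.
    // Returns true if both finished within the timeout.
    public static bool RunBoth(int timeoutMs)
    {
        var demo = new OrderedLocks();
        var tA = new Thread(demo.OperationA);
        var tB = new Thread(demo.OperationB);
        tA.Start();
        tB.Start();
        bool aDone = tA.Join(timeoutMs);
        bool bDone = tB.Join(timeoutMs);
        return aDone && bDone;
    }

    public static void Main()
    {
        Console.WriteLine("Both threads finished: {0}", RunBoth(5000));
    }
}
```

The cost of this design is that OperationB holds lockA even if it only needs it briefly. Where a fixed order is not practical, Monitor.TryEnter with a timeout is another option, since a thread that fails to get the second lock can release the first and retry.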

Worker Threads vs I/O Threads

The Operating System has only one concept of a thread, which is what it uses to run the various processes. The .NET CLR, however, has abstracted out a layer for us in which we can deal with two types of threads: Worker Threads and I/O Threads. The method ThreadPool.GetAvailableThreads(out workerThread, out ioThread) shows us the number of each of these threads available.

While coding, the heavy tasks in our applications should be classified into two categories: compute bound or I/O bound operations. A compute bound operation is one where the CPU is used for heavy computation, like running search results or complex algorithms. I/O bound operations are those which utilize the system I/O hardware or network devices, for example reading and writing a file, fetching data from a database or querying a remote web server. Compute bound operations should be delegated to worker threads and I/O bound operations should be delegated to I/O threads.

When we queue items in a ThreadPool we are delegating items to the worker threads. If we use a worker thread to perform an I/O bound operation, the thread remains blocked while the device driver performs that operation. A blocked thread is a wasted resource. On the other hand, if we use an I/O thread for the same task, the calling thread will delegate the task to the device driver and return to the thread pool. When the operation is completed, a thread from the thread pool will be notified and will handle the task completion. The advantage is that the threads remain unblocked to handle other tasks, because when an I/O operation is initiated the calling thread only delegates the task to the part of the OS which handles the device drivers. There is no reason why the thread should remain blocked till the task is completed.

In the .NET class library, the Asynchronous Programming Model on certain types handles the I/O threads, for example BeginRead() and EndRead() in the FileStream class. As a rule of thumb, all methods named BeginXXX and EndXXX fall into this category.
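The two ideas above, querying the pool and reading a file through BeginRead() and EndRead(), can be sketched as follows. The class name AsyncReadDemo, the ReadWithApm helper, and the temporary file are assumptions made for this illustration. The FileStream is opened with useAsync set to true so that the read is requested as asynchronous I/O. How the runtime services that request varies by platform and .NET version.

```csharp
using System;
using System.IO;
using System.Threading;

public static class AsyncReadDemo
{
    // Starts an asynchronous read and returns the number of bytes read.
    public static int ReadWithApm(string path)
    {
        var buffer = new byte[4096];
        int bytesRead = 0;

        using (var done = new ManualResetEvent(false))
        using (var stream = new FileStream(path, FileMode.Open, FileAccess.Read,
                                           FileShare.Read, 4096, true))
        {
            // BeginRead returns immediately; the callback runs when the read completes.
            stream.BeginRead(buffer, 0, buffer.Length, asyncResult =>
            {
                bytesRead = stream.EndRead(asyncResult);
                done.Set();
            }, null);

            // Demo only: a real application would carry on with other work
            // here instead of waiting for the callback.
            done.WaitOne();
        }
        return bytesRead;
    }

    public static void Main()
    {
        int workerThreads, ioThreads;
        ThreadPool.GetAvailableThreads(out workerThreads, out ioThreads);
        Console.WriteLine("Available worker threads: {0}, I/O threads: {1}", workerThreads, ioThreads);

        string path = Path.GetTempFileName();
        File.WriteAllText(path, "hello world");
        Console.WriteLine("Bytes read: {0}", ReadWithApm(path));
        File.Delete(path);
    }
}
```

The wait on the event is there only so that the sketch can return a value. Blocking like this gives up the benefit described above, which comes from letting the calling thread return to other work while the read is in progress.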

Summary

"With great power comes great responsibility" - ThreadPool

- No application should ever run heavy tasks on the UI thread. There is nothing uglier than a frozen UI. Threads should be created to manage the heavy work asynchronously, using thread pools whenever possible.

- The UI cannot be updated directly from a non-UI or background thread. Programmers need to delegate that work to the UI thread. This is done using the Invoke method of a Windows Forms control or the Dispatcher in WPF, or it is handled automatically when using BackgroundWorker.

- Threads are expensive resources and should be treated with respect. "The more the merrier" is unfortunately not applicable.

- Problems in our application will not go away by simply assigning a task to another thread. There is no magic happening and we need to carefully consider our design and purpose for maximizing efficiency.

- Creating a thread with the Thread class should be done with caution. Wherever possible a thread pool should be used. It is also not a good idea to fiddle around with the priority of a thread, as it may stop other important threads from getting executed.

- Setting the IsBackground property to false carelessly can have a catastrophic effect. A foreground thread will not let the application terminate until its task is complete. So if the user wants to terminate an application while a task is still running on a thread that has been marked as a foreground thread, the application won't be terminated till the task is completed.

- Thread synchronization techniques should be carefully implemented when multiple threads are sharing resources in an application. Deadlocks should be avoided through careful coding. Nesting of locks should be avoided, as it may result in deadlocks.

- Programmers should make sure that they do not end up creating more threads than required. Idle threads only add overhead and may result in an "Out of Memory" exception.

- I/O operations should be delegated to I/O threads rather than worker threads.