Signum Framework Tutorials

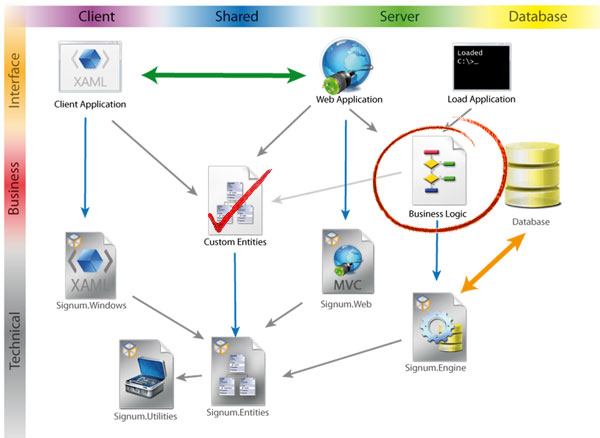

Signum Framework is a new open source framework for the development of entity-centric N-Layer applications. The core is Signum.Engine, an ORM with a full LINQ provider, which works nicely on both client-server (WPF & WCF) and web applications (ASP.Net MVC).

The focus of Signum Framework is to simplify the creation of composable vertical modules that can be used in many applications, and to promote clean and simple code by encouraging functional programming and the scope pattern.

If you want to know more about what makes Signum Framework different, take a look at the Signum Framework Principles.

We are pleased to announce that we have finally released Signum Framework 2.0.

The release took longer than expected but, as a result, is much more ambitious. We have been using the framework internally on a daily basis, and we think that this release is finished and will please everyone brave enough to use it.

The new release focuses on several areas:

- Signum.Web: Based on ASP.Net MVC 3.0 and Razor, it tries to keep the same feel and productivity as Signum.Windows without constraining the possibilities of the web (jQuery, Ajax, control of the markup, friendly urls…)

- Keeping up to date with technology: The framework now runs only on .Net 4.0/ ASP.Net MVC 3.0 and the snippets and templates target Visual Studio 2010.

- Improving pretty much everything and fixing bugs: For a complete list look at the change log http://www.signumframework.com/ChangeLog2.0.ashx

In order to show the capabilities of the framework, and have a good understanding of the architecture, we’re preparing a series of tutorials in which we will work on a stable application: Southwind.

Southwind is the Signum version of Northwind, the well-known example database provided with Microsoft SQL Server.

In this series of tutorials we will create the whole application, including the entities, business logic, Windows (WPF) and web (MVC) user interfaces, data loading and any other aspect worth explaining.

In the last tutorial we created the entities for our Southwind application and learned about Entities, EmbeddedEntities, MList<T> and Lite<T>, among other things.

In this tutorial we will focus on writing the business logic, and how to interact with the database.

In a Signum Framework application, we understand the business logic as the code that lives in a different assembly from the entities (in this case Southwind.Logic) and runs on the server.

This assembly contains the code that interacts with the database:

- the business rules

- the processes that are defined in our application

- the queries that will be used

- etc....

By placing all this code in a different assembly, we can use it in different situations:

- a web application

- a windows application through a WCF service

- a loading application with administrative purposes

- a collection of unit tests.

Just as Southwind.Entities holds all the entities that shape our data, Southwind.Logic contains classes that each interact with a small group of highly cohesive entities (usually from 1 to 4). We call them the 'Logic classes', and they are responsible for:

- Including these entities in the schema, so the application knows that they are required to be in the database

- Registering queries that will be available to the user (similar to views)

- Defining the business logic that deals with these entities

Unlike other ORMs such as NHibernate or Entity Framework, Signum Framework has no concept of session, data context or any other name for the Unit of Work pattern.

Instead, the change tracking of the entities is kept inside the entities themselves, and other related information, like the current connection or transaction or the cache of entities already retrieved, is kept in implicit contexts using ThreadStatic variables. We call this pattern the Scope Pattern.

The main benefit of using this pattern (and the main reason we bother to make a Framework), is that by not passing around an object that represents our database, we are making our code schema-independent. This means that the code that deals with the database will work fine in a different schema that contains similar tables, making it possible to re-use vertical modules.
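To make the idea concrete, here is a minimal sketch of an implicit context kept in a ThreadStatic variable. The names ConnectionScope and EmployeeReports are hypothetical and exist only for this illustration; they are not Signum Framework classes, and the real implementation may well differ.

```csharp
using System;

// Hypothetical ambient scope, for illustration only (not a Signum class).
public sealed class ConnectionScope : IDisposable
{
    [ThreadStatic]
    static ConnectionScope current;      // one independent value per thread

    readonly ConnectionScope parent;
    public string Name { get; private set; }

    public ConnectionScope(string name)
    {
        Name = name;
        parent = current;                // remember the enclosing scope, if any
        current = this;                  // become the ambient scope
    }

    public static bool HasCurrent { get { return current != null; } }

    public static ConnectionScope Current
    {
        get
        {
            if (current == null)
                throw new InvalidOperationException("No ConnectionScope is active");
            return current;
        }
    }

    public void Dispose()
    {
        current = parent;                // restore the enclosing scope
    }
}

public static class EmployeeReports
{
    // No database or context parameter: the ambient scope is read implicitly.
    public static string Describe()
    {
        return "Running against " + ConnectionScope.Current.Name;
    }
}

public static class Program
{
    public static void Main()
    {
        using (new ConnectionScope("Southwind"))
        {
            Console.WriteLine(EmployeeReports.Describe());
            using (new ConnectionScope("SouthwindTest"))
                Console.WriteLine(EmployeeReports.Describe());
            Console.WriteLine(EmployeeReports.Describe());
        }
    }
}
```

Because EmployeeReports never receives an object that represents the database, the same method could be reused unchanged under a different scope, which is the property the paragraph above describes.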

We also encourage defining logic classes as static classes: they usually have no state, so you don’t have to instantiate anything before using them, and you can easily define their methods as extension methods over the main entity.

Let’s start by writing a class that will deal with employees. We make it a new static class named EmployeeLogic that looks like this:

public static class EmployeeLogic

{

public static void Start(SchemaBuilder sb, DynamicQueryManager dqm)

{

if (sb.NotDefined(MethodInfo.GetCurrentMethod()))

{

sb.Include<EmployeeDN>();

}

}

}

By convention, we write a static Start method that takes a SchemaBuilder and a DynamicQueryManager. In this method we register everything necessary to make Employee a fully working module in our application.

The first thing to do is to check if the method has been already called before, to avoid registering the module twice. We use the convenient NotDefined method from SchemaBuilder.
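As a rough illustration of how such a guard can work, the sketch below remembers which Start methods have already run. StartGuard and EmployeeModule are hypothetical names invented for this example; this is not the real SchemaBuilder, whose internals are not shown in this tutorial.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

// Hypothetical stand-in for the guard; NOT the real SchemaBuilder.
public class StartGuard
{
    readonly HashSet<string> started = new HashSet<string>();

    // Returns true only the first time a given method is seen.
    public bool NotDefined(MethodBase method)
    {
        string key = method.DeclaringType.FullName + "." + method.Name;
        return started.Add(key);         // HashSet.Add returns false for duplicates
    }
}

public static class EmployeeModule
{
    public static int Registrations;

    public static void Start(StartGuard guard)
    {
        if (guard.NotDefined(MethodBase.GetCurrentMethod()))
        {
            // A real module would include entities and register queries here.
            Registrations++;
        }
    }
}

public static class Program
{
    public static void Main()
    {
        var guard = new StartGuard();
        EmployeeModule.Start(guard);
        EmployeeModule.Start(guard);     // second call is ignored by the guard
        Console.WriteLine(EmployeeModule.Registrations);
    }
}
```

With a guard like this, a module can call the Start method of every module it depends on without worrying about whether another module has already started it.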

In this case, let’s just include EmployeeDN in our schema. As we saw in the last tutorial, just by including EmployeeDN, the related entities (in this case TerritoryDN and RegionDN) get included automatically.

Some business logic

Now let’s create some methods in our logic.

The first method, which saves a new employee in the database, is this simple:

public static void Create(EmployeeDN employee)

{

if (!employee.IsNew)

throw new ArgumentException("The employee should be new", "employee");

employee.Save();

}

Note that every IdentifiableEntity has an IsNew property that indicates whether the entity has been saved in the database or not.

Also, it is possible that the Save method does not compile, because it’s an extension method defined in the static class Database in the Signum.Engine namespace. Just add:

using Signum.Engine;

The Database class contains the methods to interact with the database: Save, Retrieve or Delete entities. There are generic and untyped overloads of the methods, as well as overloads that deal with a single entity or a list of them.

public static class Database

{

public static T Save<T>(this T entity) where T : class, IIdentifiable;

public static void SaveList<T>(this IEnumerable<T> entities)

where T : class, IIdentifiable;

public static void SaveParams(params IIdentifiable[] entities);

public static T Retrieve<T>(int id) where T : IdentifiableEntity;

public static T Retrieve<T>(this Lite<T> lite) where T : class, IIdentifiable;

public static IdentifiableEntity Retrieve(Type type, int id);

public static IdentifiableEntity Retrieve(Lite lite);

public static T RetrieveAndForget<T>(this Lite<T> lite)

where T : class, IIdentifiable;

public static IdentifiableEntity RetrieveAndForget(Lite lite);

public static List<T> RetrieveAll<T>() where T : IdentifiableEntity;

public static List<IdentifiableEntity> RetrieveAll(Type type);

public static List<Lite<T>> RetrieveAllLite<T>() where T : IdentifiableEntity;

public static List<Lite> RetrieveAllLite(Type type);

public static List<T> RetrieveFromListOfLite<T>(this IEnumerable<Lite<T>> lites)

where T : class, IIdentifiable;

public static List<IdentifiableEntity> RetrieveFromListOfLite(IEnumerable<Lite> lites);

public static List<T> RetrieveList<T>(List<int> ids) where T : IdentifiableEntity;

public static List<IdentifiableEntity> RetrieveList(Type type, List<int> ids);

public static List<Lite<T>> RetrieveListLite<T>(List<int> ids)

where T : IdentifiableEntity;

public static List<Lite> RetrieveListLite(Type type, List<int> ids);

public static Lite<T> RetrieveLite<T>(int id) where T : IdentifiableEntity;

public static Lite<T> RetrieveLite<T>(Type runtimeType, int id)

where T : class, IIdentifiable;

public static Lite<T> RetrieveLite<T, RT>(int id)

where T : class, IIdentifiable

where RT : IdentifiableEntity, T;

public static Lite RetrieveLite(Type type, int id);

public static Lite RetrieveLite(Type type, Type runtimeType, int id);

public static void Delete<T>(int id) where T : IdentifiableEntity;

public static void Delete<T>(this Lite<T> lite) where T : class, IIdentifiable;

public static void Delete<T>(this T ident) where T : IdentifiableEntity;

public static void Delete(Type type, int id);

public static void DeleteList<T>(IList<int> ids) where T : IdentifiableEntity;

public static void DeleteList<T>(IList<Lite<T>> collection)

where T : class, IIdentifiable;

public static void DeleteList<T>(IList<T> collection) where T : IdentifiableEntity;

public static void DeleteList(Type type, IList<int> ids);

public static bool Exists<T>(int id) where T : IdentifiableEntity;

public static bool Exists(Type type, int id);

public static Lite<T> FillToStr<T>(this Lite<T> lite) where T : class, IIdentifiable;

public static string GetToStr<T>(int id) where T : IdentifiableEntity;

public static Lite FillToStr(Lite lite);

public static IQueryable<T> Query<T>() where T : IdentifiableEntity;

public static IQueryable<S> InDB<S>(this Lite<S> lite) where S : class, IIdentifiable;

public static IQueryable<S> InDB<S>(this S entity) where S : IIdentifiable;

public static IQueryable<MListElement<E, V>> MListQuery<E, V>(

Expression<Func<E, MList<V>>> mlistProperty)

where E : IdentifiableEntity;

public static IQueryable<T> View<T>() where T : IView;

public static int UnsafeDelete<T>(this IQueryable<T> query)

where T : IdentifiableEntity;

public static int UnsafeDelete<E, V>(this IQueryable<MListElement<E, V>> query)

where E : IdentifiableEntity;

public static int UnsafeUpdate<T>(this IQueryable<T> query,

Expression<Func<T, T>> updateConstructor) where T : IdentifiableEntity;

public static int UnsafeUpdate<E, V>(this IQueryable<MListElement<E, V>> query,

Expression<Func<MListElement<E, V>, MListElement<E, V>>> updateConstructor)

where E : IdentifiableEntity;

}

Also, since most of the methods in Database are extension methods (Save, SaveList, Delete...), the code looks clean and natural. But remember that this is just a compilation trick: the entities are defined in a different assembly and depend on neither the engine nor the database, so they can be used in a Windows user interface on a client machine.

Let's see some other examples of simple business logic.

To retrieve an employee by Id, write:

public static EmployeeDN Retrieve(int id)

{

return Database.Retrieve<EmployeeDN>(id);

}

And to remove an entity from the database just write

public static void Remove(EmployeeDN employee)

{

employee.Delete();

}

Linq to Signum is a robust and complete implementation of a LINQ provider that integrates nicely with the framework philosophy. You can expect from it pretty much everything that LINQ to SQL currently has (Select, Where, implicit and explicit Join, GroupBy, OrderBy, Skip, Take, Single...) and some new tricks that we will see later.

We can use Linq to Signum in many different situations, typically in your business logic or load application, or you can expose open queries to the end user via the DynamicQueryManager.

Let’s make a query a little more complex, for example, a method that returns the N best employees that report to the current employee.

public static List<Lite<EmployeeDN>> TopEmployees(int num)

{

return (from e in Database.Query<EmployeeDN>()

where e.ReportsTo.RefersTo(EmployeeDN.Current)

orderby Database.Query<OrderDN>().Count(a => a.Employee == e.ToLite())

select e.ToLite()).Take(num).ToList();

}

As you can see, the syntax feels quite natural and similar to other LINQ providers, with some small differences:

- We don’t have an explicit context that represents the database, with a property for each table. Instead, you have to use the Database.Query<T>() static method to reach a table of entities. It’s a little longer, but it has benefits for re-using your code, since your code doesn’t get attached to a particular database schema.

- We use Lite<T> to represent lazy relationships and, in general, the identity information of an entity. To compare a Lite<T> with an entity you can use the RefersTo() or ToLite() method (also useful for casting lites with a different static type).

If you already know other IQueryable providers, taking into account these two little differences you should be able to write quite complex queries already.

How does a LINQ provider work?

LINQ is a wonderful technology that Microsoft has given us. It makes writing queries against any data source uniform and more expressive, but the abstraction is so strong that sometimes it's hard for the developer to write efficient code.

I would like to go deeper into some issues that affect every LINQ provider that targets SQL and that are not properly explained elsewhere.

As part of the translation process, your query is basically split into three parts.

- Constant sub-expressions that should be evaluated in C# and used as SqlParameters

- A SQL string that represents your query

- A lambda expression that translates each DataRow into your result object.

In our last query, for example, it will get split pretty much like this:

(from e in Database.Query<EmployeeDN>()

where e.ReportsTo == EmployeeDN.Current.ToLite()

orderby Database.Query<OrderDN>().Count(a => a.Employee == e.ToLite())

select e.ToLite()).Take(num).ToList()

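It’s important to know this because, if the code that is evaluated in C# (the constant sub-expressions or the result lambda, highlighted in green and blue in the original listing) is buggy, the exception will look like it comes from the LINQ provider when it is actually in your code.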
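The first of those three parts can be illustrated with the standard System.Linq.Expressions API. The sketch below is not Linq to Signum code: ConstantEvaluator is a hypothetical helper that takes a sub-expression with no dependency on the query parameter, compiles it and runs it in C#, which is roughly what a provider has to do before it can pass the value as a SQL parameter.

```csharp
using System;
using System.Linq.Expressions;

// Hypothetical helper, for illustration only.
public static class ConstantEvaluator
{
    // Evaluates a sub-expression that does not depend on any lambda parameter.
    public static object Evaluate(Expression expression)
    {
        // Box the result and wrap it in a parameterless lambda we can run.
        Expression boxed = Expression.Convert(expression, typeof(object));
        return Expression.Lambda<Func<object>>(boxed).Compile()();
    }
}

public static class Program
{
    public static object RightSideValue(int limit)
    {
        // 's.Length' depends on the parameter; 'limit + 1' does not.
        Expression<Func<string, bool>> predicate = s => s.Length > limit + 1;
        var comparison = (BinaryExpression)predicate.Body;
        return ConstantEvaluator.Evaluate(comparison.Right);
    }

    public static void Main()
    {
        // The constant side is computed in C#, before any SQL would be built.
        Console.WriteLine(RightSideValue(3));
    }
}
```

If the code inside such a sub-expression throws, the exception surfaces while the provider is evaluating it, which is why it can look like a provider bug.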

Also, writing a LINQ provider is quite a complex task that requires, apart from implementing the translation of all the LINQ operators, solving some hard problems, some of which the developer should be aware of:

- Handle nullability mismatch (explicit in C#, implicit in SQL). Sometimes a cast to Nullable<T> is necessary for value types.

Database.Query<EmployeeDN>().Select(e => (int?)e.ReportsTo.Id)

- Handle boolean expressions (SQL has no boolean expressions, just bit values and conditions). These conversions make some SQL queries awkward.

Database.Query<EmployeeDN>().Where(e => true)

.Select(e => new { IsId2 = e.Id == 2})

- Short-circuit the evaluation and translation of sub-expressions that are known to be useless after the evaluation of a previous constant boolean sub-expression.

Database.Query<EmployeeDN>().Where(e => name != null ? e.Name == name: true)

Database.Query<EmployeeDN>().Where(e => name == null || e.Name == name)

- When the result set has sub-collections on each row, a client join is made automatically (no N+1 problem). Works fine, but take it into account.

Database.Query<RegionDN>().Select(r => new { Region = r.ToLite(), r.Territories})

- If there are entities in the result set, reconstruct the entity graph without creating duplicates. As a piece of advice, retrieve just Lite<T> if possible; it's more efficient.

Database.Query<EmployeeDN>()

Dynamic queries for the user

The DynamicQueryManager is a repository of queries associated with a key ‘queryName’. These queries are open in the sense that the end user is able to add filters, change ordering or even add and remove columns.

The main use of these dynamic queries is in search dialogs in Windows and web applications, but the concept is general enough to be used by third-party consumers, like the tools in Signum Extensions for exporting results to Excel or for making dynamic charts.

Also, the only current implementation is based on Linq to Signum, taking advantage of the localization of the entities and the authorization system in Signum.Extensions, but other implementations based on other LINQ providers or plain SQL views could be created.

Let’s get practical. In the Start method, just after including EmployeeDN in the Schema, we will add a query for each entity in this Employee module:

public static void Start(SchemaBuilder sb, DynamicQueryManager dqm)

{

if (sb.NotDefined(MethodInfo.GetCurrentMethod()))

{

sb.Include<EmployeeDN>();

dqm[typeof(RegionDN)] = (from r in Database.Query<RegionDN>()

select new

{

Entity = r.ToLite(),

r.Id,

r.Description,

}).ToDynamic();

dqm[typeof(TerritoryDN)] = (from t in Database.Query<TerritoryDN>()

select new

{

Entity = t.ToLite(),

t.Id,

t.Description,

Region = t.Region.ToLite()

}).ToDynamic();

dqm[typeof(EmployeeDN)] = (from e in Database.Query<EmployeeDN>()

select new

{

Entity = e.ToLite(),

e.Id,

e.UserName,

e.FirstName,

e.LastName,

e.BirthDate,

e.Photo,

}).ToDynamic();

}

}

Let’s analyze the code. What we do is to associate each query with a type, in the first case typeof(RegionDN).

Since a queryName is an object, we can use any System.Type as a name. When a query is associated with a type it becomes the default query for this type. This is convenient in order to work less in the user interface.

On the right side, what we have is an IQueryable of an anonymous type that is converted to a DynamicQuery<T> using the ToDynamic extension method.

In order to create a dynamic query, the only necessary thing is to provide an IQueryable<T> that contains, among other properties, an Entity property of type Lite. This value will be the entity associated with every record and won’t be shown to the end user.

You can also use Lite<T> for other columns, this way you will have links to the related entities and the filter will have auto-completion.

A dynamic query is open to changes from the user:

- Filters: The user can visually add and remove filters, each consisting of a [Column, Operation, Value] tuple. The value and the operation will correspond to the type of the column.

E.g.: Where [Territory.Region.Name, Equals, "UK"]

- Orders: The user can order by any column (numbers, strings, entities,...) ascending or descending, and add multiple order criteria by shift-clicking.

E.g.: OrderBy [Territory.Region ASC], [Employee.Name DESC]

- Columns: The user can remove existing columns and add new ones. The new columns can be any field of the entity and, if the field holds another entity, the fields of this sub-entity, and so on. A left outer join will be made, so the number of rows won’t change.

E.g.: Add Columns [Territory.Region.Name], [Territory.Region.Id]

- Collections: In the case of collection fields, the user will also be able to filter the results depending on the elements in the collection (via All or Any) or multiply the results by the elements in the collection (implemented using SelectMany). In this case, a message will alert the user that the number of rows is being multiplied.

E.g.: Where [Employee.Territories.Any, EqualsTo, (Territory,2)]

Add columns [Employee.Territories.Element.Name]

OrderBy [Employee.Territories.Element.Id]
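To give an idea of how a [Column, Operation, Value] tuple chosen at run time can be applied to an open query, here is a minimal sketch using only the standard expression API. WhereEquals, FilterSketch and TerritoryRow are hypothetical names for this example; the real DynamicQueryManager is not shown here and surely handles many more cases (other operations, nested columns, collections).

```csharp
using System;
using System.Linq;
using System.Linq.Expressions;

public class TerritoryRow
{
    public string Name { get; set; }
    public string Region { get; set; }
}

public static class FilterSketch
{
    // Hypothetical helper: turns [column, Equals, value] into a Where call.
    public static IQueryable<T> WhereEquals<T>(this IQueryable<T> query, string column, object value)
    {
        ParameterExpression row = Expression.Parameter(typeof(T), "row");
        MemberExpression member = Expression.PropertyOrField(row, column);
        ConstantExpression constant = Expression.Constant(value, member.Type);
        Expression<Func<T, bool>> predicate =
            Expression.Lambda<Func<T, bool>>(Expression.Equal(member, constant), row);
        return query.Where(predicate);   // extends the query with a new Where node
    }
}

public static class Program
{
    public static IQueryable<TerritoryRow> Sample()
    {
        return new[]
        {
            new TerritoryRow { Name = "London", Region = "UK" },
            new TerritoryRow { Name = "Leeds", Region = "UK" },
            new TerritoryRow { Name = "Boston", Region = "US" },
        }.AsQueryable();
    }

    public static void Main()
    {
        // The column name and value arrive as data, as if picked in a search dialog.
        foreach (TerritoryRow t in Sample().WhereEquals("Region", "UK"))
            Console.WriteLine(t.Name);
    }
}
```

Because the filter is added as an expression tree node rather than as compiled code, a provider that translates to SQL could still turn it into a WHERE clause.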

All these features make dynamic queries an invaluable technology for an advanced end user that, as far as we know, is not available in any other framework. However, we will have to wait for the next tutorials to see it working.

Let’s now make a ProductLogic static class with a Start method that begins with the NotDefined check. Then we include ProductDN in the schema builder and, finally, add some queries for the administration of categories:

dqm[typeof(CategoryDN)] = (from s in Database.Query<CategoryDN>()

select new

{

Entity = s.ToLite(),

s.Id,

s.CategoryName,

s.Description,

}).ToDynamic();

Suppliers:

dqm[typeof(SupplierDN)] = (from s in Database.Query<SupplierDN>()

select new

{

Entity = s.ToLite(),

s.Id,

s.CompanyName,

s.ContactName,

s.Phone,

s.Fax,

s.HomePage,

s.Address

}).ToDynamic();

And Products:

dqm[typeof(ProductDN)] = (from p in Database.Query<ProductDN>()

select new

{

Entity = p.ToLite(),

p.Id,

p.ProductName,

p.Supplier,

p.Category,

p.QuantityPerUnit,

p.UnitPrice,

p.UnitsInStock,

p.Discontinued

}).ToDynamic();

And let’s also make a query for current products, so we don’t have to filter out discontinued products all the time:

dqm[ProductQueries.Current] = (from p in Database.Query<ProductDN>()

where !p.Discontinued

select new

{

Entity = p.ToLite(),

p.Id,

p.ProductName,

p.Supplier,

p.Category,

p.QuantityPerUnit,

p.UnitPrice,

p.UnitsInStock,

}).ToDynamic();

Notice how in this second query for products we used an enum, instead of a string, as the queryName. We prefer enums over strings because they are strongly typed and easier to localize.

We won’t create any method for dealing with the entities, the default behavior of the user interface will just Save the objects and this is all right in this case.

Quite simple, let’s make it a little bit more complicated.

Let’s suppose that it would be useful for the administrator to have a ValueInStock column (UnitPrice * UnitsInStock), to avoid accumulating too much capital in products.

We could just create the column in the query, but then we would have to replicate the code to show the value in the user interface of the entity, in the business logic or in some reports.

A much more elegant solution would be to add a read–only calculated property in the product entity itself, like this:

public class ProductDN : Entity

{

(…)

public decimal ValueInStock

{

get { return unitPrice * unitsInStock; }

}

(…)

}

Unfortunately, the body of the getter of this property will be compiled to MSIL, so the LINQ provider won’t have any idea of what ValueInStock does. What we would like is to keep the body as an expression tree, so the LINQ provider can understand the definition.

Until the C# team adds such support, we have adopted the following convention: whenever the provider cannot find a translation for a property or method, it looks in the same class for a static field with the same name but ending in Expression. This field should contain a lambda expression tree (Expression<T>) with the same input and output parameters, including the object itself in the case of instance members.

By using this, you can add more semantic information to your entities and factor-out your business logic in reusable functions that can be understood by the queries, making your business logic way simpler and easier to maintain.

Signum Framework setup already installs two snippets to make use of this feature.

expressionProperty [Tab] [Tab] ProductDN [Tab] decimal [Tab] ValueInStock [Tab] p [Enter]

Maybe we will need to add the following namespaces:

using System.Linq.Expressions;

using Signum.Utilities;

Finally we will implement the body of the lambda with our simple formula, but using the parameter p since the field is static. The result should look like this:

public class ProductDN : Entity

{

(…)

static Expression<Func<ProductDN, decimal>> ValueInStockExpression =

p => p.unitPrice * p.unitsInStock;

public decimal ValueInStock

{

get { return ValueInStockExpression.Invoke(this); }

}

(…)

}

Now the LINQ provider understands this property, so we can use it in our queries.

Also, if we use the property in normal in-memory code, the Invoke method (defined in Signum.Utilities) compiles, caches and invokes the lambda expression, so we only have to write the code once.

If you need the definition for the queries to be different than the one for code, just write a different code in the getter.

This technique works for properties and methods (even extension methods) and for static and instance members, and constitutes the simplest way to extend the LINQ provider (there are two more ways).
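The compile, cache and invoke behaviour can be sketched with the standard library alone. InvokeCached below is a hypothetical stand-in written for this example, not the actual Invoke method from Signum.Utilities, and the Product class is a simplified placeholder for ProductDN.

```csharp
using System;
using System.Collections.Concurrent;
using System.Linq.Expressions;

public static class ExpressionCache
{
    // Compiled delegates, keyed by the expression instance they came from.
    static readonly ConcurrentDictionary<LambdaExpression, Delegate> compiled =
        new ConcurrentDictionary<LambdaExpression, Delegate>();

    // Hypothetical stand-in for Invoke: compiles once, then reuses the delegate.
    public static R InvokeCached<T, R>(this Expression<Func<T, R>> expression, T arg)
    {
        var func = (Func<T, R>)compiled.GetOrAdd(expression, e => e.Compile());
        return func(arg);
    }
}

// Simplified placeholder for ProductDN.
public class Product
{
    public decimal UnitPrice;
    public int UnitsInStock;

    // The definition lives in an expression tree that a provider could inspect.
    public static readonly Expression<Func<Product, decimal>> ValueInStockExpression =
        p => p.UnitPrice * p.UnitsInStock;

    // In-memory code reuses the same definition through the cached delegate.
    public decimal ValueInStock
    {
        get { return ValueInStockExpression.InvokeCached(this); }
    }
}

public static class Program
{
    public static void Main()
    {
        var product = new Product { UnitPrice = 2.5m, UnitsInStock = 4 };
        Console.WriteLine(product.ValueInStock);
        // The body is still available as data, unlike a compiled getter.
        Console.WriteLine(Product.ValueInStockExpression.Body.NodeType);
    }
}
```

Keeping the expression in a static field matters for the cache: the same instance is used as the key on every call, so the lambda is compiled only once.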

How do expression trees get generated?

LINQ syntax makes querying in-memory objects so similar to querying a database that sometimes developers get confused. The reason is that creating an expression tree looks exactly like creating a normal lambda expression.

An expression tree is a run-time representation of a piece of code that can be used by a library (like a LINQ provider). Expression trees can be compiled and executed, but compiled code cannot be converted back to expression trees. Only expression trees can be translated to SQL.

The code that creates this tree of nodes is plain C# that uses the Expression class, and can be automatically generated by the C# compiler, or can be written manually.

Currently the C# compiler is able to generate expression trees for lambda expressions whose inferred type is Expression<T>, T being some delegate type (like Func, Action, EventHandler,...), and only if the lambda expression has an expression body (instead of a statement body).

However, when we call the methods on Queryable static class (Select, Where, GroupBy...), the methods are actually executed (contrary to common belief) but their only purpose is to create another IQueryable<T> with an expression that ‘extends’ the expression of the previous IQueryable<T> with the node for the current operator (code that writes itself!).

For example, in our previous query (this time without query comprehension syntax):

Database.Query<EmployeeDN>()

.Where(e=>e.ReportsTo == EmployeeDN.Current.ToLite())

.OrderBy(e=>Database.Query<OrderDN>().Count(a => a.Employee == e.ToLite()))

.Select(e=>e.ToLite())

.Take(num)

.ToList();

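- Expression created by the C# compiler: the lambdas passed as arguments to each operator

- Expression created at runtime: the nodes for the operators themselves (Where, OrderBy, Select, Take), added when each Queryable method is executed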
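The same mechanism can be observed without any database by using the in-memory IQueryable that AsQueryable() returns. This sketch uses only standard System.Linq calls; it walks the expression that the Queryable methods built at run time, from the outermost operator inwards.

```csharp
using System;
using System.Linq;
using System.Linq.Expressions;

public static class Program
{
    public static IQueryable<int> BuildQuery()
    {
        IQueryable<int> source = new[] { 5, 1, 4, 2, 3 }.AsQueryable();
        // Each call runs immediately, but only to wrap the previous expression.
        return source.Where(n => n > 1).OrderBy(n => n).Take(2);
    }

    public static void Main()
    {
        IQueryable<int> query = BuildQuery();

        // Nodes created at run time by the Queryable methods.
        var take = (MethodCallExpression)query.Expression;
        var orderBy = (MethodCallExpression)take.Arguments[0];
        var where = (MethodCallExpression)orderBy.Arguments[0];
        Console.WriteLine(take.Method.Name + " <- " + orderBy.Method.Name + " <- " + where.Method.Name);

        // The lambda created by the C# compiler, stored quoted as an argument.
        var quote = (UnaryExpression)where.Arguments[1];
        Console.WriteLine(quote.Operand);

        // Nothing has been evaluated until the query is enumerated.
        foreach (int n in query)
            Console.WriteLine(n);
    }
}
```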

This subtle difference is important in the following situations: Imagine that we make an extension method like this:

public static IQueryable<ProductDN> AvailableOnly(this IQueryable<ProductDN> products)

{

return products.Where(a => !a.Discontinued);

}

This code works fine as long as it's used in the ‘main query path’, because it gets executed. But if we use it inside a lambda expression (e.g. a where predicate), the code is not executed, and the LINQ provider finds an AvailableOnly method but has no clue what it does.

Using expressionMethods we circumvent this problem:

static Expression<Func<IQueryable<ProductDN>, IQueryable<ProductDN>>> AvailableOnlyExpression =

products => products.Where(a => !a.Discontinued);

public static IQueryable<ProductDN> AvailableOnly(this IQueryable<ProductDN> entity)

{

return AvailableOnlyExpression.Invoke(entity);

}

Note: In more complex scenarios, like when the method is generic or there are different overloads, we could use MethodExpanderAttribute and IMethodExpander to teach the LINQ provider how to translate the unknown method.

Every ORM has to deal with inheritance somehow (Table per hierarchy, Table per subclass, Table per concrete class).

Signum Framework keeps every concrete class in its own individual table, and uses polymorphic foreign keys to model inheritance. There are two different attributes that we can place on any field that represents a relationship (Entity or Lite<T>) in our entities:

- ImplementedBy: creates a set of mutually exclusive foreign keys to differentiate tables that correspond to just one field of an entity. Useful when there are just a few different implementations.

- ImplementedByAll: creates two columns (id and typeId), allowing to point to any entity in the database, but has no referential integrity and a weaker support from the UI and the LINQ provider (no automatic union).

This solution has some important advantages over other solutions:

- Every entity has just one Type and Id, and lives in just one table. E.g., the Cat with Id 4 has no Id as an Animal.

- The database doesn’t need to know about the whole hierarchy of classes (like abstract classes), only the concrete ones that are included in the schema.

- Since we have a way to override attributes, even without control of the entity, we can use polymorphic foreign keys to add expansion points in our modules.

Let's see an example of inheritance...

Northwind was a traditional schema without any concept of inheritance or polymorphism. In our model, we will pretend that Southwind now has both companies and persons as customers, with some data in common and some that differs. Let’s go back to Southwind.Entities and make some changes:

- Make CustomerDN abstract and create two new entities: PersonDN and CompanyDN, both inheriting from CustomerDN.

- Move companyName, contactName and contactTitle to CompanyDN.

- Add some properties to PersonDN: title, firstName, lastName, and dateOfBirth (with DateTimePrecissionValidator set to Days).

- Override ToString in both classes, returning the CompanyName for CompanyDN and the concatenation of first and last name for PersonDN.

If we try to start the Southwind.Load application at this point, we will get an exception like this:

As we see, we cannot include abstract classes (or interfaces) in the Schema, only concrete classes that can be instantiated.

In order to fix this error we need to indicate to the SchemaBuilder that the relationship to a CustomerDN will be implemented by either a PersonDN or a CompanyDN. If we have control of the class the easiest way is to add an ImplementedByAttribute in the customer field in OrderDN:

[ImplementedBy(typeof(CompanyDN), typeof(PersonDN))]

CustomerDN customer;

[NotNullValidator]

public CustomerDN Customer

{

get { return customer; }

set { Set(ref customer, value, () => Customer); }

}

Let’s try again and now we should be able to synchronize the schema by running the load application.

First, the synchronizer detects that the CustomerDN table has been removed and that some new tables (CompanyDN and PersonDN) have been created, and asks whether CustomerDN has been renamed. We currently have no data inside so it doesn’t really matter, but if we had some we would probably want to move it to CompanyDN, so let’s pretend and answer CompanyDN.

Secondly, it asks about the idCustomer field in OrderDN. Now we have idCustomer_CompanyDN and idCustomer_PersonDN; if we wanted to keep the data, we would have to choose the first one.

Voilà! Here is our automatically generated sync script:

DROP INDEX OrderDN.FIX_OrderDN_idCustomer;

ALTER TABLE OrderDN DROP CONSTRAINT FK_OrderDN_idCustomer ;

EXEC SP_RENAME 'CustomerDN' , 'CompanyDN';

ALTER TABLE CompanyDN ALTER COLUMN ContactTitle NVARCHAR(10) NOT NULL;

ALTER TABLE EmployeeDN ADD UserName NVARCHAR(100) NOT NULL

ALTER TABLE EmployeeDN ADD PasswordHash NVARCHAR(200) NOT NULL

EXEC SP_RENAME 'OrderDN.idCustomer' , 'idCustomer_CompanyDN', 'COLUMN' ;

ALTER TABLE OrderDN ADD idCustomer_PersonDN INT NULL

CREATE TABLE PersonDN(

Id INT IDENTITY NOT NULL PRIMARY KEY,

ToStr NVARCHAR(200) NULL,

Ticks BIGINT NOT NULL,

Address_HasValue BIT NOT NULL,

Address_Address NVARCHAR(60) NULL,

Address_City NVARCHAR(15) NULL,

Address_Region NVARCHAR(15) NULL,

Address_PostalCode NVARCHAR(10) NULL,

Address_Country NVARCHAR(15) NULL,

Phone NVARCHAR(24) NOT NULL,

Fax NVARCHAR(24) NOT NULL,

FirstName NVARCHAR(40) NOT NULL,

LastName NVARCHAR(40) NOT NULL,

Title NVARCHAR(10) NOT NULL,

DateOfBirth DATETIME NOT NULL

);

ALTER TABLE OrderDN ADD CONSTRAINT FK_OrderDN_idCustomer_CompanyDN FOREIGN KEY (idCustomer_CompanyDN) REFERENCES CompanyDN(Id);

ALTER TABLE OrderDN ADD CONSTRAINT FK_OrderDN_idCustomer_PersonDN FOREIGN KEY (idCustomer_PersonDN) REFERENCES PersonDN(Id);

CREATE INDEX FIX_OrderDN_idCustomer_CompanyDN ON OrderDN(idCustomer_CompanyDN);

CREATE INDEX FIX_OrderDN_idCustomer_PersonDN ON OrderDN(idCustomer_PersonDN);

UPDATE TypeDN SET

ToStr = 'Company',

FullClassName = 'Southwind.Entities.CompanyDN',

TableName = 'CompanyDN',

CleanName = 'Company',

FriendlyName = 'Company'

WHERE id = 3;

INSERT TypeDN (ToStr, FullClassName, TableName, CleanName, FriendlyName)

VALUES ('Person', 'Southwind.Entities.PersonDN', 'PersonDN', 'Person', 'Person');

As you can see, the synchronizer is able to:

- Create, remove and rename Tables.

- Create, remove and rename Columns taking the types and constraints into account.

- Create and remove Foreign Keys.

- Create and remove Indices and indexed views.

- Insert, Update or Delete the necessary Records in some tables (Enums, etc..)

This feature makes working in a team really nice, since each member doesn’t have to maintain a script (or a fancy migration) with the necessary changes and merge it with the rest, which is a very error-prone process. Just get the latest version, create the sync script, check that no data gets lost and continue working.

When a not-null field is added to a table, a commented DEFAULT constraint is written in the statement for you to define the default value. If the default value depends on some other data, you can temporarily make the field nullable, fill in the data, and then generate the synchronization script again.

In this case we have no records so we can just remove the DEFAULT constraints and run the script. The OrderDN table should look like this now, notice how CustomerDN table is gone:

OK, let’s create our CustomerLogic (actually we haven’t even created it yet; all of this happened because we included OrderDN in MyEntityLogic).

As usual, it is a static class with a Start method, where we include CompanyDN and PersonDN and add some default queries for them. The result should look like this:

public static class CustomerLogic

{

public static void Start(SchemaBuilder sb, DynamicQueryManager dqm)

{

if (sb.NotDefined(MethodInfo.GetCurrentMethod()))

{

sb.Include<PersonDN>();

sb.Include<CompanyDN>();

dqm[typeof(PersonDN)] = (from r in Database.Query<PersonDN>()

select new

{

Entity = r.ToLite(),

r.Id,

r.FirstName,

r.LastName,

r.DateOfBirth,

r.Phone,

r.Fax,

r.Address,

}).ToDynamic();

dqm[typeof(CompanyDN)] = (from r in Database.Query<CompanyDN>()

select new

{

Entity = r.ToLite(),

r.Id,

r.CompanyName,

r.ContactName,

r.ContactTitle,

r.Phone,

r.Fax,

r.Address,

}).ToDynamic();

}

}

}

Finally, if we didn’t have control of the OrderDN or CustomerDN entities (because they are implemented in a shared library), we could still override the attributes at run-time at the beginning of our Starter class (the global one) like this:

sb.Settings.OverrideFieldAttributes((OrderDN o) => o.Customer,

new ImplementedByAttribute(typeof(CompanyDN), typeof(PersonDN)));

Our last step is to rename MyEntityLogic to OrderLogic to match its current responsibility and add a default query for it.

dqm[typeof(OrderDN)] = (from o in Database.Query<OrderDN>()

select new

{

Entity = o.ToLite(),

o.Id,

Customer = o.Customer.ToLite(),

o.Employee,

o.OrderDate,

o.RequiredDate,

o.ShipAddress,

o.ShipVia,

}).ToDynamic();

We will also create a query that shows the content of every order (OrderLinesDN). Again, we will use an enum for the key.

dqm[OrderQueries.OrderLines] = (from o in Database.Query<OrderDN>()

from od in o.Details

select new

{

Entity = o.ToLite(),

o.Id,

od.Product,

od.Quantity,

od.UnitPrice,

od.Discount,

}).ToDynamic();

These queries are all right, but... wouldn't it be useful to compute the SubTotalPrice for each OrderDetail?

We can do it using the expressionProperty snippet again, so the property is available in any scenario, both in memory and inside database queries.

[Serializable]

public class OrderDetailsDN : EmbeddedEntity

{

(…)

static Expression<Func<OrderDetailsDN, decimal>> SubTotalPriceExpression =

od => od.Quantity * od.UnitPrice * (decimal)(1 - od.Discount);

public decimal SubTotalPrice

{

get{ return SubTotalPriceExpression.Invoke(this); }

}

(…)

}

We could also do the same for TotalPrice in OrderDN.

[Serializable]

public class OrderDN : Entity

{

(…)

static Expression<Func<OrderDN, decimal>> TotalPriceExpression =

o => o.Details.Sum(od => od.SubTotalPrice);

public decimal TotalPrice

{

get{ return TotalPriceExpression.Invoke(this); }

}

(…)

}
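To make the idea behind these expression properties more concrete, here is a minimal sketch that uses only the .NET base class library. OrderLine and Order are hypothetical stand-ins for OrderDetailsDN and OrderDN, and the cached Compile() call is an assumption that replaces Signum's Invoke extension, so this shows the general pattern rather than the framework's implementation.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Linq.Expressions;

// Hypothetical stand-in for OrderDetailsDN (a plain class, no Signum base type).
public class OrderLine
{
    public int Quantity { get; set; }
    public decimal UnitPrice { get; set; }
    public float Discount { get; set; }

    // The formula is stored as an expression tree, so a LINQ provider can inspect it.
    public static readonly Expression<Func<OrderLine, decimal>> SubTotalPriceExpression =
        od => od.Quantity * od.UnitPrice * (decimal)(1 - od.Discount);

    // Assumption: compile once and cache the delegate for in-memory use.
    static readonly Func<OrderLine, decimal> subTotalPrice = SubTotalPriceExpression.Compile();

    public decimal SubTotalPrice
    {
        get { return subTotalPrice(this); }
    }
}

// Hypothetical stand-in for OrderDN.
public class Order
{
    public List<OrderLine> Details = new List<OrderLine>();

    public static readonly Expression<Func<Order, decimal>> TotalPriceExpression =
        o => o.Details.Sum(od => od.SubTotalPrice);

    static readonly Func<Order, decimal> totalPrice = TotalPriceExpression.Compile();

    public decimal TotalPrice
    {
        get { return totalPrice(this); }
    }
}

public static class ExpressionPropertyDemo
{
    public static Order SampleOrder()
    {
        var order = new Order();
        order.Details.Add(new OrderLine { Quantity = 2, UnitPrice = 10m, Discount = 0.25f });
        order.Details.Add(new OrderLine { Quantity = 1, UnitPrice = 4m, Discount = 0f });
        return order;
    }

    public static void Main()
    {
        Order order = SampleOrder();

        // In memory: the property runs the compiled expression.
        Console.WriteLine(order.TotalPrice);

        // In a query: the same expression tree is handed to the LINQ provider.
        decimal viaQuery = order.Details.AsQueryable()
            .Select(OrderLine.SubTotalPriceExpression)
            .Sum();
        Console.WriteLine(viaQuery);
    }
}
```

The point of the design is that the formula is written once: the property serves in-memory callers, while the expression tree stays available for a provider that wants to translate it.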

Finally, let’s write a little bit of business logic that actually does something.

Let’s suppose that every time a new OrderDN is created in the database, the products have to be removed from the stock. If the user interface tries to create an order with more units of a product than are available, it should throw an exception to abort the operation.

This code does that:

public static OrderDN Create(OrderDN order)

{

if (!order.IsNew)

throw new ArgumentException("order should be new");

using (Transaction tr = new Transaction())

{

foreach (var od in order.Details)

{

int updated = od.Product.InDB()

.Where(p => p.UnitsInStock >= od.Quantity)

.UnsafeUpdate<ProductDN>(p => new ProductDN

{

UnitsInStock = (short)(p.UnitsInStock - od.Quantity)

});

if (updated != 1)

throw new ApplicationException("There are not enough {0} in stock"

.Formato(od.Product));

}

order.Save();

return tr.Commit(order);

}

}

Note that most of the body is inside a Transaction (http://www.signumframework.com/Transaction.ashx).

The Signum.Engine Transaction object mimics the TransactionScope syntax, but it doesn’t get promoted to a distributed transaction (nor does it need MSDTC).

Another difference is that, in the case of nested transactions, the nested one does not commit by itself, which makes business logic easier to compose. You can change this behavior by forcing the transaction to be independent, or by making it a named transaction.
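The nesting rule can be pictured with a small stand-alone sketch. SketchTransaction below is a hypothetical class written only for this illustration (it is not Signum's Transaction and touches no database): nested scopes just report success or failure to their parent, and only the outermost scope writes COMMIT or ROLLBACK to a log.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical scope class that only illustrates the nesting rule.
public sealed class SketchTransaction : IDisposable
{
    [ThreadStatic] static SketchTransaction current;

    // Records what the outermost scope decided, for demonstration purposes.
    public static readonly List<string> Log = new List<string>();

    readonly SketchTransaction parent;
    bool committed;
    bool childFailed;

    public SketchTransaction()
    {
        parent = current;
        current = this;
        if (parent == null)
            Log.Add("BEGIN"); // only the outermost scope opens a real transaction
    }

    // Marks the scope as successful and passes the result through.
    public T Commit<T>(T result)
    {
        committed = true;
        return result;
    }

    public void Dispose()
    {
        current = parent;
        bool ok = committed && !childFailed;

        if (parent != null)
        {
            // A nested scope never commits by itself; it can only spoil the parent.
            if (!ok) parent.childFailed = true;
        }
        else
        {
            Log.Add(ok ? "COMMIT" : "ROLLBACK");
        }
    }
}

public static class NestedTransactionDemo
{
    // Two pieces of "business logic", each written as if it owned its transaction.
    public static int ReserveStock()
    {
        using (var tr = new SketchTransaction())
        {
            return tr.Commit(1);
        }
    }

    public static int CreateOrder()
    {
        using (var tr = new SketchTransaction())
        {
            int reserved = ReserveStock(); // runs nested inside this scope
            return tr.Commit(reserved + 1);
        }
    }

    public static void Main()
    {
        CreateOrder();
        Console.WriteLine(string.Join(", ", SketchTransaction.Log));
    }
}
```

Because ReserveStock works the same way whether it is called on its own or from CreateOrder, methods like these can be combined freely without the caller worrying about who commits.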

Inside of the foreach loop we can see a new UnsafeUpdate method.

UnsafeUpdate and UnsafeDelete are a lightweight way of modifying and removing records in the database. They mimic the SQL UPDATE and DELETE syntax, so they are fast, but they still know about all the conventions and primitives of the framework (EmbeddedEntities, enums, Lite, ImplementedBy…). On the other hand, they don’t run any validation, which is why they are called Unsafe.

Note that UnsafeUpdate takes a Func<ProductDN, ProductDN>. This function is expected to be an object initializer expression that creates a new ProductDN and sets the properties that will be updated, but it won’t create any new object! It’s just a syntax trick.
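A rough way to see why no object gets created: when a lambda is received as an expression tree, the object initializer can be inspected instead of executed. The sketch below uses only System.Linq.Expressions; Product and AssignedMembers are hypothetical names made up for this illustration and say nothing about how UnsafeUpdate is implemented internally.

```csharp
using System;
using System.Collections.Generic;
using System.Linq.Expressions;

// Hypothetical stand-in for ProductDN that counts constructor calls.
public class Product
{
    public static int Constructed;

    public string Name { get; set; }
    public short UnitsInStock { get; set; }

    public Product()
    {
        Constructed++;
    }
}

public static class UpdateSketch
{
    // Reads the names of the properties assigned in the initializer without running it.
    public static List<string> AssignedMembers<T>(Expression<Func<T, T>> updateConstructor)
    {
        var init = updateConstructor.Body as MemberInitExpression;
        if (init == null)
            throw new ArgumentException("Expected an object initializer like p => new T { ... }");

        var names = new List<string>();
        foreach (MemberBinding binding in init.Bindings)
            names.Add(binding.Member.Name);
        return names;
    }

    public static void Main()
    {
        List<string> names = AssignedMembers<Product>(p => new Product
        {
            UnitsInStock = (short)(p.UnitsInStock - 3)
        });

        Console.WriteLine(string.Join(", ", names)); // the columns an UPDATE would set
        Console.WriteLine(Product.Constructed);      // the constructor was never called
    }
}
```

A query provider can walk the same bindings to build the SET clause of an UPDATE statement, which is what makes the initializer syntax a convenient notation.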

UnsafeDelete takes an IQueryable<T> where T is a concrete entity type, so it can be used after any query and, just like UnsafeUpdate, returns the number of rows affected.

In this case we are using another useful new friend, InDB. This method creates an IQueryable<T> from an in-memory entity or Lite, so the following two expressions are equivalent:

od.Product.InDB()

Database.Query<ProductDN>().Where(p => p == od.Product)

Finally, UnsafeUpdate returns the number of rows modified. In this case InDB already selects just one product, so if the Where filters out the product and we don’t update any row, we know that we don’t have enough stock and we can throw an exception.

The last step is to add all these modules to your application.

We have seen that every Logic module has a Start method, responsible for adding the necessary tables to the schema, registering queries, hooking events and so on…

There’s also a global Start method in the Starter class. This method is responsible for starting all the modules you are going to use in your application by calling the Start method on each of them.

public static class Starter

{

public static void Start(string connectionString)

{

SchemaBuilder sb = new SchemaBuilder();

DynamicQueryManager dqm = new DynamicQueryManager();

sb.Schema.ForceCultureInfo = CultureInfo.InvariantCulture;

ConnectionScope.Default = new Connection(connectionString, sb.Schema, dqm);

EmployeeLogic.Start(sb, dqm);

ProductLogic.Start(sb, dqm);

CustomerLogic.Start(sb, dqm);

OrderLogic.Start(sb, dqm);

}

}

After the Start method gets called, your application scope is defined and we are ready to Generate the database, Synchronize it or try to Initialize the application.

While initializing the application is simple when going to production (just initialize everything using Schema.Current.Initialize()), it can get a little trickier in other host applications:

Maybe when running the Load Application you don’t want to start some modules (like Scheduled Tasks, or Background Processes available in the future Signum Extensions).

Also, you will frequently want to save some basic entities (e.g. the Anonymous User) using the engine before loading some dependent modules (e.g. the Authorization system).

For these scenarios the engine has 5 different levels of initialization (Level 0 to Level 4) and if a module needs some code to be called before running the application (maybe filling some caches for example) it can do it by subscribing to the right level of initialization (using sb.Schema.Initializing[level] += myInitCode).
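As a rough mental model of leveled initialization, consider the stand-alone sketch below. InitLevel and LeveledInitializer are hypothetical names invented for this example (the engine's own types and member names may differ); the sketch only shows the idea of subscribing code to a level and letting the host decide how far to initialize.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical names for the five levels mentioned in the text.
public enum InitLevel { Level0, Level1, Level2, Level3, Level4 }

public class LeveledInitializer
{
    readonly Dictionary<InitLevel, Action> handlers = new Dictionary<InitLevel, Action>();
    InitLevel? lastDone; // highest level that has already run

    // Modules register the code they need to run at a given level.
    public void Subscribe(InitLevel level, Action code)
    {
        Action existing;
        handlers.TryGetValue(level, out existing);
        handlers[level] = existing + code; // combining with null yields 'code'
    }

    // Runs every pending level up to and including 'topLevel', in order.
    public void Initialize(InitLevel topLevel)
    {
        foreach (InitLevel level in Enum.GetValues(typeof(InitLevel)))
        {
            if (level > topLevel) break;
            if (lastDone.HasValue && level <= lastDone.Value) continue;

            Action code;
            if (handlers.TryGetValue(level, out code) && code != null)
                code();
            lastDone = level;
        }
    }
}

public static class InitializationDemo
{
    public static List<string> Run()
    {
        var log = new List<string>();
        var init = new LeveledInitializer();

        init.Subscribe(InitLevel.Level4, () => log.Add("start background processes"));
        init.Subscribe(InitLevel.Level0, () => log.Add("fill caches"));

        init.Initialize(InitLevel.Level0);   // enough to use the engine
        log.Add("save basic entities");      // e.g. an anonymous user
        init.Initialize(InitLevel.Level4);   // now start everything else
        return log;
    }

    public static void Main()
    {
        foreach (string line in Run())
            Console.WriteLine(line);
    }
}
```

A loader application could stop at a low level and never start the heavier modules, while a production host would simply initialize up to the last level.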

Let's understand the initialization sequence in the different application hosts:

In this long article we have learned a lot about how to write the business logic using Signum Framework, for example:

- Create our own business logic in a modular way.

- How to Save, Retrieve and Delete objects.

- Use Linq to Signum to query the database.

- Create our own database-enabled calculated properties using expressionProperty template.

- Using ImplementedBy to represent polymorphic relationships of a hierarchy of classes.

- Synchronize the database.

- Using Transactions.

- Use UnsafeUpdate to change values in the database fast but without any validation ceremony.

- How the Start/Initialize sequence works.

Of course, there are still things left to explain about how to write the Logic classes using Signum Framework:

- Getting control before an entity is Saved or Retrieved, using EntityEvents or overriding methods in the entity itself.

- Hooking into the synchronization and generation of the database to include your own specific scripts.

And, once Signum.Extensions gets published, we could see how the Authorization module is able to protect any resource in the database (types, properties, queries…) and how Operation, Processes and ScheduledTask modules simplify writing the business logic.

In the next tutorial we will use Southwind.Load to move the data from Northwind database using Linq to Sql and Csv files.

Hopefully, soon we will see a window with a button that can actually do something.