Introduction

To learn what's new in the latest article update please see History.

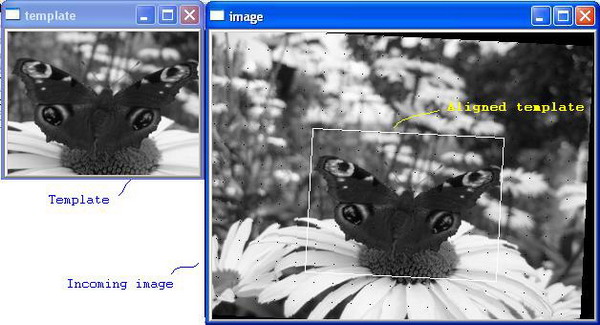

Image alignment is the process of matching one image called template (let's denote it as T) with another image, I (see the above figure). There are many applications for image alignment, such as tracking objects on video, motion analysis, and many other tasks of computer vision.

In 1981, Bruse D. Lucas and Takeo Kanade proposed a new technique that used image intensity gradient information to search for the best match between a template T and another image I. The proposed algorithm has been widely used in the field of computer vision for the last 20 years, and has had many modifications and extensions. One of such modifications is an algorithm proposed by Simon Baker, Frank Dellaert, and Iain Matthews. Their algorithm is much more computationally effective than the original Lucas-Kanade algorithm.

In the Lucas-Kanade 20 Years On: A Unifying Framework series of articles, Baker and Matthews try to classify image alignment algorithms and divide them into two categories: forwards and inverse, depending on the role of the template T. Also, they classify algorithms as additive or compositional, depending on whether the warp is updated by adding parameters increment or by composing transformation matrices. Following this classification, the Lucas-Kanade algorithm should be called a forwards additive algorithm, and the Baker-Dellaert-Matthews algorithm should be called an inverse compositional algorithm.

In this article, we will implement these two algorithms in C language using the Intel OpenCV open-source computer vision library as the base for our implementation. We also will try to compare these two algorithms, and will see which one is faster.

Why is this article needed? First of all, after writing this article, I have a better understanding of image alignment algorithms myself (at least I hope so). There are a lot of articles that provide tons of information on image alignment. Most of them are oriented on advanced readers, not on beginners. Among those articles, I've found several ones that, from my point of view, describe difficult things more simply. But what I really missed is code examples to experiment with. So, the other two purposes of this article are a relatively simple explaination of how image alignment works and providing sample source code.

Who will be interested in reading this? I think, if you remember some mathematics from your university program, are familiar with C or C++, interested in learning how to track an object on video, and have a little patience, you can read this.

Compiling Example Code

It is very easy to run the sample – just download demo_binary.zip and run the executable file.

But to experiment with the code, you will need to compile it yourself. First of all, you need Visual Studio .NET 2005 to be installed. Visual C++ Express Edition also fits our needs.

The next step is to download and install the OpenCV library from here. Download OpenCV_1.0.exe and run it on your computer.

And at last, you need to configure your Visual Studio as follows:

- In the Visual Studio main window, choose the Tools -> Options... menu item, then click the Projects and Solutions -> VC++ Directories tree item.

- In the 'Show directories for..' combo box, select 'Library Files'. Add the following line to the list below:

C:\Program Files\OpenCV\lib

In the 'Show directories for..' combo box, select 'Include Files'. Add the following lines to the list below:

C:\Program Files\OpenCV\cv\include

C:\Program Files\OpenCV\cxcore\include

C:\Program Files\OpenCV\otherlibs\highgui

That's all! Now you can open align_demo.sln, compile, and run it.

Some words about the structure of the sample project.

The auxfunc.h and auxfunc.cpp contain auxiliary image warping functions. The forwadditive.h and forwadditive.cpp files contain an implementation of the Lucas-Kanade algorithm. The invcomp.h and invcomp.cpp contain an implementation of the inverse compositional algorithm. And, the main.cpp contains the main function.

What does it do?

Saying in a few words, this program tries to align the template (the small butterfly image) with the big image (butterfly on the flower). It is not limited to using a butterfly as the template. For example, you can use a face image as a template and determine where the face moves on the next frame of the video sequence.

During the process of alignment, we perform warping operations on the template. We assume that there are three allowed operations: in-plane rotation of the template, and its 2D translation in X and Y directions. These operations are called the allowed set of warps.

We define the allowed set of warps by defining the warp matrix,  . The matrix W depends on the vector of parameters, p=(wz, tx, ty). Here tx and ty are translation components and wz is the rotation component.

. The matrix W depends on the vector of parameters, p=(wz, tx, ty). Here tx and ty are translation components and wz is the rotation component.

It is even possible to use much more complex sets of warps, for example 3D translation and rotation, scaling, and so on. But, here we use a relatively simple set of warps for simplicity.

Now, about the logic of the application.

- After you compile and run the demo, you will see a console window, where we will display the diagnostic information. Enter the components of the vector p=(wz, tx, ty).

- After that, you can see two windows containing the template T and the incoming image I. You also can see a white rectangle on the image I. It is an initial approximation of the butterfly's position. We just assume that the butterfly is somewhere near that area.

- Press any key to start the Lucas-Kanade image alignment algorithm. This algorithm tries to search where the butterfly is located, iteratively minimizing the difference between the template and the image I. In the console window, you will see how it works. You can see the current iteration number, parameter increments, and the mean error value.

- After the process of error minimization converges, the summary is written to the console. The summary includes the algorithm name, the calculation time, the resulting mean error

, and the parameters approximation. You can also see the white rectangle that displays how the template is aligned on the image I.

, and the parameters approximation. You can also see the white rectangle that displays how the template is aligned on the image I. - Now, press any key to run the same process, but using an inverse compositional image alignment algorithm. You can see the current iteration number and the mean error value.

- The summary is displayed for the inverse compositional algorithm. Press any key to exit the program.

Let's see the contents of main.cpp:

int main(int argc, char* argv[])

{

float WZ=0, TX=0, TY=0;

printf("Please enter WZ, TX and TY, separated by space.\n");

printf("Example: -0.01 5 -3\n");

printf(">");

scanf("%f %f %f", &WZ, &TX, &TY);

In OpenCV, we will store our images in the IplImage structure, so here we define the pointers to our images and initially set them with zeros.

IplImage* pColorPhoto = 0; IplImage* pGrayPhoto = 0; IplImage* pImgT = 0; IplImage* pImgI = 0;

In OpenCV, matrices are stored in CvMat structures. Let's define a pointer to our warp matrix W, and initialize it with zero.

CvMat* W = 0;

Now, create two windows for the template T and for the image I. OpenCV has the basic support for the GUI. The GUI support is implemented as a part of OpenCV called the highgui library. The cvLoadImage() function allows to load an image from a JPG file.

Now, allocate memory for the images using the cvCreateImage() function. The first parameter is the size of the image in pixels, the second one is the depth, and the third is the number of channels. RGB images have a depth IPL_DEPTH_8U and three channels. IPL_DEPTH_8U means to use an unsigned char as the image element. IPL_DEPTH_16S means to use a short int as the image element.

We also need to convert our photo to gray-scale format, because image alignment algorithms work with gray-scale images only.

cvNamedWindow("template"); cvNamedWindow("image");

pColorPhoto = cvLoadImage("..\\data\\photo.jpg");

CvSize photo_size = cvSize(pColorPhoto->width,pColorPhoto->height);

pGrayPhoto = cvCreateImage(photo_size, IPL_DEPTH_8U, 1);

pImgT = cvCreateImage(photo_size, IPL_DEPTH_8U, 1);

pImgI = cvCreateImage(photo_size, IPL_DEPTH_8U, 1);

cvCvtColor(pColorPhoto, pGrayPhoto, CV_RGB2GRAY);

Now, let's allocate memory for our warp matrix W using the cvCreateMat() function. The first two parameters define the matrix size (3x3) and the last one is the type of the matrix element. CV_32F means we will use float as the matrix element type.

W = cvCreateMat(3, 3, CV_32F);

Let's define what template we will use. We define the omega rectangle which denotes the butterfly's position.

cvCopy(pGrayPhoto, pImgT);

CvRect omega = cvRect(110, 100, 200, 150);

Let's define what image I we will use, by slightly warping the template.

init_warp(W, WZ, TX, TY);

warp_image(pGrayPhoto, pImgI, W);

cvSetIdentity(W);

draw_warped_rect(pImgI, omega, W);

cvSetImageROI(pImgT, omega);

cvShowImage("template", pImgT);

cvShowImage("image", pImgI);

printf("Press any key to start Lucas-Kanade algorithm.\n");

cvWaitKey(0);

cvResetImageROI(pImgT);

printf("==========================================================\n");

printf("Ground truth: WZ=%f TX=%f TY=%f\n", WZ, TX, TY);

printf("==========================================================\n");

init_warp(W, WZ, TX, TY);

Run the Lucas-Kanade algorithm:

warp_image(pGrayPhoto, pImgI, W);

align_image_forwards_additive(pImgT, omega, pImgI);

Now, wait for a key press and run the inverse compositional algorithm:

warp_image(pGrayPhoto, pImgI, W);

printf("Press any key to start Baker-Dellaert-Matthews algorithm.\n");

cvWaitKey();

align_image_inverse_compositional(pImgT, omega, pImgI);

Wait for a key press, release all the used resources, and exit.

printf("Press any key to exit the demo.\n");

cvWaitKey();

cvDestroyWindow("template");

cvDestroyWindow("image");

cvReleaseImage(&pImgT);

cvReleaseImage(&pImgI);

cvReleaseMat(&W);

return 0;

}

Implementing the Forwards Additive Algorithm

Now, we will describe in detail how the Lucas-Kanade algorithm is implemented. As Baker and Matthews say, a forwards additive algorithm consists of the following steps:

Pre-compute:

- Compute the gradient

of image I.

of image I.

Iterate:

- Warp I with W(x;p) to compute I(W(x,p)).

- Compute the error image T(x) – I(W(x;p)).

- Warp the gradient

with W(x;p).

with W(x;p). - Evaluate the Jacobian

at (x;p).

at (x;p). - Compute the steepest descent images

.

. - Compute the Hessian matrix

- Compute

- Compute

- Update the parameters:

until  .

.

Let's implement this algorithm (see forwadditive.h and forwadditive.cpp).

First of all, let's include the headers for the functions we will use.

#include <stdio.h>

#include <time.h>

#include <cv.h> // Include header for computer-vision part of OpenCV.

#include <highgui.h> // Include header for GUI part of OpenCV.

#include "auxfunc.h" // Header for our warping functions.

Let's define the function that will implement the forwards additive (Lucas-Kanade) algorithm. The template image pImgT, the template bounding rectangle omega, and another image pImgI represent the parameters for the algorithm. In OpenCV, the images are stored as IplImage structures, and a rectangle can be defined using the CvRect structure.

void align_image_forwards_additive(IplImage* pImgT, CvRect omega, IplImage* pImgI)

{

const float EPS = 1E-5f; const int MAX_ITER = 100;

In OpenCV, we will store our images in the IplImage structure, so here we define the pointers to our images and initially set them with zeros.

IplImage* pGradIx = 0; IplImage* pGradIy = 0;

Also, we will need to use matrices. In OpenCV, matrices are stored in CvMat structures.

CvMat* W = 0; CvMat* H = 0; CvMat* iH = 0; CvMat* b = 0; CvMat* delta_p = 0; CvMat* X = 0; CvMat* Z = 0;

Now, let's allocate memory for our matrices using the cvCreateMat() function. The first two parameters define the matrix size (3x3 or 3x1), and the last one is the type of the matrix element. CV_32F means we will use float as the matrix element type.

W = cvCreateMat(3, 3, CV_32F);

H = cvCreateMat(3, 3, CV_32F);

iH = cvCreateMat(3, 3, CV_32F);

b = cvCreateMat(3, 1, CV_32F);

delta_p = cvCreateMat(3, 1, CV_32F);

X = cvCreateMat(3, 1, CV_32F);

Z = cvCreateMat(3, 1, CV_32F);

Allocate memory for images using the cvCreateImage() function. The first parameter is the size of image in pixels, the second one is the depth, and the third is the number of channels. Gray-scale images have a depth IPL_DEPTH_8U and one channel. IPL_DEPTH_16S means to use short int as the image element.

CvSize image_size = cvSize(pImgI->width, pImgI->height);

pGradIx = cvCreateImage(image_size, IPL_DEPTH_16S, 1);

pGradIy = cvCreateImage(image_size, IPL_DEPTH_16S, 1);

Save the current time:

clock_t start_time = clock();

Pre-compute the image gradient  . Note that cvSobel() function produces an enhanced image gradient (becase we use 3x1 or 1x3 Gaussian kernel), so we should call cvConvertScale() to normalize the gradient image.

. Note that cvSobel() function produces an enhanced image gradient (becase we use 3x1 or 1x3 Gaussian kernel), so we should call cvConvertScale() to normalize the gradient image.

cvSobel(pImgI, pGradIx, 1, 0); cvConvertScale(pGradIx, pGradIx, 0.125); cvSobel(pImgI, pGradIy, 0, 1); cvConvertScale(pGradIy, pGradIy, 0.125);

Enter an iteration loop. Iterate until the converged or maximum iteration count is reached:

float wz_a=0, tx_a=0, ty_a=0;

float mean_error=0;

int iter=0; while(iter < MAX_ITER)

{

iter++;

mean_error = 0;

int pixel_count=0;

Init warp W(x,p) and set the Hessian matrix with zeros:

init_warp(W, wz_a, tx_a, ty_a); cvSet(H, cvScalar(0)); cvSet(b, cvScalar(0));

int u, v;

int i, j;

for(i=0; i<omega.width; i++)

{

u = i + omega.x;

for(j=0; j<omega.height; j++)

{

v = j + omega.y;

SET_VECTOR(X, u, v);

cvGEMM(W, X, 1, 0, 0, Z);

float u2, v2;

GET_VECTOR(Z, u2, v2);

int u2i = cvFloor(u2);

int v2i = cvFloor(v2);

if(u2i>=0 && u2i<pImgI->width && v2i>=0 && v2i<pImgI->height)

{

pixel_count++;

float Ix = interpolate<short>(pGradIx, u2, v2);

float Iy = interpolate<short>(pGradIy, u2, v2);

float stdesc[3]; stdesc[0] = (float)(-v*Ix+u*Iy);

stdesc[1] = (float)Ix;

stdesc[2] = (float)Iy;

float I2 = interpolate<uchar>(pImgI, u2, v2);

float D = CV_IMAGE_ELEM(pImgT, uchar, v, u) - I2;

mean_error += fabs(D);

float* pb = &CV_MAT_ELEM(*b, float, 0, 0);

pb[0] += stdesc[0] * D;

pb[1] += stdesc[1] * D;

pb[2] += stdesc[2] * D;

int l,m;

for(l=0;l<3;l++)

{

for(m=0;m<3;m++)

{

CV_MAT_ELEM(*H, float, l, m) += stdesc[l]*stdesc[m];

}

}

}

}

}

if(pixel_count!=0)

mean_error /= pixel_count;

Invert the Hessian matrix:

double inv_res = cvInvert(H, iH);

if(inv_res==0)

{

printf("Error: Hessian is singular.\n");

return;

}

Update parameters:  :

:

cvGEMM(iH, b, 1, 0, 0, delta_p);

float delta_wz = CV_MAT_ELEM(*delta_p, float, 0, 0);

float delta_tx = CV_MAT_ELEM(*delta_p, float, 1, 0);

float delta_ty = CV_MAT_ELEM(*delta_p, float, 2, 0);

wz_a += delta_wz;

tx_a += delta_tx;

ty_a += delta_ty;

printf("iter=%d dwz=%f dtx=%f dty=%f mean_error=%f\n",

iter, delta_wz, delta_tx, delta_ty, mean_error);

if(fabs(delta_wz)<EPS && fabs(delta_tx)<EPS && fabs(delta_ty)<EPS) break;

}

Show the results of the alignment:

clock_t finish_time = clock();

double total_time = (double)(finish_time-start_time)/CLOCKS_PER_SEC;

printf("===============================================\n");

printf("Algorithm: forward additive.\n");

printf("Caclulation time: %g sec.\n", total_time);

printf("Iteration count: %d\n", iter);

printf("Approximation: wz_a=%f tx_a=%f ty_a=%f\n", wz_a, tx_a, ty_a);

printf("Epsilon: %f\n", EPS);

printf("Resulting mean error: %f\n", mean_error);

printf("===============================================\n");

init_warp(W, wz_a, tx_a, ty_a);

draw_warped_rect(pImgI, omega, W);

cvSetImageROI(pImgT, omega);

cvShowImage("template",pImgT);

cvShowImage("image",pImgI);

cvResetImageROI(pImgT);

Now, free all the used resources:

cvReleaseMat(&W);

cvReleaseMat(&H);

cvReleaseMat(&iH);

cvReleaseMat(&b);

cvReleaseMat(&delta_p);

cvReleaseMat(&X);

cvReleaseMat(&Z);

}

Implementing an Inverse Compositional Image Alignment Algorithm

In an inverse compositional algorithm, the template T and the image I revert their roles.

Pre-compute:

- Evaluate the gradient

of image T(x).

of image T(x). - Evaluate the Jacobian

at (x;0).

at (x;0). - Compute the steepest descent images,

.

. - Compute the Hessian matrix,

Iterate:

- Warp I with W(x;p) to compute I(W(x,p)).

- Compute the error image I(W(x;p))-T(x).

- Warp the gradient

with W(x;p).

with W(x;p). - Compute

- Compute

- Update the parameters

until  .

.

The code of this method is located in invcomp.h and invcomp.cpp. Let's see what invcomp.cpp contains.

Include all required headers:

#include <stdio.h>

#include <time.h>

#include <cv.h> // Include header for computer-vision part of OpenCV.

#include <highgui.h> // Include header for GUI part of OpenCV.

#include "auxfunc.h" // Header for our warping functions.

Here we have implementation of Baker-Dellaert-Matthews (inverse-compositional) algorithm:

void align_image_inverse_compositional(IplImage* pImgT, CvRect omega, IplImage* pImgI)

{

const float EPS = 1E-5f; const int MAX_ITER = 100;

Create all matrices and images used internally:

IplImage* pGradTx = 0; IplImage* pGradTy = 0; IplImage* pStDesc = 0;

CvMat* W = 0; CvMat* dW = 0; CvMat* idW = 0; CvMat* X = 0; CvMat* Z = 0;

CvMat* H = 0; CvMat* iH = 0; CvMat* b = 0; CvMat* delta_p = 0;

W = cvCreateMat(3, 3, CV_32F);

dW = cvCreateMat(3, 3, CV_32F);

idW = cvCreateMat(3, 3, CV_32F);

X = cvCreateMat(3, 1, CV_32F);

Z = cvCreateMat(3, 1, CV_32F);

H = cvCreateMat(3, 3, CV_32F);

iH = cvCreateMat(3, 3, CV_32F);

b = cvCreateMat(3, 1, CV_32F);

delta_p = cvCreateMat(3, 1, CV_32F);

IPL_DEPTH_16S means to use short as gradient image element. Here we use short, because cvSobel produces not normalized image, and uchar overflow may occur. To normalize the gradient image we use cvConvertScale().

CvSize image_size = cvSize(pImgI->width, pImgI->height);

pGradTx = cvCreateImage(image_size, IPL_DEPTH_16S, 1);

pGradTy = cvCreateImage(image_size, IPL_DEPTH_16S, 1);

pStDesc = cvCreateImage(image_size, IPL_DEPTH_32F, 3);

clock_t start_time = clock();

cvSobel(pImgT, pGradTx, 1, 0); cvConvertScale(pGradTx, pGradTx, 0.125); cvSobel(pImgT, pGradTy, 0, 1); cvConvertScale(pGradTy, pGradTy, 0.125);

cvSet(H, cvScalar(0));

Now walk through pixels of template image pImgT and accumulate the Hessian matrix H and matrix b:

int u, v; float u2, v2;

int i, j;

for(i=0; i<omega.width; i++)

{

u = i + omega.x;

for(j=0; j<omega.height; j++)

{

v = j + omega.y;

short Tx = CV_IMAGE_ELEM(pGradTx, short, v, u);

short Ty = CV_IMAGE_ELEM(pGradTy, short, v, u);

float* stdesc = &CV_IMAGE_ELEM(pStDesc, float, v,u*3);

stdesc[0] = (float)(-v*Tx+u*Ty);

stdesc[1] = (float)Tx;

stdesc[2] = (float)Ty;

int l,m;

for(l=0;l<3;l++)

{

for(m=0;m<3;m++)

{

CV_MAT_ELEM(*H, float, l, m) += stdesc[l]*stdesc[m];

}

}

}

}

double inv_res = cvInvert(H, iH);

if(inv_res==0)

{

printf("Error: Hessian is singular.\n");

return;

}

Now enter iteration loop to minimize the value of mean error.

cvSetIdentity(W);

float mean_error=0;

int iter=0; while(iter < MAX_ITER)

{

iter++;

mean_error = 0;

int pixel_count=0;

cvSet(b, cvScalar(0));

int i, j;

for(i=0; i<omega.width; i++)

{

int u = i + omega.x;

for(j=0; j<omega.height; j++)

{

int v = j + omega.y;

SET_VECTOR(X, u, v);

cvGEMM(W, X, 1, 0, 0, Z);

GET_VECTOR(Z, u2, v2);

int u2i = cvFloor(u2);

int v2i = cvFloor(v2);

if(u2i>=0 && u2i<pImgI->width && v2i>=0 && v2i<pImgI->height)

{

pixel_count++;

float I2 = interpolate<uchar>(pImgI, u2, v2);

float D = I2 - CV_IMAGE_ELEM(pImgT, uchar, v, u);

mean_error += fabs(D);

float* stdesc = &CV_IMAGE_ELEM(pStDesc, float, v, u*3);

float* pb = &CV_MAT_ELEM(*b, float, 0, 0);

pb[0] += stdesc[0] * D;

pb[1] += stdesc[1] * D;

pb[2] += stdesc[2] * D;

}

}

}

if(pixel_count!=0)

mean_error /= pixel_count;

cvGEMM(iH, b, 1, 0, 0, delta_p);

float delta_wz = CV_MAT_ELEM(*delta_p, float, 0, 0);

float delta_tx = CV_MAT_ELEM(*delta_p, float, 1, 0);

float delta_ty = CV_MAT_ELEM(*delta_p, float, 2, 0);

init_warp(dW, delta_wz, delta_tx, delta_ty);

inv_res = cvInvert(dW, idW);

if(inv_res==0)

{

printf("Error: Warp matrix is singular.\n");

return;

}

cvGEMM(idW, W, 1, 0, 0, dW);

cvCopy(dW, W);

printf("iter=%d mean_error=%f\n", iter, mean_error);

if(fabs(delta_wz)<=EPS && fabs(delta_tx)<=EPS && fabs(delta_ty)<=EPS) break;

}

clock_t finish_time = clock();

double total_time = (double)(finish_time-start_time)/CLOCKS_PER_SEC;

Print the summary information to stdout:

printf("===============================================\n");

printf("Algorithm: inverse compositional.\n");

printf("Caclulation time: %g sec.\n", total_time);

printf("Iteration count: %d\n", iter);

printf("Epsilon: %f\n", EPS);

printf("Resulting mean error: %f\n", mean_error);

printf("===============================================\n");

draw_warped_rect(pImgI, omega, W);

cvSetImageROI(pImgT, omega);

cvShowImage("template",pImgT);

cvShowImage("image",pImgI);

cvResetImageROI(pImgT);

cvReleaseMat(&W);

cvReleaseMat(&dW);

cvReleaseMat(&idW);

cvReleaseMat(&H);

cvReleaseMat(&iH);

cvReleaseMat(&b);

cvReleaseMat(&delta_p);

cvReleaseMat(&X);

cvReleaseMat(&Z);

}

Bilinear Interpolation

In both algorithms we use bilinear pixel interpolation. Interpolation helps to calculate the intensity of a transformed pixel with better accuracy. Transformed pixels usually have non-integer coordinates, so by interpolating their intensities we take intensities of neighbour pixels into account. This also helps to avoid mean error oscillations and improve overall minimization performance.

We use interpolate() template function, that is defined in auxfunc.h:

template <class T>

float interpolate(IplImage* pImage, float x, float y)

{

int xi = cvFloor(x);

int yi = cvFloor(y);

float k1 = x-xi; float k2 = y-yi;

int f1 = xi<pImage->width-1; int f2 = yi<pImage->height-1;

T* row1 = &CV_IMAGE_ELEM(pImage, T, yi, xi);

T* row2 = &CV_IMAGE_ELEM(pImage, T, yi+1, xi);

float interpolated_value = (1.0f-k1)*(1.0f-k2)*(float)row1[0] +

(f1 ? ( k1*(1.0f-k2)*(float)row1[1] ):0) +

(f2 ? ( (1.0f-k1)*k2*(float)row2[0] ):0) +

((f1 && f2) ? ( k1*k2*(float)row2[1] ):0) ;

return interpolated_value;

}

Conclusion

Image alignment has many applications in the field of computer vision, such as object tracking. In this article, we described two image alignment algorithms: the Lucas-Kanade forwards additive algorithm and the Baker-Dellaert-Matthews inverse compositional algorithm. We also saw the C source code for these algorithms.

Now, let's compare which algorithm runs faster on my Pentium IV 3 Ghz (Release configuration). Let's enter the following components of vector p:

-0.01 5 -3

This means we translated the image I five pixels right, three pixels up, and rotated it a little (but it's difficult to say what angle exactly).

Here is the summary for the forwards additive algorithm:

===============================================

Algorithm: forward additive.

Caclulation time: 0.157 sec.

Iteration count: 13

Approximation: wz_a=-0.007911 tx_a=4.893255 ty_a=-3.944573

Epsilon: 0.000010

Resulting mean error: 2.898076

===============================================

Here is the summary for the inverse compositional algorithm:

===============================================

Algorithm: inverse compositional.

Caclulation time: 0.078 sec.

Iteration count: 11

Epsilon: 0.000010

Resulting mean error: 2.896847

===============================================

The calculation time for the forwards additive algorithm is 0.157 sec. The calculation time for the inverse compositional algorithm is 0.078 sec. As we see, the inverse compositional algorithm works faster, because it is more computational effective.

See Also

You may also want to read Image Alignment Algorithms - Part II, where we implement the forwards compositional and inverse additive image alignment algorithms and compare all four implemented algorithms.

Another tutorial on 2D object tracking and sample source code can be found in the Implementing Simple 2D Object Tracker article.

Visit the Image Alignment Algorithms web-site if you are looking for tutorials on object tracking.

History

- August 6, 2008

- Fixed:

cvSobel() is not normalized. Thanks to hbwang_1427 for letting me know about this issue. - Improvement: Implemented bilinear interpolation that allows to calculate the intensity of a warped pixel with subpixel accuracy. That also helps to avoid mean error oscillations.

- Described inverse compositional algorithm code in the article body.