Introduction

Having multiple threads running in your application the right way certainly can make it run more efficiently and be responsive, to just name a few advantages. MTCopy has this advantage. It starts by spawning a number of threads to cooperatively perform I/O operations (multiple files multiple threads scenario, MMT) and divides large files to be copied into several sector aligned blocks (single file multiple threads scenario, SMT) if necessary, whereby you don't have to have your program blocked until a lengthy I/O operation is finished in the single thread scenario. But certainly, the more threads you have doesn't mean the more efficiency you will get, you have to take the thread context switching overheads into consideration; otherwise, the program will spend much of its precious CPU time on context switching instead of on real work it should do.

Background

Writing an article and an accompanying program on CodeProject have always been a dream of mine. Just a few weeks ago, implementing a file copying tool came up to my mind after I saw a similar tool and wondered if I could makee one by myself, and certainly it would be great if I could finish it and post it here. So, here I am with this article and the completed sample program after a couple of weeks! This tool is more a by-product of self-practice that I do in my spare time on thread modeling, thread synchronization, and file operations concepts than a serious product level file copy tool.

Usage of the sample program

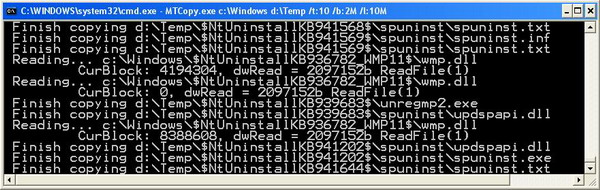

The tool is a console based application, so in order to run it, you need to specify the command line containing "[source file/folder] [destination file/folder] /t: [how many threads will be created during the copying process] /b: [the buffer size in KB/MB used during copying] /l: [threshold over which files of a certain size will be divided into small blocks and copied multithreadedly]". Once it starts to run, it will create a log file named "MTCopy.txt" under the c: drive root.

So you can copy a folder like: "MTCopy.exe "I:\Something" "I:\Something_" /t:2 /b:5m /l:10m" (using two threads for multiple file copying; for single file copying, use another two threads only when its size is equal to or over 10MB), or simply copy a large single file multithreadedly by using: "MTCopy.exe "I:\Something\something.wmv" "I:\Something_" /t:2 /b:5m /l:10m". It is recommended that the buffer size specified should be a multiple of the sector size; that way, the program will directly allocate that amount of memory instead of adjusting the buffer size such that it will be sector aligned.

Please note: the [source file/folder] and [destination file/folder] options should be double quoted; otherwise, they will be interpreted as several options if spaces exist in between.

How it was implemented

The program first starts by creating two thread pools and a file traverse thread.

The file traverse thread will recursively search for all the files inside the specified directory; it runs with the MMT copying thread pool in parallel. Once it finds a file, it will signal the MMT thread pool to copy the file over and put itself to sleep until the MMT pool tells it to wake up and continue traversing. If the folder is found, it will directly create that folder.

Multiple file multithreaded copying thread pool: When the file traverse thread signals this thread pool that it has found a file, it will make a local copy of the source file path for the local thread in the critical section which is accessed only by one thread at any given time, and then the real file copying operation gets executed by each local thread. Each one of the local threads gets a set of local variables of their own that is not shared with their close neighbors. This thread pool runs as long as the file traverse thread is unsignaled (thread/process objects become signaled when they terminate); that is to say, this thread pool will terminate when the file reverse thread finishes traversing the specific directory.

As it receives the file to be copied, it will check each file if its size equals to or is greater than what is specified with the /l: command line option. If this is the case, the file will be put into a dedicated queue as a work item and a dummy file will be created by size for future processing. During this process, this newly created item will be equipped with a series of block metrics such as how many blocks this file should be divided to; again, this block is specified by a '/b:' option. With all of these information in hand, the MMT simply copies the unfiltered files over. The skeleton pseudo code goes something like:

for(;(WaitForSingleObject(g_hCopyThd, IGNORE)) != WAIT_OBJECT_0;)

{

EnterCriticalSection(&lpCS);

WaitForSingleObject(g_hFound, INFINITE);

_tcscpy(tSrcPath, FileName);

SetEvent(g_hStartFind);

LeaveCriticalSection(&lpCS);

...

if (filesize > threshold)

{

AppendToSMT();

}

...

CopyFile(tSrcPath, tDstName, TRUE);

...

}

Single file multithreaded copying thread pool: This thread pool first starts by waiting on a semaphore object to be signaled, this semaphore is signaled when there is an item inside to be copied in the queue; otherwise, it will just put itself to sleep. Each item's blocks will be handled by the local thread running in this thread pool. When finished copying the item, the thread pool will suspend itself and wait for the next item to come. The code skeleton looks like:

for (int i = 0; i < m_pHeadWorkItem->csa[uiIndex].uiLength; i++ )

{

SetFilePointer(hSrcFile,

(LONG)(m_pHeadWorkItem->csa[uiIndex].uiCurrentBlock * CS->m_dwCopyBlockSize)

+ (CS->m_dwCopyBlockSize * i), NULL, FILE_BEGIN);

SetFilePointer(hDstFile,

(LONG)(m_pHeadWorkItem->csa[uiIndex].uiCurrentBlock * CS->m_dwCopyBlockSize)

+ (CS->m_dwCopyBlockSize * i), NULL, FILE_BEGIN);

fFileOp = ReadFile(hSrcFile, pTmp, CS->m_dwCopyBlockSize, &dwRead, NULL);

...

WriteFile(hDstFile, pTmp, dwRead, &dwWritten, NULL);

...

SuspendThread(m_hThd[uiIndex]);

}

Points of interest

CloseHandle when thread is just created: When I saw code like CloseHandle(_beginthreadex(...)) for the first time, I was confused: what's the point in closing the thread handle that you have just created? Later, as I used this code for a while, I realized that this does not actually cause the child's primary thread to terminate; it simply has the system decrement the usage count for the thread from 2 to 1 (the thread born with a usage count of 2), and when the thread exits, this usage count will be decremented to 0 and that in turn will have the object's memory freed; thus, you're saves from the trouble of writing extra code waiting for the thread to terminate in order to close its handle. But of course, this is only the case when you don't need this thread kernel object any more after it is created and just want it to run to completion. This behavior applies to the process kernel object too.

There's another case that CloseHandle(...) can be handy: Suppose that you have a child process and its primary thread spawns off another thread and then the primary thread terminates. At this point, the system can free the child's primary thread object from its memory only if the parent process doesn't have an outstanding handle to this thread object. Otherwise, the system can't free the object until the parent process closes the handle. So, you can use the code like:

BOOL fSuccess = CreateProcess(..., &pi);

if (fSuccess)

{

CloseHandle(pi.hThread);

...

}

Successful wait side effects: For some kernel objects, a successful wait (when the object is signaled) on WaitForSingleObject/WaitForMultipleObjects actually alters the object state. This side effects apply to the auto reset event and the semaphore object. This program uses both side effects. For semaphore successful wait side, the program uses it to guard against the SMT items: unleash the threads when there are items available and put the threads execution into wait state when there's none.

When an item is queued, the semaphore will be incremented by:

ReleaseSemaphore(m_hsemNumElements, CS->m_nThdCnt, NULL);

for (;(WaitForSingleObject(m_hsemNumElements, INFINITE) == WAIT_OBJECT_0);)

{

...

}

The for statement in the above code snippet returns only when there are items available in the queue, and as it returns, it will also decrement the semaphore resource count to zero; otherwise, it will be in the wait state sitting idle for the next item to come.

Non-block wait

(WaitForSingleObject(m_hFindThd, IGNORE) == WAIT_OBJECT_0)

I looked at this parameter in MSDN just when I was looking for some function that could be used to test for the object signalness for the shortest amount of time and returns. So, using it this way, the object's state can be tested and returns immediately. So my retrospect is: read MSDN carefully first before asking any questions.

Critical section leak (orphan critical section)

for(...)

{

EnterCriticalSection(&CS);

break;

...

LeaveCriticalSection(&CS);

}

Watch out for this kind of problems especially when you're in a thread pool where a number threads will execute the above code. During development, I once experienced this issue where I put a break statement between them, so no wonder I ended up with having the whole thread pool blocked when the broke away thread forced not to release the critical section when it entered it; it made the rest of the threads to never ever enter the critical section again.

Thread Local Storage (TLS)

Initially, I thought I should have used TLS in the thread pool for the variables that go with the particular thread, but later I found out that you only need TLS for global or static variables; if you can minimize the use of such variables and rely much more on automatic (stack-based) variables in the thread pool, you can just leave TLS alone, for those variables were all local to the individual thread.

Future thoughts

I've done a little research on the maximization of I/O operations after this program's basic functions were implemented. For I/O to achieve the maximized performance, there are two things among others that you need to consider:

- By passing the file system cache (

CreateFile(...) with FILE_FLAG_NO_BUFFERING). - Using overlapped I/O (asynchronous I/O).

This program takes the advantage of bypassing system buffering so this means the data moves directly into the application via the SCSI adapter using DMA (direct memory access) instead of to the system and then the application. Overlapped I/O can have the same effect that this program has by using just a single thread instead of multiple, where the Read()/Write() function will immediately return after issuing the command to the OS. So, it is the OS which does the I/O works for you on the background and notifies you once the operation finishes. So, this increases throughput by providing the IO subsystem with more work to do at any instant. But this program has not used this mechanism yet, let's leave it for the future.

- A better user friendly UI (progress bar, options setting etc.) also counts.

History

- 7 April, 2008 -- Original version posted.