Introduction

The VList data structure was designed by Phil Bagwell as a replacement for singly-linked lists in functional programming languages. It can be thought of as a compromise between a linked list and a dynamic array (e.g., the .NET Framework's List<T> class) that mixes the advantages of each.

I have created a family of four different VList data types for .NET:

FVList: the standard immutable VList described by Phil Bagwell, in which new items are added at the front (at index [0]). RVList: a VList in reverse order. RVList is a better match for the .NET Framework than FVList because new items are added at the back (at index [Count]).FWList: a mutable version of FVList with performance approaching List<T>. RWList: a mutable version of RVList; practically, a drop-in replacement for List<T>.

Internally, all of these are built on top of a hybrid mutable-immutable VList, which I will describe in the course of this article. An instance of any of these data types can be converted to any of the others in between O(1) and O(log N) time.

Note: Originally FVList was named VList. However, most commonly one wants to use RVList or RWList, because they follow the .NET convention that items are added and removed at the "end" of the list. Therefore, in my Loyc project I'm considering renaming RVList to VList, so that people don't have to think about motor homes every time they make an RVList. But obviously I can't rename RVList to VList unless I rename the forward VList to something else first... so I'm calling it FVList. In this article, though, I am only renaming VList, not RVList, to avoid causing wild confusion to those that have read the article in the past.

In this article, the term "VList" may refer to FVList and RVList collectively, and "WList" may refer to FWList and RWList collectively.

Background

Functional programming languages make heavy use of "persistent linked lists", which are linked lists whose items are immutable (never modified). Because they are immutable, it is always perfectly safe to share parts of a linked list between two linked lists. In case you are not familiar with them, please look at the following complete implementation of a persistent linked list data structure:

public struct PList<T> : IEnumerable<T>

{

private PList(PNode<T> n) { _node = n; }

private PNode<T> _node;

public PList<T> Add(T item)

{

_node = new PNode<T>(item, _node);

return this;

}

public T Head

{

get { return _node.Value; }

}

public PList<T> Tail

{

get { return new PList<T>(_node.Next); }

}

public bool IsEmpty

{

get { return _node == null; }

}

public IEnumerator<T> GetEnumerator()

{

PNode<T> n = _node;

while (n != null)

{

yield return n.Value;

n = n.Next;

}

}

IEnumerator IEnumerable.GetEnumerator()

{

return GetEnumerator();

}

}

private class PNode<T>

{

public PNode(T value, PNode<T> next) { Value=value; Next=next; }

public readonly T Value;

public readonly PNode<T> Next;

}

This is the kind of simple singly-linked list implementation they might teach you to write in high school, but it's already very useful. You can add items with Add(), remove the last item with Tail, and since it implements IEnumerable, you can use foreach or LINQ with it.

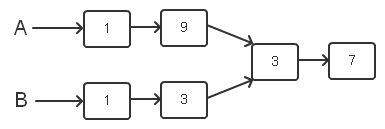

Now, consider the following code:

PList<int> A = new PList<int>();

A.Add(7);

A.Add(3);

PList<int> B = A;

A.Add(9);

A.Add(1);

B.Add(3);

B.Add(1);

In memory, the structure looks like this:

The fact that you cannot modify items in the linked list means that you can treat them like a value type: if you pass a list to a function, you never have to worry that the function will modify your list. The function is free to add or remove items from the list if it wants, but these changes do not affect your copy of the list.

However, the persistent linked list PList<T> isn't quite as good as the standard List<T> that you use every day. The implementation above doesn't provide a way to read, modify, insert, or delete items at any point in the middle of the list. We could certainly add that functionality--we can provide the illusion of changing items at any point in the list--but, it would be slower than List<T> because doing any of these operations at index N of a linked list would take O(N) time. For example, if we added an indexer so you could write:

B[2] = 5;

a copy would have to be made of the first two items to accomplish this:

Moreover, O(N) time is needed to find item [N] before modifying it.

Finally, counting the number of items in the list takes O(Count) time.

The FVList

Phil Bagwell's VList uses a linked list of arrays instead of single items. It is designed to improve upon the persistent linked list by:

- Indexing elements in O(1) time on average (but O(log N) at the far end of the list).

- Counting the elements in O(log N) time (O(1) in my implementation!).

- Storing elements much more compactly. For example, a

PList<int> with N elements requires 16*N bytes of memory (on a 32-bit PC), but an RVList<int> usually requires less than 8*N bytes (roughly the same memory requirement as List<int>). Also, VLists are much more cache-friendly since adjacent elements tend to be adjacent in memory; therefore, they are faster.

Ideally, the linked list of arrays increases exponentially in size, so that the first array in the list is the largest. This is illustrated by this diagram:

A reference to a VList is a pair of values that I call "block" and "local count" (_block and _localCount in the code). For example, "reference C" in the diagram consists of a pointer to Block 2 with a local count of 6. Therefore, VList C contains 12 items (2 in block 0, 4 in block 1, and 6 in block 2). Reference D, meanwhile, has a local count of 8, so it contains 14 items (12 of which are shared with C). Just like a linked list, there can be other references that point to other parts of a VList. The diagram shows four references, which share three memory blocks.

My FVList<T> class, then, behaves very much like PList<T>, but with higher performance and a lot more functionality. FVList and RVList provide the full IList<T> interface, plus additional stuff like Tail, Push/Pop/Front (for using VList as a stack), AddRange, InsertRange, RemoveRange, and ToArray.

A VList always starts with a block of size 2, and when creating new blocks, they are double the size of the previous block. Ideally, the indexer requires only O(1) time on average (when accessing random indexes) because 50-75% of the list is in the first two blocks, and the extra O(log N) time it takes to reach the last few elements doesn't have much effect on the overall runtime (as long as you don't access the last elements more often than the first elements.) In my implementation, each VList block (represented by a VListBlock<T> object) keeps track of the total number of elements in all previous blocks, so the Count property takes O(1) time.

Suppose we try the same example again, but with FVList instead of PList:

FVList<int> A = new FVList<int>();

A.Add(7);

A.Add(3);

FVList<int> B = A;

A.Add(9);

A.Add(1);

B.Add(3);

B.Add(1);

Like PList<T>, FVList<T> is a value type, so a simple assignment statement makes a copy. Here is the result:

Now, suppose that we make a third list that contains { 7, 8, 9 }:

FVList<int> C = A.WithoutFirst(3);

C.Add(8).Add(9);

Since Block0[1] is already in use, a new block must be allocated when we add 8 to C. After adding 9 to C, the memory layout looks like this:

Note that C has no way of knowing whether any references still exist to the value 3 in Block0[1]. The variables A and B might have gone out of scope before we added any items to C, but C doesn't know that. Therefore, C must assume that the value 3 is in use and leave it alone, creating a new array instead of replacing the existing value.

Note also that the new block 3 only has two items instead of 4; that's because the block size is chosen as double the size used in the previous block: C only uses 1 item in Block 0, so double that size is 2. Consequently, when you are doing a lot of sharing and branching with VLists, the blocks tend to be smaller and behave more like linked lists. I believe this is good because otherwise there is a risk that very large blocks are allocated in which only a tiny fraction of the array entries are in use.

For example, suppose we do this:

FVList<int> D = new FVList();

D.Add(1).Add(-1);

D.RemoveAt(0);

D.Add(2).Add(-1);

D.RemoveAt(0);

D.Add(3)

This access pattern (adding 2 and removing 1 repeatedly) is the worst case. It actually forces the VList to degrade into an inefficient linked list:

It's a good thing the block sizes don't increase exponentially in this case, or a lot of space would be wasted very fast.

In fact, to prevent certain pathological problems when sublists are shared and forked, I decided to limit all blocks to at most 1024 items, and my Add() method uses a technique (documented in the source code of VListBlockArray.Add) to avoid keeping an array that is less than 1/3 full alive (from the garbage collector's point-of-view).

VList's "fluent" interface

While developing the FVList<T> structure, I noticed a problem FVList<T> has when used with properties, because it is a value type. Consider what happens when you try to add something to the Foo.List property:

class Foo {

private FVList<int> v;

public FVList<int> List {

get { return v; }

set { v = value; }

}

}

Foo f = new Foo();

f.List.Add(777);

This use of the List property is absolutely wrong because it has no effect at runtime. Why? FVList<int> is a value type, so the List property returns a copy of the list. When you call the Add method, 777 is added to the copy of the list, after which the copy immediately vanishes from existence.

Unfortunately, the C# compiler does not detect this problem (it's too bad there is no [Mutator] attribute I could apply to Add() that would make the compiler emit an error in this case.)

If FVList<T> only implemented the standard Add method that returns void, you would have to get around the problem using code like this:

Foo f = new Foo();

FVList<int> temp = f.List;

temp.Add(777);

f.List = temp;

So, I decided to make things easier by changing the methods with void return values to return a copy of the list being modified instead. That way, you can simply write:

Foo f = new Foo();

f.List = f.List.Add(777);

But, this change also happens to make the list more convenient to use, as you can perform several modifications in one line:

VList<string> Q = new VList<string>("Captain", "Joe");

Q.RemoveAt(1).Add("Kirk").Add("T").Add("James");

I did not implement the same feature for FWList and RWList, which are reference types and therefore do not suffer the same problem.

The RVList

FVList is a little odd to the average C# programmer because items are added at the front (index 0) instead of at the back. That's why I made RVList<T>. It's the same as FVList<T> except that its Add() method adds items to the end of the list instead of the beginning. You can convert FVList<T> to RVList<T> or vice versa in O(1) time (but the order of the items is reversed!), and the list is enumerated in order from index 0 to Count-1, as you would expect.

RVList<T> is nothing more than a different "view" on the list. It's the same as if you wrote a wrapper called BackwardList<T> that reverses the apparent element order of List<T>.

Enumerating the items in RVList<T> occurs in "reverse" order in the sense that going from index 0 to Count is like traversing the linked list from the far end to the front. I decided to implement an enumeration with the help of an algorithm that searches backward through the singly linked list. Ideally, the enumeration takes O(N + (log N)^2) = O(N) time, but if the list has degraded into a linked list, it takes O(N + N^2) = O(N^2) time instead. FVList<T>'s enumerator, in contrast, always takes O(N) time because the linked list is traversed in the natural way.

It would be possible to enumerate any RVList<T> in O(N) time using a temporary list to keep track of the block that needs to be traversed. I may change the implementation if there is demand for it.

The FWList and RWList

As demonstrated above, the FVList<T> sometimes uses memory wastefully, and produces long chains of blocks if you use certain patterns of modifications with it. Also, calling the setter to modify a FVList<T> at index [N] takes at least O(N) time--sometimes more like O(Count) time.

In order to make the VList truly useful, I felt, it would be necessary to have a mutable version that doesn't suffer from these problems. I decided to call it WList, or "writable VList" (MList for "mutable VList" was already claimed), in two variations FWList and RWList (forward and reverse WList).

When used by itself, RWList<T> is a drop-in replacement for the standard List<T>. When used this way, it is guaranteed to always produce a chain of blocks that increases exponentially in size. It can do this because it can overwrite existing items in a block--it never has to "fork" a block.

Therefore, RWList<T> has the same big-O performance as List<T>:

- The indexer takes O(1) time on average for both reading and writing.

- Adding or removing an item at the head of the list takes O(1) time.

- Inserting or deleting an item at index K takes O(K) time.

- Searching for an item located at index K takes O(K) time, or O(N) time if not found.

- Enumerating the list takes O(N) time.

RWList<T>, as you might guess, is the reverse-order form of FWList<T>. RWList<T> is generally preferred over FWList<T> for C# development, because the Add method adds an item at index [<code>Count] instead of index [0].

Of course, having similar big-O performance to List<T> is no reason to replace List<T>, especially since RWList<T> is probably slower overall (though I have not done a benchmark). The real reason FWList and RWList are useful is that you can instantly transform the list--or even just part of the list--into an FVList<T> or RVList<T>. Moreover, once you have done this, you can still continue to modify the FWList<T> or RWList<T> the same as you did before--the illusion of mutability is always preserved.

Under the hood, a FWList or RWList is divided into two parts or "halves": the mutable part and the immutable part. When you create a new list and add items, it is 100% mutable. When you call ToVList() or ToRVList(), it is marked 100% immutable. If you then add items to the end of your WList, it still takes O(1) time, since you are not changing the immutable part of the list. However, if you modify the part of the list you have made immutable, it takes up to O(N) time, because a copy must be made of many or all of the immutable items in order to make them mutable again.

Mutable items are exclusively owned by a single FWList or RWList; immutable items can be shared among different FWLists, RWLists, FVLists, and RVLists. When a list is converted from one form to another, all items in the list are marked as immutable. This is done simply by increasing a property of the block called ImmCount to match the number of items in the list. In a 100% mutable block, ImmCount is 0; in a full 100% mutable block, ImmCount equals the Capacity of the block.

To illustrate how this works, let's go through an example. Suppose we create a FWList with items counting up from 0 to 8:

FWList<int> W = new FWList<int>(9);

for (int i = 0; i < 9; i++)

W[i] = i;

This list is represented by three blocks...

Instead of calling ToFVList(), which would make the entire list immutable, let's use WithoutFirst(4) to get an immutable version of the end of the list, while keeping the first four items mutable:

FVList<int> V = W.WithoutFirst(4);

(Note: RWList<T> has WithoutLast() instead of WithoutFirst(). It keeps the end of the list mutable rather than the beginning.)

Since the first four items are still mutable, we can modify them in O(1) time. For example, we could do:

W[3] = 33;

If, however, we modify an item in the immutable section of the list, WList will make a copy of as many blocks as it needs to in order to modify the immutable item. For instance,

W[4] = 444;

produces the following result:

Note that WLists do not support interleaving of mutable and immutable blocks. Only the head section of the list can be mutable, and only the tail section can be immutable. If you modify a WList at index Count-1 (or if you modify an RWList at index 0), the entire list is forced to become mutable.

I took the liberty of implementing an optimization here: if you are transforming a VList into a mutable WList and the VList has a lot of small blocks (such as the pathological linked list case shown above), WList will attempt to consolidate the small blocks into larger blocks, in an exponential progression, so that the mutable part of the WList becomes more efficient than the VList it was created from. However, it will not copy more blocks than necessary to do the immediate operation you asked of it, which may limit the amount of restructuring done.

If you suspect you have an RVList that has degraded into a long chain, you can use this little function to restructure the chain into an efficient short one:

void AutoOptimize<T>(ref RVList<T> v)

{

int bcl = v.BlockChainLength-2;

if (bcl * (bcl - (bcl>>2)) > v.Count) {

RWList<T> w = v.ToRWList();

w[0] = w[0];

v = w.ToVList();

}

}

VListBlock

All four types of VList use the common block class, VListBlock<T>. Now, FVList and RVList obviously need to share most of the same code, and since C# structures are not allowed to have base classes, I put most of the shared logic for managing VLists in VListBlock<T>. In the source code of VListBlock, the "standard" list is FVList; if a method expects a list as an argument, it takes FVList in preference to RVList. In VListBlock, the term "front" refers to the head of the linked list, and the tail blocks are referred to as the "prior" blocks.

When I added new algorithms designed for mutable VLists, I gave them the prefix Mu to distinguish them from the algorithms designed for immutable lists. Since FWList and RWList are classes, I gave them a base class WListBase<T> which implements IList<T> and contains much of the common code, but the most low-level code goes in VListBlock (or in its two derived classes, which exist for the small-list optimization, described below).

Block ownership

I think I should say something about block ownership, because the current implementation is more complicated than it needs to be. Keeping track of who owns what is important because two WLists can share the same blocks, but at most one WList can own each block. A VListBlock does not know its owner, as it doesn't keep a reference to the WListBase that owns it; instead, the WListBase contains a flag (IsOwner) that specifies whether it owns its head block, and each block contains an implicit flag (PriorIsOwned) that specifies whether that block owns the prior block in the chain. If a WList owns the head block, then it also owns all prior blocks in a row for which the PriorIsOwned property is true. It is not possible for two WLists to claim ownership of one block. Even if they are on different threads, this cannot happen because the MutableFlag is set using an atomic operation (Interlocked.CompareExchange).

The MutableFlag indicates that a block is owned by an FWList or RWList, but it does not indicate who owns it. Two different WLists can certainly share a block that has this flag set, but at least one of the two WLists will only include immutable items from the block. So, a WList relies on its IsOwner flag and PriorIsOwned to determine how many blocks it owns. As I mentioned, PriorIsOwned is an "implicit" flag, which is the messy part. PriorIsOwned returns true if the following conditions are met:

- The prior block is mutable (has the

MutableFlag). Prior._localCount > PriorBlock.ImmCount.

This logic relies on the fact that mutable and immutable blocks cannot be interleaved, and on the fact that a new mutable block is never created until the prior block is full. Because of this, it is guaranteed either that the front item in the Prior list is mutable, or that the Prior list is completely full of immutable items. This logic makes PriorIsOwned return false as soon as ImmCount reaches the Capacity of the block. This is okay because if a block is full of immutable items, then neither it nor any prior blocks can be modified in any way; therefore, the issue of who owns the block is irrelevant.

Now, if one wanted, for example, to modify the source code to allow allocation of a new mutable block before all previous blocks were full (e.g., to support a settable Capacity property, which is not currently available), this logic would no longer be valid, and it would be necessary to introduce and manage an explicit flag indicating whether the prior block is owned.

Thread safety

Thread safety is a concern. Individual list instances are not thread-safe, but I have tried to ensure that different lists that share the same memory are thread-safe. The comments in VListBlock.cs describing _immCount explain how thread safety is handled, but basically, _immCount is the only field for which thread safety is a concern, because there is no other data in a VListBlock that might be modified by two different threads at the same time.

The small-list optimization

For some applications, it is common to have large numbers of short lists (two items or less). For example, abstract syntax trees are N-ary trees, but many nodes have 0, 1, or 2 children. For that reason, I have optimized the memory usage of the first block in a list so that instead of having an array of two items, it has two fields called _1 and _2. The first block is represented by the VListBlockOfTwo class, while all other blocks are represented by the VListBlockArray class (both are derived from VListBlock). VListBlockOfTwo uses about 28 bytes less memory than a VListBlockArray of the same size. In exchange for the smaller size, there is a small performance penalty, because some operations require virtual function calls. If a FVList or RVList has no items, it uses no heap space at all.

Conclusion

So, what are VLists good for? In a nutshell, they are good in functional algorithms as an alternative to persistent linked lists. I will be using them in Loyc, my extensible C#/boo compiler project (which is in very early stages, and which I'm looking for help with, by the way, as the project is just too huge to do alone!).

My idea is that Loyc could be used not only as a compiler, but also in an IDE to provide "intellisense". Now, in order to provide deep inspection of the program as you type it, Loyc would run your code through many "compiler steps" to uncover deep meaning. For example, suppose somebody wrote an extension to support the C preprocessor in C#. Then, code like

#define EMPTY(c) internal class c {}

EMPTY(Foo)

EMPTY(Bar)

would have to be translated to

internal class Foo {}

internal class Bar {}

so that the IDE could learn about the existence of the classes Foo and Bar. Loyc would have to go through a series of transformations like this:

Rather than go through all these steps every time the user presses a character, I thought that eventually, I'd like to do it in a more incremental way, so that instead of re-tokenizing the source file from scratch with each character pressed, only those tokens that potentially changed would be re-parsed. Then, perhaps a series of "fixups" (incremental modifications) could be pushed through all the compiler stages, to produce a reparsed file in less time.

For this to be remotely feasible, it would be necessary to keep an old copy of the token list, and probably an old copy of the original AST. But, since the whole point would be to increase efficiency, it may be counterproductive to actually spend time making complete copies of the token list and AST. Instead, I thought, copies should be made of only parts of the AST, on-demand, when they are needed. The VList, then, helps to support a persistent AST, i.e., an AST where old versions continue to exist as long as somebody holds a reference to them.

Besides, since this compiler is supposed to allow third parties to modify the AST, it might be better for the AST to be immutable and for the compiler stages to be written in a functional style. That way, there would be less chance that one compiler extension would accidentally screw up changes made by another extension, and debugging would be simpler. I am considering a compromise solution, though, as complete immutability may harm performance.

If you can think of other uses of FVList, RVList, FWList, and RWList, please leave a comment with your ideas!

A note about dependencies

I hope you don't mind: the VList source code contains Unit Tests that are dependent on nunit.framework.dll. Also, there is a minor dependency on Loyc.Runtime.dll, a small collection of simple utilities for general-purpose use. The most important dependency is CollectionDebugView<T>, a tiny class described here that allows VLists to look the same as ordinary List<T> objects in the debugger. The other dependency is Localize.From, a pluggable string localization facility. Feel free to strip it out simply by deleting all instances of the string "Localize.From" from the source code.

History

- May 2008: finished

VList and RVList. - April 1, 2009: finished

WList and RWList. Let's call it version 1.0. - December 14, 2009: added LINQ-style

Where, Select, SmartSelect and Transform methods. You do not need C# 3.0 or .NET 3.0 to use these methods (in fact, I am using them in .NET 2.0), but if you are using .NET 2.0, you will need to define Func<T,TResult> manually in the System.Linq namespace. Or use LinqBridge. - December 14, 2009: renamed

VList/WList to FVList/FWList.