Introduction

I am afraid to say that I am one of those people who gets bored unless I am doing something. So now that I finally feel I have learnt the basics of WPF, it is time to turn my attention to other matters.

I have a long list of things that demand my attention such as WCF/WF/CLR via C# version 2 book, but I recently went for something (and got, but turned it down in the end) which required me to know a lot about threading. Whilst I consider myself to be pretty good with threading, I thought, yeah, I'm OK at threading, but I could always be better. So as a result of that, I have decided to dedicate myself to writing a series of articles on threading in .NET. This series will undoubtedly owe much to an excellent Visual Basic .NET Threading Handbook that I bought that is nicely filling the MSDN gaps for me and now you.

I suspect this topic will range from simple to medium to advanced, and it will cover a lot of stuff that will be in MSDN, but I hope to give it my own spin also.

I don't know the exact schedule, but it may end up being something like:

I guess the best way is to just crack on. One note though before we start, I will be using C# and Visual Studio 2008.

What I'm going to attempt to cover in this article will be:

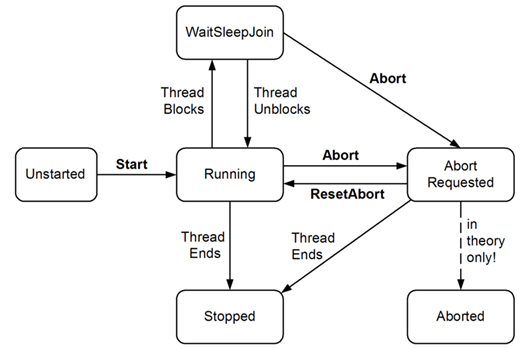

The following figure illustrates the most common thread states, and what happens when a thread moves into each state:

Here is a list of all the available thread states:

| State | Description |
| --- | --- |
| Running | The thread has been started, it is not blocked, and there is no pending ThreadAbortException. |
| StopRequested | The thread is being requested to stop. This is for internal use only. |
| SuspendRequested | The thread is being requested to suspend. |
| Background | The thread is being executed as a background thread, as opposed to a foreground thread. This state is controlled by setting the Thread.IsBackground property. |
| Unstarted | The Thread.Start method has not been invoked on the thread. |
| Stopped | The thread has stopped. |
| WaitSleepJoin | The thread is blocked. This could be the result of calling Thread.Sleep or Thread.Join, of requesting a lock - for example, by calling Monitor.Enter or Monitor.Wait - or of waiting on a thread synchronization object such as ManualResetEvent. (We will be covering all of these locking/synchronization techniques in Part 3.) |
| Suspended | The thread has been suspended. |
| AbortRequested | The Thread.Abort method has been invoked on the thread, but the thread has not yet received the pending System.Threading.ThreadAbortException that will attempt to terminate it. |
| Aborted | The thread state includes AbortRequested and the thread is now dead, but its state has not yet changed to Stopped. |

Taken from the MSDN ThreadState page.

Examining Some of This in a Bit More Detail

In this section, I will include some code that examines some of the threading areas mentioned above. I will not cover all of them, but I'll try to cover most of them.

Joining Threads

The Join method (without any parameters) blocks the calling thread until the thread on which Join was called has terminated. Note that the caller will block indefinitely if that thread never terminates. If the thread has already terminated when Join is called, the method returns immediately.

The Join method has an overload that lets you set the number of milliseconds to wait for the thread to finish. If the thread has not finished when the timeout expires, Join returns false and hands control back to the calling thread (and the joined thread continues to execute).

This method changes the state of the calling thread to include WaitSleepJoin (according to the MSDN documentation).

This method is quite useful if one thread depends on another thread.

Let's see a small example (attached demo ThreadJoin project).

In this small example, we have two threads; I want the first thread to run first and the second thread to run after the first thread is completed.

    using System;
    using System.Threading;

    namespace ThreadJoin
    {
        class Program
        {
            public static Thread T1;
            public static Thread T2;

            public static void Main(string[] args)
            {
                T1 = new Thread(new ThreadStart(First));
                T2 = new Thread(new ThreadStart(Second));
                T1.Name = "T1";
                T2.Name = "T2";
                T1.Start();
                T2.Start();
                Console.ReadLine();
            }

            // Runs on T1: prints 0 to 4 along with T1's state.
            private static void First()
            {
                for (int i = 0; i < 5; i++)
                {
                    Console.WriteLine(
                        "T1 state [{0}], T1 showing {1}",
                        T1.ThreadState, i.ToString());
                }
            }

            // Runs on T2: waits for T1 to finish, then prints 5 to 9.
            private static void Second()
            {
                Console.WriteLine(
                    "T2 state [{0}] just about to Join, T1 state [{1}], CurrentThreadName={2}",
                    T2.ThreadState, T1.ThreadState,
                    Thread.CurrentThread.Name);

                // Block T2 here until T1 has terminated.
                T1.Join();

                Console.WriteLine(
                    "T2 state [{0}] T2 just joined T1, T1 state [{1}], CurrentThreadName={2}",
                    T2.ThreadState, T1.ThreadState,
                    Thread.CurrentThread.Name);

                for (int i = 5; i < 10; i++)
                {
                    Console.WriteLine(
                        "T2 state [{0}], T1 state [{1}], CurrentThreadName={2} showing {3}",
                        T2.ThreadState, T1.ThreadState,
                        Thread.CurrentThread.Name, i.ToString());
                }

                Console.WriteLine(
                    "T2 state [{0}], T1 state [{1}], CurrentThreadName={2}",
                    T2.ThreadState, T1.ThreadState,
                    Thread.CurrentThread.Name);
            }
        }
    }

And here is the output from this small program, where we can indeed see that thread T1 completes and then thread T2 operations run.

Note: Thread T1 continues to run and then stops, and then the operations that thread T2 specified are run.
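The timed overload of Join described earlier is not used in the demo project, so here is a minimal sketch of it. The class and method names (JoinTimeoutSketch, WaitForWorker) are made up for illustration, and the worker just sleeps to stand in for real work.

```csharp
using System;
using System.Threading;

public static class JoinTimeoutSketch
{
    // Returns true if the worker finished within the timeout, false otherwise.
    public static bool WaitForWorker(int workMilliseconds, int timeoutMilliseconds)
    {
        // The worker simulates some work by sleeping.
        Thread worker = new Thread(() => Thread.Sleep(workMilliseconds));
        worker.IsBackground = true; // do not keep the process alive if we stop waiting
        worker.Start();

        // Join(int) returns true if the thread terminated,
        // or false if the timeout elapsed first.
        return worker.Join(timeoutMilliseconds);
    }

    public static void Main()
    {
        Console.WriteLine("Short job finished in time: {0}", WaitForWorker(50, 2000));
        Console.WriteLine("Long job finished in time: {0}", WaitForWorker(2000, 50));
    }
}
```

Checking the return value is what lets the caller tell "the thread finished" apart from "I gave up waiting".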

Sleep

The static Thread.Sleep method is fairly simple; it suspends the current thread for a specified time. Consider the following example, where two threads are started that run two separate counter methods; the first thread (T1) counts from 0 to 49, and the second thread (T2) counts from 51 to 99.

Thread T1 will go to sleep for 1 second when it reaches 10, and thread T2 will go to sleep for 5 seconds when it reaches 70.

Let's see a small example (attached demo ThreadSleep project):

    using System;
    using System.Threading;

    namespace ThreadSleep
    {
        class Program
        {
            public static Thread T1;
            public static Thread T2;

            public static void Main(string[] args)
            {
                Console.WriteLine("Enter Main method");
                T1 = new Thread(new ThreadStart(Count1));
                T2 = new Thread(new ThreadStart(Count2));
                T1.Start();
                T2.Start();
                Console.WriteLine("Exit Main method");
                Console.ReadLine();
            }

            // Runs on T1: counts 0 to 49, sleeping for 1 second at 10.
            private static void Count1()
            {
                Console.WriteLine("Enter T1 counter");
                for (int i = 0; i < 50; i++)
                {
                    Console.Write(i + " ");
                    if (i == 10)
                        Thread.Sleep(1000);
                }
                Console.WriteLine("Exit T1 counter");
            }

            // Runs on T2: counts 51 to 99, sleeping for 5 seconds at 70.
            private static void Count2()
            {
                Console.WriteLine("Enter T2 counter");
                for (int i = 51; i < 100; i++)
                {
                    Console.Write(i + " ");
                    if (i == 70)
                        Thread.Sleep(5000);
                }
                Console.WriteLine("Exit T2 counter");
            }
        }
    }

The output may be as follows:

In this example, thread T1 is run first, so starts its counter (as we will see later, T1 may not necessarily be the thread to start first) and counts up to 10, at which point T1 sleeps for 1 second and is placed in the WaitSleepJoin state. At this point, T2 runs, so starts its counter, gets to 70, and is put to sleep (and is placed in the WaitSleepJoin state), at which point T1 is awoken and run to completion. T2 is then awoken and is able to complete (as T1 has completed, there is only T2 work left to do).

Interrupt

When a thread is put to sleep, the thread goes into the WaitSleepJoin state. If the thread is in this state, it may be placed back in the scheduling queue by the use of the Interrupt method. Calling Interrupt when a thread is in the WaitSleepJoin state will cause a ThreadInterruptedException to be thrown, so any code that is written needs to catch this.

If this thread is not currently blocked in a wait, sleep, or join state, it will be interrupted when it next begins to block.

Let's see a small example (attached demo ThreadInterrupt project):

    using System;
    using System.Threading;

    namespace ThreadInterrupt
    {
        class Program
        {
            public static Thread sleeper;
            public static Thread waker;

            public static void Main(string[] args)
            {
                Console.WriteLine("Enter Main method");
                sleeper = new Thread(new ThreadStart(PutThreadToSleep));
                waker = new Thread(new ThreadStart(WakeThread));
                sleeper.Start();
                waker.Start();
                Console.WriteLine("Exiting Main method");
                Console.ReadLine();
            }

            // Runs on the sleeper thread: counts 0 to 49, sleeping briefly at 10, 20 and 30.
            private static void PutThreadToSleep()
            {
                for (int i = 0; i < 50; i++)
                {
                    Console.Write(i + " ");
                    if (i == 10 || i == 20 || i == 30)
                    {
                        try
                        {
                            Console.WriteLine("Sleep, Going to sleep at {0}",
                                i.ToString());
                            Thread.Sleep(20);
                        }
                        catch (ThreadInterruptedException)
                        {
                            // Interrupt() was called while this thread was blocked.
                            // Write (not WriteLine) so the output reads "Forcibly woken".
                            Console.Write("Forcibly ");
                        }
                        Console.WriteLine("woken");
                    }
                }
            }

            // Runs on the waker thread: counts 51 to 99 and interrupts the
            // sleeper whenever it is seen in the WaitSleepJoin state.
            private static void WakeThread()
            {
                for (int i = 51; i < 100; i++)
                {
                    Console.Write(i + " ");
                    if (sleeper.ThreadState == ThreadState.WaitSleepJoin)
                    {
                        Console.WriteLine("Interrupting sleeper");
                        sleeper.Interrupt();
                    }
                }
            }
        }
    }

Which may produce this output:

It can be seen from this output that the sleeper thread starts normally, and when it gets to 10, is put to sleep so goes into the WaitSleepJoin state. Then the waker thread starts and immediately tries to Interrupt the sleeper (which is in the WaitSleepJoin state, so the ThreadInterruptedException is thrown and caught). However, as the initial sleeper thread's sleep time elapses, it is again allowed to run until completion.

I personally haven't really had to use the Interrupt method that often, but I do consider interrupting threads to be fairly dangerous, as you just can't guarantee where a thread is.

"Interrupting a thread arbitrarily is dangerous, however, because any framework or third-party methods in the calling stack could unexpectedly receive the interrupt rather than your intended code. All it would take is for the thread to block briefly on a simple lock or synchronization resource, and any pending interruption would kick in. If the method wasn't designed to be interrupted (with appropriate cleanup code in finally blocks), objects could be left in an unusable state, or resources incompletely released.

Interrupting a thread is safe when you know exactly where the thread is."

-- Threading in C#, Joseph Albahari.
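The point made earlier, that an Interrupt on a thread which is not blocked stays pending until the thread next blocks, can be sketched as follows. This is only an illustration under stated assumptions: the names (DeferredInterruptSketch, Work, go, wasInterrupted) are invented here, and the worker busy-waits purely so that it is known not to be blocked when Interrupt is called.

```csharp
using System;
using System.Threading;

public static class DeferredInterruptSketch
{
    private static volatile bool go;
    private static volatile bool wasInterrupted;

    // Returns true if the worker saw the interrupt when it finally blocked.
    public static bool Run()
    {
        go = false;
        wasInterrupted = false;

        Thread worker = new Thread(Work);
        worker.Start();

        // The worker is not blocked at this point (it is busy-waiting or has
        // not reached its loop yet), so the interrupt is left pending.
        worker.Interrupt();

        go = true;      // let the worker move on to its blocking call
        worker.Join();
        return wasInterrupted;
    }

    private static void Work()
    {
        while (!go) { } // busy-wait: never enters WaitSleepJoin

        try
        {
            // The pending interrupt is delivered as soon as the thread blocks.
            Thread.Sleep(Timeout.Infinite);
        }
        catch (ThreadInterruptedException)
        {
            wasInterrupted = true;
        }
    }

    public static void Main()
    {
        Console.WriteLine("Interrupt delivered on next block: {0}", Run());
    }
}
```

This is also why the quote above warns about arbitrary interrupts: the exception surfaces at whichever blocking call happens to come next, which may not be one you wrote.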

Pause

There used to be a way to pause threads using the Suspend() method. But this is now deprecated, so you must use alternative methods, such as WaitHandles. To demonstrate this, there is a combined application that covers Pause/Resume and Abort of background threads.

Resume

There used to be a way to resume suspended threads using the Resume() method. But this is now deprecated, so you must use alternative methods, such as WaitHandles. To demonstrate this, there is a combined application that covers Pause/Resume and Abort of background threads.

Abort

First, let me state that there is an Abort() method, but it is not something you should use lightly (and in my own opinion, not at all). I would first like to quote two reputable sources on the dangers of using the Abort() method:

"A blocked thread can also be forcibly released via its Abort method. This has an effect similar to calling Interrupt, except that a ThreadAbortException is thrown instead of a ThreadInterruptedException. Furthermore, the exception will be re-thrown at the end of the catch block (in an attempt to terminate the thread for good) unless Thread.ResetAbort is called within the catch block. In the interim, the thread has a ThreadState of AbortRequested.

The big difference, though, between Interrupt and Abort is what happens when it's called on a thread that is not blocked. While Interrupt waits until the thread next blocks before doing anything, Abort throws an exception on the thread right where it's executing - maybe not even in your code. Aborting a non-blocked thread can have significant consequences."

-- Threading in C#, Joseph Albahari.

"A common question that emerges once you have kicked off some concurrent work is: how do I stop it? Here are two popular reasons for wanting to stop some work in progress:

- You need to shut down the program.
- The user cancelled the operation.

In the first case, it is often acceptable to drop everything mid flow and not bother shutting down cleanly, because the internal state of the program no longer matters, and the OS will release many resources held by our program when it exits. The only concern is if the program stores state persistently - it is important to make sure that any such state is consistent when our program exits. However, if we are relying on a database for such state, we can still often get away with abandoning things mid flow, particularly if we are using transactions - aborting a transaction rolls everything back to where it was before the transaction started, so this should be sufficient to return the system to a consistent state.

There are, of course, cases where dropping everything on the floor will not work. If the application stores its state on disk without the aid of a database, it will need to take steps to make sure that the on-disk representation is consistent before abandoning an operation. And in some cases, a program may have interactions in progress with external systems or services that require explicit cleanup beyond what will happen automatically. However, if you have designed your system to be robust in the face of a sudden failure (e.g., loss of power), then it should be acceptable simply to abandon work in progress rather than cleaning up neatly when shutting the program down. (Indeed, there is a school of thought that says that if your program requires explicit shutdown, it is not sufficiently robust - for a truly robust program, sudden termination should always be a safe way to shut down. And given that, some say, you may as well make this your normal mode of shutdown - it's a very quick way of shutting down!)

User-initiated cancellation of a single operation is an entirely different matter, however.

If the user chooses to cancel an operation for some reason - maybe it is taking too long - she will expect to be able to continue using the program afterwards. It is therefore not acceptable simply to drop everything on the floor, because the OS is not about to tidy up after us. Our program has to live with its internal state after the operation has been cancelled. It is therefore necessary for cancellation to be done in an orderly fashion, so that the program's state is still internally consistent once the operation is complete.

Bearing this in mind, consider the use of Thread.Abort. This is, unfortunately, a popular choice for cancelling work, because it usually manages to stop the target thread no matter what it was up to. This means you will often see its use recommended on mailing lists and newsgroups as a way of stopping work in progress, but it is really only appropriate if you are in the process of shutting down the program, because it makes it very hard to be sure what state the program will be in afterwards."

-- How To Stop a Thread in .NET (and Why Thread.Abort is Evil), Ian Griffiths.

So with all this in mind, I have created a small application, which I believe is a well-behaved worker thread, that allows the user to carry out some background work, and Pause/Resume and Cancel it, all safely and easily. It's not the only way to do this, but it's a way.

Let's see a small example (attached demo ThreadResumePause_StopUsingEventArgs project).

Unfortunately, I had to include some UI code here, to allow the user to click on different buttons for Pause/Resume etc., but I shall only include the parts of the UI code that I feel are relevant to explaining the subject.

So first, here is the worker thread class; an important thing to note is the usage of the volatile keyword.

The volatile keyword indicates that a field can be modified in the program by something such as the Operating System, the hardware, or a concurrently executing thread.

The system always reads the current value of a volatile object at the point it is requested, even if the previous instruction asked for a value from the same object. Also, the value of the object is written immediately on assignment.

The volatile modifier is usually used for a field that is accessed by multiple threads without using the lock statement to serialize access. Using the volatile modifier ensures that a thread retrieves the most up-to-date value written by another thread.

    using System;
    using System.ComponentModel;
    using System.Threading;

    namespace ThreadResumePause_StopUsingEventArgs
    {
        public delegate void ReportWorkDoneEventhandler(object sender,
            WorkDoneCancelEventArgs e);

        public class WorkerThread
        {
            private Thread worker;
            public event ReportWorkDoneEventhandler ReportWorkDone;
            private volatile bool cancel = false;

            // Starts signaled, so the worker runs until Pause() is called.
            private ManualResetEvent trigger = new ManualResetEvent(true);

            public WorkerThread()
            {
            }

            public void Start(long primeNumberLoopToFind)
            {
                worker = new Thread(new ParameterizedThreadStart(DoWork));
                worker.Start(primeNumberLoopToFind);
            }

            // Runs on the worker thread: reports every prime below the given limit.
            private void DoWork(object data)
            {
                long primeNumberLoopToFind = (long)data;
                int divisorsFound = 0;
                int startDivisor = 1;

                for (int i = 0; i < primeNumberLoopToFind; i++)
                {
                    // Blocks here while the event is non-signaled (paused).
                    trigger.WaitOne();

                    divisorsFound = 0;
                    startDivisor = 1;

                    // Count the divisors of i; a prime has exactly two.
                    while (startDivisor <= i)
                    {
                        if (i % startDivisor == 0)
                            divisorsFound++;
                        startDivisor++;
                    }

                    if (divisorsFound == 2)
                    {
                        WorkDoneCancelEventArgs e =
                            new WorkDoneCancelEventArgs(i);
                        OnReportWorkDone(e);

                        // The subscriber may have asked us to stop.
                        cancel = e.Cancel;
                        if (cancel)
                            return;
                    }
                }
            }

            public void Pause()
            {
                trigger.Reset();
            }

            public void Resume()
            {
                trigger.Set();
            }

            protected virtual void OnReportWorkDone(WorkDoneCancelEventArgs e)
            {
                if (ReportWorkDone != null)
                {
                    ReportWorkDone(this, e);
                }
            }
        }

        public class WorkDoneCancelEventArgs : CancelEventArgs
        {
            public int PrimeFound { get; private set; }

            public WorkDoneCancelEventArgs(int primeFound)
            {
                this.PrimeFound = primeFound;
            }
        }
    }
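To isolate the volatile idea described above from the rest of the worker class, here is a minimal sketch of a stop flag shared between two threads without a lock. The StoppableCounter class and its members are hypothetical names used only for this illustration.

```csharp
using System;
using System.Threading;

public class StoppableCounter
{
    // Written by the controlling thread, read by the worker thread.
    // volatile stops the read from being cached or hoisted out of the loop.
    private volatile bool stopRequested;

    public long Iterations { get; private set; }

    public void RequestStop()
    {
        stopRequested = true;
    }

    // Runs on the worker thread until a stop is requested.
    public void Run()
    {
        long count = 0;
        while (!stopRequested)
        {
            count++;
        }
        Iterations = count;
    }

    public static void Main()
    {
        StoppableCounter counter = new StoppableCounter();
        Thread worker = new Thread(counter.Run);
        worker.Start();

        Thread.Sleep(100);      // let the worker spin for a moment
        counter.RequestStop();  // the worker sees this on its next loop check
        worker.Join();

        Console.WriteLine("Stopped after {0} iterations", counter.Iterations);
    }
}
```

A flag like this only covers a single simple value; anything more involved (several fields that must change together, for example) needs proper synchronization.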

And here are the relevant parts of the UI code (WinForms, C#). Note that I have not checked whether an Invoke is actually required before doing an Invoke.

MSDN says the following about the Control.InvokeRequired property:

Gets a value indicating whether the caller must call an Invoke method when making method calls to the control because the caller is on a different thread than the one the control was created on.

Controls in Windows Forms are bound to a specific thread and are not thread safe. Therefore, if you are calling a control's method from a different thread, you must use one of the control's Invoke methods to marshal the call to the proper thread. This property can be used to determine if you must call an Invoke method, which can be useful if you do not know what thread owns a control.

So one could use this to determine if an Invoke is actually required. InvokeRequired, Invoke, BeginInvoke, and EndInvoke are all safe to call from any thread.

    using System;
    using System.Collections.Generic;
    using System.ComponentModel;
    using System.Data;
    using System.Drawing;
    using System.Linq;
    using System.Text;
    using System.Windows.Forms;
    using System.Threading;

    namespace ThreadResumePause_StopUsingEventArgs
    {
        public partial class Form1 : Form
        {
            private WorkerThread wt = new WorkerThread();
            private SynchronizationContext context;

            // Set on the UI thread, read on the worker thread (inside
            // wt_ReportWorkDone), so it is marked volatile.
            private volatile bool primeThreadCancel = false;

            public Form1()
            {
                InitializeComponent();
                context = SynchronizationContext.Current;

                // Subscribe once, before any work starts, so that no
                // early results are missed and the handler is not added twice.
                wt.ReportWorkDone +=
                    new ReportWorkDoneEventhandler(wt_ReportWorkDone);
            }

            // Called on the worker thread each time a prime is found.
            void wt_ReportWorkDone(object sender, WorkDoneCancelEventArgs e)
            {
                // Marshal the ListBox update to the UI thread.
                context.Post(new SendOrPostCallback(delegate(object state)
                {
                    this.lstItems.Items.Add(e.PrimeFound.ToString());
                }), null);

                // Tell the worker whether the user has asked to cancel.
                e.Cancel = primeThreadCancel;
            }

            private void btnStart_Click(object sender, EventArgs e)
            {
                primeThreadCancel = false;
                wt.Start(100000);
            }

            private void btnCancel_Click(object sender, EventArgs e)
            {
                primeThreadCancel = true;
            }

            private void btnPause_Click(object sender, EventArgs e)
            {
                wt.Pause();
            }

            private void btnResume_Click(object sender, EventArgs e)
            {
                wt.Resume();
            }
        }
    }
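The form code above always posts through the SynchronizationContext. For comparison, the InvokeRequired check quoted from MSDN could be wrapped up roughly as below. This is a sketch only: UiThreadSketch and RunOnUiThread are names invented for this example, and it assumes a project that references System.Windows.Forms and targets .NET 3.5 (for the Action delegate).

```csharp
using System;
using System.Windows.Forms;

public static class UiThreadSketch
{
    // Runs the action on the thread that owns the control.
    public static void RunOnUiThread(Control control, Action action)
    {
        if (control.InvokeRequired)
        {
            // We are on a different thread: marshal the call (synchronously).
            control.Invoke(action);
        }
        else
        {
            // Already on the owning thread: call directly.
            action();
        }
    }
}
```

From a worker-thread event handler, a call might then look like UiThreadSketch.RunOnUiThread(lstItems, () => lstItems.Items.Add("7")). Swapping Invoke for BeginInvoke would make the call non-blocking, which is closer to what context.Post does.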

And when run, this looks like this:

So how does all this work? There are a few things in here that I was hoping not to get on to until Part 4, but there is simply no way they could be avoided, so I'll try and cover them just enough. There are a couple of key concepts here, such as:

- Start the worker thread using an input parameter

- Marshal the worker thread's output to a UI thread

- Pause the worker thread

- Resume the worker thread

- Cancel the worker thread

I will try and explain each of these parts in turn.

Start the Worker Thread Using an Input Parameter

This is easily achieved by the use of a ParameterizedThreadStart where you simply start the thread, passing in an input parameter like worker.Start(primeNumberLoopToFind), and then in the actual private void DoWork(object data) method, you can get the parameter value by using the data parameter, like long primeNumberLoopToFind = (long)data.
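Stripped of the prime-number logic, the parameter-passing mechanism just described can be sketched on its own. The class and method names here (ParameterizedStartSketch, SumUpTo, Run) are made up for illustration.

```csharp
using System;
using System.Threading;

public static class ParameterizedStartSketch
{
    private static long lastSum;

    // The thread entry point must take a single object parameter.
    private static void SumUpTo(object data)
    {
        // Unbox to the exact type that was passed to Start();
        // casting a boxed int to long here would throw InvalidCastException.
        long limit = (long)data;

        long sum = 0;
        for (long i = 1; i <= limit; i++)
            sum += i;
        lastSum = sum;
    }

    public static long Run(long limit)
    {
        Thread worker = new Thread(new ParameterizedThreadStart(SumUpTo));
        worker.Start(limit);   // limit is boxed as a long
        worker.Join();         // wait, so that lastSum is safe to read
        return lastSum;
    }

    public static void Main()
    {
        Console.WriteLine(Run(100)); // prints 5050
    }
}
```

Because the parameter travels as object, the compiler cannot check its type for you; that is the price of this approach.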

Marshal the Worker Thread's Output to a UI Thread

The worker thread raises the ReportWorkDone event, which is handled by the UI. But if the UI handler uses the properties of the event's WorkDoneCancelEventArgs object to add items to the UI-owned ListBox control directly, you will get a cross-thread violation, unless you do something to marshal the call to the UI thread. This is known as thread affinity; the thread that creates the UI controls owns the controls, so any calls to the UI controls must go through the UI thread.

There are several ways of doing this; I am using the .NET 2.0 approach, which makes use of a class called SynchronizationContext, which I obtain in the form's constructor. Then, I am free to marshal the worker thread's results to the UI thread so that they may be added to the UI's controls. This is done as follows:

    context.Post(new SendOrPostCallback(delegate(object state)
    {
        this.lstItems.Items.Add(e.PrimeFound.ToString());
    }), null);

Pause the Worker Thread

To pause the worker thread, I make use of a threading object called a ManualResetEvent, which may be used to cause a thread to both wait and resume its operation, depending on the signal state of the ManualResetEvent. Basically, in a signaled state, the thread that is waiting on the ManualResetEvent will be allowed to continue, and in a non-signaled state, the thread that is waiting on the ManualResetEvent will be forced to wait. We will now examine the relevant parts of the WorkerThread class.

We declare a new ManualResetEvent which starts in signaled state:

private ManualResetEvent trigger = new ManualResetEvent(true);

We then attempt to wait for the signaled state in the workerThread DoWork method. As the ManualResetEvent started out in the signaled state, the thread proceeds to run:

    for (int i = 0; i < primeNumberLoopToFind; i++)
    {
        trigger.WaitOne();
        ....
        ....

So for the pause, all we need to do is put the ManualResetEvent in the non-signaled state (using the Reset method), which causes the worker to wait for the ManualResetEvent to be put into a signaled state again.

trigger.Reset();

Resume the Worker Thread

The resume is easy; all we need to do is put the ManualResetEvent in the signaled state (using the Set method), which causes the worker to no longer wait for the ManualResetEvent, as it is in a signaled state again.

trigger.Set();

Cancel the Worker Thread

If you read Ian Griffiths's article that I quoted above, you'll know that he simply suggests keeping things as simple as possible, by the use of a boolean flag that is visible to both the UI and the worker thread. I have also done this, but I use a CancelEventArgs, which allows the user's cancel request to be passed to the worker thread directly in the CancelEventArgs, such that the worker thread can use this to see if it should be cancelled. It works like this:

- Worker started
- Worker raises WorkDone event, with CancelEventArgs
- If user clicks Cancel button, CancelEventArgs cancel is set
- Worker thread sees CancelEventArgs cancel is set, so breaks out of its work
- As there is no more work for the worker thread to do, it dies

I just feel this is a little safer than using the Abort() method.

There are some very obvious threading opportunities, which are as follows:

Background Execution

If a task can successfully be run in the background, then it is a candidate for threading. For example, think of a search that needs to search thousands of items for matching items - this would be an excellent choice for a background thread to do.

External Resources

Another example may be when you are using an external resource such as a database/Web Service/remote file system, where there may be a performance penalty to pay for accessing these resources. By threading access to these sorts of things, you are alleviating some of the overhead incurred by accessing these resources within a single thread.

UI Responsiveness

We can imagine that we have a User Interface (UI) that allows the user to do various tasks. Some of these tasks may take quite a long time to complete. To put it in a real world context, let us say that the app is an email client application that allows users to create / fetch emails. Fetching emails may take a while to complete, as the fetching of emails must interact with a mail server to obtain the current user's emails. Threading the fetch-emails code would help to keep the UI responsive to further user interactions. If we don't thread tasks that take a long time in UIs and simply rely on the main thread, we could easily end up in a situation where the UI is fairly unresponsive. So this is a prime candidate for threading. As we will see in a subsequent article, there is the issue of Thread Affinity that needs to be considered when dealing with UIs, but I'll save that discussion for the subsequent article.

Socket Programming

If you have ever done any socket programming, you may have had to create a server that was able to accept clients. A typical arrangement of this may be a chat application where the server is able to accept n-many clients and is able to read from clients and write to clients. This is largely achieved by threads. I am aware that there is an asynchronous socket API available within .NET, and you may choose to use that instead of manually created threads, but sockets are still a valid threading example.

The best example of this that I have seen is located here at this link. The basic idea when working with sockets is that you have a server and n-many clients. The server is run (main thread is active), and then for each client connection request that is made, a new thread is created to deal with the client. At the client end, it is typical that a client should be able to receive messages from another client (via the server) and the client should also allow the client user to type messages.

Let us just think about the client for a minute. The client is able to send messages to other clients (via the server), so that implies that there is a thread that needs to be able to respond to data that the user enters. The client should also be able to show messages from other clients (via the server), so this implies that this also needs to be on a thread. If we use the same thread to listen to incoming messages from other clients, we would block the ability to type new data to send to other clients.

I don't want to labour this example as it's not the main drive of this article, but I thought it may be worth talking about, just so you can see what sort of things you are up against when you start to use threads, and how they can actually be helpful.

In this section, I will discuss some common traps when working with threads. This is by no means all the traps, rather some of the most common errors.

Execution Order

If we consider the following code example (attached demo ThreadTrap1 project):

    using System;
    using System.Threading;

    namespace ThreadTrap1
    {
        class Program
        {
            static void Main(string[] args)
            {
                Thread T1 = new Thread(new ThreadStart(Increment));
                Thread T2 = new Thread(new ThreadStart(Increment));
                T1.Name = "T1";
                T2.Name = "T2";
                T1.Start();
                T2.Start();
                Console.ReadLine();
            }

            // Runs on both threads: prints the thread name every 10000
            // iterations, then reports completion once the loop is done.
            private static void Increment()
            {
                for (int i = 0; i < 100000; i++)
                {
                    if (i % 10000 == 0)
                        Console.WriteLine("Thread Name {0}",
                            Thread.CurrentThread.Name);
                }

                WriteDone(Thread.CurrentThread.Name);
            }

            private static void WriteDone(string threadName)
            {
                switch (threadName)
                {
                    case "T1":
                        Console.WriteLine("T1 Finished");
                        break;
                    case "T2":
                        Console.WriteLine("T2 Finished");
                        break;
                }
            }
        }
    }

From looking at this code, one would assume that the thread named T1 would always finish first, as it is the one that is started first. However, this is not the case; it may finish first sometimes, and other times, it may not. See the two screenshots below that were taken from two different runs of this same code:

In this screenshot, T1 does finish first:

In this screenshot, T2 finishes first:

So this is a trap; never assume the threads run in the order you start them in.

Execution Order / Unsynchronized Code

Consider the following code example (attached demo ThreadTrap2 project):

    using System;
    using System.Threading;

    namespace ThreadTrap2
    {
        class Program
        {
            // Shared by both threads and deliberately not synchronized.
            protected static long sharedField = 0;

            static void Main(string[] args)
            {
                Thread T1 = new Thread(new ThreadStart(Increment));
                Thread T2 = new Thread(new ThreadStart(Increment));
                T1.Name = "T1";
                T2.Name = "T2";
                T1.Start();
                T2.Start();
                Console.ReadLine();
            }

            private static void Increment()
            {
                for (int i = 0; i < 100000; i++)
                {
                    if (i % 10000 == 0)
                        Console.WriteLine("Thread Name {0}, Shared value ={1}",
                            Thread.CurrentThread.Name, sharedField.ToString());

                    // Unsynchronized read-modify-write: the braces keep this
                    // inside the loop, so both threads race on every iteration.
                    sharedField++;
                }

                WriteDone(Thread.CurrentThread.Name);
            }

            private static void WriteDone(string threadName)
            {
                switch (threadName)
                {
                    case "T1":
                        Console.WriteLine("T1 Finished, Shared value ={0}",
                            sharedField.ToString());
                        break;
                    case "T2":
                        Console.WriteLine("T2 Finished, Shared value ={0}",
                            sharedField.ToString());
                        break;
                }
            }
        }
    }

This code is similar to the previous example; we still can't rely on the execution order of the threads. Things are also a little worse this time as I introduced a shared field that the two threads have access to. It can be seen in the screenshots below that we get different values on different runs of the code. This is fairly bad news; imagine this was your bank account. We can solve these issues using "Synchronization", as we will see in a future article in this series.

This screenshot shows the result of the first run, and we get these end results:

This screenshot shows the result of another run, and we get different end results. Oh, bad news.

So this is a trap; never assume threads and shared data play well together, because they don't.
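Synchronization proper is left for a later article, but as a small taste, here is one possible way to make the shared counter above give the same answer on every run, using Interlocked.Increment. This is a sketch rather than the approach the series goes on to use, and the SafeCounterSketch name is invented for it.

```csharp
using System;
using System.Threading;

public static class SafeCounterSketch
{
    private static long sharedField;

    // Runs on both threads: each adds 100000 to the shared field.
    private static void Increment()
    {
        for (int i = 0; i < 100000; i++)
        {
            // Atomic read-modify-write, so no increments are lost.
            Interlocked.Increment(ref sharedField);
        }
    }

    public static long Run()
    {
        sharedField = 0;

        Thread t1 = new Thread(Increment);
        Thread t2 = new Thread(Increment);
        t1.Start();
        t2.Start();

        // Wait for both threads before reading the result.
        t1.Join();
        t2.Join();

        return Interlocked.Read(ref sharedField);
    }

    public static void Main()
    {
        Console.WriteLine(Run()); // prints 200000
    }
}
```

The order in which the two threads run is still not predictable; only the final total is.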

Loops

Consider the following problem. "The system must send an invoice to each user who has placed an order. This process should run in the background and should not have any adverse effect on the user interface."

Consider the following code example (attached demo ThreadTrap3 project).

Don't run this, it's just to show a bad example.

    using System;
    using System.Collections.Generic;
    using System.Threading;

    namespace ThreadTrap3
    {
        class Program
        {
            static void Main(string[] args)
            {
                List<Customer> custs = new List<Customer>();
                custs.Add(new Customer { CustomerEmail = "fred@gmail.com",
                    InvoiceNo = 1, Name = "fred" });
                custs.Add(new Customer { CustomerEmail = "same@gmail.com",
                    InvoiceNo = 2, Name = "sam" });
                custs.Add(new Customer { CustomerEmail = "john@gmail.com",
                    InvoiceNo = 3, Name = "john" });
                custs.Add(new Customer { CustomerEmail = "ted@gmail.com",
                    InvoiceNo = 4, Name = "ted" });

                InvoiceThread.CreateAllInvoices(custs);
                Console.ReadLine();
            }
        }

        public class InvoiceThread
        {
            // Note: this static field is shared by every thread started below,
            // so a thread may see a different customer from the one intended.
            private static Customer currentCustomer;

            public static void CreateAllInvoices(List<Customer> customers)
            {
                // Bad: one brand new thread per customer.
                foreach (Customer cust in customers)
                {
                    currentCustomer = cust;
                    Thread thread = new Thread(new ThreadStart(SendCustomerInvoice));
                    thread.Start();
                }
            }

            private static void SendCustomerInvoice()
            {
                Console.WriteLine("Send invoice {0}, to Customer {1}",
                    currentCustomer.InvoiceNo.ToString(),
                    currentCustomer.Name);
            }
        }

        public class Customer
        {
            public string Name { get; set; }
            public string CustomerEmail { get; set; }
            public int InvoiceNo { get; set; }
        }
    }

This example is bad as it creates a new thread for every customer that it needs to send an invoice to.

    foreach (Customer cust in customers)
    {
        currentCustomer = cust;
        Thread thread = new Thread(new ThreadStart(SendCustomerInvoice));
        thread.Start();
    }

But just why is this so bad? It seems to make some sort of sense; after all, it could take quite a while to send an invoice by email. So why not thread it? When we create a new thread within a loop as I have done here, each thread needs to be allocated some CPU time. With enough threads, the CPU spends so much time context switching between them that very little of the actual thread work gets done, and the system may even appear to lock up. (A context switch consists of storing context information from the CPU registers to the current thread's kernel stack, and loading the context information to the CPU from the kernel stack of the thread selected for execution.)

That's one thing; there is also quite a lot of overhead with creating a thread to begin with. This is why a ThreadPool class exists. We will be seeing that in a later article.

What would make more sense is to have a single background thread and use that to send out all the invoices, or use a thread pool, where once a thread is finished, it can go back into a shared pool. We will be looking at thread pools in a subsequent article in this series.
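Thread pools get a proper treatment later in the series, but to make the suggestion above concrete, here is a rough sketch of queuing the invoices to the ThreadPool instead of creating a thread per customer. It is an illustration only: PooledInvoiceSketch and SendAll are invented names, customers are reduced to plain strings, and writing to the console stands in for sending the email.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

public static class PooledInvoiceSketch
{
    // Queues one work item per customer and returns how many were processed.
    public static int SendAll(IList<string> customerNames)
    {
        int sent = 0;
        int pending = customerNames.Count;
        if (pending == 0)
            return 0;

        using (ManualResetEvent allDone = new ManualResetEvent(false))
        {
            foreach (string name in customerNames)
            {
                // The customer is passed in as the state argument, so each
                // work item gets its own value rather than a shared field.
                ThreadPool.QueueUserWorkItem(delegate(object state)
                {
                    Console.WriteLine("Send invoice to Customer {0}", (string)state);
                    Interlocked.Increment(ref sent);

                    // The last work item to finish signals the waiting caller.
                    if (Interlocked.Decrement(ref pending) == 0)
                        allDone.Set();
                }, name);
            }

            allDone.WaitOne();
        }

        return sent;
    }

    public static void Main()
    {
        List<string> names = new List<string> { "fred", "sam", "john", "ted" };
        Console.WriteLine("Invoices sent: {0}", SendAll(names));
    }
}
```

The pool reuses a limited set of threads, so a thousand customers does not mean a thousand threads. The order in which the invoices are printed is still not guaranteed.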

Locks Held For Too Long

We have not covered locks yet (Part 3 will talk about these), so I don't want to spend too much time on this one, but I will just briefly mention them.

We can imagine two or more threads sharing some common data, which we need to ensure is kept safe. Now in .NET, there are various ways of doing this, one of which is by using the "lock" keyword (we will cover this in Part 3), which ensures mutually exclusive access to the code within the "lock" section. One possible problem may be that a programmer locks an entire method to try and ensure the shared data is kept safe, but really they only needed to lock a couple of lines of code that dealt with the shared data. I think a good description I once heard for this called it "Lock Granularity", which I think sums it up quite well. Basically, only lock what you really need to lock.
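Locks are covered properly in Part 3, but the granularity point above can be sketched briefly. In this hypothetical OrderTotals class (all names are made up for the example), the calculation on local data happens outside the lock, and only the update of the shared field is protected.

```csharp
using System;
using System.Threading;

public class OrderTotals
{
    private readonly object sync = new object();
    private long total;

    public void Add(int quantity, int unitPrice)
    {
        // Works only on local data, so it needs no lock.
        long lineTotal = (long)quantity * unitPrice;

        // Lock only the lines that touch the shared field.
        lock (sync)
        {
            total += lineTotal;
        }
    }

    public long Total
    {
        get { lock (sync) { return total; } }
    }

    // Two threads each add 1000 order lines of 2 items at a price of 5.
    public static long Demo()
    {
        OrderTotals totals = new OrderTotals();
        ThreadStart work = delegate()
        {
            for (int i = 0; i < 1000; i++)
                totals.Add(2, 5);
        };

        Thread t1 = new Thread(work);
        Thread t2 = new Thread(work);
        t1.Start();
        t2.Start();
        t1.Join();
        t2.Join();
        return totals.Total;
    }

    public static void Main()
    {
        Console.WriteLine(Demo()); // prints 20000
    }
}
```

Here the work outside the lock is trivial, but if it were a slow calculation or an I/O call, holding the lock around it would force every other thread to queue up for no benefit.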

We're Done

Well, that's all I wanted to say this time. Threading is a complex subject, and as such, this series will be quite hard, but I think worth a read.

Next Time

Next time we will be looking at synchronization.

Could I just ask, if you liked this article, could you please vote for it, as that will tell me whether this threading crusade that I am about to embark on will be worth creating articles for.

I thank you very much.

Bibliography

- Threading in C#, Joseph Albahari

- How To Stop a Thread in .NET (and Why Thread.Abort is Evil), Ian Griffiths

- System.Threading MSDN page

- Visual Basic .NET Threading, Wrox