Introduction

I'm afraid to say that I am just one of those people who, unless I am doing something, is bored. So now that I finally feel I have learnt the basics of WPF, it is time to turn my attention to other matters.

I have a long list of things that demand my attention, such as WCF, WF, and the CLR via C# (version 2) book, but I recently went for a new job (and got it, but turned it down in the end) which required me to know a lot about threading. Whilst I consider myself to be pretty good with threading, I thought I could always be better. So as a result of that, I have decided to dedicate myself to writing a series of articles on threading in .NET.

This series will undoubtedly owe much to an excellent Visual Basic .NET Threading Handbook that I bought that is nicely filling the MSDN gaps for me and now you.

I suspect this topic will range from simple to medium to advanced, and it will cover a lot of stuff that will be in MSDN, but I hope to give it my own spin also. So please forgive me if it does come across a bit MSDN like.

I don't know the exact schedule, but it may end up being something like:

I guess the best way is to just crack on. One note though before we start, I will be using C# and Visual Studio 2008.

What I'm going to attempt to cover in this article will be:

This article will be all about thread pooling and the ThreadPool class.

The Need For Thread Pooling

There is a real need for thread pooling; if we remember the previous articles, we discovered there was certainly an overhead incurred by having a new thread. This is something that the ThreadPool addresses. Here are some reasons why thread pooling is essential:

- Thread pooling improves the response time of an application, as threads are already available within a pool of threads and as such don't need to be created.

- Thread pooling saves the overhead of creating a new thread and claiming back its resources when the thread is finished.

- Thread pooling optimizes the current time slices, according to the current process running.

- Thread pooling allows us to create several tasks without having to assign a priority for each task (though this could be something we want to do; more on this later).

- Thread pooling enables us to pass state information as an object to the procedure arguments for the task that is being executed.

- Thread pooling can be used to fix a maximum number of threads within the client application.

Thread Pooling Concept

One of the major problems affecting the responsiveness of multi-threaded applications is the time spent spawning threads for new tasks.

For example, imagine a web server: a multi-threaded application that can service several client requests simultaneously. For argument's sake, let's assume that 10 clients are accessing the web server at the same time:

If the server operates a thread per client policy, it will create 10 new threads to service the 10 clients. There is the overhead of firstly creating the threads and then managing the threads and their associated resources through their entire lifecycle. It is also a consideration that the machine may actually run out of resources at some point.

As an alternative, if the web server used a pool of threads to satisfy client requests, then it would save the time involved with spawning a new thread each time a client request is seen.

This is the exact principle behind thread pooling.

The Windows OS maintains a pool of threads for servicing requests. If our application requests a new thread, Windows will try to fetch it from the pool. If the pool is empty, it will spawn a new thread and give it to us. Windows will dynamically adjust the thread pool size to improve the response time for our application.

Once a thread completes its assigned task, it returns to the pool and waits for the next assignment.
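This return-to-the-pool behaviour can be observed with a small sketch. It queues a handful of short work items one at a time and records the `ManagedThreadId` each one ran on. The names `ReuseDemo` and `RunSequentially` are made up for this illustration and are not part of the demo project. On most machines the same ID tends to show up more than once, but the pool is free to pick any thread, so treat the output as indicative only.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

namespace ThreadReuseSketch
{
    public static class ReuseDemo
    {
        // Runs 'count' short work items one after another and returns the
        // managed thread ID that executed each of them.
        public static List<int> RunSequentially(int count)
        {
            List<int> ids = new List<int>();
            using (AutoResetEvent done = new AutoResetEvent(false))
            {
                for (int i = 0; i < count; i++)
                {
                    ThreadPool.QueueUserWorkItem(delegate(object state)
                    {
                        ids.Add(Thread.CurrentThread.ManagedThreadId);
                        done.Set(); // tell the caller this item has finished
                    });

                    // Wait for this item before queuing the next one, so the
                    // thread that ran it has a chance to go back to the pool.
                    done.WaitOne();
                }
            }
            return ids;
        }

        public static void Main()
        {
            foreach (int id in RunSequentially(5))
            {
                Console.WriteLine("Work item ran on pool thread {0}", id);
            }
        }
    }
}
```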

The Size of the Thread Pool

The .NET Framework provides the ThreadPool class, located in the System.Threading namespace, for using thread pools in our applications. If work is queued while one of the threads in the pool is idle, the pool hands that work to the idle thread so that all the processors are kept busy. If all the threads in the pool are busy and work is pending, then the ThreadPool will spawn new threads to complete the pending work. However, the number of threads created cannot exceed the maximum number specified. You can alter the size of the pool using the ThreadPool.SetMaxThreads() and ThreadPool.SetMinThreads() methods.

In the case of additional thread requirements, the requests are queued until some thread finishes its assigned task and returns to the pool.
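As a rough sketch of how the pool limits can be inspected and nudged, the code below reads the minimum, maximum and currently available worker thread counts, then asks for a slightly higher minimum. The class name `PoolSizeDemo`, the helper `GetWorkerThreadCounts` and the choice of "two more threads" are illustrative assumptions only; the actual numbers depend on the machine and the runtime version.

```csharp
using System;
using System.Threading;

namespace PoolSizeSketch
{
    public static class PoolSizeDemo
    {
        // Returns { min, max, available } for the pool's worker threads.
        public static int[] GetWorkerThreadCounts()
        {
            int minWorker, minIo, maxWorker, maxIo, freeWorker, freeIo;
            ThreadPool.GetMinThreads(out minWorker, out minIo);
            ThreadPool.GetMaxThreads(out maxWorker, out maxIo);
            ThreadPool.GetAvailableThreads(out freeWorker, out freeIo);
            return new int[] { minWorker, maxWorker, freeWorker };
        }

        public static void Main()
        {
            int[] counts = GetWorkerThreadCounts();
            Console.WriteLine("Worker threads: min={0}, max={1}, available={2}",
                counts[0], counts[1], counts[2]);

            // Ask the pool to keep two more worker threads ready.
            // SetMinThreads returns false if the request is rejected.
            int minWorker, minIo;
            ThreadPool.GetMinThreads(out minWorker, out minIo);
            bool accepted = ThreadPool.SetMinThreads(minWorker + 2, minIo);
            Console.WriteLine("SetMinThreads accepted: {0}", accepted);
        }
    }
}
```

Both setters return a bool rather than throwing when the requested value is not acceptable, so it is worth checking the result.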

Thread Pooling Glitches

There is no doubt that the ThreadPool is a useful class when developing multi-threaded applications. There are, however, some things that should be considered before using a ThreadPool; these are as follows:

- The CLR assigns the threads from the ThreadPool to tasks, and releases them back to the ThreadPool when the task is completed. There is no way to cancel a task once it starts.

- Thread pooling is effective for short lived tasks. A ThreadPool shouldn't really be used for long living tasks, as you would effectively be tying up one of the available ThreadPool threads for a long time, and the ready availability of threads is one of the things that makes the ThreadPool so appealing in the first place.

- All the threads in the ThreadPool are in the multi-threaded apartment. If we want to place our threads in a single threaded apartment, the ThreadPool is not the way to go.

- If we need to identify the thread and perform tasks such as start/suspend/abort on it, then the ThreadPool is not the way to do this.

- There can only be one ThreadPool associated with a given process.

- If a task within the ThreadPool becomes locked, then the thread is never released back to the ThreadPool.
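Since the ThreadPool offers no way to cancel a work item from the outside, one common workaround is to make the work item cooperate: it checks a flag on its state object and leaves of its own accord when asked. The sketch below shows that idea. `StoppableWork`, `StopDemo` and `RunAndStop` are hypothetical names for this illustration, and the approach only helps if the work item polls the flag regularly.

```csharp
using System;
using System.Threading;

namespace CooperativeStopSketch
{
    // Hypothetical state object: carries a stop flag and a completion signal.
    public class StoppableWork
    {
        private volatile bool stopRequested;
        public readonly ManualResetEvent Finished = new ManualResetEvent(false);
        public int Iterations; // loop passes completed before stopping

        public void RequestStop() { stopRequested = true; }
        public bool StopRequested { get { return stopRequested; } }
    }

    public static class StopDemo
    {
        static void Worker(object stateInfo)
        {
            StoppableWork work = (StoppableWork)stateInfo;

            // Do small chunks of work and poll the flag between chunks.
            while (!work.StopRequested)
            {
                work.Iterations++;
                Thread.Sleep(10);
            }
            work.Finished.Set();
        }

        // Lets the work item run for a while, asks it to stop, and
        // returns how many iterations it managed.
        public static int RunAndStop(int runForMilliseconds)
        {
            StoppableWork work = new StoppableWork();
            ThreadPool.QueueUserWorkItem(new WaitCallback(Worker), work);

            Thread.Sleep(runForMilliseconds);
            work.RequestStop();
            work.Finished.WaitOne(); // the thread is back in the pool after this
            work.Finished.Close();
            return work.Iterations;
        }

        public static void Main()
        {
            Console.WriteLine("Work item stopped after {0} iterations", RunAndStop(200));
        }
    }
}
```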

Exploring the ThreadPool

The main methods of the ThreadPool class are listed below:

| Name | Description |
|---|---|
| BindHandle | Overloaded. Binds an Operating System handle to the ThreadPool. |
| GetAvailableThreads | Retrieves the difference between the maximum number of thread pool threads returned by the GetMaxThreads method and the number currently active. |
| GetMaxThreads | Retrieves the number of requests to the thread pool that can be active concurrently. All requests above that number remain queued until the thread pool threads become available. |
| GetMinThreads | Retrieves the number of idle threads the thread pool maintains in anticipation of new requests. |
| QueueUserWorkItem | Overloaded. Queues a method for execution. The method executes when a thread pool thread becomes available. |
| RegisterWaitForSingleObject | Overloaded. Registers a delegate that is waiting for a WaitHandle. |
| SetMaxThreads | Sets the number of requests to the thread pool that can be active concurrently. All requests above that number remain queued until the thread pool threads become available. |
| SetMinThreads | Sets the number of idle threads the thread pool maintains in anticipation of new requests. |
| UnsafeQueueNativeOverlapped | Queues an overlapped I/O operation for execution. |
| UnsafeQueueUserWorkItem | Queues the specified delegate to the thread pool. |
| UnsafeRegisterWaitForSingleObject | Overloaded. Registers a delegate to wait for a WaitHandle. |

-- From MSDN.

By far the most common methods that I think you will see used are the following methods:

- QueueUserWorkItem

- RegisterWaitForSingleObject

So the demo code will focus on these two methods.

Demo Code

Like I say, the two most common methods of the ThreadPool are:

- QueueUserWorkItem

- RegisterWaitForSingleObject

So let's consider these in turn.

QueueUserWorkItem(WaitCallback)

Queues a method for execution. The method executes when a thread pool thread becomes available. This is an overloaded method that allows you to create a new ThreadPool task, either using a state object or without a state object. This first example puts a new task on the ThreadPool, but doesn't use any state object.

    using System;
    using System.Threading;

    namespace QueueUserWorkItemNoState
    {
        class Program
        {
            static void Main(string[] args)
            {
                // Queue the work item; it runs when a pool thread is free.
                ThreadPool.QueueUserWorkItem(new WaitCallback(ThreadProc));

                Console.WriteLine("Main thread does some work, then sleeps.");
                // Give the pool thread time to run before Main carries on.
                Thread.Sleep(1000);
                Console.WriteLine("Main thread exits.");
                Console.ReadLine();
            }

            // Executed on a thread pool thread; stateInfo is null here.
            static void ThreadProc(Object stateInfo)
            {
                Console.WriteLine("Hello from the thread pool.");
            }
        }
    }

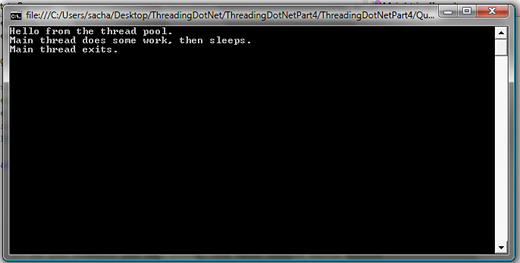

Which is about as simple as it gets. This results in the following:

The second overload of QueueUserWorkItem allows us to pass state to the task. Let's see that now, shall we?

QueueUserWorkItem(WaitCallback, Object)

Queues a method for execution, and specifies an object containing data to be used by the method. The method executes when a thread pool thread becomes available.

This example uses a simple ReportData state object to pass to the ThreadPool worker item (task).

    using System;
    using System.Threading;

    namespace QueueUserWorkItemWithState
    {
        // Simple state object handed to the work item.
        public class ReportData
        {
            public int reportID;

            public ReportData(int reportID)
            {
                this.reportID = reportID;
            }
        }

        class Program
        {
            static void Main(string[] args)
            {
                ReportData rd = new ReportData(15);
                // The second argument arrives in ThreadProc as stateInfo.
                ThreadPool.QueueUserWorkItem(new WaitCallback(ThreadProc), rd);

                Console.WriteLine("Main thread does some work, then sleeps.");
                Thread.Sleep(1000);
                Console.WriteLine("Main thread exits.");
                Console.ReadLine();
            }

            static void ThreadProc(Object stateInfo)
            {
                // Cast the state back to the type that was queued.
                ReportData rd = (ReportData)stateInfo;
                Console.WriteLine(string.Format("report {0} is running", rd.reportID));
            }
        }
    }

Which results in the following:

This demonstrates how to create simple tasks that can be managed by the ThreadPool. But as we know from Part 3 of this series, we sometimes need to synchronize threads to make sure that they are well behaved and run in the correct order. This is fairly easy to do with threads that we manually created; we could use WaitHandles to ensure that our threads run as expected. But how does all this work when working with the ThreadPool?

Well, luckily, the ThreadPool also has another method (ThreadPool.RegisterWaitForSingleObject()) that allows us to provide a WaitHandle, such that we can ensure the ThreadPool worker items (tasks) are well behaved and run in a certain order.

The RegisterWaitForSingleObject method has the following overloads:

| Method | Description |
|---|---|
| RegisterWaitForSingleObject(WaitHandle, WaitOrTimerCallback, Object, Int32, Boolean) | Registers a delegate to wait for a WaitHandle, specifying a 32-bit signed integer for the time-out in milliseconds. |
| RegisterWaitForSingleObject(WaitHandle, WaitOrTimerCallback, Object, Int64, Boolean) | Registers a delegate to wait for a WaitHandle, specifying a 64-bit signed integer for the time-out in milliseconds. |
| RegisterWaitForSingleObject(WaitHandle, WaitOrTimerCallback, Object, TimeSpan, Boolean) | Registers a delegate to wait for a WaitHandle, specifying a TimeSpan value for the time-out. |
| RegisterWaitForSingleObject(WaitHandle, WaitOrTimerCallback, Object, UInt32, Boolean) | Registers a delegate to wait for a WaitHandle, specifying a 32-bit unsigned integer for the time-out in milliseconds. |

I shall not be looking at all of these, but shall look at one of them just to outline the principle. The reader is encouraged to read around the rest of these overloads and also the other ThreadPool methods.

The attached demo project covers usage of the following overload.

RegisterWaitForSingleObject(WaitHandle, WaitOrTimerCallback, Object, Int32, Boolean)

For this example, I will create two tasks within the ThreadPool. One is a simple task with a state object, enqueued using ThreadPool.QueueUserWorkItem(), which we saw earlier. The other is registered using RegisterWaitForSingleObject(WaitHandle, WaitOrTimerCallback, Object, Int32, Boolean); it is handed to the ThreadPool, but will not be able to execute until the WaitHandle on which it is waiting is signaled.

    using System;
    using System.Threading;

    namespace RegisterWaitForSingleObject
    {
        public class ThreadIdentity
        {
            public string threadName;

            public ThreadIdentity(string threadName)
            {
                this.threadName = threadName;
            }
        }

        class Program
        {
            // Starts unsignaled, so the registered wait cannot fire yet.
            static AutoResetEvent ar = new AutoResetEvent(false);

            static void Main(string[] args)
            {
                ThreadIdentity ident1 =
                    new ThreadIdentity("RegisterWaitForSingleObject");
                // -1 = wait indefinitely; true = run the callback only once.
                ThreadPool.RegisterWaitForSingleObject(ar,
                    new WaitOrTimerCallback(ThreadProc),
                    ident1, -1, true);

                ThreadIdentity ident2 =
                    new ThreadIdentity("QueueUserWorkItem");
                ThreadPool.QueueUserWorkItem(new WaitCallback(ThreadProc), ident2);

                Console.WriteLine("Main thread does some work, then sleeps.");
                Thread.Sleep(5000);
                Console.WriteLine("Main thread exits. " +
                    DateTime.Now.ToLongTimeString());
                Console.ReadLine();
            }

            // WaitCallback: runs straight away, then signals the AutoResetEvent.
            static void ThreadProc(Object stateInfo)
            {
                ThreadIdentity ident1 = (ThreadIdentity)stateInfo;
                Console.WriteLine(string.Format(
                    "Hello from thread {0} at time {1}",
                    ident1.threadName, DateTime.Now.ToLongTimeString()));
                Thread.Sleep(2000);
                ar.Set();
            }

            // WaitOrTimerCallback: runs only once the AutoResetEvent is signaled.
            static void ThreadProc(Object stateInfo, bool timedOut)
            {
                ThreadIdentity ident1 = (ThreadIdentity)stateInfo;
                Console.WriteLine(string.Format("Hello from thread {0} at time {1}",
                    ident1.threadName, DateTime.Now.ToLongTimeString()));
            }
        }
    }

Which results in the following, where it can be seen that the first work item (task) that was enqueued was the one done by using the RegisterWaitForSingleObject() method. Because it was registered with a WaitHandle, it is not able to start until that WaitHandle is signaled, which only happens when the other work item, enqueued using the QueueUserWorkItem() method, completes.

Using this code, it should be pretty easy to see how the rest of the overloads for the RegisterWaitForSingleObject() methods actually work.
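To make the time-out side of things a little more concrete, here is a minimal sketch using the TimeSpan overload. It registers a wait, optionally signals the handle, and reports the value of the `timedOut` argument the callback received. `TimeoutDemo` and `WaitAndReport` are names invented for this sketch, and the time-out values are arbitrary.

```csharp
using System;
using System.Threading;

namespace RegisterWaitTimeoutSketch
{
    public static class TimeoutDemo
    {
        // Registers a one-shot wait with the given time-out and returns the
        // 'timedOut' flag passed to the callback: false if the handle was
        // signaled, true if the time-out elapsed first.
        public static bool WaitAndReport(bool signalBeforeTimeout, TimeSpan timeout)
        {
            bool timedOutSeen = false;
            using (AutoResetEvent handle = new AutoResetEvent(false))
            using (ManualResetEvent callbackDone = new ManualResetEvent(false))
            {
                RegisteredWaitHandle registration = ThreadPool.RegisterWaitForSingleObject(
                    handle,
                    delegate(object state, bool timedOut)
                    {
                        timedOutSeen = timedOut;
                        callbackDone.Set();
                    },
                    null,      // no state object needed here
                    timeout,
                    true);     // executeOnlyOnce

                if (signalBeforeTimeout)
                {
                    handle.Set();
                }

                callbackDone.WaitOne();
                // Release the registration once we are finished with it.
                registration.Unregister(null);
            }
            return timedOutSeen;
        }

        public static void Main()
        {
            Console.WriteLine("Signaled:   timedOut = {0}",
                WaitAndReport(true, TimeSpan.FromSeconds(5)));
            Console.WriteLine("Unsignaled: timedOut = {0}",
                WaitAndReport(false, TimeSpan.FromMilliseconds(200)));
        }
    }
}
```

The first call should report False, because the handle is signaled well before the five seconds are up. The second should report True, because nobody ever signals the handle and the callback runs on time-out instead.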

Further Reading

There is an excellent article right here at CodeProject, the "Smart Thread Pool" project by Ami Bar, which has the following features:

- The number of threads dynamically changes according to the workload on the threads in the pool.

- Work items can return a value.

- A work item can be cancelled if it hasn't been executed yet.

- The caller thread's context is used when the work item is executed (limited).

- Usage of minimum number of Win32 event handles, so the handle count of the application won't explode.

- The caller can wait for multiple or all the work items to complete.

- A work item can have a PostExecute callback, which is called as soon as the work item is completed.

- The state object that accompanies the work item can be disposed automatically.

- Work item exceptions are sent back to the caller.

- Work items have priority.

- Work items group.

- The caller can suspend the start of a thread pool and work items group.

- Threads have priority.

It's really good actually.

The "Smart Thread Pool" project by Ami Bar can be found at this URL: Smart Thread Pool.
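For comparison, two of those features (work items returning a value, and the caller waiting for all work items) can be roughly approximated with the standard ThreadPool by putting a result slot on the state object and counting down as items finish. The sketch below is only that, an approximation using the built-in class. It does not use or imitate the Smart Thread Pool API, and `SquareJob`, `ReturnValueDemo` and `SquareAll` are hypothetical names.

```csharp
using System;
using System.Threading;

namespace ReturnValueSketch
{
    // Hypothetical state object: holds the input and a slot for the result.
    public class SquareJob
    {
        public int Input;
        public int Result;
    }

    public static class ReturnValueDemo
    {
        // Squares every input on the thread pool and waits for all of them.
        public static int[] SquareAll(int[] inputs)
        {
            SquareJob[] jobs = new SquareJob[inputs.Length];
            int pending = inputs.Length;

            // Already signaled when there is nothing to wait for.
            using (ManualResetEvent allDone = new ManualResetEvent(inputs.Length == 0))
            {
                for (int i = 0; i < inputs.Length; i++)
                {
                    jobs[i] = new SquareJob();
                    jobs[i].Input = inputs[i];

                    ThreadPool.QueueUserWorkItem(delegate(object state)
                    {
                        SquareJob job = (SquareJob)state;
                        job.Result = job.Input * job.Input;

                        // The last work item to finish wakes the caller.
                        if (Interlocked.Decrement(ref pending) == 0)
                        {
                            allDone.Set();
                        }
                    }, jobs[i]);
                }
                allDone.WaitOne();
            }

            int[] results = new int[jobs.Length];
            for (int i = 0; i < jobs.Length; i++)
            {
                results[i] = jobs[i].Result;
            }
            return results;
        }

        public static void Main()
        {
            int[] results = SquareAll(new int[] { 1, 2, 3, 4 });
            string[] text = Array.ConvertAll(results, delegate(int r) { return r.ToString(); });
            Console.WriteLine(string.Join(", ", text)); // prints "1, 4, 9, 16"
        }
    }
}
```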

We're Done

Well, that's all I wanted to say this time. I just wanted to say, I am fully aware that this article borrows a lot of material from various sources; I do, however, feel that it could alert a potential threading newbie to classes/objects that they simply did not know to look up. For that reason, I still maintain there should be something useful in this article. Well, that was the idea anyway. I just hope you agree; if so tell me, and leave a vote.

Next Time

Next time, we will be looking at Threading in UIs.


Bibliography

- Threading in C#, Joseph Albahari

- System.Threading MSDN page

- Threading Objects and Features MSDN page

- Visual Basic .NET Threading, Wrox