Introduction

Recently, I wrote an article that examined the basics of Windows Memory Management. In response to feedback on that article, I am writing this paper as an extension of it. Application developers, systems administrators, and device driver writers often find that something on their system has begun to leak memory. Understanding the basics of the Windows Memory Manager, the process memory counters used to instrument code, and the differences between those counters can lead to a more stable Operating System, improve the development process, and reduce the chance of data corruption. For instance, if an application has a bug in which it opens a resource and never closes it, the resulting leak can eventually destabilize the system and even contribute to data corruption. Catching such a bug early gives the developer the chance to capture a memory snapshot of the process' address space in the debugger and perform triage.

The components of the Windows Memory Manager are part of the Windows Executive and therefore reside in the file Ntoskrnl.exe; no part of the memory manager exists in the Hardware Abstraction Layer. The memory manager creates the illusion that an executable image is fully loaded the moment the application starts. In reality, only the pieces of the executable that are actually referenced are loaded, as they are needed.

Windows IT people call this the "lazy allocator"; the underlying mechanism is demand paging. If you launch an application such as Notepad, Windows does not load the entire application and its DLLs into physical memory. Instead, it does so as the application demands: as Notepad touches code pages and data pages, the memory manager connects the virtual (logical) memory to physical memory, reading contents off disk as needed. We'll see how Windows uses these memory demands and paging rates to decide how much physical memory to assign to a process (its working set). Windows also records an application's start-up demands in a prefetch file (logical prefetching). Logical prefetching, introduced in Windows XP, uses these recordings to speed up later launches of the same application.
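
The "lazy" behavior can be pictured with a minimal sketch of demand paging. The `LazyImage` class and the byte string standing in for the executable on disk are hypothetical illustrations, not a Windows API: nothing is read at "launch", and each 4 KB page is read only the first time it is touched.

```python
PAGE_SIZE = 4096  # 4 KB small pages, as on x86/x64


class LazyImage:
    """Hypothetical model of an executable image that is mapped on demand."""

    def __init__(self, image_bytes: bytes):
        self.image = image_bytes   # stands in for the image file on disk
        self.resident = {}         # page number -> page contents in "RAM"
        self.disk_reads = 0        # number of pages read off disk so far

    def touch(self, address: int) -> int:
        """Return the byte at address, faulting its page in if needed."""
        page = address // PAGE_SIZE
        if page not in self.resident:      # page fault: read only this page
            start = page * PAGE_SIZE
            self.resident[page] = self.image[start:start + PAGE_SIZE]
            self.disk_reads += 1
        return self.resident[page][address % PAGE_SIZE]


# "Launch" a 1 MB image (256 pages): nothing is resident until it is touched.
image = LazyImage(bytes(range(256)) * 4096)
image.touch(0)            # first code page is read from disk
image.touch(100)          # same page again: no new disk read
image.touch(5 * 4096)     # a page deeper in the image
print(image.disk_reads, "of", len(image.image) // PAGE_SIZE, "pages read")
```

Only the two touched pages are ever read, which is the point of the "lazy" approach: start-up cost tracks what the application actually uses.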

Process Handle Leaks

Put simply, a handle is a reference to an open Operating System resource (an open instance of an object) made available to a process, such as a file, Registry key, or TCP/IP port. A handle leak normally doesn't directly affect the process' committed virtual memory. Instead, it consumes system memory, such as one of the kernel memory pools, which is system virtual memory rather than private virtual memory explicitly allocated by the process.

When a process opens a handle, the system allocates memory to store the data associated with the object and to track the handle in the process' handle table. If the process never closes its handles, that memory, which could otherwise serve other processes, stays allocated and consumes kernel memory. There is no built-in system-wide limit on the number of open handles, but there is a fixed per-process limit of about 16 million (2^24) handles. Because the handle table comes from a system-wide memory resource called paged pool, a process handle leak (normally a bug in a user application) can indirectly exhaust system memory.
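
The following is a simplified model of how an unbounded handle leak is charged to paged pool, in the spirit of `testlimit.exe -h`. The `PagedPool` and `HandleTable` classes, the flat table layout, and the 16-byte entry size are illustrative assumptions (the real handle table is a multilevel structure); only the roughly 16-million-handle per-process limit comes from the text.

```python
PAGE_SIZE = 4096
ENTRY_SIZE = 16                     # assumption: bytes per handle-table entry
MAX_HANDLES_PER_PROCESS = 2 ** 24   # about 16 million handles per process


class PagedPool:
    """Hypothetical system-wide pool shared by every process's handle table."""

    def __init__(self):
        self.bytes_in_use = 0


class HandleTable:
    """Hypothetical flat handle table that grows one page at a time."""

    def __init__(self, pool: PagedPool):
        self.pool = pool
        self.entries = []    # index -> referenced object
        self.capacity = 0    # entries that fit in the pages charged so far

    def open_handle(self, obj) -> int:
        if len(self.entries) >= MAX_HANDLES_PER_PROCESS:
            raise OSError("per-process handle limit reached")
        if len(self.entries) == self.capacity:
            # Table is full: extend it by one page charged to paged pool.
            self.pool.bytes_in_use += PAGE_SIZE
            self.capacity += PAGE_SIZE // ENTRY_SIZE
        self.entries.append(obj)
        return len(self.entries) * 4   # handle values are multiples of 4


# Like testlimit -h: one object, many handles, none ever closed.
pool = PagedPool()
table = HandleTable(pool)
the_object = object()
for _ in range(100_000):
    table.open_handle(the_object)
print(f"{len(table.entries):,} handles, {pool.bytes_in_use:,} bytes of paged pool")
```

Only the handle table grows here; the object itself is allocated once, which mirrors why paged pool, not the process' private bytes, is what climbs.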

The "-h" switch on Testlimit.exe makes the test program create a single object and then open handles to that same object repeatedly, which demonstrates how handle table growth consumes kernel memory. For example, run C:\Windows\System32> testlimit.exe -h and handles will start being created for that one object. With the System Information box open, paged kernel memory climbs sharply, while nonpaged memory barely changes: as the process creates handles, the Operating System extends the process' handle table, and each extension comes out of kernel paged pool. If we kept launching instances of Testlimit.exe with the "-h" switch, they would eventually exhaust kernel memory and bring the system to its knees. In other words, a bug in a user application can destabilize the entire system and could ultimately lead to data corruption.

Note that the handle count in the System Information box climbs into the millions; this is how you can tell you have a handle leak. To locate the leaking process, use the Process view of Process Explorer. Choose "Select Columns" from the "View" menu, open the "Process Performance" tab, and select "Handle Count". Once the column appears in the Process view, note whether each process' number stays fixed or keeps increasing. If it keeps increasing, that process is leaking handles and must be killed to release them.
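
The "fixed or increasing" check can be expressed as a small helper. This is a sketch under the assumption that you have jotted down a process' Handle Count at regular intervals; `handle_trend` and its `tolerance` parameter are hypothetical, not part of Process Explorer.

```python
def handle_trend(samples, tolerance=0):
    """Classify periodic handle-count samples as 'fixed' or 'increasing'.

    A process counts as 'increasing' only if its count never drops between
    samples and ends more than `tolerance` handles above where it started.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    never_drops = all(later >= earlier for earlier, later in zip(samples, samples[1:]))
    grew = samples[-1] - samples[0] > tolerance
    return "increasing" if never_drops and grew else "fixed"


print(handle_trend([120, 121, 119, 120]))          # fixed: normal churn
print(handle_trend([500, 9_000, 20_000, 41_000]))  # increasing: likely leaker
```

A healthy process opens and closes handles, so its count wobbles around a level; a leaker only ever climbs.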

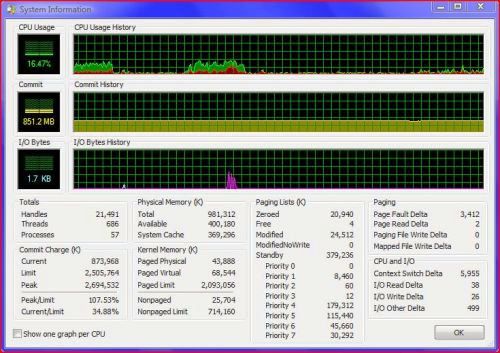

One common attribute of any leaker, whether in kernel mode or user mode, is that its current allocation is close or equal to its peak and keeps growing. Of the various process memory counters (private bytes, virtual bytes, and working set size), the private bytes counter is the most effective for spotting a leak. Note that a counter can report either a quantity (such as a number of bytes) or a rate (such as bytes per second or per some other interval). The Private Bytes column in Process Explorer reports the actual number of bytes that are private to a process. For example, the text that you type in Notepad is private data: no other process is interested in it, so it counts toward Notepad's private virtual memory, or private bytes. Shareable memory is different: several processes can use the same DLL, and that DLL is loaded only once. Below is an image of the System Information box, opened from an icon on the Process Explorer toolbar. Note the "Commit" section in the lower left-hand corner:
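
To make the quantity-versus-rate distinction and the "current near peak, always growing" rule concrete, here is a hedged sketch. The functions, thresholds, and sample values are hypothetical; the deltas play the role of a per-interval rate such as the Private Bytes Delta column.

```python
def private_bytes_deltas(samples):
    """Turn a quantity (private bytes) into a rate: change per sample interval."""
    return [later - earlier for earlier, later in zip(samples, samples[1:])]


def looks_like_leaker(samples, min_growing_fraction=0.75, peak_ratio=0.95):
    """Heuristic from the text: current allocation is near the peak and
    the allocation keeps growing (most intervals show an increase)."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    deltas = private_bytes_deltas(samples)
    growing_steps = sum(1 for d in deltas if d > 0)
    keeps_growing = growing_steps >= min_growing_fraction * len(deltas)
    near_peak = samples[-1] >= peak_ratio * max(samples)
    return keeps_growing and near_peak


MB = 1024 * 1024
steady = [21 * MB, 22 * MB, 21 * MB, 22 * MB, 21 * MB]             # hypothetical
leaker = [10 * MB, 30 * MB, 50 * MB, 49 * MB, 70 * MB, 90 * MB]    # hypothetical
print(looks_like_leaker(steady), looks_like_leaker(leaker))
```

Allowing a few non-growing intervals keeps the check from missing a leaker that occasionally frees a little memory.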

The current allocation is 873,968 KB, as stated in the Commit Charge column, and the Commit graph shows 851.2 MB, which is roughly the same. Note that there is also a Limit and a Peak. To demonstrate Testlimit.exe, a command-line tool written by Mark Russinovich, we first note the current allocation. We then run Testlimit.exe (or Testlimit64.exe on a 64-bit system) and observe a steep climb in the current allocation: a process is allocating virtual memory continuously and never releasing it, so the current allocation approaches the peak and then keeps growing past it. Testlimit.exe has an "-m" switch to leak memory. To run it, type C:\Windows\System32>testlimit.exe -m 10. This command causes the process to leak 10 MB every half second, or 20 MB per second. Note the change in the Commit graph:
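
The arithmetic behind these figures can be checked with a short sketch. The 873,968 KB reading and the 10 MB per half second leak come from the text; the Commit Limit value is hypothetical, since the text does not give one.

```python
KB_PER_MB = 1024


def leak_rate_mb_per_s(chunk_mb, interval_s):
    """Leak rate when chunk_mb is leaked every interval_s seconds."""
    return chunk_mb / interval_s


def seconds_until_limit(current_kb, limit_kb, rate_mb_per_s):
    """Time until the commit charge reaches the limit at a steady leak rate."""
    headroom_mb = max(limit_kb - current_kb, 0) / KB_PER_MB
    return headroom_mb / rate_mb_per_s


current_kb = 873_968     # Commit Charge reading from the text
limit_kb = 2_000_000     # hypothetical Commit Limit, for illustration only
rate = leak_rate_mb_per_s(10, 0.5)   # testlimit -m 10: 10 MB every 0.5 s
print(f"current: {current_kb / KB_PER_MB:.1f} MB")
print(f"leak rate: {rate:.0f} MB/s")
print(f"limit reached in about {seconds_until_limit(current_kb, limit_kb, rate):.0f} s")
```

The 873,968 KB reading works out to about 853.5 MB, close to the 851.2 MB on the graph.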

The current allocation will soon pass the Commit Peak and climb toward the Commit Limit. With Process Explorer open and the Private Bytes, Private Bytes Delta, and Private Bytes History columns placed side by side, you would see the Testlimit.exe process's history depart from a flat yellow line and approach a thick yellow line:

The Private Bytes History column shows this test program's yellow line thickening as it departs from a flat line. Notice that the leak even causes Explorer.exe to release a little memory. The Private Bytes column shows a large number of bytes private to the process, memory that cannot be shared with any other process. This information would not be revealed by the Mem Usage column in Task Manager, which reports the working set.

Those Private Bytes are not Shared

Windows automatically shares any memory that is shareable (not private to a process), which means code: the pieces of any executable or DLL. Only one copy of an executable or a DLL is in memory at any one time. To let multiple processes reference one loaded DLL, Windows uses memory-mapped files, which are internally called section objects. Memory is managed in pages: a "small" page is 4,096 bytes (4 KB) on x86 and x64 systems, whereas a large page is 2 MB on x64 (4 MB on x86 without PAE). This one-copy rule applies not only to stand-alone desktops but also to servers such as a Terminal Server.
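
A rough sketch of what sharing saves, assuming (for illustration only) that all of a hypothetical 3 MB DLL's code pages are resident and that 20 Terminal Server sessions use it. In practice only the demanded pages are resident, so real numbers are smaller.

```python
import math

SMALL_PAGE = 4 * 1024            # 4 KB small page (x86/x64)
LARGE_PAGE_X64 = 2 * 1024 * 1024 # 2 MB large page (x64)
MB = 1024 * 1024


def pages_needed(size_bytes, page_size=SMALL_PAGE):
    """Whole pages needed to hold size_bytes."""
    return math.ceil(size_bytes / page_size)


def physical_bytes(code_bytes, processes, shared=True):
    """Physical memory consumed by one image's code pages across processes."""
    copies = 1 if shared else processes
    return copies * pages_needed(code_bytes) * SMALL_PAGE


dll_size = 3 * MB   # hypothetical DLL size
sessions = 20       # hypothetical number of Terminal Server users
print(pages_needed(dll_size), "pages per copy")
print(physical_bytes(dll_size, sessions) // MB, "MB shared")
print(physical_bytes(dll_size, sessions, shared=False) // MB, "MB if every session had its own copy")
```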

If several users are logged on and using Outlook, one copy of Outlook (or the demanded pieces of it) is read off disk to be resident in memory. If a user starts using other features of Microsoft Outlook, those pieces are read off disk on demand. This indirectly defines a process' working set: the amount of physical memory that Windows assigns to a process. Every process starts out with an empty, or zero-sized, working set. As the threads running within that process begin to touch virtual addresses, the working set grows. As the system runs, the memory manager has to decide how much physical memory to give each process, as well as how much physical memory (RAM) to keep for itself, both to store cached data and to keep free. It bases that decision on each process' memory demands and paging rates.
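
A toy working set can show the "starts empty, grows as addresses are touched, gets trimmed" behavior. The `WorkingSet` class is hypothetical, and it uses simple least-recently-used replacement with a fixed maximum; the real memory manager's trimming policy is more elaborate than this.

```python
from collections import OrderedDict


class WorkingSet:
    """Toy working set: empty at start, grows on touch, trims LRU at max."""

    def __init__(self, max_pages):
        self.max_pages = max_pages
        self.pages = OrderedDict()   # resident page numbers, oldest use first
        self.faults = 0              # touches that had to add a page
        self.trimmed = []            # pages removed to make room

    def touch(self, page):
        if page in self.pages:
            self.pages.move_to_end(page)   # mark as most recently used
            return
        self.faults += 1
        if len(self.pages) >= self.max_pages:
            oldest, _ = self.pages.popitem(last=False)
            self.trimmed.append(oldest)
        self.pages[page] = None


ws = WorkingSet(max_pages=3)
for page in [1, 2, 3, 1, 4]:
    ws.touch(page)
print(list(ws.pages), ws.faults, ws.trimmed)
```

Page 1 survives because it was touched again, while page 2 is trimmed when page 4 arrives.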

Brief Overview to Understand the Benefits of Memory-Mapped Files

Most programs require some form of dynamic memory management. This need arises whenever a program must create data structures whose size cannot be determined statically when the program is built. Search trees (such as binary trees), symbol tables, and linked lists are common examples. A symbol table, for instance, records the variables and methods written in the source code (whether they come from predefined header files in C or class libraries in C++), along with details such as the line numbers where they appear; some symbols are handled by the preprocessor before the source reaches the compiler proper. The point is that the size of these data structures can change, and a program that needs such flexibility will often create a temporary file (which must be deleted before the program terminates). The Windows Memory Manager provides memory-mapped files to associate part of a process' address space directly with a file, letting the Operating System manage all data movement between the file and memory so the programmer does not have to rely on explicit I/O functions. What does that mean in practice? Dynamic memory allocated in heaps must ultimately be backed by the paging file. The Windows Memory Manager controls page movement between physical memory and the paging file, and it maps the process' virtual addresses to the paging file. When the process terminates, the space it occupied in the paging file is deallocated.
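
A minimal sketch of a memory-mapped file using Python's standard `mmap` module, which on Windows is built on the Win32 file-mapping functions. The file name and contents are made up for the demo. The program edits the file purely through memory; the Operating System moves the data between memory and disk.

```python
import mmap
import os
import tempfile

# A small hypothetical data file to map.
path = os.path.join(tempfile.mkdtemp(), "data.bin")
with open(path, "wb") as f:
    f.write(b"hello, paging world")

# Map the whole file and modify it through memory: no read()/write() calls.
with open(path, "r+b") as f:
    with mmap.mmap(f.fileno(), 0) as view:
        view[0:5] = b"HELLO"   # dirties the mapped page in memory
        view.flush()           # ask the OS to write the dirty page back

with open(path, "rb") as f:
    print(f.read())

# An anonymous mapping (fileno -1) has no named file behind it; on Windows
# it is backed by the paging file, much like heap memory.
scratch = mmap.mmap(-1, 4096)
scratch[:4] = b"temp"
scratch.close()
```

The anonymous mapping at the end is one way to get scratch space without creating and later deleting a temporary file.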

How Do We Size the Paging File?

Many technical help documents assert that the paging file should be a multiple of RAM, say 1.5 times the size of RAM. To size the paging file correctly, however, you need to understand what goes into it, so you are better off using Process Explorer to take the sum of the private virtual memory bytes of all processes system-wide and then multiplying that by something like 1.5 or 1.2. If you run several applications simultaneously, you need a larger paging file, because concurrent execution results in more modified private data being dumped into the paging file. If you run them sequentially, there is less need for a large paging file. Either way, you should not size your paging file based on the size of RAM. Nor should you simply enlarge the paging file after receiving a message box stating that you are low on virtual memory: if a leak is the cause, that only delays the same message box after the reboot.

The only data that gets sent out to the paging file is modified private virtual data, for example, the text you type into Notepad. The memory storing that text must be saved to some location on disk from which it can be re-fetched, and that area is the paging file. If Notepad's code gets pulled out of Notepad's working set, Windows can reuse that physical memory without saving it, because the code is already on disk in Notepad's image file. So code, and mapped file data that comes from files other than the paging file, can be reread from disk without ever being written to the paging file. So what gets paged out? Modified private data, not code. Even that data is written to the paging file only when necessary: when it has been pulled out of a process' working set and Windows needs to reuse the memory for another purpose.
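
The rule for what must be saved before a physical page can be reused can be summarized in a small, hypothetical decision function. It reflects the text plus one fact not stated there: writes to image pages are copy-on-write, so the modified copy becomes private and is paging-file backed.

```python
from enum import Enum


class Backing(Enum):
    IMAGE = "executable or DLL image"
    MAPPED_FILE = "mapped data file"
    PRIVATE = "private (process-only) memory"


def where_to_save(backing, modified):
    """Where a page's contents must be written before its RAM is reused,
    or None if an up-to-date copy already exists on disk."""
    if not modified:
        return None              # just reread it from its file when needed
    if backing is Backing.MAPPED_FILE:
        return "mapped file"     # modified file data goes back to its own file
    # Modified private data, including copy-on-write copies of image pages.
    return "paging file"


print(where_to_save(Backing.IMAGE, modified=False))   # Notepad's code
print(where_to_save(Backing.PRIVATE, modified=True))  # text typed into Notepad
```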

When the number of modified pages destined for the paging file reaches a threshold (a little larger than 2 MB), the modified page writer kicks in and starts writing them out, because once written they can be moved to the standby list and contribute to immediately available memory. The modified page writer is one of six key memory manager components, each running in its own kernel-mode system thread. On a priority scale of 0 to 31, the modified page writer runs at priority 17 and writes dirty pages on the modified list back to the appropriate paging files. This thread is awakened when the size of the modified list needs to be reduced.

More to the point, when the modified page list gets too big, or if the size of the zeroed and standby lists falls below a minimum threshold, one of two system threads is awakened to write pages back to disk and move them to the standby list. One thread (the modified page writer) writes modified pages to the paging file, and the other (the mapped page writer) writes modified pages to mapped files.
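
A simplified model of the size trigger follows. The `PageLists` class is hypothetical, the exact threshold (2 MB plus one page) is an assumption standing in for "a little larger than 2 MB", the model ignores the low-standby trigger, and it writes out the whole list at once rather than just enough pages.

```python
PAGE_SIZE = 4096
# Assumption: the text says "a little larger than 2 MB"; this exact value
# is illustrative only.
THRESHOLD_BYTES = 2 * 1024 * 1024 + PAGE_SIZE


class PageLists:
    """Hypothetical modified and standby lists with the writers' trigger."""

    def __init__(self):
        self.modified = []   # (page_id, destination) waiting for a writer
        self.standby = []    # clean pages: contents are now safely on disk
        self.writes = {"paging file": 0, "mapped file": 0}

    def add_modified(self, page_id, destination):
        self.modified.append((page_id, destination))
        if len(self.modified) * PAGE_SIZE > THRESHOLD_BYTES:
            self.wake_writers()

    def wake_writers(self):
        # Stand-ins for the two writer threads: paging-file pages and
        # mapped-file pages are written to their own destinations.
        for page_id, destination in self.modified:
            self.writes[destination] += 1
            self.standby.append(page_id)
        self.modified.clear()


lists = PageLists()
for page_id in range(514):
    dest = "paging file" if page_id % 2 == 0 else "mapped file"
    lists.add_modified(page_id, dest)
print(len(lists.modified), len(lists.standby), lists.writes)
```

The 514th page pushes the list past the threshold, so every page is written and moved to standby, where it counts toward available memory.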

For a page to be read back from the paging file, it must first have gone unreferenced for a long time: the process has not referenced it, yet has given no indication that it can let it go for good. A process gives up a page from its working set either because it referenced a new page while its working set was full, or because the memory manager trimmed its working set after deciding that the process was getting too big. (Such trimming is why the working set counter is not a good indicator when tracing a leaker.) The page then goes to the standby list if it was clean (unmodified, that is, not written to by the process since it was last read in), or to the modified page list if it was modified while resident.

So, again, private memory in a process' working set that goes unreferenced for a period of time is taken out and put on the modified list. From there it is eventually written to the paging file, yet the page remains in memory, now on the standby list. If the process goes without referencing the page long enough for it to cycle through all of the pages on the standby list, Windows takes that physical page and uses it for another purpose. Only if the process then references the page again does Windows reread it from the paging file. Process Monitor can show paging file reads; if you see constant reads from the paging file, you need more memory. The paging file exists so that Windows can dump modified private memory that has not been deleted or saved to some other disk file onto disk, allowing Windows to reuse that memory for some other purpose.
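
The life cycle of one private page can be traced with a toy model. `PrivatePageModel` is hypothetical; "soft fault" (page recovered from RAM) and "hard fault" (page read from the paging file) are the usual Windows terms for the two cases the text describes.

```python
from collections import deque


class PrivatePageModel:
    """Toy model of one process's private pages moving between lists."""

    def __init__(self):
        self.working_set = set()
        self.modified = set()     # dirty, not yet written to the paging file
        self.standby = deque()    # clean, still in RAM, oldest first
        self.paging_file = set()  # pages with a saved copy on disk
        self.soft_faults = 0      # page recovered from the modified/standby list
        self.hard_faults = 0      # page had to be read from the paging file

    def trim(self, page, modified):
        """Take an unreferenced page out of the working set."""
        self.working_set.remove(page)
        if modified:
            self.modified.add(page)
        else:
            self.standby.append(page)

    def write_modified(self):
        """Modified page writer: save dirty pages, then move them to standby."""
        for page in sorted(self.modified):
            self.paging_file.add(page)
            self.standby.append(page)
        self.modified.clear()

    def repurpose_standby(self, count):
        """Windows reuses the oldest standby pages for other purposes."""
        for _ in range(min(count, len(self.standby))):
            self.standby.popleft()

    def touch(self, page):
        if page in self.working_set:
            return "hit"
        if page in self.modified:
            self.modified.remove(page)
            self.soft_faults += 1
            result = "soft fault"
        elif page in self.standby:
            self.standby.remove(page)
            self.soft_faults += 1
            result = "soft fault"
        elif page in self.paging_file:
            self.hard_faults += 1
            result = "hard fault"
        else:
            result = "new page"   # first touch of a demand-zero page
        self.working_set.add(page)
        return result


m = PrivatePageModel()
steps = [m.touch(1)]            # text typed into Notepad
m.trim(1, modified=True)        # unreferenced: onto the modified list
m.write_modified()              # saved to the paging file, kept on standby
steps.append(m.touch(1))        # still in RAM: cheap to get back
m.trim(1, modified=False)       # clean now, so straight to standby
m.repurpose_standby(1)          # the physical page is reused elsewhere
steps.append(m.touch(1))        # only now is the paging file read
print(steps)
```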

Let's return to the issue of sizing the paging file. The worst-case scenario is that you sum up the private virtual memory usage of all processes running at any point in time, and then all of that gets squeezed out of physical RAM so the memory can be used for other purposes. That sum is the paging file size required to hold private data that might be read back in by those processes. It has no connection to how much RAM is in your system; it depends on the Operating System and on the user's behavior.

Windows keeps track of the total amount of committed private virtual memory that could be paged out (in other words, potential paging file usage). It is called the system "commit charge": the sum of the private bytes of every process plus kernel memory that is charged the same way, chiefly paged pool. The commit charge peak is the highest value the commit charge has reached since boot, which is enough to hold all of the private virtual memory that was ever allocated simultaneously. Multiply that peak by something like 1.5 or 1.2; that is, size the paging file as the commit charge peak plus some margin, but do not size it based on the size of RAM alone.
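
The sizing rule can be sketched as follows, using the text's simplified definition of commit charge (process private bytes plus paged pool). The snapshots are hypothetical figures in MB, and the function names are illustrative.

```python
def commit_charge(private_bytes_per_process, paged_pool):
    """Simplified commit charge from the text: every process's private
    bytes plus kernel paged pool."""
    return sum(private_bytes_per_process) + paged_pool


def suggested_paging_file(commit_peak, multiplier=1.5):
    """Commit charge peak times a safety multiplier (1.5 or 1.2 in the text)."""
    return round(commit_peak * multiplier)


# Hypothetical snapshots, in MB: (private bytes of each process, paged pool).
snapshots = [
    ([300, 150, 80], 120),
    ([900, 150, 80], 130),   # one application briefly holds a large document
    ([400, 150, 80], 125),
]
charges = [commit_charge(procs, pool) for procs, pool in snapshots]
peak = max(charges)
print(charges, peak, suggested_paging_file(peak), suggested_paging_file(peak, 1.2))
```

Note that the amount of installed RAM appears nowhere in the calculation; only the workload's peak demand does.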

The paging file should be contiguous. Windows writes to the paging file in blocks, so if the paging file is fragmented and a block spans a break between fragments, Windows must split what would have been a single write into multiple writes. The TechNet Sysinternals tool PageDefrag (Pagedfrg.exe) defragments the paging file at system boot, consolidating it as much as it can.
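
The cost of fragmentation can be illustrated by counting how many separate writes one block needs. The `writes_needed` function, the extent layout, and the 64 KB block size are all hypothetical; the sketch assumes each extent is contiguous on disk and that consecutive extents sit in different places.

```python
def writes_needed(extents, offset, length):
    """Count separate disk writes needed to write `length` bytes at `offset`.

    extents: (file_offset, size) pairs in file order; each pair is a run
    that is contiguous on disk, and different runs are assumed to live in
    different places on disk (fragments).
    """
    end = offset + length
    return sum(1 for start, size in extents
               if start < end and offset < start + size)


KB = 1024
contiguous = [(0, 4096 * KB)]
fragmented = [(0, 1024 * KB), (1024 * KB, 512 * KB), (1536 * KB, 2560 * KB)]
block = 64 * KB   # hypothetical write size
print(writes_needed(contiguous, 1000 * KB, block))   # 1
print(writes_needed(fragmented, 1000 * KB, block))   # 2: the block spans a break
```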

This section touched on the basics of how the Windows Memory Manager implements virtual memory management. The troubleshooting tips show that when a process repeatedly allocates virtual memory or creates handles without releasing them, its usage grows continuously until it hits system limits such as the commit limit or exhausts kernel paged pool. Each process is given its own private virtual address space, which protects one process' memory from another while still allowing processes to share memory efficiently. A very basic but effective exercise is to download "tswebsetup.exe" from Microsoft, a web-based Terminal Server client. If you run an XP machine with Remote Desktop configured, install tswebsetup.exe in your C:\Inetpub\wwwroot directory and browse to its default.htm page. You will need the IP address and machine name (as well as the logon information) to analyze the remote machine. Then sift through the PsTools suite from TechNet's Sysinternals and attempt remote installations of tools that extract data from the system. This, of course, requires that you document any changes your own presence causes on the remote system. Perhaps the prefetch (.pf) files in the Windows\Prefetch directory?

References

- Windows Internals, 4th Edition by Mark Russinovich and David Solomon.

- Sysinternals Video Library by Mark Russinovich and David Solomon.

- Windows System Programming by Johnson M. Hart.