Introduction

Database updates are never easy. There are no problems to tweak the schema during development, but you cannot have the latest and greatest in the production without effort. Then concurrent development chimes in: While you have the latest and greatest, your buddies might still use old schema or have their own changes, and there’s no way you want to wipe out their changes. In a search for a better way to manage database schema changes, I came across an excellent series of blogs by K. Scott Allen (via):

Simply said, the idea is to have a baseline DB schema and apply a series of incremental updates every time schema changes. All schema changes are being tracked with a special table in the same DB, so no update is applied more than once or missed. Both baseline schema and updates could be easily stored in version control system of choice. It sounds perfect to me, except... There's no tool for that.

This article describes design, development and testing of a home-grown tool for database schema updates. One of the intents of the article was to demonstrate how TDD may significantly speed up development and debugging of the complex logic behind the subsystems in software.

Functional Requirements

In order to automate the whole DB schema versioning task, we need a tool. The tool must be able to perform both batch updates, when a bunch of SQL commands are executed one-by-one, and schema updates, which are aware of update history and may apply all needed updates to bring the schema to the current state. Hence, the list of sample command lines the tool must understand, which cover the aforementioned scenarios:

1. dbtool.exe /update:batch [/db:database] [/user:username] [/notransaction]

[[/swallow:]script.ddl...]

2. dbtool.exe /update:schema /db:database [/user:username] /schema:schema.ddl

3. dbtool.exe /update:list /db:database [/user:username]

The first command will execute scripts, listed in a command line one-by-one, optionally swallowing any exceptions during the execution. The second command will install and update schema. The third command will list all installed schema updates.

Design

Given the functional requirements, there’re three different algorithms, applied to the same subject – DB. I think it’s a good idea to use a Strategy pattern here: the client (dbtool.exe) will use the /update switch as a selector for an algorithm implementation, to configure the algorithm and execute it.

Obviously, the development of such a versatile tool, like our dbtool, is a complex process and may go wrong in many places. The last thing we want is to sit in a debugger session trying to catch as many bugs as we can. Instead we’ll use TDD and ask potential bugs to come to the party. Bringing TDD to the picture means that more likely we’ll need to simulate certain subsystems in order to isolate the functionality we’re testing, and therefore it’s a good idea to employ a Dependency Injection pattern to enable this kind of functionality. We will use xUnit.net framework as our unit testing platform.

Since both client and its tests are sharing the same components, we’ll better use an Abstract Factory pattern to instantiate and configure concrete objects wherever possible.

To simplify troubleshooting process when our tool will be released and used in production, we’ll use logging facilities provided by log4net framework.

Implementation

The functional requirements document clearly describes the workflow for the dbtool:

- User launches dbtool.exe with a set of command line arguments.

- Dbtool parses command line and chooses which algorithm to use.

- Dbtool executes selected algorithm with parameters set by the user via the command line.

How Database Schema Updates Are Performed

Dbtool supports batch database updates out of the box with no extra requirements. However, in order to enable schema update functionality, your database must have a special table – schema_information – which will store the information about installed updates:

CREATE TABLE schema_information (

id INT IDENTITY(1,1) NOT NULL,

major INT,

minor INT,

release INT,

script_name VARCHAR(255),

applied DATETIME,

CONSTRAINT PK_schema_information PRIMARY KEY (id)

)

GO

Besides, your baseline schema script must also have an extra statement which determines the version of schema installed:

INSERT INTO [schema_information] ([major], [minor], [release], [script_name], [applied])

VALUES (1, 0, 0, 'baseline', GETDATE())

GO

And the last requirement is for update scripts. All updates which upgrade schema versions must reside in the Updates folder under the folder where schema is and each update file name must be in update-xxx.yyy.zzz.ddl format:

C:\temp\SchemaTest\Schema.ddl

C:\temp\SchemaTest\Updates\update-001.001.002.ddl

C:\temp\SchemaTest\Updates\update-001.001.001.ddl

C:\temp\SchemaTest\Updates\update-001.000.001.ddl

During the schema update process dbtool with query the schema_information table. If there’s no schema information, schema files will be applied and the version number will be re-queried again. Then dbtool will look for update scripts in the Updates folder in the folder where the schema file resides and will extract version information out of the file name. All updates with major version numbers (xxx) less than schema major number will be rejected. For the rest of updates a transaction will be started and all updates will be applied one-by-one in ascending order (i.e., 001.000.001, then 001.001.001, then 001.001.002, then 002.000.001). After executing an update script, dbtool will update version information in THE schema_information table. Once the last update is applied, the transaction will be commited.

Object Model

The workflow basically introduces two bedrock entities of dbtool: Configuration and Strategy. Configuration will parse command line into the object model, and Strategy will use Configuration to perform specific job, requested by the user.

Since there’re three modes of operation in the dbtool, we’re talking about three classes for holding configuration data and three strategies which utilize these configurations.

Configuration

We’ll have three classes for handling configuration data:

BatchUpdateConfiguration – keeps configuration for batch DB update process:

- Whether to perform updates in transacted mode (optional /notransaction switch).

- List of updates to apply to DB.

SchemaUpdateConfiguration – keeps configuration for DB schema update process:

- File with baseline schema location.

- List of updates, located under updates folder of baseline schema location.

UpdateListConfiguration – keeps configuration for DB schema updates listing process.

All three configurations have common parameters that are provided by users through the command line – database name (/db switch) and user name (/user switch) – and structures which will be populated during the configuration process, like scripts collection, which keeps a list of scripts to execute, and database password, which the user enters when the tool prompts for it. Besides, there’s a process which all three of them are sharing: Command line parsing and validation. Therefore, it makes sense to have a common parent for all configuration classes (ConfigurationBase) which will handle parsing and validation process and also will handle common configuration process.

The type of configuration to use depends on the /update command line switch. Since we want to have both dbtool client and tests to use same configurations, we’ll isolate the process of configuration object instantiation in the ConfigurationFactory class. Its CreateConfiguration() method accepts an array of command line arguments and by utilizing simple logic instantiates a proper configuration object.

Testing

We have four players to handle the configuration process. All four need to be tested properly in order to make sure that we cover various command line argument combinations, both valid and incorrect ones.

It is very important to note that at least two configuration classes interact with the file system in order to resolve paths to files with DB update scripts. Since there could be absolute and relative paths involved and various nesting levels involved, it’s not a good idea to have the unit test to interact with file system directly. Given our functional requirements, all file operations boil down to locating files and very basic reading data from files. Usually you will use System.IO.Directory and System.IO.File for these operations, but now it’s a good idea to define two simple interfaces – IFileListProvider and IFile. IFileListProvider will let us locate files and IFile will handle specific file operations. For dbtool client concrete implementations will simply forward calls to its methods to corresponding System.IO.Directory and System.IO.File methods, but for our tests we’ll implement two very special FakeFileListProvider and FakeFile classes. They will use dictionaries to specify which files the client may find, list or read. Consider this snippet:

fakeFileListProvider.Paths[@"C:\Temp\updates\*.ddl"] = new string[] {

@"C:\Temp\updates\two.ddl", @"C:\Temp\updates\four.ddl" };

Basically here we’re instructing our fake list provider instance to return two file names when a specific file mask is provided by the client.

This snippet instructs fake file classes to return the contents of the invalid DB script:

fakeFile.Files["invalid.ddl"] = "adkfjlkajdf a;lksdjf\rgo aksdfasdjfaslkdjf asdl;asdf";

In order to use IFileListProvider and IFile, we’ll modify our ConfigurationFactory and ConfigurationBase implementations, so they will have special properties which will default to proper FileListProvider and File instances, but could be set to FakeFileListProvider and FakeFile instances by our tests.

public sealed class ConfigurationFactory

{

private IFileListProvider fileListProvider = new FileListProvider();

public IFileListProvider FileListProvider

{

get { return fileListProvider; }

set { fileListProvider = value; }

}

}

public abstract class ConfigurationBase

{

private IFileListProvider fileListProvider = new FileListProvider();

public IFileListProvider FileListProvider

{

get { return fileListProvider; }

set { fileListProvider = value; }

}

}

This might look like an overhead, but having file operations isolated, we have a huge freedom with how our tests are going to be implemented: We don’t have to maintain a file system in some state and may come up with absolutely weirdest file paths ideas to prove our configuration classes are implemented properly.

You will find tests for our configuration sub-system in ArgumentTests and ConfigurationTests classes.

Strategies

Strategy is nothing more than an algorithm which takes configuration as an input and performs specific operations using configuration data. Therefore, all our strategies need to do is to implement an interface with one single method – Execute().

Dbtool will have three different strategies, implemented in BatchUpdateStrategy (executes scripts one-by-one), SchemaUpdateStrategy (performs DB schema update) and UpdateListStrategy (lists all installed schema updates) classes.

Again, because we have both dbtool client and tests working with strategies, we’ll use the StrategyFactory class which will figure out which strategy class to instantiate (by using the type of configuration as a criteria), create an instance of the strategy class and configure it, so all the client will have to do is to call the Execute() method.

Testing

Dbtool is supposed to work with databases and pretty much fancy logic is involved in the process. It is very important to test the core functionality in order to eliminate any further surprises. Basically the tool has to call proper scripts at proper times, handle successful scenarios and failures. Testing all that against a real database will be a huge pain. It’s much easier to introduce a new entity – database manager – which will take care of all database operations in the dbtool client and yet will give access to strategy operations outcome in tests. This leads us to the introduction of a new interface – IDatabaseManager – which will define five crucial operations with databases: DatabaseExists(), GetSchemaRevision(), ListSchemaRevisions(), ExecuteScript() and UpdateSchema().

Just five operations which cover everything we’re planning to do with a database. Dbtool client will implement a real SqlDatabaseManager class which will use SqlDataAdapter and SqlCommand to perform IDatabaseManager operations. Our test suite will implement a FakeDatabaseManager class, which will provide tests with several additional properties that will allow tests to simulate various successful and failing scenarios. Consider the following snippet:

[Fact]

public void StrategyPerformsUpdatesOnlyAndCompletesTransaction()

{

using (TransactionScope scope = new TransactionScope())

{

IStrategy strategy = CreateDefaultStrategy();

strategy.Execute();

scope.Complete();

}

Assert.Equal(4, databaseManager.ExecutedScripts.Length);

int i = 0;

foreach (string update in databaseManager.ExecutedScripts)

Assert.Equal(FileSystem.GetUnderUpdatesOf(defaultSchemaFile,

updateScriptsInOrder[i++]), update);

Assert.Equal(new SchemaRevision(1, 1, 1),

databaseManager.GetSchemaRevision(DatabaseName));

}

It doesn’t make sense to try to access strategy's internals to understand which operations it performed and verify if it was done in an expected way. It’s much more productive to measure the results of operations performed. In the example above FakeDatabaseManager records all scripts that were “executed” (in quotes, because no actual job was done) by the strategy and we can easily check their order.

The following snippet demonstrates failed scenario test:

[Fact]

public void IfUpdateFailsThrowsAndAbortsTransaction()

{

databaseManager.Revision = null;

databaseManager.FailScriptIndex = 2;

Assert.Throws<TransactionAbortedException>(delegate()

{

using (TransactionScope scope = new TransactionScope())

{

try

{

IStrategy strategy = CreateDefaultStrategy();

strategy.Execute();

Assert.False(true, "Wrong way: strategy should've thrown an exception.");

}

catch

{

scope.Complete();

}

}

});

Assert.Equal(2, databaseManager.ExecutedScripts.Length);

Assert.Equal(defaultSchemaFile, databaseManager.ExecutedScripts[0]);

Assert.Equal(FileSystem.GetUnderUpdatesOf(defaultSchemaFile,

updateScriptsInOrder[0]), databaseManager.ExecutedScripts[1]);

}

Here we instructed the FakeDatabaseManager instance to fail the third script in an update pipeline and then verify that transaction was cancelled, so no updates will be actually recorded in a DB, and therefore DB state will remain unchanged.

In order to accommodate IDatabaseManager, our StrategyFactory is being configured with a concrete instance of database manager class. Dbtool uses SqlDatabaseManager and all tests are using FakeDatabaseManager instances.

You will find strategy tests in BatchUpdateTest, SchemaUpdateTest and UpdateListTests classes.

Putting All Together

If you’re wondering how the dbtool looks like after creating such enormous amount of “extra” classes, consider the following snippet (diagnostic and error handling code omitted):

ConfigurationBase configuration = (new ConfigurationFactory()).CreateConfiguration(args);

using (SqlDatabaseManager databaseManager = new SqlDatabaseManager())

{

Console.Write("Enter {0}'s password > ", configuration.UserName);

configuration.Password = Console.ReadLine().Trim();

Console.WriteLine();

ConfigureScriptMacros(databaseManager);

IStrategy strategy = (new StrategyFactory()).CreateStrategy(configuration,

databaseManager);

strategy.Execute();

}

That’s all.

Integration Testing

Developing and testing dbtool against fakes was a great deal of fun, but our tool is going to work in a real world with real files and real databases. This is where integration testing kicks in. Basically, the integration testing process boils down to writing a bunch of scripts that will be executed by dbtool and cover all possible scenarios (both successful and failing) we can come up with. The best part is that we’ve already tested all the logic behind the configuration handling and strategies, so all we need to do is to make sure that our real SqlDatabaseManager works as we expect it and our dbtool client can actually do its job.

The integration testing will also help us to verify if we’re ready to real world database behavior. For instance, the command line argument /notransactions for batch update scenarios wasn’t there before I ran a batch update which was creating a database – you see, database creation process cannot happen within a transaction. I couldn’t catch this with testing against fakes only.

We could perform integration testing by creating another suite which uses real SqlDatabaseManager and file/file list providers and then performs checks in a programmatic way, but I think this would be an overkill for this tool, so I ended up with creating a dozen of scripts which create database, apply updates in batch and schema update modes, list updates, try to perform some invalid operations and so on and by running them one-by-one I was simply observing the behavior and manually checking the database structure after each run. Since most of the logic was verified with fakes, it’s not much of a burden.

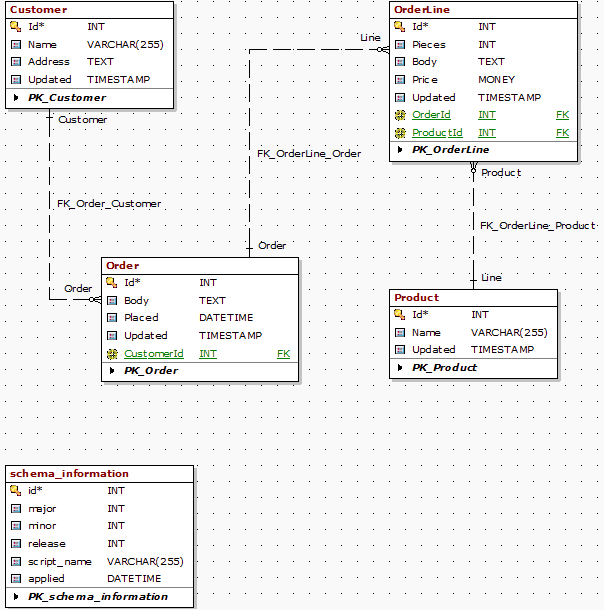

You will find integration test scripts under Integration folder in the test suite. There’s also a Schema.jpg file which depicts a test database schema.

Thanks for your interest!

Hope you’ll find use for both the tool and the article in your daily practices.