Understanding the value mapping problem in a typical integration!

In several integration projects, source values have to be mapped to destination values repeatedly. Consider a scenario where the destination values have to be looked up before any mapping can be done. In other words, every request to insert or update a destination record first requires a lookup against the destination system. This means invoking far too many calls on the destination system just to make a correct insert or update.

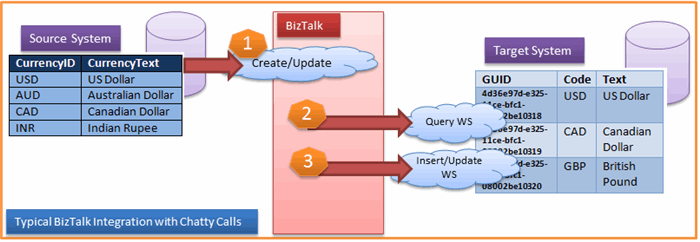

Consider a scenario where a payment record with a currency value has to be updated. To update this record on the destination system, one first has to fetch the currency GUID for the currency value (USD, for example) and then send another request to the destination system to update the payment record with the correct GUID. This is known as "chatty" calls: too many calls to the destination system.

- Step 1: A web service call is invoked by the source system to update or insert a record in the destination system.
- Step 2: To insert or update a value in the destination system, the query WS is first used to retrieve the appropriate GUID value.
- Step 3: The GUID value is then used to invoke the insert/update WS, which updates the payment record correctly.

This is NOT a good approach, since every insert or update costs two calls to the destination system.
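To make the cost of the three steps concrete, here is a minimal Python sketch. It assumes a hypothetical in-memory `FakeDestination` that stands in for the destination system's query and insert/update web services and simply counts calls. It illustrates the pattern and is not BizTalk code.

```python
from dataclasses import dataclass, field


@dataclass
class FakeDestination:
    """Hypothetical stand-in for the destination system's web services."""
    currency_guids: dict
    calls: int = 0
    payments: dict = field(default_factory=dict)

    def query_currency_guid(self, code: str) -> str:
        # Step 2: the query WS, one round trip per lookup.
        self.calls += 1
        return self.currency_guids[code]

    def upsert_payment(self, payment_id: str, amount: float, currency_guid: str) -> None:
        # Step 3: the insert/update WS, one more round trip.
        self.calls += 1
        self.payments[payment_id] = (amount, currency_guid)


def sync_payment_chatty(dest: FakeDestination, payment_id: str, amount: float, currency: str) -> None:
    # Step 1 triggers this: every record costs a lookup plus an update.
    guid = dest.query_currency_guid(currency)
    dest.upsert_payment(payment_id, amount, guid)


dest = FakeDestination({"USD": "guid-usd", "EUR": "guid-eur"})
for i, cur in enumerate(["USD", "EUR", "USD"]):
    sync_payment_chatty(dest, f"P{i}", 10.0 * (i + 1), cur)
print(dest.calls)  # 6: two calls per payment
```

Three payments cost six calls, and the count keeps growing by two with every record synchronized.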

Is there a better way?

The idea is to build a cache and refresh it periodically. The cache would hold all the destination mapped values, for instance all the GUIDs for the various currencies in our example scenario.
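A minimal Python sketch of the caching idea follows. It assumes the destination offers a bulk query, here the hypothetical `query_all_currency_guids`, that returns every currency GUID in one call. The class names are illustrative only.

```python
class FakeDestination:
    """Hypothetical destination system that counts web-service calls."""

    def __init__(self, currency_guids):
        self._guids = dict(currency_guids)
        self.calls = 0
        self.payments = {}

    def query_all_currency_guids(self):
        # Hypothetical bulk query: one call returns every currency GUID.
        self.calls += 1
        return dict(self._guids)

    def upsert_payment(self, payment_id, amount, currency_guid):
        self.calls += 1
        self.payments[payment_id] = (amount, currency_guid)


class CurrencyGuidCache:
    """In-memory value map: currency code -> destination GUID."""

    def __init__(self, dest):
        self._dest = dest
        self._map = {}

    def refresh(self):
        # Rebuild the whole map in one round trip; call this periodically.
        self._map = self._dest.query_all_currency_guids()

    def lookup(self, code):
        # Local lookup, no call to the destination system.
        return self._map[code]


dest = FakeDestination({"USD": "guid-usd", "EUR": "guid-eur"})
cache = CurrencyGuidCache(dest)
cache.refresh()
for i, cur in enumerate(["USD", "EUR", "USD"]):
    dest.upsert_payment(f"P{i}", 10.0 * (i + 1), cache.lookup(cur))
print(dest.calls)  # 4: one refresh + one update per payment
```

The same three payments now cost four calls instead of six. As the batch between refreshes grows, the cost approaches one call per record instead of two.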

Why do you want to cache mapped values?

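Caching can improve the performance of the system considerably, by at least twofold in our estimate: with the GUIDs available locally, each insert or update needs one call to the destination system instead of two.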

What would you like to cache?

The cache would contain all the destination mapped values: in our scenario, all the GUIDs for the various currencies.

A typical cache database would store the GUID values, which can be retrieved by passing the currency code and the destination code. This cache table is refreshed periodically by the orchestration. This helps reduce the call volume to the destination system, thereby improving performance.
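Below is one possible shape for such a cache table. It is sketched with Python's built-in `sqlite3` rather than the custom integration database the orchestration would actually use. The table name `value_map_cache`, its column names and the destination code `CRM` are hypothetical.

```python
import sqlite3


def create_cache(conn):
    # Hypothetical schema: one GUID per (currency code, destination code) pair.
    conn.execute(
        """CREATE TABLE IF NOT EXISTS value_map_cache (
               currency_code    TEXT NOT NULL,
               destination_code TEXT NOT NULL,
               guid             TEXT NOT NULL,
               PRIMARY KEY (currency_code, destination_code))"""
    )


def refresh_cache(conn, rows):
    # Replace the whole cache in one transaction with freshly fetched values.
    with conn:
        conn.execute("DELETE FROM value_map_cache")
        conn.executemany(
            "INSERT INTO value_map_cache (currency_code, destination_code, guid) "
            "VALUES (?, ?, ?)",
            rows,
        )


def lookup_guid(conn, currency_code, destination_code):
    # Returns the cached GUID, or None if the pair is not in the cache.
    row = conn.execute(
        "SELECT guid FROM value_map_cache "
        "WHERE currency_code = ? AND destination_code = ?",
        (currency_code, destination_code),
    ).fetchone()
    return row[0] if row else None


conn = sqlite3.connect(":memory:")
create_cache(conn)
refresh_cache(conn, [("USD", "CRM", "guid-usd-crm"), ("EUR", "CRM", "guid-eur-crm")])
print(lookup_guid(conn, "USD", "CRM"))  # guid-usd-crm
print(lookup_guid(conn, "GBP", "CRM"))  # None
```

The delete and inserts run inside a single transaction. Other connections therefore see either the old set of values or the new one, never a half-refreshed table.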

Understanding the Value Map caching pattern

Step 1: Loop until you have data in the cache

Declare a boolean flag to determine when to exit the main loop. Observe the orchestration structure carefully: if the terminate flag is set to false (the default), the orchestration waits for a predefined amount of time and then refreshes the cache again.
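The control flow of the orchestration's main loop can be approximated in Python as below. `run_cache_refresher` and its parameters are hypothetical. `should_terminate` plays the role of the terminate flag, and `sleep` stands in for the Delay shape. Injecting `sleep` lets the loop be exercised without actually waiting.

```python
import time
from datetime import timedelta


def run_cache_refresher(refresh, should_terminate, delay, sleep=time.sleep):
    """Hypothetical sketch of the cache-refresh orchestration's main loop.

    refresh          -- rebuilds the value map cache (the orchestration body)
    should_terminate -- returns the terminate flag; False keeps the loop running
    delay            -- timedelta to wait between cycles (the Delay shape)
    sleep            -- waiting function, injectable for testing
    Returns the number of refresh cycles performed.
    """
    cycles = 0
    while True:
        refresh()
        cycles += 1
        if should_terminate():
            return cycles
        # Flag is false: wait for the configured period, then refresh again.
        sleep(delay.total_seconds())


refreshes = []
flags = iter([False, False, True])  # terminate after the third cycle
slept = []
cycles = run_cache_refresher(
    refresh=lambda: refreshes.append("refreshed"),
    should_terminate=lambda: next(flags),
    delay=timedelta(minutes=30),
    sleep=slept.append,  # record the wait instead of sleeping
)
print(cycles, slept)  # 3 [1800.0, 1800.0]
```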

Step 2: Coding the meat of the orchestration

An exception scope is used to invoke a web service in order to retrieve all the values for building the value map cache. Note that this orchestration is used only to build the cache in the custom integration database.
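A rough Python analogue of that exception scope is shown below. The text does not say what should happen when the web service call fails. This sketch assumes one reasonable policy: log the error and keep the previously cached values. `refresh_with_scope`, `fetch_all_values` and `store` are hypothetical names.

```python
import logging

logger = logging.getLogger("value_map_cache")


def refresh_with_scope(fetch_all_values, store, current):
    """Hypothetical analogue of the orchestration's exception scope.

    fetch_all_values -- calls the destination web service, returns {code: guid}
    store            -- writes the values into the integration database cache
    current          -- the cached values before this cycle
    Returns the cached values after this cycle.
    """
    try:
        values = fetch_all_values()
        store(values)
    except Exception:
        # Assumed policy: log the failure and keep serving the previous values.
        logger.exception("Value map cache refresh failed; keeping previous values")
        return current
    return values


def failing_fetch():
    raise ConnectionError("destination system unavailable")


stored = []
old = {"USD": "guid-usd"}
print(refresh_with_scope(failing_fetch, stored.append, old))  # {'USD': 'guid-usd'}
print(refresh_with_scope(lambda: {"EUR": "guid-eur"}, stored.append, old))  # {'EUR': 'guid-eur'}
print(stored)  # [{'EUR': 'guid-eur'}]
```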

Step 3: The cache refresh period

A TimeSpan value is used to configure the Delay shape of the orchestration. It determines the interval between cache refresh cycles.
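Suppose the refresh period is stored in configuration as a TimeSpan-style string such as `00:30:00`. The hypothetical helper below converts such a string into a Python `timedelta`. It handles only a simplified `[d.]hh:mm:ss` subset of the .NET TimeSpan format.

```python
import re
from datetime import timedelta

# Simplified subset of the .NET TimeSpan string format: "[d.]hh:mm:ss".
_TIMESPAN = re.compile(r"^(?:(\d+)\.)?(\d{1,2}):(\d{2}):(\d{2})$")


def parse_timespan(text):
    """Hypothetical helper: convert a "[d.]hh:mm:ss" string into a timedelta.

    Fractional seconds and negative spans are deliberately not supported.
    """
    match = _TIMESPAN.match(text.strip())
    if not match:
        raise ValueError(f"unsupported TimeSpan format: {text!r}")
    days, hours, minutes, seconds = (int(g) if g else 0 for g in match.groups())
    if hours > 23 or minutes > 59 or seconds > 59:
        raise ValueError(f"TimeSpan component out of range: {text!r}")
    return timedelta(days=days, hours=hours, minutes=minutes, seconds=seconds)


print(parse_timespan("00:30:00").total_seconds())    # 1800.0
print(parse_timespan("1.02:00:00").total_seconds())  # 93600.0
```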

Step 4: Don't forget to add the configuration entries

The terminate flag and the cache refresh delay need to be configured, either in the BizTalk configuration file or in the SSO database.
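Neither the BizTalk configuration file nor the SSO database has a Python equivalent. The sketch below therefore swaps in an INI string read with `configparser`, purely to show the two values the orchestration needs. The section and key names are hypothetical, and the 60-minute fallback delay is an assumption. The `false` default for the terminate flag follows the text above.

```python
import configparser
from datetime import timedelta

# Hypothetical settings; the section and key names are illustrative only.
SAMPLE = """
[ValueMapCache]
TerminateFlag = false
RefreshDelayMinutes = 30
"""


def load_cache_settings(text):
    """Return (terminate_flag, refresh_delay) from an INI-style settings string."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    section = parser["ValueMapCache"]
    # Terminate flag defaults to false, so the cache keeps refreshing.
    terminate = section.getboolean("TerminateFlag", fallback=False)
    # Assumed fallback: refresh every 60 minutes if no delay is configured.
    delay = timedelta(minutes=section.getint("RefreshDelayMinutes", fallback=60))
    return terminate, delay


terminate, delay = load_cache_settings(SAMPLE)
print(terminate, delay)  # False 0:30:00
```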

Are there any easier alternatives?

The good news is that there are. There are several easier alternatives, especially using BizTalk cross-reference (Xref) data, which is described in great detail in Michael Stephenson's article (see the references section).

References for further reading