Introduction

Azure is Microsoft's operating system for cloud computing. It lets us host and run applications in the cloud, which here simply means Microsoft's datacenters. Applications are hosted in the cloud in the form of roles. There are two major kinds of role, the web role and the worker role, and each serves a different purpose.

Any part of an application that we would host on IIS should be created as a web role when hosting it in the cloud. Web roles act as a bridge between application users and worker roles.

Worker roles, as the name suggests, accomplish tasks assigned by web roles. A worker role runs continuously in the cloud and handles tasks asynchronously.

With this basic foundation, let us start on the topic. Every service exposes a set of endpoints to the external world, that is, to the clients of that service. Consumers of the service learn about its metadata or offerings through these endpoints. In the cloud, every hosted role (web or worker) is a service that can be consumed from an on-premises application or a browser, and it defines endpoints for this purpose.

In this article, the focus is on endpoints and network traffic rules rather than on other members of the configuration schema.

Role Endpoints

Azure role endpoints are defined in the service definition file (.csdef) under the “Endpoints” schema node.

```xml
<Endpoints>
  <. . . />
</Endpoints>
```

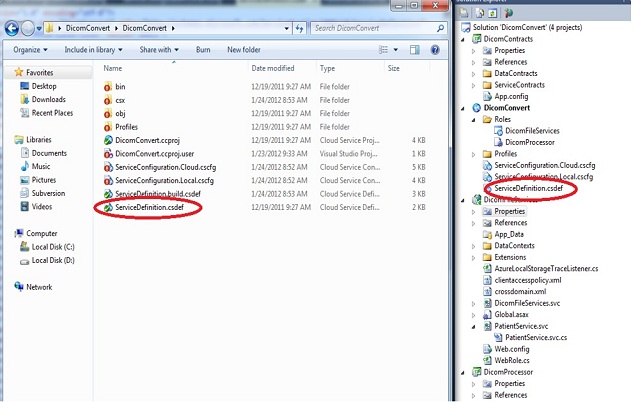

The definition file is located in the corresponding cloud project folder. The following snapshot shows, for an application called “DicomConvert”, where the definition file can be found both in the folder and in Solution Explorer:

Figure 1

Each endpoint definition has three basic attributes: “name”, “protocol” and “port”. The following is an example of an input endpoint definition:

```xml
<Endpoints>
  <InputEndpoint name="Endpoint1" protocol="http" port="80" />
</Endpoints>
```

This endpoint can be accessed over the HTTP protocol on port 80.
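To see how these attributes sit in the file, here is a minimal Python sketch (assuming Python 3 and only the standard library's xml.etree.ElementTree) that reads a service definition and lists every role's endpoints. The role name “WebRole1” and the sample content are illustrative assumptions, not taken from the DicomConvert project.

```python
import xml.etree.ElementTree as ET

# Namespace of the service definition schema used throughout this article.
NS = {"sd": "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition"}

# Hypothetical .csdef content for illustration only.
CSDEF = """<ServiceDefinition name="MyService"
    xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition">
  <WebRole name="WebRole1" vmsize="Small">
    <Endpoints>
      <InputEndpoint name="Endpoint1" protocol="http" port="80" />
    </Endpoints>
  </WebRole>
</ServiceDefinition>"""


def list_endpoints(csdef_xml):
    """Return (role, kind, name, protocol, port) tuples for every endpoint."""
    root = ET.fromstring(csdef_xml)
    result = []
    for role in root:
        # ElementTree tags look like "{namespace}WebRole"; keep the local part.
        if role.tag.split("}")[-1] not in ("WebRole", "WorkerRole"):
            continue
        for kind in ("InputEndpoint", "InternalEndpoint"):
            for ep in role.findall(f"sd:Endpoints/sd:{kind}", NS):
                port = ep.get("port")
                # Convert numeric ports; keep missing or non-numeric values as-is.
                port = int(port) if port and port.isdigit() else port
                result.append((role.get("name"), kind, ep.get("name"),
                               ep.get("protocol"), port))
    return result


for row in list_endpoints(CSDEF):
    print(row)
```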

Note: If we make any change to the definition file, we have to redeploy the application to the cloud (either ‘publish’ or ‘package and upload’). The Azure Management Portal provides no way to change the definition (.csdef) file on the fly, whereas the configuration file (.cscfg) can be changed through the portal even after the application is deployed, and the changes take effect on the fly.

We can either open the definition file in the Visual Studio text editor and configure endpoints by hand, or use the user interface that Visual Studio provides for the same purpose.

Double-click the role in Solution Explorer to open the configuration UI. It provides options for adding and deleting endpoints, and the left-hand pane offers other configuration options as well.

Figure 2

Once done, save the settings (‘Ctrl+S’) and they are automatically reflected in the definition file.

Windows Azure makes our roles accessible only when we explicitly specify endpoints in the service definition. By defining endpoints, we instruct the Fabric Controller to route traffic to a particular role through a load balancer. Initially, Windows Azure did not allow connecting to worker roles directly: every request to a worker role had to go through the load balancer, including requests from role instances of the same application in the same virtual network. This design added response latency. The limitation was removed when the Azure platform moved to production, with the introduction of two kinds of endpoints: one for handling external requests from outside the firewall, and another for handling requests behind the firewall among the role instances of the same application.

Refer to the following snapshot for the complete definition file and its endpoint definitions, and note how they match the endpoint settings in the previous snapshot:

Figure 3

Types of Endpoints

Azure has two types of endpoints: Input and Internal.

Input endpoint - Input endpoints are accessible over the internet (external facing). Initially, this was the only kind of endpoint available in Azure. Input endpoints support the HTTP, HTTPS and TCP protocols, and they handle internet traffic arriving through the load balancer. The following configuration attributes are available:

| Attribute | Description |
|---|---|
| name | Required. A unique name for the external endpoint. |
| protocol | Required. The transport protocol for the external endpoint. For a web role, possible values are HTTP, HTTPS, or TCP. Note: TCP is only supported when the input endpoint is defined as a child of the Endpoints element. |
| port | Required. The port for the external endpoint. You can specify any port number you choose, but the port numbers specified for each role in the service must be unique. |
| certificate | Required for an HTTPS endpoint. The name of a certificate defined by a Certificate element. |
| localPort | Optional. Specifies a port used for internal connections on the endpoint. The localPort attribute maps the external port on the endpoint to an internal port on a role. This is useful when a role must communicate with an internal component on a port different from the one exposed externally. If not specified, localPort takes the same value as the port attribute. Set localPort to “*” to let the Windows Azure fabric assign an unallocated port, which is discoverable using the runtime API. Note: The localPort attribute is only available with Windows Azure SDK version 1.3 or higher. |
| ignoreRoleInstanceStatus | Optional. When this attribute is set to true, the status of a service is ignored and the endpoint will not be removed by the load balancer. The default value is false. Setting it to true is useful for debugging busy instances of a service. Note: An endpoint can still receive traffic even when the role is not in a Ready state. |
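The localPort rules in the table reduce to a small decision. The following is a hypothetical Python sketch, not an Azure API: `resolve_local_port` and the `allocate` callable (a stand-in for the fabric choosing a free port) are names invented for illustration.

```python
import itertools


def resolve_local_port(port, local_port=None, allocate=None):
    """Sketch of how localPort relates to port on an input endpoint.

    port       -- value of the 'port' attribute (external port)
    local_port -- value of the 'localPort' attribute, or None when omitted
    allocate   -- hypothetical callable standing in for the fabric's choice
                  of an unallocated port when localPort="*"
    """
    if local_port is None:
        # Omitted: the role listens on the same port that is exposed externally.
        return port
    if local_port == "*":
        # "*": the fabric picks a free port; the role discovers it at runtime.
        return allocate()
    # Explicit value: external traffic on 'port' is mapped to this internal port.
    return int(local_port)


# A fake allocator that hands out ports starting at 20000 (illustrative only).
fake_fabric = itertools.count(20000).__next__

print(resolve_local_port(80))                     # 80
print(resolve_local_port(80, "8080"))             # 8080
print(resolve_local_port(443, "*", fake_fabric))  # 20000
```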

Internal endpoint - Internal endpoints are accessible only to the role instances of the same application, within its deployment (inside a virtual network). Roles of one application cannot access the internal endpoints of another application's roles. This communication happens directly, without any involvement of the load balancer. So a worker role that is used only by a web role, with both roles hosted in the cloud, need not define an input endpoint. Instead, the worker role can expose a set of internal endpoints to the hosted web roles, and the web role can in turn expose a set of input endpoints to the internet. Internal endpoints support the HTTP and TCP protocols.

```xml
<Endpoints>
  <InputEndpoint name="HttpIn" protocol="http" port="80" />
  <InternalEndpoint name="HttpInternal" protocol="http" port="8000" />
</Endpoints>
```

The above configuration snippet belongs to a web role that has one input endpoint for inbound traffic from the internet and one internal endpoint for cross-role communication. As this shows, which endpoints to create in Azure is a decision driven by the architecture of the hosted application.

Setting Network Traffic Rules

Using internal endpoints, we can define rules that govern communication among role instances. When a role violates these rules, its connection attempts fail with an exception. These rules can also be used to implement security, for example when we don't want a role to be accessed by other web or worker roles.

Out of the many possible scenarios, we will take two to illustrate network traffic rules. Suppose we have a web role “WB1” and two worker roles, “WR1” and “WR2”, and the application requires one of the following network rules:

Scenario 1: WB1 → WR1 → WR2. Web role instances can access only WR1, and only WR1 can access WR2. WR2 can't be accessed directly from the web role.

Scenario 2: WB1 → WR1 and WB1 → WR2. WB1 can access both worker roles.

When we configure an application to have one web role and two worker roles, and add an internal endpoint to each role, the service definition file looks like the following:

```xml
<ServiceDefinition name="MyService"
    xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition">
  <!-- The role name must match the roleName used in the traffic rules. -->
  <WebRole name="WB1" vmsize="Small">
    <Sites>
      <Site name="Web">
        <Bindings>
          <Binding name="HttpIn" endpointName="HttpIn" />
        </Bindings>
      </Site>
    </Sites>
    <Endpoints>
      <InputEndpoint name="HttpIn" protocol="http" port="80" />
      <InternalEndpoint name="Internal1" protocol="tcp" port="5000" />
    </Endpoints>
  </WebRole>
  <WorkerRole name="WR1">
    <Endpoints>
      <InternalEndpoint name="Internal2" protocol="tcp" port="5001" />
    </Endpoints>
  </WorkerRole>
  <WorkerRole name="WR2">
    <Endpoints>
      <InternalEndpoint name="Internal3" protocol="tcp" port="5001" />
    </Endpoints>
  </WorkerRole>
  <!-- NetworkTrafficRules go here, after all role elements. -->
</ServiceDefinition>
```

To define network traffic rules, open the .csdef file in the Visual Studio text editor. Here all internal endpoints are configured to use the TCP protocol, although HTTP could have been used as well. Note that the NetworkTrafficRules node should be the last node in the service definition file; otherwise, an exception may be thrown when the application is hosted in the cloud.
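Because a misplaced rules node may only surface at deployment time, a quick local check can help. The following Python sketch is an assumption-based helper, not a Visual Studio or Azure SDK feature: `rules_node_is_last` parses a .csdef and reports whether every NetworkTrafficRules element comes after all other children of ServiceDefinition.

```python
import xml.etree.ElementTree as ET


def rules_node_is_last(csdef_xml):
    """Return True if no other element follows a NetworkTrafficRules element."""
    root = ET.fromstring(csdef_xml)
    # Strip the "{namespace}" prefix that ElementTree adds to each tag.
    names = [child.tag.split("}")[-1] for child in root]
    if "NetworkTrafficRules" not in names:
        return True  # no rules defined, nothing to check
    first = names.index("NetworkTrafficRules")
    # Everything from the first rules node onward must also be a rules node.
    return all(name == "NetworkTrafficRules" for name in names[first:])


NS = 'xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition"'
GOOD = (f'<ServiceDefinition name="MyService" {NS}>'
        '<WorkerRole name="WR1"/><NetworkTrafficRules/></ServiceDefinition>')
BAD = (f'<ServiceDefinition name="MyService" {NS}>'
       '<NetworkTrafficRules/><WorkerRole name="WR1"/></ServiceDefinition>')

print(rules_node_is_last(GOOD))  # True
print(rules_node_is_last(BAD))   # False
```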

Rules are set based on the traffic's source and destination roles and are almost self-explanatory.

For Scenario 1

```xml
<NetworkTrafficRules>
  <OnlyAllowTrafficTo>
    <Destinations>
      <RoleEndpoint endpointName="Internal2" roleName="WR1"/>
    </Destinations>
    <WhenSource matches="AnyRule">
      <FromRole roleName="WB1"/>
    </WhenSource>
  </OnlyAllowTrafficTo>
</NetworkTrafficRules>
<NetworkTrafficRules>
  <OnlyAllowTrafficTo>
    <Destinations>
      <RoleEndpoint endpointName="Internal3" roleName="WR2"/>
    </Destinations>
    <WhenSource matches="AnyRule">
      <FromRole roleName="WR1"/>
    </WhenSource>
  </OnlyAllowTrafficTo>
</NetworkTrafficRules>
```
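To reason about what Scenario 1 permits, the rules can be loaded into a simple lookup table. This Python sketch is a simplified model, not Azure's enforcement logic: it assumes that an internal endpoint named in no rule stays open to every role, and that an endpoint named in several rules accepts the union of their sources. The schema namespace is omitted from the sample XML for brevity, and `allowed_sources` and `can_reach` are hypothetical helper names.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict

# Scenario 1 rules, wrapped in one root element so they parse as a document.
RULES = """<Rules>
  <NetworkTrafficRules>
    <OnlyAllowTrafficTo>
      <Destinations><RoleEndpoint endpointName="Internal2" roleName="WR1"/></Destinations>
      <WhenSource matches="AnyRule"><FromRole roleName="WB1"/></WhenSource>
    </OnlyAllowTrafficTo>
  </NetworkTrafficRules>
  <NetworkTrafficRules>
    <OnlyAllowTrafficTo>
      <Destinations><RoleEndpoint endpointName="Internal3" roleName="WR2"/></Destinations>
      <WhenSource matches="AnyRule"><FromRole roleName="WR1"/></WhenSource>
    </OnlyAllowTrafficTo>
  </NetworkTrafficRules>
</Rules>"""


def allowed_sources(rules_xml):
    """Map (role, endpoint) -> set of roles allowed to send traffic to it."""
    allowed = defaultdict(set)
    for rule in ET.fromstring(rules_xml).iter("OnlyAllowTrafficTo"):
        sources = {r.get("roleName") for r in rule.iter("FromRole")}
        for dest in rule.iter("RoleEndpoint"):
            allowed[(dest.get("roleName"), dest.get("endpointName"))] |= sources
    return allowed


def can_reach(allowed, source, role, endpoint):
    """Assumed semantics: endpoints named in no rule stay open to every role."""
    key = (role, endpoint)
    return key not in allowed or source in allowed[key]


rules = allowed_sources(RULES)
print(can_reach(rules, "WB1", "WR1", "Internal2"))  # True
print(can_reach(rules, "WB1", "WR2", "Internal3"))  # False
print(can_reach(rules, "WR1", "WR2", "Internal3"))  # True
```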

For Scenario 2

```xml
<NetworkTrafficRules>
  <OnlyAllowTrafficTo>
    <Destinations>
      <RoleEndpoint endpointName="Internal2" roleName="WR1"/>
      <RoleEndpoint endpointName="Internal3" roleName="WR2"/>
    </Destinations>
    <WhenSource matches="AnyRule">
      <FromRole roleName="WB1"/>
    </WhenSource>
  </OnlyAllowTrafficTo>
</NetworkTrafficRules>
```

Similarly, we can devise any other combination of communication paths and set the corresponding rules for it.
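One way to explore other combinations is to generate the rule blocks from a list of allowed source and destination pairs. The Python sketch below uses a hypothetical helper, `build_rules`, which emits one NetworkTrafficRules block per destination endpoint, mirroring the layout of Scenario 1; Scenario 2 shows the alternative of listing several destinations in one block. The schema namespace is left out, as in the rule snippets above.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict


def build_rules(edges):
    """edges: iterable of (source_role, dest_role, dest_endpoint) to allow.

    Returns one NetworkTrafficRules element per destination endpoint, whose
    WhenSource lists every role allowed to reach that endpoint.
    """
    sources_by_dest = defaultdict(list)
    for source, role, endpoint in edges:
        if source not in sources_by_dest[(role, endpoint)]:
            sources_by_dest[(role, endpoint)].append(source)

    blocks = []
    for (role, endpoint), sources in sources_by_dest.items():
        rules = ET.Element("NetworkTrafficRules")
        allow = ET.SubElement(rules, "OnlyAllowTrafficTo")
        dests = ET.SubElement(allow, "Destinations")
        ET.SubElement(dests, "RoleEndpoint", endpointName=endpoint, roleName=role)
        when = ET.SubElement(allow, "WhenSource", matches="AnyRule")
        for source in sources:
            ET.SubElement(when, "FromRole", roleName=source)
        blocks.append(rules)
    return blocks


# Scenario 2 expressed as edges: WB1 may reach both worker roles.
scenario_2 = [("WB1", "WR1", "Internal2"), ("WB1", "WR2", "Internal3")]
for block in build_rules(scenario_2):
    print(ET.tostring(block, encoding="unicode"))
```

The printed blocks can then be pasted into the .csdef after the role elements.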

Limitation on Allowed Number of Endpoints

For any hosted service on Azure, the allowed number of roles (web and worker roles combined) has been increased from 5 to 25. A hosted service can have a total of 25 input endpoints and 25 internal endpoints, distributed among its roles in any fashion, so a single role can have at most 25 input or 25 internal endpoints. I tried to create 26 internal endpoints for a worker role and publish it to Azure. The following snapshot shows the failed deployment notification, with the error message circled in red clearly stating the limit.
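A pre-deployment check can catch this before publishing. The Python sketch below counts input and internal endpoints across all roles and compares each total against 25, the per-service limit reported above; the helper name `endpoint_limit_errors` and the error text are illustrative assumptions, and the limit itself may differ across Azure versions.

```python
import xml.etree.ElementTree as ET

NS = {"sd": "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition"}
MAX_ENDPOINTS = 25  # per-service limit for each endpoint kind, as reported above


def endpoint_limit_errors(csdef_xml, limit=MAX_ENDPOINTS):
    """Return a message for each endpoint kind whose service-wide total exceeds limit."""
    root = ET.fromstring(csdef_xml)
    errors = []
    for kind in ("InputEndpoint", "InternalEndpoint"):
        # "./*" visits every role element directly under ServiceDefinition.
        count = len(root.findall(f"./*/sd:Endpoints/sd:{kind}", NS))
        if count > limit:
            errors.append(f"{count} {kind} elements exceed the limit of {limit}")
    return errors


# Reproduce the experiment: one worker role with 26 internal endpoints.
endpoints = "".join(
    f'<InternalEndpoint name="Internal{i}" protocol="tcp" port="{5000 + i}" />'
    for i in range(26))
csdef = ('<ServiceDefinition name="MyService" '
         'xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition">'
         f'<WorkerRole name="WR1"><Endpoints>{endpoints}</Endpoints></WorkerRole>'
         '</ServiceDefinition>')

print(endpoint_limit_errors(csdef))
```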

Figure 4

Conclusion

Azure endpoints and the associated network traffic rules let a role access only the other roles or services relevant to it. This enables secured and versioned access (for example, when two versions of the same worker role are deployed). These rules can be very useful for large applications that are continuously being revamped.

References