Introduction

Windows Azure is Microsoft's cloud computing platform for hosting and running applications in the cloud, that is, in Microsoft's datacenters. Applications are hosted in the cloud in the form of roles. There are two major kinds of role: web role and worker role. Each serves a different purpose.

Web role: Any part of an application that would normally be hosted on IIS should be created as a web role when it is hosted in the cloud. Examples: WCF, ASP.NET, Silverlight, etc. A web role accepts requests directly from clients and responds to them. Web roles act as a bridge between application users and worker roles.

Worker role: A worker role, as the name suggests, carries out tasks assigned by web roles. It runs continuously in the cloud and handles tasks asynchronously.

Note: More information on web role and worker role can be found at the following links:

Objective

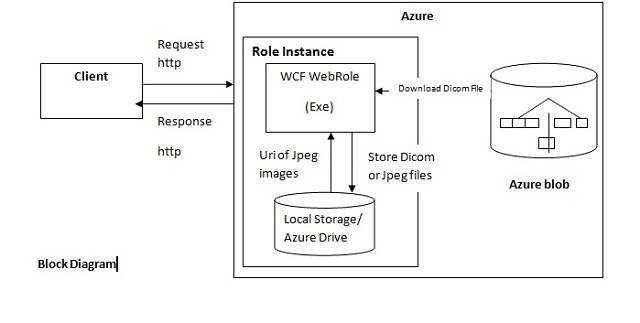

The objective of our little exercise is to show how a non-.NET EXE can be executed from a Windows Azure web role. We will build a WCF service that processes a DICOM image and converts it into one or more JPEG images, depending on whether the input is a single-frame or multi-frame DICOM image. For the sake of simplicity, we will assume that the DICOM images are already stored in Windows Azure blob storage (alternatively, a different application module can provide the functionality to upload a DICOM file to Windows Azure blob storage). The converted JPEG images will be stored in local storage or in blob storage, where they can be accessed through the REST API.

Block Diagram

Creating our sample application would involve the following steps:

- Including EXE as a part of the project

- Getting EXE path and parameters

- Downloading the DICOM file from Azure blob storage to local storage or an Azure drive

- Running the EXE in a web role (WCF service)

1) Including EXE as a Part of the Project

Include EXE in a Web Role project and change the property of EXE as shown below:

2) Getting EXE Path and Parameters

The input and output parameters for the EXE are passed as a single string, as shown below.

We need to specify the path of the input DICOM image and the folder in which the output JPEG images should be stored.

EXE in a web role:

EXEPath = Path.Combine(Environment.GetEnvironmentVariable("RoleRoot") + @"\", @"approot\bin\dcm2jpg.exe");

EXE in a worker role:

EXEPath = Path.Combine(Environment.GetEnvironmentVariable("RoleRoot") + @"\", @"approot\dcm2jpg.exe");

EXEArguments = @"arguments " + outputFilePath + " " + inputFilePath;
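The path and argument construction above can be wrapped in a small helper. The following is a minimal sketch, not the article's original code: the class name `ExeLocator`, its method names, and the `switches` parameter are hypothetical, and it assumes that dcm2jpg.exe accepts its switches first, then the output folder, then the input file, as the argument string above suggests.

```csharp
using System;
using System.IO;

// Hypothetical helper (not part of the original article).
public static class ExeLocator
{
    // Returns the expected location of dcm2jpg.exe under the role root.
    // Web role binaries live under approot\bin; worker role binaries under approot.
    public static string GetExePath(string roleRoot, bool isWebRole)
    {
        if (string.IsNullOrEmpty(roleRoot))
            throw new InvalidOperationException("RoleRoot is not set; the code may not be running inside a role.");

        string relative = isWebRole ? @"approot\bin\dcm2jpg.exe" : @"approot\dcm2jpg.exe";

        // RoleRoot may contain only a drive designator (for example "E:"),
        // so a backslash is appended before combining, as in the article.
        return Path.Combine(roleRoot + @"\", relative);
    }

    // Builds the argument string: switches, then output folder, then input file.
    // Paths are quoted so that folders containing spaces stay a single argument.
    // A trailing backslash is trimmed because it would escape the closing quote.
    public static string BuildArguments(string switches, string outputFolder, string inputFile)
    {
        return string.Format("{0} \"{1}\" \"{2}\"", switches, outputFolder.TrimEnd('\\'), inputFile);
    }
}
```

A caller would pass `Environment.GetEnvironmentVariable("RoleRoot")` as `roleRoot`. Checking for a missing variable up front gives a clearer error than a failed process start later on.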

Non-.NET and .NET EXE

We can run any kind of EXE in the cloud, whether non-.NET or .NET.

- Non-.NET EXE: C, C++, etc. For a C++ EXE, we need to add the C++ runtime libraries, such as msvcp100.dll and msvcr100.dll.

- .NET EXE: C#, VB, etc. No additional libraries are needed.

3) Download File to Local Storage or Azure Drive

The DICOM-to-JPEG converter EXE takes the input DICOM file path as a local file system path:

Example: C:\DicomFiles\image1.dcm.

However, all our DICOM images are stored in Azure blob storage, where they can be accessed only through REST APIs or blob URLs, which our EXE cannot recognize as valid paths.

We have two options to solve this:

- Download the DICOM images to local storage

- Download the DICOM images to an Azure drive

Local Storage

A local storage resource is a reserved directory in the file system of the virtual machine in which an instance of a role is running. Code running in the instance can use the local storage resource when it needs to write to or read from a file. For example, a local storage resource can be used to cache data that may need to be accessed again while the service is running in Windows Azure. A local storage resource is declared in the service definition file.

To specify the local storage size, right-click the role -> Properties -> Local Storage -> Add Local Storage.

You can specify how much storage space you want. The maximum space you can allot is limited by the size of the virtual machine on which the role is running.

In the service definition file, the LocalStorage element takes three attributes:

- name: Specifies the name of this local storage resource

- sizeInMB: Specifies the desired size for this local storage resource

- cleanOnRoleRecycle: Specifies whether the local storage resource should be wiped clean when a role instance is recycled, or whether it should be persisted across the role life cycle. The default value is true.
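As an illustration, a local storage declaration in the service definition file might look like the fragment below. This is a sketch under assumptions: the name `LocalImageStore` matches the resource name used in the code later in this section, while the size of 1024 MB and the choice to clean on recycle are placeholder values, not taken from the original project. The fragment belongs inside the role element (for example, the WebRole element) of the service definition file.

```xml
<LocalResources>
  <!-- sizeInMB and cleanOnRoleRecycle values are illustrative assumptions -->
  <LocalStorage name="LocalImageStore" sizeInMB="1024" cleanOnRoleRecycle="true" />
</LocalResources>
```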

Accessing a Local Storage at Runtime

- The RoleEnvironment.GetLocalResource method returns a reference to a named LocalResource object.

Code snippet:

LocalResource locRes;

locRes = RoleEnvironment.GetLocalResource("LocalImageStore");

- To determine the path to the local storage resource's directory, use the LocalResource.RootPath property:

localStoragePath = locRes.RootPath;

- If your service is running in the development environment, the local storage resource is defined within your local file system, and the RootPath property returns a path on that file system, such as C:\User\MyApp\localstorage\.

When your service is deployed to Windows Azure, the path to the local storage resource includes the deployment ID, and the RootPath property returns a value similar to the following:

C:\Resources\directory\f335471d5a5845aaa4e66d0359e69066.MyService_WebRole.localStoreOne\

Code for downloading image from blob to local storage is:
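A minimal sketch of such a download is shown here, assuming the StorageClient 1.x library (the Microsoft.WindowsAzure.StorageClient namespace mentioned later in this article). The class name `DicomDownloader` and the parameters `connectionString`, `containerName`, and `blobName` are hypothetical; only the resource name `LocalImageStore` comes from the snippet above.

```csharp
using System.IO;
using Microsoft.WindowsAzure;
using Microsoft.WindowsAzure.ServiceRuntime;
using Microsoft.WindowsAzure.StorageClient;

// Hypothetical helper (not part of the original article).
public static class DicomDownloader
{
    // Downloads one blob into the "LocalImageStore" local storage resource
    // and returns the local file path that can be handed to the EXE.
    public static string DownloadToLocalStorage(string connectionString, string containerName, string blobName)
    {
        CloudStorageAccount account = CloudStorageAccount.Parse(connectionString);
        CloudBlobClient client = account.CreateCloudBlobClient();
        CloudBlobContainer container = client.GetContainerReference(containerName);
        CloudBlob blob = container.GetBlobReference(blobName);

        // Resolve the local storage directory declared in the service definition file.
        LocalResource locRes = RoleEnvironment.GetLocalResource("LocalImageStore");

        // Keep only the file name, in case the blob name contains virtual folders.
        string localPath = Path.Combine(locRes.RootPath, Path.GetFileName(blobName));

        blob.DownloadToFile(localPath);
        return localPath;
    }
}
```

The returned path is an ordinary file system path, so it can be passed to the converter EXE as its input argument.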

Create a directory in a local storage to store converted jpeg files:
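One way to do this is sketched below. The class name `OutputFolders` and the convention of one output folder per DICOM file are assumptions made for illustration; a per-file folder is convenient because a multi-frame DICOM image produces several JPEG files.

```csharp
using System.IO;

// Hypothetical helper (not part of the original article).
public static class OutputFolders
{
    // Creates (if needed) a folder named after the DICOM file, without its
    // extension, under the local storage root, and returns the folder path.
    public static string EnsureJpegFolder(string localStorageRoot, string dicomFileName)
    {
        string folder = Path.Combine(localStorageRoot, Path.GetFileNameWithoutExtension(dicomFileName));

        // Directory.CreateDirectory does nothing if the folder already exists.
        Directory.CreateDirectory(folder);
        return folder;
    }
}
```

In the role, `localStorageRoot` would be the RootPath value obtained from the LocalResource object described earlier.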

Azure Drives

A Windows Azure drive acts as a local NTFS volume that is mounted on the server’s file system and that is accessible to code running in a role. The data written to a Windows Azure drive is stored in a page blob defined within the Windows Azure Blob service, and cached on the local file system. Because data written to the drive is stored in a page blob, the data is maintained even if the role instance is recycled. For this reason, a Windows Azure drive can be used to run an application that must maintain state, such as a third-party database application.

The Windows Azure Managed Library provides the CloudDrive class for mounting and managing Windows Azure drives. The CloudDrive class is part of the Microsoft.WindowsAzure.StorageClient namespace.

Once a Windows Azure drive has been mounted, your Windows Azure service can read from and write to it through existing NTFS APIs via a mapped drive letter (e.g., X:\). Because the drive is durable and accessible through these familiar APIs, it can significantly ease the migration of existing Windows applications to the cloud.

Mounting Drive as Page Blob

- Definition file:

<ConfigurationSettings>

<Setting name="DicomDriveName"/>

</ConfigurationSettings>

- Configuration file:

<ConfigurationSettings>

<Setting name="DicomDriveName" value="dicomDrivePageBlob" />

</ConfigurationSettings>

- Create and Mount Drive:

CloudDrive drive = null;

string drivePath = string.Empty;

CloudPageBlob pageBlob = null;

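// Get a reference to the page blob that backs the drive

pageBlob = cloudBlobContainer.GetPageBlobReference(RoleEnvironment.GetConfigurationSettingValue("DicomDriveName"));

drive = new CloudDrive(pageBlob.Uri, storageAccount.Credentials);

// Create a 2048 MB drive if it does not already exist

drive.CreateIfNotExist(2048);

// Mount the drive without a local read cache; the return value is the drive path

drivePath = drive.Mount(0, DriveMountOptions.None);

// List the drives currently mounted on this role instance

IDictionary<string, Uri> drives = CloudDrive.GetMountedDrives();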

4) Run EXE in Web Role (WCF Service)

To run the EXE, we need to start a new process. The EXE path is supplied to the process, and, as we have already seen, the EXE takes the DICOM file path as input and stores the converted JPEG images at the specified location.
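Starting the process can be sketched with the standard System.Diagnostics.Process class, as below. This is an illustrative outline rather than the article's original code: the class name `ExeRunner` is hypothetical, and treating exit code 0 as success is an assumption about dcm2jpg.exe.

```csharp
using System;
using System.Diagnostics;

// Hypothetical helper (not part of the original article).
public static class ExeRunner
{
    // Describes how to launch the EXE: no shell, no window, stderr captured.
    public static ProcessStartInfo CreateStartInfo(string exePath, string arguments)
    {
        return new ProcessStartInfo
        {
            FileName = exePath,
            Arguments = arguments,
            UseShellExecute = false,   // required in order to redirect streams
            CreateNoWindow = true,     // a role instance has no interactive desktop
            RedirectStandardError = true
        };
    }

    // Runs the EXE, waits for it to finish, and returns its exit code.
    public static int Run(string exePath, string arguments)
    {
        using (Process process = Process.Start(CreateStartInfo(exePath, arguments)))
        {
            // Read stderr before waiting so that a full pipe cannot block the child.
            string errors = process.StandardError.ReadToEnd();
            process.WaitForExit();

            if (process.ExitCode != 0)
            {
                // Assumption: a non-zero exit code signals a failed conversion.
                Trace.TraceError("EXE exited with code {0}: {1}", process.ExitCode, errors);
            }
            return process.ExitCode;
        }
    }
}
```

Inside the WCF service operation, `Run` would be called with the EXE path and the argument string built in step 2, after the DICOM file has been downloaded to a local path.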

Conclusion

We can execute any EXE, whatever input and output parameters it takes.

In the case of a worker role, queues come into the picture, because the worker role has to communicate with the web role to receive its input. Windows Azure provides the capability to execute .NET or non-.NET EXEs in a web role or a worker role.

References