Introduction

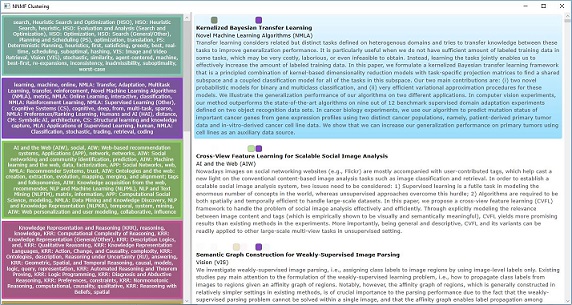

This WPF application finds clusters within a list of accepted papers to AAAI 2014 (an artificial intelligence conference). It shows the keywords in each cluster, and allows you to sort the list of results based on membership in a cluster.

Non Negative Matrix Factorization (NNMF) is a relatively simple technique that uses matrix factorisation to create a predetermined number of clusters within some single matrix.

NNMF clustering can be applied to any uniformly positive matrix, but in this application, it is applied to the document/term matrix that has been created from the list of documents and their meta data.

Vector space model techniques have been used in information retrieval and indexing for many decades.

If we have a collection of documents, we can count the words in each of those documents and store the result in a two dimensional array (or matrix) like this:

This is called a document/term matrix, and simply lists the documents and the count of the terms that they contain. The above document/term matrix can also be normalised so that each value falls between 0 and 1.

From this matrix, we can then think of each row as a single dimensional array (or vector), and compute the similarity of it against any other vector in the same matrix by using the inner product of the two vectors. There is a classic use of this technique in information retrieval, in which a vector is constructed from the query and then the dot product of each document against the query is calculated, which results in an ordered list of documents, sorted by relevance to the query.

NNMF goes beyond single row comparisons, and tries to find two matrices (features and weights) from the document/term matrix, that when multiplied together will reconstruct it. These features and weights cannot contain negative values, hence the Non Negative part.

The features matrix contains a row for each feature and a column for each term, with the value giving the degree to which the term belongs in the feature.

The weights matrix contains a row for each document and a column for each feature, with the value giving the degree to which each document belongs in the feature.

Linear Algebra

The application uses the open source Bright Wire machine learning library to create and normalise the term document matrix and to convert that matrix into dense vectors that are used by the NNMF class.

Using the Application

The sample application immediately downloads the document data set and clusters the links. Clicking on a cluster sorts the document list by its relevance in that cluster. Clicking each document searches for that document on Google.

Using the Code

The dataset is downloaded from the UCI machine learning repository. Then the CSV is parsed into a data table, features are extracted and normalised and then dense vectors are created from the sparse feature sets.

A lookup table is created to associate the dense vectors with the AAAIDocuments from which they were created.

var docList = new List<AAAIDocument>();

using (var client = new WebClient()) {

var data = client.DownloadData(uri);

var dataTable = new StreamReader(new MemoryStream(data)).ParseCSV(',');

dataTable.ForEach(row => docList.Add(new AAAIDocument {

Abstract = row.GetField<string>(5),

Keyword = row.GetField<string>(3).Split(KEYWORD_SPLIT, StringSplitOptions.RemoveEmptyEntries).Select(str => str.ToLower()).ToArray(),

Topic = row.GetField<string>(4).Split(TOPIC_SPLIT, StringSplitOptions.RemoveEmptyEntries),

Group = row.GetField<string>(2).Split(TOPIC_SPLIT, StringSplitOptions.RemoveEmptyEntries),

Title = row.GetField<string>(0)

}));

}

var docTable = docList.ToDictionary(d => d.Title, d => d);

var stringTable = new StringTableBuilder();

var classificationSet = new SparseVectorClassificationSet {

Classification = docList.Select(d => d.AsClassification(stringTable)).ToArray()

};

var encodings = classificationSet.Vectorise(true);

var documentClusterList = new List<DocumentCluster>();

using (var lap = Provider.CreateLinearAlgebra()) {

var lookupTable = encodings

.Select(d => Tuple.Create(d, lap.Create(d.Data).AsIndexable()))

.ToDictionary(d => d.Item2, d => docTable[d.Item1.Classification])

;

var vectorList = lookupTable.Select(d => d.Key).ToList();

These dense vectors are then clustered by NNMF.

public IReadOnlyList<IReadOnlyList<IIndexableVector>> Cluster(

int numIterations,

Action<float> callback,

float errorThreshold = 0.001f

) {

for (int i = 0; i < numIterations; i++) {

using (var wh = _weights.Multiply(_features)) {

var cost = _DifferenceCost(_dataMatrix, wh);

callback(cost);

if (cost <= errorThreshold)

break;

using (var wT = _weights.Transpose())

using (var hn = wT.Multiply(_dataMatrix))

using (var wTw = wT.Multiply(_weights))

using (var hd = wTw.Multiply(_features))

using (var fhn = _features.PointwiseMultiply(hn)) {

_features.Dispose();

_features = fhn.PointwiseDivide(hd);

}

using (var fT = _features.Transpose())

using (var wn = _dataMatrix.Multiply(fT))

using (var wf = _weights.Multiply(_features))

using (var wd = wf.Multiply(fT))

using (var wwn = _weights.PointwiseMultiply(wn)) {

_weights.Dispose();

_weights = wwn.PointwiseDivide(wd);

}

}

}

return _weights.AsIndexable().Rows

.Select((c, i) => Tuple.Create(i, c.MaximumIndex()))

.GroupBy(d => d.Item2)

.Select(g => g.Select(d => _data[d.Item1]).ToArray())

.ToList()

;

}

This function iterates numIterations times, each time trying to improve its score against the _DifferenceCost function by slightly improving the matrix factorisation.

Because the initial values are completely random, every time the clustering algorithm runs, it will find slightly different clusters. However sets of documents tend to remain somewhat fixed - i,e. the same documents will tend to occur together between runs.

The difference cost simply finds the distance between the factored matrices and the original matrix:

float _DifferenceCost(IMatrix m1, IMatrix m2)

{

return m1.AsIndexable().Rows

.Zip(m2.AsIndexable().Rows, (r1, r2) => _costFunction.Compute(r1, r2))

.Average()

;

Conclusion

NNMF of a term/document matrix can yield some interesting results. Finding the the strongest correlated sets of terms with each cluster and having degrees of membership between each document and cluster can be useful.

History

- March 22, 2009: First version.

- May 18, 2009: Bug fixes, service definition update.

- February 21, 2017: Major revision and application migrated to .net 4.6.1.