Introduction

This article explores the pros and cons, implications, and common pitfalls of caching in BizTalk Server orchestrations. This topic has been covered before in a few other interesting articles and blogs (Richard Seroter and Saravana Kumar), but there are some thread safety issues which have not been explored and need to be addressed. If you have implemented caching in BizTalk before and just want to read the essential bits, you may go straight to the Thread Safety section.

Caching

Caching is a useful programming pattern which, in principle, is nothing but storing static or mostly static data in memory. We all are more or less familiar with caching concepts in .NET applications, especially ASP.NET web applications. Caching is a common approach where:

- Cached data rarely change

- Retrieval of data from the data store is expensive because:

  - Increased load on the data store causes concern

  - Round-trip to the data store can limit network bandwidth and increase delay

- Retrieval of data is expensive

- Very high performance and low latency are required

By removing the need for the application to go to the data store every time static data is required, performance can be greatly improved. If this data is located in a store which requires calls to external services (database, Web Service, etc.), a round trip to the messagebox is also saved, which again means better performance. A slight performance boost is also achieved by not having to create new objects, so the garbage collector is triggered less often. By reducing the time BizTalk spends running the orchestration, throughput also improves.

Needless to say, caching, although somewhat similar to storing context/session data, differs in that it deals with application-wide static data. Yet the approach is very similar; the only extra concern when storing context is limiting the size of the cache, so that it holds only the most recently used context data.

A singleton (preferred over a plain static) object is used to contain the cached data. A lazy load pattern is usually employed for loading the data, where it is cached the first time it is requested, not the moment the application is started. If data is static, it stays loaded until the application is shut down. If data could change, a cache refresh policy needs to be considered, which is outside the scope of this article. Such a policy can sometimes inject considerable complexity into the logic and potentially negate the benefits of caching.

Here, we will only consider caching of purely static data.

BizTalk and Memory

A lot of thought and care has gone into designing BizTalk to make it memory-efficient and, fair to say, even memory-tight. Running out of memory, which was a common “feature” of the earlier versions of BizTalk, virtually never happens under recent versions of BizTalk (2006 and R2). “Flat memory footprint” means when a message is travelling through a BizTalk box, it is almost transparent in terms of memory consumption.

Those who have toyed with the idea of creating a custom pipeline component might have noticed the extreme care in handling memory and streams in Microsoft SDK samples. The FixMsg sample deals with a scenario in which a prefix and a postfix need to be added to the message. A typical implementation could have read the whole message data into a new stream while prepending and appending the prefix and postfix, respectively. The Microsoft sample, however, treats the underlying stream with extreme care, and implements a new stream which reads the underlying stream only upon request. That said, most of the custom pipelines I have seen do not handle memory as economically as described above; quite commonly, the whole data is read into memory and then manipulated. Admittedly, this is sometimes inevitable.

Now, with BizTalk so stringent about memory, would it not be better to conserve memory by not caching at all, and instead go to the data store every time? After all, memory is what we need to be so careful about (Yossi Dahan has raised similar concerns).

My response is: how much memory are we going to use? If it is a few hundred megabytes, caching is probably not such a good idea. A few megabytes? Definitely cache! At the end of the day, if you have a lot of unused memory on your server, you might put a small portion of it to use to improve the throughput of your application.

Now, how do we calculate the memory requirement for our cached objects? As most of you will know, Int32 and float take four bytes, double and DateTime eight bytes, char two bytes (not one), and so on. String is a bit more complex: its character data takes 2*(n+1) bytes, where n is the length of the string. For objects, it depends on their members: sum up the memory requirement of all the members, remembering that every object reference is simply a 4 byte pointer on a 32 bit box. This is not quite the whole story, though, because we have not accounted for the overhead of each object on the heap. You may not need to worry about it, but if you will be caching lots of small objects, you have to take it into consideration. On a 32 bit machine (and BizTalk runs 32 bit on 64 bit machines as well), each heap object costs as much as its fields, plus 4 bytes for the type object pointer and 4 bytes for the sync block index; every reference held to the object costs a further 4 bytes. Why is this additional overhead important? Imagine a class with two Int32 members: an instance requires 16 bytes and not 8.
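
As a rough sketch of the arithmetic above, the estimate could be coded as follows. The class and member names are hypothetical, and the constants assume a 32 bit CLR as described in the previous paragraph; treat the results as estimates rather than exact measurements.

```csharp
using System;

// Hypothetical helper: back-of-the-envelope sizes on a 32 bit CLR.
public static class MemoryEstimate
{
    // Type object pointer (4 bytes) + sync block index (4 bytes).
    public const int ObjectOverheadBytes = 8;

    // Size of one object reference on a 32 bit machine.
    public const int ReferenceBytes = 4;

    // Character data of a string: 2 * (n + 1) bytes, as given in the text.
    public static int StringCharBytes(int length)
    {
        return 2 * (length + 1);
    }

    // Heap cost of one object whose fields add up to fieldBytes.
    public static int ObjectBytes(int fieldBytes)
    {
        return ObjectOverheadBytes + fieldBytes;
    }
}
```

For example, MemoryEstimate.ObjectBytes(4 + 4) gives 16 for the class with two Int32 members. Caching 3000 such objects, each held through one reference, would come to roughly 3000 * (16 + 4) = 60,000 bytes, which is well within the "few megabytes" range.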

If you are caching value types such as Int32 or DateTime (those derived from ValueType), always use the generic List and Dictionary, and not Hashtable or ArrayList. The latter store their items as Object, so every value added is boxed onto the heap and every read must unbox it, which ultimately adds garbage collection overhead. Generic lists and dictionaries store value types directly without boxing, and should be used whenever possible.
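
To illustrate the difference, here is a small sketch that stores the same integers in a Hashtable and in a generic Dictionary. BoxingDemo and its method names are made up for this example; the comments mark where boxing and unboxing take place.

```csharp
using System;
using System.Collections;
using System.Collections.Generic;

// Hypothetical demo class comparing the two collection types.
public static class BoxingDemo
{
    public static int SumWithHashtable(int n)
    {
        Hashtable table = new Hashtable();
        for (int i = 0; i < n; i++)
        {
            table[i] = i;               // key and value are both boxed
        }
        int sum = 0;
        for (int i = 0; i < n; i++)
        {
            sum += (int)table[i];       // key boxed for the lookup, value unboxed
        }
        return sum;
    }

    public static int SumWithDictionary(int n)
    {
        Dictionary<int, int> table = new Dictionary<int, int>();
        for (int i = 0; i < n; i++)
        {
            table[i] = i;               // stored as plain ints, no boxing
        }
        int sum = 0;
        for (int i = 0; i < n; i++)
        {
            sum += table[i];            // no cast and no unboxing
        }
        return sum;
    }
}
```

Both methods return the same sum; the difference lies in the temporary heap objects the Hashtable version creates along the way.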

BizTalk and AppDomain

In caching, we use static objects so that the data can be shared. It is important to remember that static objects are shared only among applications loaded within the same AppDomain. How many AppDomains are loaded within a BizTalk host? Richard’s great article covers this subject, and I am not going to repeat it all over again here, but I am going to describe a few tests that confirm this:

- As soon as a BizTalk host is started, a default AppDomain is created.

- All the code for processing send and receive ports will run in this AppDomain. This includes any custom pipeline component and maps – where you would be able to implement and use any caching.

- Orchestrations run in an XLANG/s specific AppDomain, which is created as soon as the first orchestration is run. This includes any maps called from your orchestration. This AppDomain will be shared by all orchestrations and applications running in that host.

I have created a small BizTalk application which:

- Receives a message to trigger the orchestration using a file receive port/location.

- Increments a static counter in an expression shape and outputs to Trace.

- Runs Map1 in a transform shape which increments the same static counter in the map.

- At the end, the message is sent using a send port. This port uses Map2 to transform the output and make a call to our static counter.

- We can try this test in two different scenarios: one in which the send port and the orchestration run in the same host instance, and the other in which they run in different host instances.

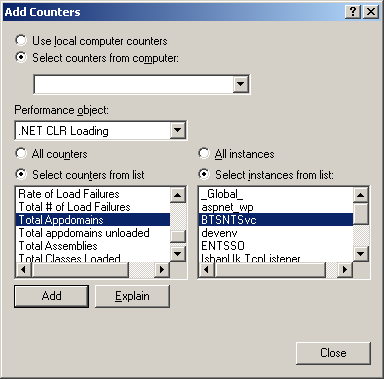
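
The static counter used by the test application is not listed in this article. A minimal sketch of such a helper might look like the one below; StaticCounter, its Increment method, and the trace format are assumptions for illustration. It writes the process ID and the AppDomain name so that calls from the orchestration and from the maps can be told apart in DebugView.

```csharp
using System;
using System.Diagnostics;
using System.Threading;

// Hypothetical helper: one counter per AppDomain, because statics are not
// shared across AppDomain boundaries.
public static class StaticCounter
{
    private static int _count;

    public static int Increment(string caller)
    {
        // Interlocked keeps the increment atomic when several threads call in.
        int value = Interlocked.Increment(ref _count);
        Trace.WriteLine(string.Format(
            "[{0}] procId={1}, AppDomain={2}, counter={3}",
            caller,
            Process.GetCurrentProcess().Id,
            AppDomain.CurrentDomain.FriendlyName,
            value));
        return value;
    }
}
```

If two callers report the same procId but different AppDomain names, they are running in the same host instance yet each sees its own copy of the counter.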

After compiling, deploying, and setting up the test BizTalk project, shut down all host instances apart from the ones running your test orchestration. Open perfmon and add Total AppDomains from .NET CLR Loading:

Select all BTSNTSvc.exe processes you have and add counters. Here, we will set up the system to use the same host instance for the send port as well as for orchestrations. This is the output you will see in perfmon (the host instance is only just started and the scale is 10):

So, one AppDomain is loaded by default and used by the EndPoint Manager service. The second AppDomain is created for running the orchestrations (XLANG/s service); this is the same AppDomain explained in the articles mentioned above. Here is the output from DebugView:

Please note that the procIds of all trace outputs are the same, but the code triggered by the map inside the send port runs in the default AppDomain while all the others run in the XLANG/s AppDomain.

Now, let’s separate the hosts for the orchestration and the send port. In the same fashion, we open perfmon and select the same counters, but this time we need to select the two hosts:

Here is the output from our perfmon (scale is 10):

If you keep loading more and more orchestrations, even for different applications, these numbers do not change. So basically, this shows we can share data between objects of different BizTalk applications using static objects. Send or receive hosts do not create a separate dedicated AppDomain for executing send or receive ports. The output from DebugView will look like this (notice the difference in procIds):

Now, one question is how many times the cache will be loaded into memory. The answer depends on the logical and physical design of the application. If the cache is used for sharing data among orchestrations, it will be loaded once for each host instance running those orchestrations. So in multi-server scenarios, where a host can have more than one instance, the cache is loaded once per instance.

BizTalk and Thread Safety

The topic covered here is not specific to BizTalk, and any C# developer can benefit from it. But if you are a BizTalk developer using static objects within your orchestrations, you are more likely to see strange threading errors popping up. Multi-threading errors tend to get very nasty and are difficult to reproduce; quite commonly, they happen only in production, under extreme load, when numerous messages arrive at the same time. This is very hard to recreate in a development environment using BizTalk orchestrations. So here I will describe a method that reproduces, pretty easily, the same threading problems you experience in production. The testing itself does not involve any BizTalk code, so you may use it for non-BizTalk projects as well.

I remember, a few years back when I was reading up on multi-threading, I was thinking why everyone believes multi-threading to be so difficult and error-prone. After a few years and developing quite a few multi-threaded applications in C#, I think I can now see why it is deemed so. You almost never stop experiencing new surprises with it.

Going back to our caching, an ideal cache must:

- Implement Lazy-Load

- Use locking for synchronisation

- Not use locking excessively, i.e., only use locking when loading the cache

We will see that achieving all three above is more difficult than it seems at first. I will start with Saravana’s caching class. This class is thread aware (though, as we will see, not thread safe), and uses the lock statement here:

    if (_authorIds == null)
    {
        _authorIds = new Dictionary<string,string>();
        lock (_authorIds)
        {
            …
        }
    }

In the code above, we have four main steps, and we will see that these four steps will always be present in any cache: checking the condition for loading the cache, creating the cache, locking some object, and finally loading the cache. The code above does not implement a singleton, but I do not think that is a major problem. What can cause problems here is synchronisation.

Let’s imagine the cache is empty and three messages arrive at the same time. Three instances of the main orchestration are loaded, and each will try to load the cache, all in the same AppDomain but each on its own thread. All of these threads reach the condition check at the same time, and since the cache is empty, the condition is true, so each one creates a new instance of the cache. It is important to note that each one will overwrite the other’s cache instance, which is one of the issues. After the cache has been created three times, one of the threads will be able to lock the cache, and the other two will wait on the lock. After the first one has finished loading the cache, the lock is released, and the next thread will lock it and try to load the cache again! Guess what? We will have an exception, since the second thread will try to load the same items!

Now, how can we create this theoretical scenario? Well, this is not too difficult, and I have implemented it as a unit test. All you need for it is NUnit.

    [Test()]
    public void SaravanaCacheTest()
    {
        // One event per worker thread; each worker sets its event when it finishes.
        AutoResetEvent[] resets = new AutoResetEvent[NUMBER_OF_THREADS];
        for (int i = 0; i < NUMBER_OF_THREADS; i++)
        {
            Thread th = new Thread(new ParameterizedThreadStart(AccessSaravanaCache));
            resets[i] = new AutoResetEvent(false);
            th.Name = "Thread " + (i + 1).ToString();
            th.Start(resets[i]);
            // Random.Next excludes the upper bound, so (0, 2) yields 0 or 1 ms;
            // Next(0, 1) would always return 0.
            Thread.Sleep(_random.Next(0, 2));
        }
        // Block until every worker has signalled completion.
        WaitHandle.WaitAll(resets);
        Assert.AreEqual(NUMBER_OF_THREADS, _successCount,
            "There were some failures");
    }

As can be seen, we create a number of threads, and then use a WaitHandle to wait until all threads are done. We use Thread.Sleep() to create occasional delays, simulating the production environment. An instance of AutoResetEvent is passed to the method run by each thread, which sets it to signal that the thread has finished its work. At the end, we check how many times we succeeded, and if there were any failures, the test fails. Please bear in mind that this is not a typical unit test: a typical unit test produces consistent results, whereas this one can produce different results every time it runs. You might have to run it a few times to see the test failing:
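
The worker method AccessSaravanaCache is not listed in this article. A minimal sketch of what such a worker could look like follows; CacheTestWorker, the CacheReader delegate, and the way the cache read is passed in are assumptions made for illustration, not the original test code.

```csharp
using System;
using System.Threading;

// Hypothetical delegate wrapping whatever cache read is under test.
public delegate int CacheReader();

public class CacheTestWorker
{
    private readonly CacheReader _reader;
    private int _successCount;

    public CacheTestWorker(CacheReader reader)
    {
        _reader = reader;
    }

    public int SuccessCount
    {
        get { return _successCount; }
    }

    // Signature matches ParameterizedThreadStart; state is the thread's AutoResetEvent.
    public void AccessCache(object state)
    {
        AutoResetEvent done = (AutoResetEvent)state;
        try
        {
            int count = _reader();
            Console.WriteLine("{0} read {1} items", Thread.CurrentThread.Name, count);
            // Interlocked keeps the shared success counter correct across threads.
            Interlocked.Increment(ref _successCount);
        }
        catch (Exception ex)
        {
            Console.WriteLine("{0} failed: {1}", Thread.CurrentThread.Name, ex.Message);
        }
        finally
        {
            // Always signal, otherwise the waiting test would block forever.
            done.Set();
        }
    }
}
```

Two details are worth keeping in mind with this kind of harness: the success counter is itself shared between threads, so it should be updated atomically, and WaitHandle.WaitAll accepts at most 64 handles, so the number of threads should stay within that limit.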

On the console window, we see the exact error we were expecting:

OK, so what is the solution? Well, we can solve the problem in a few different ways. First of all, we can simply add an extra condition before adding an entry to the cache to check if it exists. A better solution will be adding a condition to check the number of items in the cache and populating it only if the cache count is 0.

But this is not going to solve all our problems. I have actually created some other cache classes: Cache1, Cache2, Cache25, and Cache3, to demonstrate other approaches and their problems. The code in these caches is very similar, and it is quite surprising how diverse our results are. Each is a simple cache class that creates a dictionary with 3000 numbers. I have used a dedicated object of type Object for my locking, although I could have used the dictionary for the same purpose. There is some built-in delay or console output which can be seen on the console window of NUnit.
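
The DataStoreSimulator class used by these caches is not listed here. The following is a hypothetical stand-in, assuming only what the text says: it fills the dictionary with 3000 numbers and has some built-in delay and console output. The stored values and the delay interval are arbitrary choices for this sketch.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

namespace BizTalkCaching
{
    // Hypothetical stand-in for the data store used by the cache classes.
    public class DataStoreSimulator
    {
        public const int ItemCount = 3000;

        public void LoadCache(Dictionary<int, int> numbers)
        {
            Console.WriteLine("Loading cache on {0}", Thread.CurrentThread.Name);
            for (int i = 0; i < ItemCount; i++)
            {
                // Add throws ArgumentException if the key is already present,
                // which is how a second load of the same dictionary shows up.
                numbers.Add(i, i * 2);

                // Small pause every 1000 items to widen the race window.
                if (i % 1000 == 0)
                {
                    Thread.Sleep(1);
                }
            }
        }
    }
}
```

Loading the same dictionary twice fails on the first duplicate key, which mirrors the exception described earlier.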

    using System;
    using System.Collections.Generic;
    using System.Text;

    namespace BizTalkCaching
    {
        public class Cache1
        {
            private static Cache1 _instance = null;
            private Dictionary<int,int> _numbers = new Dictionary<int,int>();

            public Dictionary<int,int> Numbers
            {
                get { return _numbers; }
            }

            private Cache1()
            {
            }

            public static Cache1 Instance
            {
                get
                {
                    if (_instance == null)
                    {
                        _instance = new Cache1();
                        DataStoreSimulator store = new DataStoreSimulator();
                        store.LoadCache(_instance._numbers);
                    }
                    return Cache1._instance;
                }
            }
        }
    }

Cache1 does not implement any locking or synchronisation at all. Needless to say, it fails miserably on our unit test.

    using System;
    using System.Collections.Generic;
    using System.Text;

    namespace BizTalkCaching
    {
        public class Cache2
        {
            private static Cache2 _instance = null;
            private Dictionary<int,int> _numbers = new Dictionary<int,int>();
            private static object _padlock = new object();

            public Dictionary<int,int> Numbers
            {
                get { return _numbers; }
            }

            private Cache2()
            {
            }

            public static Cache2 Instance
            {
                get
                {
                    if (_instance == null)
                    {
                        _instance = new Cache2();
                        lock (_padlock)
                        {
                            DataStoreSimulator store = new DataStoreSimulator();
                            store.LoadCache(_instance._numbers);
                        }
                    }
                    return Cache2._instance;
                }
            }
        }
    }

Cache2 does implement locking, but displays the same problem experienced before, because the instance is created and assigned outside the lock. It is also interesting that each thread is able to call the singleton and get the count, which is not 3000 as we expected but always much less. The reason is that _instance is assigned before the dictionary has been loaded, so other threads see a non-null instance and read a partially filled dictionary. This is potentially a much more serious problem, since it is a logical error and does not crash the application.

    using System;
    using System.Collections.Generic;
    using System.Text;

    namespace BizTalkCaching
    {
        public class Cache25
        {
            private static Cache25 _instance = null;
            private Dictionary<int,int> _numbers = new Dictionary<int,int>();
            private static object _padlock = new object();

            public Dictionary<int,int> Numbers
            {
                get { return _numbers; }
            }

            private Cache25()
            {
                DataStoreSimulator store = new DataStoreSimulator();
                store.LoadCache(_numbers);
            }

            public static Cache25 Instance
            {
                get
                {
                    if (_instance == null)
                    {
                        lock (_padlock)
                        {
                            _instance = new Cache25();
                        }
                    }
                    return Cache25._instance;
                }
            }
        }
    }

Cache25 improves on Cache2 by initialising the cache in the private constructor (true Singleton design pattern), so a caller can never see a partially loaded instance. It is still inefficient, though: every thread that passes the null check before the first instance is assigned will in turn enter the lock and load the cache again.

    using System;
    using System.Collections.Generic;
    using System.Text;

    namespace BizTalkCaching
    {
        public class Cache3
        {
            private static Cache3 _instance = null;
            private Dictionary<int,int> _numbers =
                new Dictionary<int,int>();
            private static object _padlock = new object();

            public Dictionary<int,int> Numbers
            {
                get { return _numbers; }
            }

            private Cache3()
            {
                DataStoreSimulator store = new DataStoreSimulator();
                store.LoadCache(_numbers);
            }

            public static Cache3 Instance
            {
                get
                {
                    lock (_padlock)
                    {
                        if (_instance == null)
                        {
                            _instance = new Cache3();
                        }
                    }
                    return _instance;
                }
            }
        }
    }

Cache3 is robust and will not cause any errors, whether logical errors or exceptions. But it is inefficient, as it acquires the lock every time the cache is accessed.

    using System;
    using System.Collections.Generic;
    using System.Text;

    namespace BizTalkCaching
    {
        public class TopCache
        {
            private static TopCache _instance = null;
            private Dictionary<int,int> _numbers = new Dictionary<int,int>();
            private static object _padlock = new object();

            public Dictionary<int,int> Numbers
            {
                get { return _numbers; }
            }

            private TopCache()
            {
                DataStoreSimulator store = new DataStoreSimulator();
                store.LoadCache(_numbers);
            }

            public static TopCache Instance
            {
                get
                {
                    if (_instance == null)
                    {
                        lock (_padlock)
                        {
                            if (_instance == null)
                                _instance = new TopCache();
                        }
                    }
                    return _instance;
                }
            }
        }
    }

Finally, we get to the ideal cache, which I have called TopCache. It is a true singleton, implements Lazy-Load, does not lock unnecessarily, and is robust and efficient. The trick is a double condition (a pattern commonly known as double-checked locking): one check outside the lock and one inside it. The outer check skips the lock once the cache is loaded, while the inner check stops threads that were waiting on the lock from loading it again. Remember, instantiating the cache must always be inside the lock statement.

So the magic bullet is the double condition!
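
One caveat on the double condition: it is commonly recommended to declare the _instance field as volatile when using this pattern, so that the check outside the lock cannot observe a stale or partially published reference. As an alternative sketch (a different technique from the one in this article, shown only for comparison), the same lazy, lock-free read can be obtained by letting the CLR's type initialisation do the synchronisation. HolderCache and its nested Holder class are hypothetical names, and the constructor loads the numbers inline instead of calling DataStoreSimulator so that the example is self-contained.

```csharp
using System;
using System.Collections.Generic;

namespace BizTalkCaching
{
    public sealed class HolderCache
    {
        private readonly Dictionary<int, int> _numbers = new Dictionary<int, int>();

        public Dictionary<int, int> Numbers
        {
            get { return _numbers; }
        }

        private HolderCache()
        {
            // Stand-in for the data store call: load 3000 numbers.
            for (int i = 0; i < 3000; i++)
            {
                _numbers.Add(i, i);
            }
        }

        public static HolderCache Instance
        {
            // First access triggers Holder's type initialiser, which the CLR
            // runs exactly once even when several threads arrive together.
            get { return Holder.Value; }
        }

        private static class Holder
        {
            // The explicit static constructor keeps initialisation lazy
            // (the type is not marked beforefieldinit).
            static Holder()
            {
            }

            internal static readonly HolderCache Value = new HolderCache();
        }
    }
}
```

In either variant, the dictionary is safe to share only because it is never modified after loading; callers should treat Numbers as read-only.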

Conclusion

In this article, we covered various aspects of caching in BizTalk. We also reviewed thread safety issues for caching in general, including BizTalk orchestrations.