Contents

- Loosely coupled design pattern

- Service Model (Contract + Application)

- Logical Centralized Model in the Repository

- Contract First

- Service Virtualization

- Logical Endpoint

- Message and Service Mediation

- Deployment Push Model (only for IIS/WAS)

- Deployment Pull Model (custom hosting on the Windows Services)

- Tooling support

- MSI installation

- Supported (tested) Operating Systems: Windows Vista Ultimate Service Pack 1, Windows Server 2008 Standard

- Supported platform is 32-bit for this download

- .NET Framework 3.5 SP1

- MMC 3.0

- Microsoft SQL Server 2005/2008 (default setup is SQLEXPRESS)

- Web Deployment Tool Release Candidate 1 (x86) (for deployment feature)

- Visual Studio 2008 SP1 (for development feature)

Recently, the PDC 2008 conference showcased the roadmap of Microsoft technologies, focusing on end-to-end solutions driven by metadata and hosted virtually anywhere. This approach requires many vertical and horizontal changes in how applications are architected, and a qualitatively different way of thinking about development, one that focuses on "what" to do rather than "how" to do it. This is a very important step for Microsoft technologies, comparable to the introduction of .NET managed code.

We can see Microsoft's goal on this road: offering technologies that map a business model onto a physical model through a more declarative than imperative implementation. Decomposing a business model into small business activities (code units) and orchestrating them in a metadata-driven model enables us to isolate the business from the technologies and infrastructure. At PDC 2008, Microsoft introduced the xaml stack as a common declaration of the metadata-driven model for runtime projection. With the xaml stack, a component can be projected at runtime in the front end or the back end, depending on the hosting environment, for instance desktop, mobile, server or cloud. Declaratively packaging activities into a service enables the service to be consumed through its logical business model in a business-to-business fashion.

Services provide the logical connectivity (interoperability) for decoupling a business model in a metadata-driven distributed architecture. In this architecture, the service model must manage connectivity and service mediation based on metadata; in other words, it must be able to manage the business behind the endpoint declaratively. Note that this concept places no limitation on what is inside the service: it can be a small business model representing a simple workflow, or a full application (SaaS, Software as a Service).

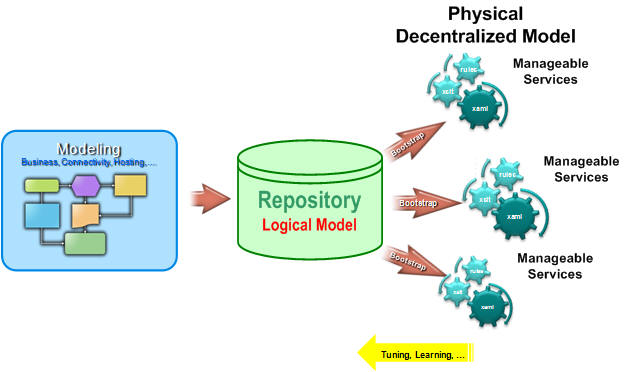

Finally, PDC 2008 showed how important the Service Model is in the next generation of Microsoft technologies, for instance the Windows Azure platform, Dublin, the Oslo modeling platform, etc. This strategy is based on modeling, storing the metadata in the Repository, and deploying the metadata for runtime projection; therefore Model-Repository-Deploy is the upcoming way of thinking for enterprise applications. The last step, Deploy, will enable us to deploy our logically centralized model into a decentralized physical model on virtually any hosting environment: a custom host, Dublin or the Windows Azure platform (cloud computing). From the service point of view, the Windows Azure platform provides a Service Bus for logical connectivity between services. This is a big challenge for these technologies and, of course, for developers, who need a different way of thinking.

The following picture shows the strategy of the Manageable Services:

The business model is decomposed into Service Models described by metadata stored in the Repository. The Repository represents a logically centralized model, which is actually a large Knowledge Base of business activities, components, connectivity, etc. This Knowledge Base is taught manually through Modeling Tools at design time, but it could also learn on the fly from other sources, for instance for tuning purposes. Depending on its capacity, we can build the next model on top of the previous one, and so on, increasing the level of knowledge in the Repository. There is also a deployment model, which allows us to deploy our knowledge base into the runtime environment. Of course, the Repository can be pre-built with some minimum common knowledge, such as WorkflowServiceModel, ServiceModel, and deployment for Dublin, Azure, etc.

One more thing: I see the Repository (as a Knowledge Base of models) as having a big future for enterprise applications. By hosting the Repository on a cloud server (for instance Windows Azure), models can be published and consumed by other models, or privately deployed, transformed into other models, etc.

An architecture enabled for manageable services allows us to decompose the business model into services and then deploy the metadata to a host environment such as the cloud, Dublin, IIS/WAS or a custom host. The following picture shows this concept:

Services deployed from the model can be connected to the Service Bus and consumed by other private contracts. From the business workflow point of view, the services represent business activities modeled at design time. Decomposing the business logic into activities kept in a business toolbox (catalog) enables us to orchestrate them within a service. The result of this strategy is a very flexible model-driven solution.

This article describes a strategy for Manageable Services driven by metadata stored in the Repository, using the leading edge of current Microsoft technologies such as .NetFX 3.5. This project started when WorkflowServices (.NetFX 3.5) was introduced, and it is described in my past articles published on CodeProject, VirtualService for ESB and Contract Model for Manageable Services. I recommend reading them for additional details. This article focuses on the Application Model (Service Model); however, it includes all parts for modeling (tooling), storing, deploying and projecting metadata.

Let's start. I have a lot of stuff to show, so I hope you are familiar with the latest Microsoft technologies, especially .NetFX 3.5.

First, let's focus on deploying metadata from the Repository to the host. Note that, from a generic point of view, the Repository holds models; these can be models of other models, stored in relational tables, etc. Based on the deployment model, the application model must be exported to the runtime as resources the runtime projector can understand. Basically, there are two kinds of deployment, depending on how the metadata is exported from the Repository.

1. Push Metadata from Repository

This scenario is based on the Local Repository of the runtime metadata, located in the hosting environment. The following picture shows this scenario:

Push deployment is the typical deployment scenario. The first step is pushing (xcopy, etc.) resources such as config, xaml (xoml/rules), xslt, etc. to a specific storage, usually the file system. This is done off-line, while the application is not running. The next step is to start the application, when the runtime projector loads the resources into the application domain, creates the CLR types and their instances, and invokes actions. During this process, the Local Repository (for instance, the file system) is logically locked for any changes, and the application domain is physically shut down when the resources change.

Push deployment simplifies the deployment process: the Local Repository is fully decoupled from the source of the metadata (the Repository); in other words, we can push the metadata from Visual Studio, from a script, etc. During this deployment, we create (cache) a runtime copy of the metadata (resources), so we have full isolation from the Modeling/Repository. That is the advantage of this scenario.
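The push step itself can be sketched in a few lines of C#. This is a minimal sketch, assuming the Repository has already exported the runtime resources as file name/content pairs; `PushDeployer` and `Push` are hypothetical names, not part of the tool described in this article, and the sketch does not handle locking or recycling of the application domain.

```csharp
using System.Collections.Generic;
using System.IO;

// Hypothetical helper: copies (xcopy-style) the runtime resources exported from
// the Repository into a folder that plays the role of the Local Repository.
public static class PushDeployer
{
    // resources: file name (e.g. "MyService.xoml") -> file content.
    // Returns the full paths of the files that were written.
    public static IList<string> Push(string targetFolder, IDictionary<string, string> resources)
    {
        Directory.CreateDirectory(targetFolder);   // does nothing if the folder exists
        var written = new List<string>();
        foreach (KeyValuePair<string, string> resource in resources)
        {
            string path = Path.Combine(targetFolder, resource.Key);
            File.WriteAllText(path, resource.Value);   // overwrites an older copy
            written.Add(path);
        }
        return written;
    }
}
```

Because the copy is made off-line, the host only ever works with its own local files; the price is that the host copy and the Repository can drift apart if someone edits the files in place.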

The PDC version of Dublin shows this capability of hosting services on IIS/WAS with the Dublin extension. We can see great features such as monitoring and managing services hosted in IIS/WAS on Windows Server 2008 or Vista/Windows 7 machines. The Dublin MMC allows making some local changes to the local copy of the metadata. I can see this being helpful for very small projects, but for enterprise applications, managing a local copy of the metadata on the production boxes without tracking, versioning and rollback is not a good approach. In addition, the local metadata is a complex resource which represents only a portion of the logical model stored in the Repository. Therefore, all changes should be made in the centralized logical model and then pushed again to the local repository. I think services should be managed from the Repository, using a Modeling Tool or something similar that takes care of the changes in the logical model, especially when the deployment targets the cloud.

OK, now let's look at the other option for deploying metadata to the host, namely pulling the runtime metadata from the Repository.

2. Pull Metadata from Repository

This scenario doesn't have a Local Repository for caching runtime metadata from the central Repository. Instead, a Bootstrap/Loader component located in the application domain pulls the runtime metadata from the Repository.

When the application process starts, the Bootstrap/Loader obtains the metadata either by publishing (broadcasting) an event message or by asking the Repository directly. The major advantage of this scenario is that services can be managed dynamically, on the fly, from the Repository, either directly or using a discovery mechanism, and the metadata can be tuned on the fly by analyzing and processing rules, etc. Consequently, there is no manageability of the service at the machine level; all changes must be made through the central repository tools. Note that this scenario was not introduced at PDC 2008.
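The direct-request variant of the pull scenario can be sketched as follows, assuming a repository service that returns the runtime resources of an application version on request. `IRuntimeRepository`, `InMemoryRepository` and `Bootstrap` are hypothetical names (the Repository service actually used in this article is LocalRepositoryService, whose contract is not shown here), and the broadcast/discovery variant is not covered.

```csharp
using System.Collections.Generic;

// Hypothetical contract of the repository service the loader talks to.
public interface IRuntimeRepository
{
    IDictionary<string, string> GetResources(string application, string version);
}

// In-memory stand-in for the central Repository, for illustration only.
public class InMemoryRepository : IRuntimeRepository
{
    private readonly Dictionary<string, IDictionary<string, string>> store =
        new Dictionary<string, IDictionary<string, string>>();

    public void Add(string application, string version, IDictionary<string, string> resources)
    {
        store[application + "/" + version] = resources;
    }

    public IDictionary<string, string> GetResources(string application, string version)
    {
        IDictionary<string, string> resources;
        if (!store.TryGetValue(application + "/" + version, out resources))
            throw new KeyNotFoundException("No metadata for " + application + " " + version);
        return resources;
    }
}

// Bootstrap/Loader living in the application domain: it asks the Repository
// for the runtime metadata at start-up, without any local file copy.
public class Bootstrap
{
    private readonly IRuntimeRepository repository;

    public Bootstrap(IRuntimeRepository repository)
    {
        this.repository = repository;
    }

    public IDictionary<string, string> Load(string application, string version)
    {
        // Take a snapshot: later changes in the Repository are picked up
        // only by an explicit reload.
        return new Dictionary<string, string>(repository.GetResources(application, version));
    }
}
```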

Now, let's focus on the metadata stored in the Repository. The concept of Manageable Services was described in detail in my previous article, Contract Model for Manageable Services. The result of service virtualization and its manageability using metadata is shown in the following picture:

As you can see, service virtualization is basically described by two models, the Contract Model and the Application Model; in other words, ServiceModel = ContractModel + ApplicationModel. These two models are logically isolated and connected via an EndpointDescription. The Contract Model is responsible for describing the ABC (Address-Binding-Contract) metadata for connectivity. The Application Model describes the service activity behind the endpoint; it doesn't need to know how it is connected to other Application Models. Therefore these two models can be created independently at design time, and only in the last modeling step, hosting, do we need to assign a physical endpoint to a specific service in the Application Model.
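To make the split concrete, here is a minimal sketch of the two sides as plain C# classes. The class and property names are assumptions for illustration only; the point is that the application side carries no connectivity information until an endpoint is assigned at the hosting step.

```csharp
using System;

// Contract Model side (hypothetical): the ABC of an endpoint.
public class EndpointDescription
{
    public string Address { get; set; }
    public string Binding { get; set; }
    public string Contract { get; set; }
}

// Application Model side (hypothetical): what runs behind the endpoint.
public class ApplicationService
{
    public string Name { get; set; }
    public string Xoml { get; set; }
    public EndpointDescription Endpoint { get; private set; }

    // The only link between the two models, made in the last modeling step.
    public void AssignEndpoint(EndpointDescription endpoint)
    {
        if (endpoint == null) throw new ArgumentNullException("endpoint");
        Endpoint = endpoint;
    }

    // A service can be hosted once it has both a workflow and an endpoint.
    public bool IsHostable
    {
        get { return Xoml != null && Endpoint != null; }
    }
}
```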

The Service Model allows full mediation of connectivity, messaging, MEP (Message Exchange Pattern), message transformation, service orchestration, etc., and the results are stored in SQL Server relational tables for later editing or deployment. The service mediation features are limited by the .NetFX 3.5 technologies, specifically the WCF and WF programming models (WorkflowServices), which are used to implement the Manageable Services. .NetFX 3.5 integrates these two models at runtime as WorkflowServices, but each model still needs its own metadata, for example config, xoml and rules files. In the upcoming version of .NetFX (4.x), we will have only two resources, config and xaml, for projecting a WorkflowService (or Service) into runtime CLR types.

The following picture shows the concept of metadata-driven services, where a Design Tool is responsible for creating the metadata in the Repository, and a Deploy Tool packages the metadata for runtime projection in a specific host such as IIS/WAS (or a custom host). Note that this article implements the metadata deployment option only for IIS/WAS. Of course, you can also deploy it to Dublin (PDC version) side by side with .NetFX 4.0; in that case, the Dublin features are limited.

What about Azure? At this time (PDC version), we cannot deploy any custom activities, including a root activity. There is a predefined set of Cloud Activities for workflow orchestration in the cloud. We will see more about this at the PDC 2009 conference.

As you can see in the above picture, the complexity of the Service Model is "coded" by the Design Tool, which can be very sophisticated or as simple as an MMC console. This article includes a solution for modeling services using an MMC tool that I built for the Contract and Application Models. Using the tool at this level requires some knowledge of WCF/WF, xslt and xml technologies, such as bindings, contracts, workflow activities, xpath, etc.

In summary, the Manageable Services represented by the Contract and Application Models are stored in the centralized knowledge base known as Repository and from there they can be deployed to the physical servers for their runtime projecting and running.

This Model First approach requires a design tool to generate model metadata efficiently. Our modeling productivity depends on how smart our design tool is at "cooking" our models. Initially, the Repository's knowledge base is obviously empty, and we have to teach it the connectivity and business activities modeled in the services. Each Service Model can be used as a template for other models.

OK, let's continue a little differently in the next section of the article, because the goal is to present the modeling of services, the Repository and the Tool, rather than how they are implemented. I will also describe some interesting parts from the design and implementation point of view; please see the Design and Implementation paragraph.

The Repository is the central storage of the Manageable Services described by the Contract and Application Models. This article describes only the Application Model. The following picture shows its schema as SQL Server tables:

The core of the Application Model is the Service schema. There are five important resources in this schema: config, xoml, rules, xslt and wsdl. All these resources are stored in their runtime format, understandable by the projector, so no custom provider is needed for the deployment process. This is possible thanks to the Workflow Designer support, where the result of the orchestration is exported as xoml/rules resources. The last two resources, xslt and wsdl, are additional: the xslt resource is for message mediation and the wsdl resource is for the metadata exchange operation. Both are optional.

Note that the wsdl resource is included automatically when an endpoint from the Contract Model is assigned to the Service and the contract is untyped.

Each Service can be assigned to an AppDomain, and each AppDomain can be assigned to an Application, which is the highest level in the Application Model. The model supports resource versioning. The Application resource also represents the entry point for the deployment scenario. Note that this article does not include the GroupOfAssemblies and Assemblies schemas for managing custom assemblies in the Application Model.
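A minimal in-memory mirror of this hierarchy might look like the sketch below. The class and member names are hypothetical, versioning is reduced to a version string, and treating config, xoml and rules as required is an assumption that follows from xslt and wsdl being described as the optional resources.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical in-memory mirror of the Application Model tables:
// Application -> AppDomain -> Service -> resources.
public class ServiceEntry
{
    // Assumption: the three resources the runtime projector cannot do without.
    static readonly string[] RequiredKinds = { "config", "xoml", "rules" };

    public string Name { get; set; }
    public string Version { get; set; }
    // Resource kind ("config", "xoml", "rules", "xslt", "wsdl") -> content.
    public readonly Dictionary<string, string> Resources = new Dictionary<string, string>();

    // xslt and wsdl are optional, so they are never reported as missing.
    public IEnumerable<string> MissingResources()
    {
        return RequiredKinds.Where(kind => !Resources.ContainsKey(kind));
    }
}

public class AppDomainEntry
{
    public string Name { get; set; }
    public readonly List<ServiceEntry> Services = new List<ServiceEntry>();
}

public class ApplicationEntry
{
    public string Name { get; set; }
    public string Version { get; set; }
    public readonly List<AppDomainEntry> Domains = new List<AppDomainEntry>();

    // The Application is the deployment entry point: collect every service it contains.
    public IEnumerable<ServiceEntry> AllServices()
    {
        return Domains.SelectMany(domain => domain.Services);
    }
}
```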

As you can see, the above schema is very straightforward, and it can be transformed to another modeling platform (for instance Oslo). This migration process basically depends on the xoml/rules resources, for example the workflow migration from WF 3.5 to 4.0, the workflow complexity, etc.

As I mentioned earlier, the metadata from the Repository can be pushed or pulled for runtime projection. For pulling, the Repository exposes a service, named LocalRepositoryService, that gets the resources from storage.

Now it is time to show the Design Tool (Metadata Tooling) for generating data into the SQL tables.

The design tool for Manageable Services is implemented as an MMC snap-in, where the central pane is dedicated to a specific user control. The left and right panes handle the scope nodes and their actions. The following picture shows two panes of the MMC, the scope nodes and the user control for the Workflow Designer:

The details of the scope nodes are shown in the following screen snippet:

As you can see, there are two models of the ManageableServices, Contract and Application, located in the left pane. The first one is for modeling the endpoints and the second one is for the service model and service hosting. This example shows an Application Test and a domain abc with 3 Echo services. One of the Echo services is a router for versioning the Echo services located in the same domain. I will describe this service in more detail later.

By selecting a specific scope node, we can get a user control for a specific action in the central pane. For example, the following picture shows a list of all services when we click on the scope node Services.

The first step of the tooling is to create a Service. By selecting the Services scope node we can get a choice for creating a new service.

Add New Service

The first page of the Service Creator asks for the IsTemplate attribute. If it is checked, the service can be used for creating other templates, deleting existing ones, etc.

Click Next to populate the service properties, such as Name, Topic, etc. Note that this article supports only XomlWorkflowService authoring.

Click Next to select a template for the new service. In this example, EmptyIntegrator has been selected, which is a basic template for service orchestration. This template has only one activity, for receiving a message in the request/response manner.

Click Next, and the last page of the Service Creator Wizard appears, summarizing all information about the new service. After clicking Finish, the wizard creates the metadata for this service template in the Repository.

The following screen snippet shows the result of adding the new service:

As you can see, MyService is in the Test topic group. The central pane shows a Workflow Designer for service mediation. All attributes should be related to the name of the service, MyService. The Microsoft Workflow Designer has been embedded into the MMC snap-in with some modifications, for instance using XmlNotepad 2007 for the xoml resource. This designer is responsible for creating the xoml and rules resources in the same way as when it is hosted in Visual Studio.

As I mentioned earlier, the Manageable Services are implemented using the WorkflowServices paradigm from .NetFX 3.5. The following code snippet shows the xoml resource created from the basic template in the above example, MyService. Namespace declarations are omitted for clarity.

```xml
<ns0:WorkflowIntegrator x:Name="MyService" Topic="Test" Version="1.0.0.0"
    MessageRequest="{x:Null}"
    MessageResponse="{x:Null}"
    ContextForward="InOut">
  <ns1:ReceiveActivity.WorkflowServiceAttributes>
    <ns1:WorkflowServiceAttributes Name="MyService"
        ValidateMustUnderstand="False"
        ConfigurationName="MyService"/>
  </ns1:ReceiveActivity.WorkflowServiceAttributes>
  <ns1:ReceiveActivity x:Name="receiveActivity1"
      CanCreateInstance="True">
    <ns1:ReceiveActivity.ServiceOperationInfo>
      <ns1:TypedOperationInfo Name="ProcessMessage"
          ContractType="{x:Type p7:IGenericContract}"/>
    </ns1:ReceiveActivity.ServiceOperationInfo>
    <ns1:ReceiveActivity.ParameterBindings>
      <WorkflowParameterBinding ParameterName="(ReturnValue)">
        <WorkflowParameterBinding.Value>
          <ActivityBind Name="MyService" Path="MessageResponse"/>
        </WorkflowParameterBinding.Value>
      </WorkflowParameterBinding>
      <WorkflowParameterBinding ParameterName="message">
        <WorkflowParameterBinding.Value>
          <ActivityBind Name="MyService" Path="MessageRequest"/>
        </WorkflowParameterBinding.Value>
      </WorkflowParameterBinding>
    </ns1:ReceiveActivity.ParameterBindings>
  </ns1:ReceiveActivity>
</ns0:WorkflowIntegrator>
```

The workflow (root) activity is customized by the WorkflowIntegrator custom activity (derived from SequentialWorkflowActivity), which holds service info such as Topic and Version. The workflow Name and ConfigurationName must be the same as the service name. In addition, there are dependency properties for the Request/Response messages, for binding purposes within the scope of the workflow. The last property is ContextForward, a configuration option for passing the context header through or cleaning it up. Its default value is InOut.

Note that the above xoml resource represents the minimum (required) metadata for receiving a message in the Request/Response message exchange pattern. This metadata is generated automatically by the Design Tool, but when xoml from another source is dragged and dropped directly onto the XmlNotepad control, the root activity must be a WorkflowIntegrator activity.
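The naming rules above (WorkflowIntegrator root, x:Name, Name and ConfigurationName all equal to the service name) are easy to check before a dropped xoml is accepted. This is a sketch using LINQ to XML; `XomlChecks` is a hypothetical name, and because the snippet above omits its namespace declarations, elements are matched by local name, with only the standard XAML namespace for `x:` assumed.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Xml.Linq;

public static class XomlChecks
{
    // Standard XAML namespace used for x:Name in xoml files.
    static readonly XNamespace X = "http://schemas.microsoft.com/winfx/2006/xaml";

    // Returns the problems found; an empty array means the xoml meets
    // the naming rules for the given service name.
    public static string[] Validate(string xoml, string serviceName)
    {
        XElement root = XElement.Parse(xoml);
        var problems = new List<string>();

        if (root.Name.LocalName != "WorkflowIntegrator")
            problems.Add("root activity must be WorkflowIntegrator");
        if ((string)root.Attribute(X + "Name") != serviceName)
            problems.Add("x:Name must equal the service name");

        // Matches <ns1:WorkflowServiceAttributes .../>, not the
        // <ns1:ReceiveActivity.WorkflowServiceAttributes> property element.
        XElement attributes = root.Descendants()
            .FirstOrDefault(e => e.Name.LocalName == "WorkflowServiceAttributes");
        if (attributes == null
            || (string)attributes.Attribute("Name") != serviceName
            || (string)attributes.Attribute("ConfigurationName") != serviceName)
            problems.Add("WorkflowServiceAttributes Name and ConfigurationName must equal the service name");

        return problems.ToArray();
    }
}
```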

OK, now we are ready for service mediation, in other words, orchestrating a service using activities from the Workflow Toolbox.

Basically, service mediation intercepts and modifies messages within the service. A mediation-enabled service allows a logical business model to be decoupled, in a loosely coupled manner, into business services. In this chapter I will focus on mediation of the service message in the Manageable Services, represented by System.ServiceModel.Channels.Message. Depending on the MessageVersion, it is a composition of the business part (known as the payload or operation message) and a group of additional context information known as headers. The headers and payload are physically isolated at the transport level, which allows the headers to be deserialized from the network stream separately from the business part. In other words, the service message can be inspected by its headers immediately, without deserializing (consuming) its payload. For instance, a service router can re-route the message, based on the context information in the message headers, to the appropriate consumer of the business part without any knowledge of the business body.
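Header-only inspection can be illustrated with a forward-only `XmlReader` that stops as soon as it reaches the Body. In a real WCF service, `Message.Headers.Action` already exposes this value; the sketch below only shows the principle on a raw SOAP 1.2 envelope with WS-Addressing 1.0 headers, and `HeaderPeek` is a hypothetical name.

```csharp
using System.IO;
using System.Xml;

public static class HeaderPeek
{
    const string Soap12 = "http://www.w3.org/2003/05/soap-envelope";
    const string Wsa10 = "http://www.w3.org/2005/08/addressing";

    // Returns the wsa:Action header value, or null if there is none. Reading
    // stops at the start of the Body element, so the payload is never parsed.
    public static string ReadAction(TextReader envelope)
    {
        using (XmlReader reader = XmlReader.Create(envelope))
        {
            while (reader.Read())
            {
                if (reader.NodeType != XmlNodeType.Element)
                    continue;
                if (reader.LocalName == "Body" && reader.NamespaceURI == Soap12)
                    return null;
                if (reader.LocalName == "Action" && reader.NamespaceURI == Wsa10)
                    return reader.ReadElementContentAsString().Trim();
            }
        }
        return null;
    }
}
```

A router built this way decides on the target from the header alone, which is the same reason the mediation primitives below try to avoid touching the payload.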

Based on the above, we can see that the headers can easily be mediated by mediation primitives driven by well-known CLR types. The other part of the message, the payload, requires loosely coupled mediation primitives, which need to use technologies such as xpath and xslt.

The xpath mediation primitives use xpath 1.0 expressions to identify one or more fields in a message for filtering or selecting based on their values. Let's look at the following XPathIfElse and XPathInspector custom activities (mediation primitives).

Notice that the default message contract for a Manageable Service is untyped, which means that the service receives a raw message with its headers serialized; the payload, however, is not consumed.

XPathIfElse Activity

The XPathIfElse activity is a custom activity modeled on the Microsoft IfElseActivity, which works with CLR type primitives. The difference is in the condition expression, where an xpath expression is used instead of a CLR type expression. The parent XPathIfElse activity is bound to the service message for inspection by each branch activity, XPathIfElseBranch. Each branch has its own xpath expression for evaluation on the service message. Note that the branch works with a copy of the service message. For performance reasons, the XPathIfElseBranch activity has a MatchElement property that selects the message element the xpath expression is evaluated on. Later you will see how we minimize the performance hit of deserializing the message body. Note that the XPathIfElse activity does not change the message contents; it is a passive mediation primitive.
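The branch selection of XPathIfElse can be approximated with `System.Xml.XPath`: evaluate each branch condition in order on a read-only copy of the message and take the first one that is true. This is a sketch, not the activity's implementation; `XPathBranch` and `XPathIfElseSketch` are hypothetical, and the namespace dictionary stands in for the branch's Namespaces collection.

```csharp
using System.Collections.Generic;
using System.IO;
using System.Xml;
using System.Xml.XPath;

// Hypothetical stand-in for an XPathIfElseBranch: a name, an XPath 1.0
// condition and the extra namespaces the condition needs.
public class XPathBranch
{
    public string Name;
    public string XPath;
    public Dictionary<string, string> Namespaces = new Dictionary<string, string>();
}

public static class XPathIfElseSketch
{
    // Returns the name of the first branch whose condition is true, or null
    // if none matches (IfElse semantics). The message is parsed into a
    // read-only XPathDocument, so it is never modified.
    public static string SelectBranch(string messageXml, IEnumerable<XPathBranch> branches)
    {
        XPathNavigator nav = new XPathDocument(new StringReader(messageXml)).CreateNavigator();
        foreach (XPathBranch branch in branches)
        {
            var ns = new XmlNamespaceManager(nav.NameTable);
            foreach (KeyValuePair<string, string> pair in branch.Namespaces)
                ns.AddNamespace(pair.Key, pair.Value);
            // boolean() applies the XPath 1.0 conversion rules, so a node-set
            // condition is true when it selects at least one node.
            if ((bool)nav.Evaluate("boolean(" + branch.XPath + ")", ns))
                return branch.Name;
        }
        return null;
    }
}
```

For a versioning router, one branch might test `/s:Envelope/s:Body/o:Order[@version='1000']` and another the same path with `'1001'`, with `s` and `o` registered in each branch's namespace dictionary (the `Order` element and `o` prefix are illustrative only).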

The following picture is a screen snippet of the XPathIfElse custom activity, showing its property grids:

The MatchElement is an enum type with the following options:

```csharp
public enum MatchElementType
{
    None,
    Root,
    Header,
    Body,
    Action,
    EnvelopeVersion,
    IsFault,
    IdentityClaimType
}
```

For example, if the branch condition needs to be evaluated only for a specific Action, then selecting Action for the MatchElement and typing the actual Action value in the XPath property will minimize the performance hit in this mediation process.
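A sketch of how a MatchElement value could narrow the work per branch: Action is a plain string comparison, Header and Body evaluate the expression relative to that element only, and Root evaluates it on the whole envelope. The other enum values are not handled, the enum is repeated so the block compiles on its own, `MatchElementEvaluator` is a hypothetical name, and only the `s` prefix (SOAP 1.2) is registered.

```csharp
using System;
using System.Xml;
using System.Xml.XPath;

// Same values as the MatchElementType enum above, repeated so the sketch compiles on its own.
public enum MatchElementType { None, Root, Header, Body, Action, EnvelopeVersion, IsFault, IdentityClaimType }

public static class MatchElementEvaluator
{
    const string Soap12 = "http://www.w3.org/2003/05/soap-envelope";

    static XmlNamespaceManager Resolver(XPathNavigator nav)
    {
        var ns = new XmlNamespaceManager(nav.NameTable);
        ns.AddNamespace("s", Soap12);
        return ns;
    }

    // action: value of the Action header, available without reading the body.
    // envelope: navigator over a copy of the message (not used for Action).
    public static bool Evaluate(MatchElementType match, string action, XPathNavigator envelope, string xpath)
    {
        switch (match)
        {
            case MatchElementType.Action:
                // Plain string comparison: no XPath and no access to the payload.
                return string.Equals(action, xpath, StringComparison.Ordinal);
            case MatchElementType.Header:
            case MatchElementType.Body:
            {
                XmlNamespaceManager ns = Resolver(envelope);
                string part = match == MatchElementType.Header ? "s:Header" : "s:Body";
                // The expression is evaluated relative to the selected element only.
                XPathNavigator scope = envelope.SelectSingleNode("/s:Envelope/" + part, ns);
                return scope != null && (bool)scope.Evaluate("boolean(" + xpath + ")", ns);
            }
            case MatchElementType.Root:
                return (bool)envelope.Evaluate("boolean(" + xpath + ")", Resolver(envelope));
            default:
                throw new NotSupportedException("MatchElement " + match + " is not handled in this sketch");
        }
    }
}
```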

The XPathEditor can help to find a specific xpath expression in the service message. This editor has built-in interactive validation while typing the xpath expression. It is based on known information about the message version and schema. The version included with this article is limited to the MessageVersion and to manually dropping elements onto the XmlNotepad control. The full version allows selecting the schema from the Contract Model, exposing all its types in a combo box and then inserting them into the message.

The XPathEditor has a tab for inserting or editing namespaces when the XPath expression needs extra namespaces. This collection of namespaces is stored in the hidden XPathIfElseBranch.Namespaces property.

The following picture is a screen snippet of the XPathEditor, which can also be opened by double clicking on the XPathIfElseBranch activity:

XPathInspector Activity

The XPathInspector is a custom activity to inspect fields and/or values of the service message. There is an enum property to specify the scope of the inspection to minimize the performance hit. The result of the Boolean expression can be bound to other activities, for instance an IfElseActivity.

To type an XPath expression, the XPathEditor can be used in the same way as described in the above section.

TransformMessage Activity

The TransformMessage activity can also be used in the message flow to extract some business parts, creating a new root element with MessageVersion.None for internal usage within the service. This scenario focuses on performance, minimizing the number of message copies required.

The following example shows the usage of the TransformMessage and XPathIfElse activities to optimize performance during message mediation:

The above service mediation transforms the received message into a small complex element based on the internal service schema, for simple and fast evaluation in the following activity with more branches. In this solution, only the TransformMessage activity consumes a copy of the incoming message, instead of each branch activity consuming its own copy. When a branch has been selected, the original message is passed on for business processing. In the above example, the message is sent to a specific service. Note that there is no MessageVersion for the output/input message between the mediators.
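The same pattern can be sketched with `XslCompiledTransform`: one transformation reduces the envelope to a small internal element, and the branches test that element instead of the full message. The stylesheet, the `route` element, its `version` attribute and the branch names are all hypothetical.

```csharp
using System.IO;
using System.Xml;
using System.Xml.XPath;
using System.Xml.Xsl;

public static class TransformMessageSketch
{
    // Hypothetical xslt: reduces a SOAP 1.2 envelope to a small internal element
    // that carries only what the routing branches need.
    public const string RouteXslt =
@"<xsl:stylesheet version='1.0'
    xmlns:xsl='http://www.w3.org/1999/XSL/Transform'
    xmlns:s='http://www.w3.org/2003/05/soap-envelope'
    exclude-result-prefixes='s'>
  <xsl:template match='/'>
    <route version='{/s:Envelope/s:Body/*/@version}'/>
  </xsl:template>
</xsl:stylesheet>";

    // Applies an xslt to an xml string and returns the result as a string.
    public static string Transform(string xslt, string inputXml)
    {
        var transform = new XslCompiledTransform();
        using (XmlReader stylesheet = XmlReader.Create(new StringReader(xslt)))
            transform.Load(stylesheet);
        var output = new StringWriter();
        var settings = new XmlWriterSettings { OmitXmlDeclaration = true };
        using (XmlReader input = XmlReader.Create(new StringReader(inputXml)))
        using (XmlWriter writer = XmlWriter.Create(output, settings))
            transform.Transform(input, writer);
        return output.ToString();
    }

    // One transformation of the message copy; the branches then test the
    // small element instead of parsing the full message again.
    public static string SelectBranch(string envelope)
    {
        string route = Transform(RouteXslt, envelope);
        XPathNavigator nav = new XPathDocument(new StringReader(route)).CreateNavigator();
        string version = (string)nav.Evaluate("string(/route/@version)");
        if (version == "1000") return "V1000";
        if (version == "1001") return "V1001";
        return "Default";   // hypothetical fallback branch
    }
}
```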

Let's look at another example of the TransformMessage activity. In this example, the branch V1000 transforms the received message contract for its specific target service before and after the ProcessMessage activity.

As you can see, TransformMessage is a very powerful activity. How fast a correct xslt resource can be produced depends on the Design Tool. A 3rd party tool is recommended for more complex transformations, for example one from Altova, to generate the xslt resource, which can then be dropped onto the XmlNotepad control.

CreateMessage Activity

The CreateMessage is a custom activity built as a mediation primitive for creating a message based on the xslt metadata and MessageVersion. The following picture shows its screen snippet, including the property grid:

All properties in the above activity have the same features as in TransformMessage. Note that there is no MessageInput in CreateMessage, because it is a creator, not a consumer, of the message.

The following is an example of using this custom activity for broadcasting an event message:

After processing the received message, the service generates a notification message created by the mediation primitive in the CreateMessage custom activity.
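Creating a message from xslt alone can be sketched by applying a stylesheet with parameters to a dummy input document, since there is no received message to consume. The stylesheet, the `urn:example:events` namespace, the `Processed` element and the parameter names are hypothetical.

```csharp
using System.IO;
using System.Xml;
using System.Xml.Xsl;

public static class CreateMessageSketch
{
    // Hypothetical xslt for a notification event. Its input is a dummy
    // document: the message is created, not consumed.
    const string NotificationXslt =
@"<xsl:stylesheet version='1.0' xmlns:xsl='http://www.w3.org/1999/XSL/Transform'>
  <xsl:param name='service'/>
  <xsl:param name='status'/>
  <xsl:template match='/'>
    <s:Envelope xmlns:s='http://www.w3.org/2003/05/soap-envelope'>
      <s:Header/>
      <s:Body>
        <Processed xmlns='urn:example:events' service='{$service}' status='{$status}'/>
      </s:Body>
    </s:Envelope>
  </xsl:template>
</xsl:stylesheet>";

    public static string Create(string service, string status)
    {
        var transform = new XslCompiledTransform();
        using (XmlReader stylesheet = XmlReader.Create(new StringReader(NotificationXslt)))
            transform.Load(stylesheet);

        // Values that vary per message are passed as xslt parameters.
        var args = new XsltArgumentList();
        args.AddParam("service", "", service);
        args.AddParam("status", "", status);

        var output = new StringWriter();
        using (XmlReader dummy = XmlReader.Create(new StringReader("<empty/>")))
        using (XmlWriter writer = XmlWriter.Create(output, new XmlWriterSettings { OmitXmlDeclaration = true }))
            transform.Transform(dummy, args, writer);
        return output.ToString();
    }
}
```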

CopyMessage Activity

The CopyMessage is a custom activity that produces a copy of the message for later consumption. The following screen snippet shows its icon and properties:

TraceWrite Activity

The TraceWrite is a custom activity for diagnostic purposes. The message and formatted text are written to the debug output device, for instance DebugView for Windows.

In this chapter I focused on service mediation behind the endpoint, in other words, after the message is received. This declarative mediation uses mediation primitives implemented as custom activities handled by the WF programming model.

WorkflowServices is an integrated model of the WCF and WF models, so a service can also be mediated at the endpoint layer (model). This mediation has been described in detail in my previous article VirtualService for ESB; the following code snippet shows this feature:

```xml
<endpointBehaviors>
  <behavior name='xpathAddressFilter'>
    <filter xpath="/s12:Envelope/s12:Header/wsa10:To[contains( ... )]" />
  </behavior>
</endpointBehaviors>
```

By injecting an address filter into the endpoint behavior pipeline, we can mediate a service at the front level, for example rejecting an incoming message, re-routing a message to another service, etc. This low-level mediation is implemented by the mediation primitive ESB.FilteringEndpointBehaviorExtension using an XPath 1.0 expression. Before using this feature, the mediation primitive must be added to the extensions, as shown in the following part of the service config file:

```xml
<extensions>
  <behaviorExtensions>
    <add name='filter'
         type='ESB.FilteringEndpointBehaviorExtension, ESB.Core, Version=1.0.0.0, Culture=neutral, PublicKeyToken=null'/>
  </behaviorExtensions>
</extensions>
```

Note that this article doesn't include a visual designer for this kind of mediation; it must be edited manually in the XmlNotepad control for the config metadata.
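The filter's XPath is evaluated against the whole envelope, headers included. The sketch below evaluates such an expression with the `s12` and `wsa10` prefixes bound to SOAP 1.2 and WS-Addressing 1.0; it does not reproduce ESB.FilteringEndpointBehaviorExtension, and `AddressFilterSketch` is a hypothetical name.

```csharp
using System.IO;
using System.Xml;
using System.Xml.XPath;

public static class AddressFilterSketch
{
    // Returns true when the filter expression accepts the envelope. The prefixes
    // used in the config snippet are bound here to SOAP 1.2 and WS-Addressing 1.0.
    public static bool Accepts(string envelopeXml, string filterXPath)
    {
        XPathNavigator nav = new XPathDocument(new StringReader(envelopeXml)).CreateNavigator();
        var ns = new XmlNamespaceManager(nav.NameTable);
        ns.AddNamespace("s12", "http://www.w3.org/2003/05/soap-envelope");
        ns.AddNamespace("wsa10", "http://www.w3.org/2005/08/addressing");
        return (bool)nav.Evaluate("boolean(" + filterXPath + ")", ns);
    }
}
```

For example, a hypothetical filter `/s12:Envelope/s12:Header/wsa10:To[contains(., '/echo')]` accepts only messages addressed to a URL containing `/echo`; the actual predicate in the config above is elided and is not reproduced here.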

The Metadata Exchange (MEX) endpoint is a special endpoint contract in the WCF programming model for exporting the metadata that describes service connectivity. This document is built at runtime, based on the ServiceEndpoint description of each typed contract. In other words, if the contract is untyped (Action = "*"), there is no way to build metadata for the generic contract on the fly.

For example, let's assume we have a versioning router service like the one shown earlier in the Service Mediation section. The versions consumed by the V1000 and V1001 branches are different, but their endpoint is the same. Therefore, the router endpoint cannot have a MEX feature, so the request is forwarded to the actual service for processing. That's fine, but what if the target service endpoint is also untyped (generic contract) because it is a mediation-enabled service?

Well, one solution is to prepare the MEX document in the Contract Model and deliver it at runtime through the Application (Service) Model. In other words, at design time we can create the MEX document in the Contract-First fashion, store it in the Repository and then deploy it to the runtime projector, in case a consumer asks a physical endpoint for its MEX document.

WSRT_Mex Activity

The WSRT_Mex is a custom activity for responding request for MEX document in the WS-Transfer fashion. The following screen snippet shows its icon and property grid:

Based on the Wsdl property, the MEX document can be obtained by Repository or locally from File System. When service is hosted on the IIS/WAS, this resource will be located within the site together with others resources such as config, xoml, rules, xslt and svc.

Usage of the WSRT_Mex custom activity is straightforward. The following picture shows an example of some business service (Imaging), where MEX has own branch for processing WS-Transfer Get Request:

The operation evaluates the Action header with the following expression to decide whether to process the MEX operation:

```csharp
System.ServiceModel.OperationContext.Current.IncomingMessageHeaders.Action ==
    "http://schemas.xmlsoap.org/ws/2004/09/transfer/Get"
```

Note that no MEX endpoint is required in the service configuration. From the incoming message's point of view, the MEX operation message is processed transparently, like any other action. It is the responsibility of the service mediation to provide this feature at the physical endpoint.
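As a rough, stand-alone illustration of that Action test, the following sketch picks a processing branch from the incoming Action string. It assumes the action is passed in as a plain string rather than read from OperationContext.Current.IncomingMessageHeaders.Action, so it runs without WCF; the class name MexDispatch and the branch names "mex" and "business" are hypothetical.

```csharp
using System;

public static class MexDispatch
{
    // WS-Transfer Get action URI, as used in the expression above.
    public const string WsTransferGet = "http://schemas.xmlsoap.org/ws/2004/09/transfer/Get";

    // True when the incoming Action identifies a request for the metadata document.
    public static bool IsMexRequest(string action)
    {
        return string.Equals(action, WsTransferGet, StringComparison.Ordinal);
    }

    // Chooses the branch that processes the message (hypothetical branch names).
    public static string SelectBranch(string action)
    {
        if (action == null)
            throw new ArgumentNullException("action");
        return IsMexRequest(action) ? "mex" : "business";
    }
}
```

Every other action falls through to the business branch, which mirrors the point above: a MEX request is just one more action that the mediation recognizes.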

Another, preferred way to get the MEX document is to ask the Repository. The Repository knows all the details about the models in advance, before deployment; therefore, the physical service doesn't need to be online (deployed and running) to provide this information.
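The lookup order described here can be sketched as "Repository first, local file second". This is a minimal sketch under stated assumptions: the Repository is represented by a plain query delegate, and the local copy is assumed to be a "<key>.wsdl" file in the site folder. MexDocumentResolver and that file-naming rule are hypothetical, not the WSRT_Mex implementation.

```csharp
using System;
using System.IO;

// Hypothetical resolver for a service's MEX (WSDL) document.
public sealed class MexDocumentResolver
{
    // Hypothetical Repository query: returns the stored WSDL for a key, or null when unknown.
    private readonly Func<string, string> queryRepository;
    // Hypothetical local folder holding the site resources (config, xoml, rules, xslt, svc, wsdl).
    private readonly string localFolder;

    public MexDocumentResolver(Func<string, string> queryRepository, string localFolder)
    {
        this.queryRepository = queryRepository;
        this.localFolder = localFolder;
    }

    // Prefers the Repository, which knows the models before deployment, then falls back
    // to "<key>.wsdl" in the local folder. Returns null when neither source has the document.
    public string Resolve(string key)
    {
        if (queryRepository != null)
        {
            string fromRepository = queryRepository(key);
            if (fromRepository != null)
                return fromRepository;
        }
        if (localFolder == null)
            return null;
        string path = Path.Combine(localFolder, key + ".wsdl");
        return File.Exists(path) ? File.ReadAllText(path) : null;
    }
}
```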

The following picture shows the Microsoft WCF Test Client utility dynamically creating a proxy that calls the Repository for the MEX document:

As you can see, the Echo service had already been added before asking for it again. Access to the Repository service is configurable in the config file (in our example, net.pipe://localhost/repository/mex?).

One last comment about the Repository and MEX. Discovering a MEX document from the Repository, where the logically centralized business models are located, is very powerful and opens up more challenges in metadata/model driven architecture. The recent PDC 2008 conference introduced Microsoft technologies for cloud computing and the Windows Azure Platform. What about having the Repository in the Cloud to exchange endpoint MEX documents and other service metadata models? Think about that, and try to figure out the answer yourself.

As I mentioned earlier, the Application Model can be pushed or pulled to the runtime projector hosted on physical machines. The Design Tool has a built-in capability to deploy a specific Application version. This option is available only for IIS/WAS hosting.

The deployment process has two steps. In the first step, a deployment package for the selected Application is created and stored in the Repository. In the following example we can see the Application.Test (1.0.0.0) package:

Clicking the Create button creates a new package and stores it in the Repository. The user control shows the content of the stored package, organized physically as it will be hosted in the IIS/WAS site.

The next step is to install this package on the hosting server. When you click the Install button, the following dialog asks for some information such as the name of the server, site, ...

Clicking the Deploy button installs the package on the specified server in the physical path and creates a virtual directory for this Application. Note that this article version has some limitations: only localhost and the Default Web Site are supported.

After a successful deployment, the Manageable Services in the deployed Application are ready for use. Any changes in the Application or Contract Model must be stored in the Repository, then refreshed and redeployed.

This article includes an msi file for installing the Design Tool and the Repository. This gives you a fast start with a minimum of installation steps, compared to building the solution from the source code package (compiling, installing the MMC snap-ins and the Windows service, creating the database, etc.). Note that this article version supports installing the Repository on your local machine only.

The installation process is divided into two steps:

- Installation of the Design Tools (MMC snap-ins)

- Installation of the Repository

Let's go through the following steps; note that you should run this process as Administrator:

- Download the included msi file

- Open this file

- Click the Next button on all pages

- Click the Close button on the Installation Complete page to exit the installation process

After that, all components are installed in the folder C:\Program Files\RomanKiss\ManageableServices\ and are ready to manage metadata in the Repository. However, the first run will fail, because creating the Repository is not part of the msi installation. This process is invoked manually, using the scripts located in the Repository subfolder. The main reason is to avoid an accidental automatic installation without first backing up your Repository.

Therefore, the next part of the installation is creating the Repository:

- Open the cmd console as Administrator

- Change directory to C:\Program Files\RomanKiss\ManageableServices\Repository

- Open the setup.bat file to create and load the Repository

- (Open the cleanup.bat file to delete the Repository) Use this step to clean up the Repository before creating a new one from scratch

The setup/cleanup batch files use a predefined database name for the Repository, LocalRepository, on the local SQLEXPRESS server. If you plan to change it, this is the place to do so.

There is one more place: the Windows service config file needs to be changed as well. Alternatively, you can stop the LocalRepositoryService, type your Repository name in the Start parameters text box, and start the service again.

Note that the WF SQL scripts are not part of this installation. The following connectionString is used in the config resource:

Data Source=localhost\sqlexpress;Initial Catalog=PersistenceStore;Integrated Security=True;Pooling=False
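If you use different database names, the part of such a connection string that changes is the Initial Catalog. The sketch below swaps the catalog in an existing connection string while keeping the other keys. It relies only on the standard System.Data.Common.DbConnectionStringBuilder class; the helper names WithCatalog and GetValue are hypothetical and not part of the tool.

```csharp
using System;
using System.Data.Common;

public static class ConnectionStrings
{
    // Returns a copy of the connection string with Initial Catalog replaced; other keys are kept.
    public static string WithCatalog(string connectionString, string catalog)
    {
        if (string.IsNullOrEmpty(catalog))
            throw new ArgumentException("A catalog name is required.", "catalog");
        var builder = new DbConnectionStringBuilder();
        builder.ConnectionString = connectionString;
        builder["Initial Catalog"] = catalog;
        return builder.ConnectionString;
    }

    // Reads one key (case-insensitive) from a connection string; null when the key is absent.
    public static string GetValue(string connectionString, string key)
    {
        var builder = new DbConnectionStringBuilder();
        builder.ConnectionString = connectionString;
        object value;
        return builder.TryGetValue(key, out value) ? Convert.ToString(value) : null;
    }
}
```

Going through the builder instead of string replacement keeps quoting and key matching consistent with how ADO.NET parses the string.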

That's all for the installation. Now you can find the Manageable Services icon on your desktop and open it. For each MMC snap-in, you will be prompted with the ConnectionToLocalRepository dialog.

For the default installation, click the OK button, and you are ready to design models for Manageable Services.

I recommend playing with the Contract and Application scope nodes, their actions, etc., just to see the features. Don't worry about making a mess in the Repository; it can easily be cleaned up (using the cleanup.bat file) and recreated with the setup.bat file.

One more thing: if the MMC snap-in throws an exception, exit the MMC. I apologize for that bug; just close the MMC, open a fresh one, and continue. All changes are stored in the Repository transactionally (committed when saved), so keep that in mind before closing the MMC.

OK, now it is time to rock & roll with a simple example of a full round trip through designing, deploying and testing.

This is a simple example of an Echo service with the following contract:

```csharp
using System.ServiceModel;

[ServiceContract]
interface IService
{
    [OperationContract]
    string GetFullName(string firstName, string lastName);
}
```

I am going to show you all the steps to manage this service model, including testing it with a virtual client, the Microsoft WCF Test Client program.

I will assume that you have completed the installation as described in the chapter above. To simplify this example, the Repository has been preloaded with three simple Echo services: two versions of the Echo service and one service for version routing.

The example is divided into the following major steps (actions):

- Creating a Contract Model for our example, IService

- Creating the Echo Service(s); this step was already done during the installation process, when the services were imported into the Repository

- Creating an Application Model for our Echo Services, hosted on IIS/WAS

- Deploying the Application to the target server

- Testing

Contract Model for IService

As I mentioned earlier, the Repository is a Knowledge Base of Contracts, Services, Endpoints, etc. If this knowledge doesn't exist, we have to add it, either manually or by importing it from another source such as an assembly, a WSDL endpoint, etc. In our example (for simplicity), the following steps demonstrate importing the contract from an assembly.

Step 1 - Import Contract

Select the Contracts scope node, right-click, and choose the Import Contracts action:

The above user control is designed for importing a contract from an assembly or a URL endpoint. Check the FromAssembly box and click the Get button. You should see the above screen snippet in your snap-in.

Select the contract IService and then click the Import button. This action imports all the metadata for the selected contract into the Repository.
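The Import action belongs to the tool itself. As a rough illustration of the general idea only, the following sketch discovers contracts in an assembly with reflection. To stay runnable without a reference to System.ServiceModel, it matches attributes by type name (ServiceContractAttribute, OperationContractAttribute); the stand-in attributes and the IService copy in the hypothetical ScanDemo namespace exist only so the example has something to find.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

public static class ContractScanner
{
    // True when the member carries an attribute with the given type name (namespace ignored).
    static bool HasAttribute(MemberInfo member, string attributeName)
    {
        return member.GetCustomAttributes(false).Any(a => a.GetType().Name == attributeName);
    }

    // Maps each interface marked as a service contract to the names of its operations.
    public static Dictionary<string, List<string>> FindContracts(Assembly assembly)
    {
        var contracts = new Dictionary<string, List<string>>();
        foreach (Type type in assembly.GetTypes())
        {
            if (!type.IsInterface || !HasAttribute(type, "ServiceContractAttribute"))
                continue;
            contracts[type.Name] = type.GetMethods()
                .Where(m => HasAttribute(m, "OperationContractAttribute"))
                .Select(m => m.Name)
                .ToList();
        }
        return contracts;
    }
}

// Hypothetical stand-ins so the sketch runs without System.ServiceModel.
namespace ScanDemo
{
    [AttributeUsage(AttributeTargets.Interface)]
    public sealed class ServiceContractAttribute : Attribute { }

    [AttributeUsage(AttributeTargets.Method)]
    public sealed class OperationContractAttribute : Attribute { }

    [ServiceContract]
    public interface IService
    {
        [OperationContract]
        string GetFullName(string firstName, string lastName);
    }
}
```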

Now our Repository has more knowledge; you can check it in the Schemas, Messages and Operations scope nodes, but not yet in Endpoints. That's the next step.

Step 2 - New Endpoint

Select the Endpoints scope node, right-click, choose the Add New Endpoint action, and populate the user control as follows:

- Name: Echo

- Address: http://localhost/Test/abc/Echo/Echo.svc

- Binding: wsHttpContextNoneSecure

- Description: type some description

- Version: 1.0.0.0

- select XmlSerializer

- check XmlFormat

- select Contract IService

- select GetDocumentByKey

- select GetFullName

After the above steps, your snap-in should look like the following screen snippet:

Now, click the Export button. The following screen snippet will show up in your snap-in:

In this step we have an opportunity to preview what is going to be published from the Repository, such as the WSDL metadata. We can also see all the Generated Contract Types.

The next action is to provide the information for storing this metadata in the Repository under a unique key made of Name and Topic. Type the Name: Echo and Topic: Test, check ExportMetadata, and click the Save button. The result of this action is shown in the following screen snippet:
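The unique key described above can be pictured as a composite (Name, Topic) key. Below is a minimal in-memory sketch under that assumption. The real Repository is a SQL Server database reached through LocalRepositoryService; the types EndpointMetadata and MetadataStore and their members are hypothetical.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical shape of an exported endpoint record.
public sealed class EndpointMetadata
{
    public string Name { get; set; }
    public string Topic { get; set; }
    public string Address { get; set; }
    public string Wsdl { get; set; }
}

public sealed class MetadataStore
{
    // (Name, Topic) is the unique key; saving under an existing key overwrites the entry.
    private readonly Dictionary<(string Name, string Topic), EndpointMetadata> items =
        new Dictionary<(string Name, string Topic), EndpointMetadata>();

    public int Count { get { return items.Count; } }

    public void Save(EndpointMetadata metadata)
    {
        if (metadata == null)
            throw new ArgumentNullException("metadata");
        if (string.IsNullOrEmpty(metadata.Name) || string.IsNullOrEmpty(metadata.Topic))
            throw new ArgumentException("Name and Topic form the key and are required.");
        items[(metadata.Name, metadata.Topic)] = metadata;
    }

    // Returns the metadata a client would ask for, or null when nothing is stored under the key.
    public EndpointMetadata Find(string name, string topic)
    {
        EndpointMetadata metadata;
        return items.TryGetValue((name, topic), out metadata) ? metadata : null;
    }
}
```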

At this point, the Repository has its first metadata (Endpoint) to be published in the Contract-First manner; in other words, a client can ask the Repository for the metadata (WSDL). As you can see in the above picture, this capability is built into the snap-in, so we can be the first client (tester) of our new metadata. Click the Run button; svcutil handles the IService contract for this virtual client. This is a real connection to the Repository via its LocalRepositoryService service.

Of course, any client, for instance Visual Studio, can consume this metadata from the Repository. In our test, we will use a virtual client utility from the Microsoft SDK, the WCF Test Client; launch this program and add the service from the Repository as shown in the following picture:

After clicking the Run button, we should have a client for this contract. Note that there is a glitch in this client utility (.NetFx 3.5 version); just ignore it and continue.

At this moment our client is ready for a runtime test, but there is no physical deployment of our Test application with the Echo service(s). That's the next step, so let's continue with the metadata plumbing in the Application Model. I hope your model is set up the same way as described in the first section above.

Application Model for Echo Service

The goal of this step is to create an Application Model of the services, divided into domains based on the business needs. For our Echo service (at this moment we are using only the initial version, 1.0.0.0), we are going to create a business domain abc that will host our Echo service.

Add New Domain

Selecting the Domains scope node, right-clicking, and choosing the Add New Domain action brings up the following screen snippet:

Populate the user control properties: Name: abc, Version: 1.0.0.0 and a description (optional), and select the Echo service version 1.0.0.0.

After clicking the Finish button, we have a new domain in the Repository. As you will see later, the domain can be modified based on the requirements, a new version of it can be created, etc.

The next step is to create an Application, using the same approach as for the Domain. We need a logical Application to package our business domains and isolate them within the physical process.

Add New Application

The new Application requires some properties to be populated. In our example, they are HostName: Test, ApplicationName: Test, MachineName: localhost, Description (optional), Version: 1.0.0.0, and a check for IIS/WAS Hosting. Then we select all the domains for this application; in this example there is only one domain, abc.
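To make the relationships concrete, here is a hypothetical object model for the Application Model as this walkthrough describes it: an Application holds Domains, and a Domain holds versioned Services. The property names follow the dialog fields; the classes ServiceRef, DomainModel and ApplicationModel are illustrative only and are not the Repository schema.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public sealed class ServiceRef
{
    public string Name { get; set; }
    public Version Version { get; set; }
}

public sealed class DomainModel
{
    public string Name { get; set; }
    public Version Version { get; set; }
    public List<ServiceRef> Services { get; } = new List<ServiceRef>();
}

public sealed class ApplicationModel
{
    public string HostName { get; set; }
    public string ApplicationName { get; set; }
    public string MachineName { get; set; }
    public Version Version { get; set; }
    public bool IisHosting { get; set; }
    public List<DomainModel> Domains { get; } = new List<DomainModel>();

    // Lists every hosted service as "domain/service (version)".
    public IEnumerable<string> DescribeServices()
    {
        return Domains.SelectMany(d => d.Services.Select(s =>
            string.Format("{0}/{1} ({2})", d.Name, s.Name, s.Version)));
    }
}
```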

After clicking the Finish button, the new Application is stored in the Repository. We can come back later to modify it based on additional requirements, such as adding new Domain(s).

So far, we have one Contract model and one Application with the Echo Service model in the Repository. Now it's time to connect these models together; in other words, we need to assign a physical endpoint to the Application (specifically to the Echo service).

Assigning Endpoint for Service

Under the Echo (1.0.0.0) scope node, select the ServiceEndpoints node, right-click, and select the Add New Endpoint action. This user control pane lets you select the endpoints for this service. In our example, we can see a VirtualAddress (since we decided to use IIS/WAS hosting). It acts as a filter that the Repository uses to list the matching endpoints when you click the Name combo box. The following picture shows the result of that selection:
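The exact matching rule behind that filter isn't described here, so the following sketch assumes it is a case-insensitive prefix test of the endpoint address against the VirtualAddress. EndpointFilter and the name/address pairs are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class EndpointFilter
{
    // Returns the names of endpoints (name -> address pairs) whose address lies under the virtual address.
    public static List<string> UnderVirtualAddress(
        IEnumerable<KeyValuePair<string, string>> endpoints, string virtualAddress)
    {
        // A trailing slash keeps ".../Echo" from also matching ".../EchoRouter/...".
        string prefix = virtualAddress.EndsWith("/") ? virtualAddress : virtualAddress + "/";
        return endpoints
            .Where(e => e.Value.StartsWith(prefix, StringComparison.OrdinalIgnoreCase))
            .Select(e => e.Key)
            .ToList();
    }
}
```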

After clicking the Finish button, the config resource in the Repository is updated when the config scope node is selected.

To finish this process, we have to create a package of this Application for its deployment. Note that after the package is created, changes in the models do not affect it; in other words, if a model must be changed, the specific resource must be refreshed and the package recreated.

Create Package

For the Push deployment option, a deployment package must be created for the Application. The snap-in has a built-in option for the IIS/WAS (Dublin) target only.

When you select the Test 1.0.0.0 scope node and its CreatePackage action, the following user control shows up in the central pane. If a package for the Application has already been created and stored in the Repository, we can see its contents.

To create a package (or a fresh new one), click the Create button. We can see all the details about the package: resources, assemblies, and the structure of the web site for this application.

Now here comes the magic step: deploying to the physical target. We worked very hard to get to this point, so let's take the last step, installing the package from the Repository.

Deploy Package

Click the Install button, and the following dialog shows up:

You can see some targeting properties; don't change them. This article version doesn't support all features: we can deploy our example only to the localhost server and the Default Web Site. Just click the Deploy button, and our example is ready for a real test.

Testing Echo Application

As I mentioned earlier, the service is tested with the Microsoft WCF Test Client utility. In this step, we have already set up our client for invoking the GetFullName service operation. The following screen snippet shows the result of invoking this operation:

Changes in the Application

OK, let's continue with our simple example by adding a new version of the Echo Service to the Application. We will have two Echo services in the same domain without any change in the physical endpoint. This situation reflects a real requirement: serving old and new clients transparently. For this solution we have to add a special pre/post service known as a router service. Based on the message content, the router forwards the request/response to the appropriate target.
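This article does not show how the router reads the version from the message, so the sketch below assumes, purely for illustration, that the request carries a version attribute on its root element. It uses System.Xml.Linq to read that value and look up the target address; VersionRouter, the attribute name, and the default-version rule are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Xml.Linq;

public sealed class VersionRouter
{
    private readonly Dictionary<string, string> targets;   // version -> target address
    private readonly string defaultVersion;

    public VersionRouter(Dictionary<string, string> targets, string defaultVersion)
    {
        this.targets = targets;
        this.defaultVersion = defaultVersion;
    }

    // Reads the (hypothetical) "version" attribute of the message root and returns the address
    // to forward to; messages without the attribute are routed to the default version.
    public string SelectTarget(string messageXml)
    {
        XElement root = XElement.Parse(messageXml);
        string version = (string)root.Attribute("version") ?? defaultVersion;
        string address;
        if (!targets.TryGetValue(version, out address))
            throw new InvalidOperationException("No target registered for version " + version);
        return address;
    }
}
```

Routing unversioned messages to the old version is one way to keep existing clients working unchanged while new clients opt in.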

I described this scenario in detail in the service mediation example. First, we add two additional Echo services to the abc domain using the Modify Domain action:

After clicking the Finish button, the Repository records this change in the abc domain; see the following screen snippet of the abc scope node:

As you can see in the above picture, all the services have the same name. The topic of the router service is prefixed with the '@' character to indicate the router feature when creating a deployment package for IIS/WAS hosting.
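As a small illustration of that naming convention, the sketch below treats a service whose topic starts with '@' as a router and lists routers ahead of the other services, which matches the package structure described later, where the router is the first service. The helper RouterConvention, the tuple shape, and the output format are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class RouterConvention
{
    // A service whose topic starts with '@' is treated as a router.
    public static bool IsRouter(string topic)
    {
        return !string.IsNullOrEmpty(topic) && topic[0] == '@';
    }

    // Packaging order: routers first; OrderBy is stable, so other services keep their input order.
    public static List<string> PackageOrder(IEnumerable<(string Name, string Topic, Version Version)> services)
    {
        return services
            .OrderBy(s => IsRouter(s.Topic) ? 0 : 1)
            .Select(s => string.Format("{0} {1} [{2}]", s.Name, s.Version, s.Topic))
            .ToList();
    }
}
```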

Now we have to go to the Contract Model and add two new Endpoints for:

- the new Echo service (version 1.0.0.1). In this example we don't change the service contract; it is the same IService contract. You can accomplish this task in the same way as creating the Echo Endpoint; the only differences are the name and version of the Endpoint.

- the router service, using the IGenericContract service operation. This contract can be imported from the ESB.Contracts assembly. Note that this contract is untyped, so there is no WSDL metadata for it, and therefore it is not published by the Repository.

After this "Repository learning process" we can see three (3) Echo endpoints:

Once we have those endpoints in the Contract Model, we can use them in the Application Model. Basically, we need the following tooling in the ServiceEndpoints section:

- assigning the endpoint Echo to the Echo 1.0.0.0 service

- assigning the endpoint Echo1001 to the Echo 1.0.0.1 service

- assigning the endpoint EchoRouter to the Echo router service

Tooling endpoints in the ServiceEndpoints scope node is straightforward and well supported by the Contract Model. On the other hand, the ClientEndpoints sometimes require some manual tuning in the client section of the config resource.

Note that the endpoint names in ClientEndpoints must correspond to the EndpointName values of the SendActivity.
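A mismatch between these names is easy to miss, so here is a sketch of a check. It assumes the client endpoints are read from the standard system.serviceModel/client section of the config resource and that the SendActivity EndpointName values have already been collected into a list. The helper ClientEndpointCheck is hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Xml.Linq;

public static class ClientEndpointCheck
{
    // Returns the EndpointName values that have no <endpoint name="..."> in the client section.
    public static List<string> FindMissing(string configXml, IEnumerable<string> sendActivityEndpointNames)
    {
        XDocument config = XDocument.Parse(configXml);
        var clientNames = new HashSet<string>(
            config.Descendants("system.serviceModel")
                  .Elements("client")
                  .Elements("endpoint")
                  .Select(e => (string)e.Attribute("name"))
                  .Where(n => n != null),
            StringComparer.Ordinal);
        return sendActivityEndpointNames.Where(n => !clientNames.Contains(n)).Distinct().ToList();
    }
}
```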

OK, now we have made all the required changes in the Contract and Application models for our Echo versioning, so we have to create a new deployment package:

As you can see, the above picture shows the new structure of the Application Package. The first service is a router, a message forwarder for versions 1.0.0.0 and 1.0.0.1, located within the same (Test) web site.

Clicking the Install button puts this version on the target server, ready for testing. Because we didn't change the service contract, we can use our already created client.

The result of consuming the Echo version 1.0.0.0 service:

The result of consuming the Echo version 1.0.0.1 service:

That's all for this example. I hope you now have a picture of how Manageable Services are tooled in the Repository. The tooling process is only as smart as the tool's capabilities. This article's solution has some limitations; of course, the tool is open to adding more tooling actions, validations, etc. incrementally, based on needs.

The design and implementation of the Manageable Services Tool are described in detail in my article Contract Model for Manageable Services. Before I describe some interesting parts of the implementation, I would like to give credit to the following 3rd party software that helped me save implementation time and allowed me to focus more on the metadata models:

This project used many technologies such as MMC 3.0, LINQ to SQL, LINQ to XML, .NetFx 3.5, etc. Of course, without Reflector it would have been very hard to accomplish this task. It is hard to show how all the pieces have been designed and implemented over the past year, in fact since WorkflowServices were introduced in .NetFx 3.5.

However, here are a few code snippets:

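```csharp
using System;
using System.IO;
using System.IO.Packaging;   // WindowsBase.dll in .NET 3.5

// Members of a static helper class (class declaration not shown).

// Writes every item of a service into the package as a separate, compressed XML part.
public static void AddServiceToPackage(Package package, ServicePackage service)
{
    if (package == null)
        throw new ArgumentNullException("package");
    if (service == null)
        throw new ArgumentNullException("service");

    foreach (PackageItem item in service)
    {
        // Part name: service path + item name (or the service name when empty) + extension.
        string filename = string.Concat(service.Path,
            string.IsNullOrEmpty(item.Name) ? service.Name : item.Name, ".", item.Extension);
        Uri partUri = PackUriHelper.CreatePartUri(new Uri(filename, UriKind.Relative));
        PackagePart packagePart = package.CreatePart(partUri,
            System.Net.Mime.MediaTypeNames.Text.Xml, CompressionOption.Maximum);

        // Items without a body produce an empty part.
        if (!string.IsNullOrEmpty(item.Body))
        {
            // Disposing the writer flushes it and closes the part stream.
            using (StreamWriter sw = new StreamWriter(packagePart.GetStream()))
            {
                sw.Write(item.Body);
            }
        }
    }
}

// Opens the package on the given stream, or creates a new one in memory when no stream is given.
public static Package OpenOrCreatePackage(Stream stream)
{
    if (stream == null)
        stream = new MemoryStream();
    return Package.Open(stream, FileMode.OpenOrCreate, FileAccess.ReadWrite);
}
```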

where the ServicePackage and its items are declared as shown in the following code snippet:

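```csharp
using System.Collections.Generic;

// One resource of a service (config, xoml, rules, xslt, svc, ...).
public class PackageItem
{
    public string Name { get; set; }
    public string Extension { get; set; }
    public string Body { get; set; }
}

// All resources of one service, stored under Path in the package.
public class ServicePackage : List<PackageItem>
{
    public string Name { get; set; }
    public string Path { get; set; }
}
```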

The above code snippet is part of the code for creating a deployment package and storing it in the Repository. The metadata from the Repository is collected in a ServicePackage for a specific path, then zipped and stored in the Repository Application Model for later installation.
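For experimenting outside the tool, the same "collect items, then zip" step can be approximated with System.IO.Compression.ZipArchive instead of System.IO.Packaging. This is a swapped-in technique, not the article's implementation: a plain zip has no [Content_Types].xml or part content types. The entry names follow the same naming rule as AddServiceToPackage; ZipPackageSketch and its tuple-based items (mirroring PackageItem) are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.IO.Compression;

public static class ZipPackageSketch
{
    // Builds an in-memory zip; entry name = path + (item name, or service name when empty) + "." + extension.
    public static byte[] Zip(string servicePath, string serviceName,
        IEnumerable<(string Name, string Extension, string Body)> items)
    {
        using (var buffer = new MemoryStream())
        {
            using (var zip = new ZipArchive(buffer, ZipArchiveMode.Create, leaveOpen: true))
            {
                foreach (var item in items)
                {
                    string entryName = string.Concat(servicePath,
                        string.IsNullOrEmpty(item.Name) ? serviceName : item.Name, ".", item.Extension);
                    ZipArchiveEntry entry = zip.CreateEntry(entryName, CompressionLevel.Optimal);
                    if (!string.IsNullOrEmpty(item.Body))
                    {
                        using (var writer = new StreamWriter(entry.Open()))
                            writer.Write(item.Body);
                    }
                }
            }   // disposing the archive writes the central directory into the buffer
            return buffer.ToArray();
        }
    }

    // Reads every entry back as text, keyed by entry name.
    public static Dictionary<string, string> Unzip(byte[] data)
    {
        var result = new Dictionary<string, string>();
        using (var zip = new ZipArchive(new MemoryStream(data), ZipArchiveMode.Read))
        {
            foreach (ZipArchiveEntry entry in zip.Entries)
                using (var reader = new StreamReader(entry.Open()))
                    result[entry.FullName] = reader.ReadToEnd();
        }
        return result;
    }
}
```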

Another code snippet shows part of the ApplicationVersionNode scope node, which loads its child nodes from the Repository using LINQ to SQL.

```csharp
using System;
using System.Linq;
using System.Transactions;

// Member of ApplicationVersionNode: rebuilds the child nodes from the Repository.
internal void Load()
{
    this.Children.Clear();
    RepositoryDataContext repository = ((LocalRepositorySnapIn)this.SnapIn).Repository;
    Guid applicationId = new Guid(this.ApplicationId);

    // IIS/WAS-hosted applications get a node for their config resource.
    var application = repository.Applications.FirstOrDefault(a => a.id == applicationId);
    if (application != null && application.iisHosting)
    {
        this.Children.Add(new ApplicationConfigNode(applicationId));
    }

    using (TransactionScope tx = new TransactionScope())
    {
        // Domains of this application, in priority order.
        var domains = from d in repository.GroupOfDomains
                      where d.applicationId == applicationId
                      orderby d.priority
                      select d;

        foreach (var domain in domains)
        {
            // Skip links to domains that no longer exist.
            var query = repository.AppDomains.FirstOrDefault(e => e.id == domain.domainId);
            if (query != null)
            {
                DomainVersionNode node = new DomainVersionNode(domain.domainId.ToString());
                node.DisplayName = string.Format("{0} ({1})", query.name, query.version);
                this.Children.Add(node);
            }
        }
        tx.Complete();
    }
}
```
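To see the query logic of Load() without a database, here is a LINQ to Objects sketch over in-memory lists that follows the same steps: take the domain links of one application, order them by priority, look up each domain, skip missing ones, and format "name (version)". The row classes DomainLink and DomainRow are hypothetical stand-ins for the GroupOfDomains and AppDomains entities.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-ins for the GroupOfDomains and AppDomains rows.
public sealed class DomainLink
{
    public Guid ApplicationId { get; set; }
    public Guid DomainId { get; set; }
    public int Priority { get; set; }
}

public sealed class DomainRow
{
    public Guid Id { get; set; }
    public string Name { get; set; }
    public string Version { get; set; }
}

public static class DomainNodes
{
    // Display names of the application's domains, in priority order, formatted as in Load().
    public static List<string> DisplayNames(Guid applicationId,
        IEnumerable<DomainLink> links, IEnumerable<DomainRow> domains)
    {
        var result = new List<string>();
        foreach (DomainLink link in links.Where(l => l.ApplicationId == applicationId)
                                          .OrderBy(l => l.Priority))
        {
            DomainRow domain = domains.FirstOrDefault(d => d.Id == link.DomainId);
            if (domain != null)   // links to missing domains are skipped
                result.Add(string.Format("{0} ({1})", domain.Name, domain.Version));
        }
        return result;
    }
}
```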

Complete source code of the Manageable Services is included in this article.

This article is the last part of the project that I started with VirtualService for ESB and followed up with Contract Model for Manageable Services a year ago. The Windows Azure Platform had not been introduced to the public at that time, so the first part of this project was written as a Virtual Service for Enterprise Service Bus in the concept of a "distributed BizTalk". A little after the first part of the project was published, I adjusted my vision of logical connectivity from the ESB to Manageable Services, which allow me to manage and mediate a business process behind the endpoint. I introduced two models, the Contract and Application Models, and the Contract Model for Manageable Services article described this model and its tooling support in detail.

Managing contracts in a virtual fashion, without their physical endpoints, has been a key part of this project, and I think it was a good decision at the time, since it conceptually matches the new version of the Managed Services Engine by Microsoft, released two months ago on CodePlex.

A logically centralized Service Model (Contract + Application) in the Repository enables us to manage services across multiple business models (enterprise applications) while they are physically decentralized for runtime projecting. With the upcoming Windows Azure Platform, the Manageable Services face a new challenge in the Cloud, where they can be part of the .Net Service Bus party. In my opinion, this is a big step from the ESB to a Cloud built-in infrastructure with Service Bus capability.

The second vision of "What next" is a big challenge for the Repository. The Repository represents a Knowledge Base of Connectivity and Services, where this knowledge grows during the learning process (tooling) based on needs. By pushing the Repository to the Cloud and connecting it to the .Net Service Bus, we can also think about exchanging metadata not only at the Endpoint level (MEX), but also at the Service Model level or higher.

To continue this vision, there is a big challenge in metadata driven Manageable Services stored centrally in the Repository. As I mentioned earlier, the Repository learns through a manual process, using tools at design time. Adding the capability to tune our models in the Repository at runtime will open a completely new challenge in event-driven architecture, where a logical model can be managed by the services themselves.

OK, I am going to stop dreaming about the Cloud and come back down to earth for a short "What next". The answer is the upcoming Microsoft technologies based on WCF/WF 4.0 and the xaml stack; that is what comes next for the Manageable Services. This will allow a Manageable Service to target anywhere, including the Cloud. Of course, we have to wait for the release (or beta) version of .NetFx 4.0.

Another direction for Manageable Services is incrementally developing more tooling features such as an XsltMapper Designer, XsltProbe, Model Validation, a Service Simulator and Animator, Management for Assemblies, RepositoryAdmin, etc.

OK, as you can see, there are lots of challenges in this area for Manageable Services. Also, keep in mind that the Manageable Services project described in this article has been implemented on the current .NetFx 3.5 version with a focus on the upcoming model/metadata driven architecture, and it is not a production version.

In conclusion, this article described tooling support for Manageable Services that are logically centralized in the Repository and physically decentralized to the target server for projecting in the application domain. If you have been with me so far, you should now have a good understanding of service-enabled applications driven by metadata. I hope you enjoyed it.

References:

VirtualService for ESB

Contract Model for Manageable Services