This project started when I went looking for a quick way to put source code on my website so that guests could easily browse my projects. Time is valuable, so I don't always like downloading a zipped archive and then extracting it just to look at the code - I'd rather have a space online.

(Note: When installing this application yourself, publish it as a web application and then update the web.config so that the appSetting "root" points to the root directory of your source code. The web application must have permission to read the specified folder.)

<appSettings><add key="root" value="c:\dev\"/></appSettings>

It was then I realized this was a perfect candidate for a mini-project. It demonstrates the flexibility of having a solid architecture, provides plenty of opportunity for refactoring, and illustrates some basic programming paradigms, all while solving the problem I had of showing source code!

My initial requirement was that I would be able to throw "Iteration 1" together in a few hours. I spend enough time coding as it is and don't need a runaway project taking any time or focus away from my main goals. So, the requirements were quite simple:

- Show a tree view to browse down to various nodes, directories or source files

- Show the source with some sort of highlighting

- Don't show everything, just the pertinent stuff

Not bad, so where to start?

First, I wanted to create a flexible model. My thought for iteration 1 was to parse the file system and show nodes, but thinking ahead, I may eventually want to link to third-party repositories like SVN. Therefore, I decided to interface my models.

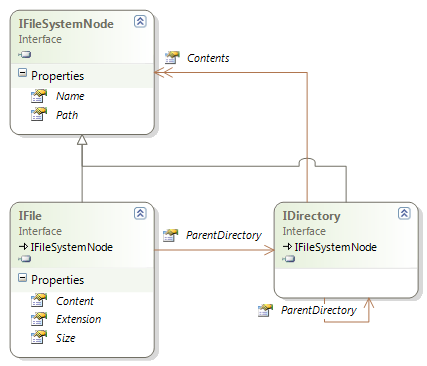

The most generic part of the structure is the IFileSystemNode, which basically contains a name and a path. This can be further resolved to an IDirectory, which contains a collection of other IFileSystemNode instances, or an IFile, which is qualified by an extension (maybe I should change that to type) and possibly has actual content to render.
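As a rough illustration, the three interfaces might be declared along these lines. The member names Name, Path, Contents, and Content match the ones used by the data access code later in this post; the Extension property name and the exact property types are assumptions, not the project's actual source.

```csharp
using System.Collections.Generic;

// Most generic node: just a name and a path.
public interface IFileSystemNode
{
    string Name { get; set; }
    string Path { get; set; }
}

// A directory holds other nodes (directories or files).
// IList<T> is an assumption; the post only shows Contents.Add(...).
public interface IDirectory : IFileSystemNode
{
    IList<IFileSystemNode> Contents { get; }
}

// A file is qualified by its extension and may carry content to render.
// "Extension" is an assumed property name.
public interface IFile : IFileSystemNode
{
    string Extension { get; set; }
    byte[] Content { get; set; }
}
```

Because both IDirectory and IFile derive from IFileSystemNode, a tree control can walk a mixed collection without caring where the nodes came from.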

Next step was implementation of these models. I created a base abstract class for the node and then created a DirectoryModel and a FileModel.
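A minimal sketch of how the base class and the two models could fit together is shown here. DirectoryModel and FileModel are the names used in this post; FileSystemNodeBase is a hypothetical name for the abstract base, and placing ParentDirectory on the base class is an assumption based on both models setting it in the data access code.

```csharp
using System.Collections.Generic;

public interface IFileSystemNode { string Name { get; set; } string Path { get; set; } }
public interface IDirectory : IFileSystemNode { IList<IFileSystemNode> Contents { get; } }
public interface IFile : IFileSystemNode { string Extension { get; set; } byte[] Content { get; set; } }

// Hypothetical base class name: shared state for every node.
public abstract class FileSystemNodeBase : IFileSystemNode
{
    public string Name { get; set; }
    public string Path { get; set; }

    // Assumed to live on the base, since directories and files both have a parent.
    public IDirectory ParentDirectory { get; set; }
}

public class DirectoryModel : FileSystemNodeBase, IDirectory
{
    private readonly List<IFileSystemNode> _contents = new List<IFileSystemNode>();

    public IList<IFileSystemNode> Contents
    {
        get { return _contents; }
    }
}

public class FileModel : FileSystemNodeBase, IFile
{
    public string Extension { get; set; }
    public byte[] Content { get; set; }
}
```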

The point here is that I could have an implementation that holds a Uri and exposes path as a URL, etc. In keeping with agile concepts, I'm not going to think too far ahead and go with what I have for now.

Next is the data access layer. First step was a generic IDataAccess that simply allows a "Load" of an entity:

    namespace Interface.DataAccess
    {
        public interface IDataAccess<T> where T : IFileSystemNode
        {
            T Load(string path);
        }
    }

Because I don't need to get the contents of a file until they are requested, I created an IFileDataAccess that specifically takes a reference to a file object and then loads the contents.
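To make the split between "load the node" and "load the contents" concrete, here is one way it could look. IFileDataAccess, Load, and LoadContents are named in this post; having IFileDataAccess extend IDataAccess of FileModel, and the trimmed-down FileModel shown here, are assumptions made to keep the sketch self-contained.

```csharp
using System;
using System.IO;

public interface IFileSystemNode { string Name { get; set; } string Path { get; set; } }

// Trimmed-down model for this sketch only.
public class FileModel : IFileSystemNode
{
    public string Name { get; set; }
    public string Path { get; set; }
    public string Extension { get; set; }
    public byte[] Content { get; set; }
}

public interface IDataAccess<T> where T : IFileSystemNode
{
    T Load(string path);
}

// Assumption: the file-specific interface adds LoadContents on top of Load.
public interface IFileDataAccess : IDataAccess<FileModel>
{
    void LoadContents(FileModel file);
}

public class FileDataAccess : IFileDataAccess
{
    // Cheap: only name and extension, no disk read of the body.
    public FileModel Load(string path)
    {
        if (string.IsNullOrEmpty(path))
        {
            throw new ArgumentNullException("path");
        }

        return new FileModel
        {
            Path = path,
            Name = System.IO.Path.GetFileName(path),
            Extension = System.IO.Path.GetExtension(path)
        };
    }

    // Expensive: pulls in the bytes, and only when somebody asks.
    public void LoadContents(FileModel file)
    {
        if (file == null)
        {
            throw new ArgumentNullException("file");
        }

        if (File.Exists(file.Path))
        {
            file.Content = File.ReadAllBytes(file.Path);
        }
    }
}
```

With this shape, building the tree never touches file bodies; the bytes are read only when LoadContents is called for a specific file.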

The implementation is relatively straightforward. The DirectoryDataAccess recursively iterates through the structure, and either builds a collection for each directory or calls out to the file data access to load the file contents:

    public DirectoryModel Load(string path)
    {
        if (string.IsNullOrEmpty(path))
        {
            throw new ArgumentNullException("path");
        }

        DirectoryModel retVal = ModelFactory.GetInstance<DirectoryModel>();

        if (Directory.Exists(path))
        {
            retVal.Path = path;
            DirectoryInfo dir = new DirectoryInfo(path);
            retVal.Name = dir.Name;

            // Recurse into each subdirectory and attach it to this node.
            foreach (DirectoryInfo subDir in dir.GetDirectories())
            {
                DirectoryModel subDirectory = Load(subDir.FullName);
                subDirectory.ParentDirectory = retVal;
                retVal.Contents.Add(subDirectory);
            }

            // Load each file through the file data access and attach it as well.
            foreach (FileInfo file in dir.GetFiles())
            {
                FileModel fileModel = _fileAccess.Load(file.FullName);
                fileModel.ParentDirectory = retVal;
                retVal.Contents.Add(fileModel);
            }
        }

        return retVal;
    }

Here is my first note to self ... instead of recursing, I could easily just load the names for the top level directory items and set an "isLoaded" flag. Then, only if the user drills down to a lower level do I need to start recursing there. That will be a refactoring we do later ... right now, I suck the whole thing into memory at once, but that obviously won't scale to a large set of projects or make sense going over the network to a source repository. We'll get there!
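For the curious, the lazy-loading idea could be sketched roughly as follows. This is not code from the project: LazyDirectoryNode is a hypothetical class, it only tracks subdirectories to stay short, and IsLoaded plays the role of the "isLoaded" flag mentioned above.

```csharp
using System.Collections.Generic;
using System.IO;

// Hypothetical node that reads its children from disk only on first access.
public class LazyDirectoryNode
{
    private readonly List<LazyDirectoryNode> _children = new List<LazyDirectoryNode>();

    public string Name { get; private set; }
    public string Path { get; private set; }
    public bool IsLoaded { get; private set; }

    public LazyDirectoryNode(string path)
    {
        Path = path;
        Name = new DirectoryInfo(path).Name;
    }

    public IList<LazyDirectoryNode> Children
    {
        get
        {
            EnsureLoaded();
            return _children;
        }
    }

    // Reads one level only; grandchildren stay untouched until requested.
    private void EnsureLoaded()
    {
        if (IsLoaded)
        {
            return;
        }

        if (Directory.Exists(Path))
        {
            foreach (string subDir in Directory.GetDirectories(Path))
            {
                _children.Add(new LazyDirectoryNode(subDir));
            }
        }

        IsLoaded = true;
    }
}
```

Constructing the root node costs almost nothing; each level is read from disk the first time its Children collection is touched.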

The FileDataAccess pulls out the filename and extension information. It implements the "LoadContents" by pulling in all of the bytes for the file:

    ...
    if (File.Exists(file.Path))
    {
        file.Content = File.ReadAllBytes(file.Path);
    }
    ...

I like to have a service layer on top of the data access. This layer can coordinate multiple data access handlers (e.g., in the future when I add an SVN implementation, the service layer can instantiate a file system and an SVN data access handler and then aggregate the results to pass up to the presentation layer). In this project, the service layer mostly acts as a pass-through to the data access. However, it also contains the list of valid extensions that we will show the end user, and therefore manages the call to "LoadContents" based on whether or not the extension is eligible. This prevents us from trying to load DLLs, for example.
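The extension check described above might look something like this sketch. FileService, InMemoryFileDataAccess, and the particular list of extensions are all hypothetical and exist only to make the example runnable; the real service and its list may differ.

```csharp
using System;
using System.Collections.Generic;

public class FileModel
{
    public string Name { get; set; }
    public string Path { get; set; }
    public string Extension { get; set; }
    public byte[] Content { get; set; }
}

public interface IFileDataAccess
{
    FileModel Load(string path);
    void LoadContents(FileModel file);
}

// Hypothetical service: passes through to data access, but gates LoadContents.
public class FileService
{
    // Assumed list; the post does not say which extensions are allowed.
    private static readonly HashSet<string> ValidExtensions =
        new HashSet<string>(StringComparer.OrdinalIgnoreCase) { ".cs", ".aspx", ".js", ".css", ".config" };

    private readonly IFileDataAccess _dataAccess;

    public FileService(IFileDataAccess dataAccess)
    {
        if (dataAccess == null) throw new ArgumentNullException("dataAccess");
        _dataAccess = dataAccess;
    }

    public FileModel Load(string path)
    {
        FileModel file = _dataAccess.Load(path);

        // Only eligible source files get their bytes loaded; DLLs and the like are skipped.
        if (file.Extension != null && ValidExtensions.Contains(file.Extension))
        {
            _dataAccess.LoadContents(file);
        }

        return file;
    }
}

// Stand-in data access backed by a dictionary, used only to exercise the service.
public class InMemoryFileDataAccess : IFileDataAccess
{
    private readonly Dictionary<string, byte[]> _files;

    public InMemoryFileDataAccess(Dictionary<string, byte[]> files)
    {
        _files = files;
    }

    public FileModel Load(string path)
    {
        return new FileModel
        {
            Path = path,
            Name = System.IO.Path.GetFileName(path),
            Extension = System.IO.Path.GetExtension(path)
        };
    }

    public void LoadContents(FileModel file)
    {
        byte[] bytes;
        if (_files.TryGetValue(file.Path, out bytes))
        {
            file.Content = bytes;
        }
    }
}
```

Keeping the list in the service means the data access stays a dumb loader, and a different front end could apply a different filter without touching it.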

Now that we have that all wrapped up, let's go to the controls. I could have tapped into the extensive control library that AJAX provides, but I thought it would be fun to go ahead and roll my own controls. I did decide to use jQuery because it makes manipulating the client so easy.

First, the control architecture. I want to have a "tree" that has branches (directories or files) and then a "leaf" that is the display of the code. This was fairly easy to visualize and map out.

I decided to make the branches a server control based on a Panel. The branch can then control the visibility of the child branches. The branch contains an IFileSystemNode and generates an anchor tag for that node. Then, depending on whether the node is an IDirectory or an IFile, it generates a click event to expand the children or load the code contents. I decided to let the branches recursively load themselves. For this phase, the entire tree structure is output and the second-level branches are collapsed by default. Again, this is the first iteration: the next refactoring for the UI will be to lazy load the child nodes. This won't scale to a ton of projects "as is."

You'll find some interesting code in the JavaScript for the branches. For example, I found that initially hiding the DIVs somehow didn't synchronize with the internal DOM state and forced users to click twice in order to expand a node. To solve this, I created an artificial attribute that is attached to the nodes and added a redundant "hide" call to get the DOM in sync:

    if (!$(child).attr('init')) {
        $(child).hide();
        $(child).attr('init', 'true');
    }

The rest is just a simple toggle of the child visibility and its siblings. The entire architecture ends up looking like this:

The branch is a server control, and the tree is a container for the related branches. The branches expose an event that other client-side controls can listen to when the user clicks on a node to view. This is where the "leaf" comes in. It is basically a title and a DIV, and it registers to listen for the click event. When that happens, it makes a callback to grab the code contents and then renders them in the DIV.

For syntax highlighting, I used Alex Gorbatchev's Syntax Highlighter. It does a great job and is what I use in my C#er : IMage blog. Once the div is rendered, with a little help in the Leaf to figure out the right brush based on the file extension (this is presentation logic, not service), the Syntax object is called and it does its job with highlighting the code.
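The brush lookup in the Leaf could be as simple as a dictionary keyed by extension. The sketch below is an assumption about how that might be done rather than the Leaf's actual code: BrushResolver and the specific extension mappings are hypothetical, while the brush aliases (csharp, js, css, xml, sql, plain) and the "brush: alias" class convention on a pre element come from SyntaxHighlighter itself.

```csharp
using System;
using System.Collections.Generic;
using System.Net;

// Hypothetical presentation-layer helper: maps a file extension to a brush alias.
public static class BrushResolver
{
    // Assumed mappings; adjust to whatever file types the browser exposes.
    private static readonly Dictionary<string, string> Brushes =
        new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase)
        {
            { ".cs", "csharp" },
            { ".js", "js" },
            { ".css", "css" },
            { ".xml", "xml" },
            { ".config", "xml" },
            { ".aspx", "xml" },
            { ".sql", "sql" }
        };

    public static string GetBrush(string extension)
    {
        string brush;
        if (extension != null && Brushes.TryGetValue(extension, out brush))
        {
            return brush;
        }

        // Fall back to plain text for anything unrecognized.
        return "plain";
    }

    // Wraps HTML-encoded source in the markup SyntaxHighlighter looks for.
    public static string WrapSource(string extension, string source)
    {
        return "<pre class=\"brush: " + GetBrush(extension) + "\">"
            + WebUtility.HtmlEncode(source)
            + "</pre>";
    }
}
```

Encoding the source before it goes into the pre element matters here, since the code being displayed will often contain angle brackets of its own.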

I used the Callback function in the Leaf "as intended": the code is generated with a call to GetCallbackEventReference, which emits the appropriate JavaScript. In order to prevent users from aggressively clicking nodes while they are still loading, we set a global flag and flash an error until the current leaf is fully loaded. It ends up looking like this:

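    function leafCallback(path, name) {
        // Ignore clicks while a previous request is still in flight.
        if (window.loading) {
            alert('An existing request is currently loading. ' +
                  'Please wait until the code is loaded before clicking again.');
            return;
        }
        window.loading = true;
        $("#_codeName").html(name);
        $("#_divCode").html("Loading...");
        var arg = path + '|' + name;
        // The server-generated callback call (from GetCallbackEventReference) is emitted here.
        ;
    }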

We pass down the path and the name so we can find the correct node on the server and then the server sends back the bytes to render. The callback function simply injects the source and then calls the syntax highlighter:

    function leafCallbackComplete(args, ctx) {
        $("#_divCode").html(args);
        window.loading = false;
        window.scrollTo(0, 0);
        setTimeout(function () { SyntaxHighlighter.highlight(); }, 1);
    }

I decided to build a static cache and retain one copy of the entire structure in memory. This is refreshed every hour so it will pull in new source as I add new projects. The Cache simply uses the ASP.NET Cache object, and either pulls the tree from cache, or invokes the service to load the tree recursively. Obviously, this strategy will need to change when I refactor to support lazy loading:

    namespace CodeBrowser.Cache
    {
        public static class TreeCache
        {
            private const string CACHE_KEY = "Master.Node.Key";

            private static readonly TimeSpan _expiration = new TimeSpan(0, 1, 0, 0);

            public static IDirectory GetMasterNode()
            {
                IDirectory retVal = (IDirectory) HttpContext.Current.Cache.Get(CACHE_KEY);

                if (retVal == null)
                {
                    IService<DirectoryModel> service = ServiceFactory.GetService<DirectoryModel>();
                    retVal = service.Load(ConfigurationManager.AppSettings["root"]);
                    HttpContext.Current.Cache.Insert(
                        CACHE_KEY, retVal, null, DateTime.Now.Add(_expiration), TimeSpan.Zero);
                }

                return retVal;
            }
        }
    }

Note that the main web project only deals with interfaces and factories (and concrete models), not actual implementations of services (it doesn't even know about data access behind a service). This allows us plenty of flexibility to extend down the road.

The only other thing I did was create a base page which registers all of the includes and CSS for the project. This keeps the default page nice and neat, as all of the setup is done in the background. I used a loose version of my MVC for WebForms architecture, but didn't scope out interfaces and control/controller factories. This is another place where I can refactor down the road and abstract these a bit more. For now, the controllers simply inject the controls and use the cache to pull in the file system structure.

You can view a live copy of the browser by clicking here (and use it to browse the code). To download the source in a zip file, click here. I hope you enjoy and learn from this project and I look forward to doing some refactoring and extending it in future posts.
