A Time of Change

In 2002, the US Department of Education began the "No Child Left Behind" (NCLB) program. Cayen Systems, which already counted a number of school districts as clients, was contacted by Milwaukee Public Schools and several other districts about enhancing its existing web application for after-school data collection and reporting, "APlus". The districts wanted it to help manage the NCLB-mandated Supplemental Education Services (SES) programs they were required to run. Late in 2003, Cayen Systems launched "APlus SST" (Supplemental Services Tracker), its web-based solution for SES program management.

The product was a success. In the first year, 39 school districts were using it; by early 2009, 200 school districts were using APlus SST to manage their SES programs. Cayen Systems took advantage of their broadened client base by creating more products for the APlus platform that users in other parts of a district or organization would find useful. They added modules for Choice Placement, GEAR UP, SES Providers, Federal Inventory, and Title I. They built an add-on for biometric attendance scanning. They also built a separate web-based application for single-site non-profit management. In addition, they began work on a web-based day school management system, "Cayen SchoolAdmin".

The rapid growth, while great for sales, was beginning to reveal some weaknesses in the company's software development life cycle. Throughout 2009, these weaknesses gradually became more apparent and serious. The breaking point came in late August 2009; the day after the APlus 6.0 release, Cayen Systems received over 350 support calls. It was clear that a new approach would be necessary to meet the demands of the growing client base and the increasing complexity of the software systems.

Challenges facing the team:

- Releases were often late, averaging 4.5 days past the target delivery date.

- Releases were large. A typical release cycle was 8-12 weeks.

- Work estimates were grossly inaccurate. On average, a task estimated at 10 hours was taking the team 21.4 hours to complete.

- Quality was degrading. The standing bug count for the main applications was over 220, and climbed with each release.

- Roles and responsibilities were heavily "siloed". Work on some tasks stopped completely whenever certain team members were out of the office, because they were "the only one who could do it". This created regular impediments.

- Team members, particularly the QA Engineers, were constantly being pulled to fight fires.

- Developers were rarely in direct contact with clients or Client Services team members to hear "the voice of the customer". Talking to people on other teams directly was generally discouraged.

- System integration was laborious, slow and error-prone. Testing and deploying a release typically took at least a week.

The Journey

Introspection

The team started tackling the challenges by making changes to internal processes. They spent time modifying their ERP system, attempting to make it easier for everyone in the company to understand what each team was doing with a particular work item and what stage of the development life cycle it was at. The individual departments also began meeting more frequently; even cross-team meetings occurred on occasion.

Most stakeholders (internal and external) believed that product quality was in need of the most improvement. Internal testing processes were revised and updated to include additional points of review; two team members would now test a work item instead of just one. Client Services team members were asked to participate in the version/integration testing phase of each release cycle in an effort to get "the client's perspective" on upcoming changes.

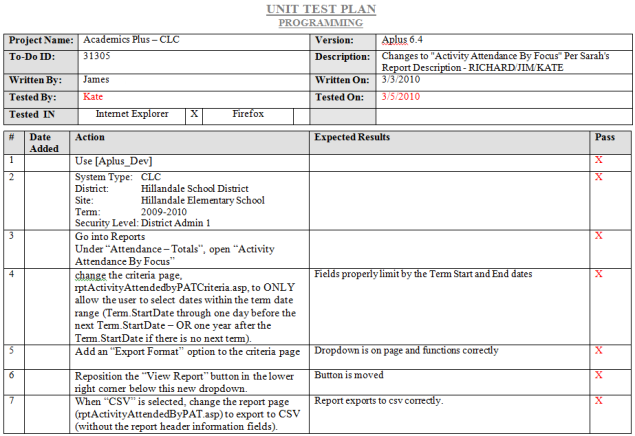

The team also started writing "test plans" (based on a template from TMap) for each deliverable work item. Below is a sample of one of the test plans:

While these efforts were directed at known problem areas, coming up with a customized solution to the problems internally just wasn't working. All the extra process steps, meetings and documentation resulted in longer delivery times. Quality and communication weren't really improving. The team wasn't seeing much progress on overcoming their challenges.

A few team members suggested looking into an established, standardized development life cycle model that other software companies were using successfully. After researching options, the Scrum model appeared to be a good fit. Fortunately, the team had learned from their mistakes - they knew they needed help. Management decided to hire an experienced coach to help lead the effort, and found a great match with Redpoint Technologies.

Our coach was onsite 2-3 days a week with the teams through their first few Sprints, helping them understand and implement the basic principles and practices of Scrum and Agile. He spent time with management to understand their goals and objectives, and delivered recommendations based on his own experience and his observations of current practices. He also helped the team establish a rhythm to their work by introducing the standard Scrum "ceremony" meetings: Daily Scrums, Planning Meetings and Sprint Retrospectives.

Equipped with new knowledge, proven techniques, and a plan of action based on expert analysis, the team was ready to take on their challenges.

A Firm Foundation

The team was feeling the most pain from their version build and integration process, and agreed that it should be the first challenge they tackled. Until then, the process was to code and test in an isolated development environment for the duration of the release cycle. A week before the target release date, the team would set up 6-8 test sites, one for each of the major products, and deploy their work to this group of sites. The list of modified source files scheduled for release was retrieved by hand from the ERP system. The development manager usually performed this task, copying the names of the files from the "Modified Objects" list of each work item marked with the current release number and pasting them into an Excel spreadsheet. The files were then manually checked out of the source control system and manually copied to each test site.

The many manual steps made the process highly error-prone and very time-consuming. It generally took about 2 days to build the list of modified files, check them out of source control, rebuild the testing environment, and deploy the changes. It then took another day to work through and correct the integration issues accumulated over 8-10 weeks' worth of work. Finally, another 2-3 days were needed to complete all of the step-by-step functional testing documents. Depending on how many issues were found, some releases required the team to repeat this process 2-3 times.

The team decided to adopt a simple continuous integration testing model instead. A couple of the developers got together and created a small utility that connected the ERP and source control systems. With just a few pieces of user input, all of the modified source files for a particular version of any product could be quickly gathered and retrieved for deployment.
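The utility itself isn't described in detail. As a rough sketch of the idea, assuming each ERP work item carries a product, a release number and its "Modified Objects" list (the `WorkItem` class and `collect_release_files` function below are hypothetical, not Cayen's actual code), gathering the file list for a release amounts to filtering work items and de-duplicating their files:

```python
from dataclasses import dataclass, field


@dataclass
class WorkItem:
    """Hypothetical stand-in for an ERP work item record."""
    item_id: int
    product: str
    release: str
    modified_objects: list[str] = field(default_factory=list)


def collect_release_files(items, product, release):
    """Return the de-duplicated, sorted list of source files modified
    by every work item tagged with the given product and release."""
    files = set()
    for item in items:
        if item.product == product and item.release == release:
            files.update(item.modified_objects)
    return sorted(files)


if __name__ == "__main__":
    items = [
        WorkItem(101, "APlus", "6.1", ["rptSESEvaluationCriteria.asp", "inc/db.asp"]),
        WorkItem(102, "APlus", "6.1", ["inc/db.asp", "students.asp"]),
        WorkItem(103, "APlus", "6.2", ["attendance.asp"]),
    ]
    # A file touched by two work items appears only once in the result.
    print(collect_release_files(items, "APlus", "6.1"))
```

Checking the resulting files out of source control is a separate step that depends on the source control system in use, so it is left out of this sketch.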

The team also set up a dedicated testing server built with snapshots of client data and sites to ensure they were testing and reviewing their changes just as a client would see them. They also designated a build engineer role to manage and maintain the test server and build process. Now pulling and testing the latest build of any product was easy. Rebuilding the testing environment could now be done at any time within a couple of hours instead of in a few days.

From then on, the test environment was rebuilt every Monday. The latest build was collected from source control and pushed to the test sites, and the newest copies of the client databases were pulled down from the production servers to overwrite the previous week's data. Any integration issues were detected at the beginning of the work week, giving the team plenty of time to resolve them before the next build.

The company saw a drastic decrease in the number of bugs and integration issues that were making it into the production environment. After using this process for a few Sprints, integration issues became nearly nonexistent, and the bug count began to drop. The process and test environment became so stable that clients were given access to their own "test sites" for previewing custom features or major changes.

Telling the Story

Next, the team decided to address the lengthy specification and testing documents. On average, they were spending three times as long writing these as they were writing and testing the code - and the documents were rarely reusable.

Development tasks were described in Specification documents. Each "spec" was written by an analyst, reviewed by a manager, and coded by a developer. There were two versions of most specs; one for clients and one for internal use. The manager always estimated the work. Below is a sample portion of an internal spec that was used to build a report for a client:

SST Eval Report - SES vs. Non SES – [developer]/[analyst]

Original Author: [analyst] on 12/15/2009

Reviewed By: [manager] on 12/21/2009

Revised By: [developer] on 12/29/2009

Reviewed By: [manager] on 12/30/2009

Background: A report is needed that shows Average Score, Highest Score, Lowest Score, Median and Mode for the selected Test for both SES and Non-SES students.

Create a new [Report] record:

- Report = SES Evaluation Report

- Report Group = Academics

- Control = SES Evaluation Report

- Control Category = Reports - Academics

- FileName = rptSESEvaluationCriteria.asp

- Description = View the Average, Highest Score, Lowest Score, Median and Mode for the selected Test for both SES and Non-SES students.

- OneSite = False

- AllSites = True


Our coach suggested trying the User Story format to capture requirements instead. The team developed a basic template and saw immediate productivity gains. They could now create a single document that captured requirements, details and acceptance / test criteria, while still leaving the team creative flexibility in how they would accomplish the work. The same document could also be shared with and revised by stakeholders, whether internal or external. Here's a sample User Story from a report we built for a client:

Story: As an SES user, I need a report that shows the Application Status of students in my Program.

Details: Create a Report that will group students by Application Status. (Figure #3)

- The report needs to run for a single Site, or for all Sites within a District.

- Display the Student's Name, ID, Site, Application Status and Notes.

- Report will output to HTML or CSV.

Estimate: 12 hours
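To show how the parts of the template fit together, here is a minimal sketch that models a story as a role, a need, a list of details and an hour estimate. The `UserStory` class is hypothetical, not the team's actual template:

```python
from dataclasses import dataclass, field


@dataclass
class UserStory:
    """Hypothetical model of a User Story: who needs what, plus details and an estimate."""
    role: str
    need: str
    details: list[str] = field(default_factory=list)
    estimate_hours: float = 0.0

    def headline(self) -> str:
        # The "As <role>, I need <goal>" sentence that opens each story.
        return f"As {self.role}, I need {self.need}."

    def render(self) -> str:
        # Plain-text layout: headline, detail bullets, then the hour estimate.
        lines = [self.headline(), "Details:"]
        lines += [f"- {d}" for d in self.details]
        lines.append(f"Estimate: {self.estimate_hours:g} hours")
        return "\n".join(lines)


story = UserStory(
    role="an SES user",
    need="a report that shows the Application Status of students in my Program",
    details=["Report will output to HTML or CSV."],
    estimate_hours=12,
)
print(story.render())
```

Keeping the details as a list rather than free text makes each acceptance point easy to check off during review.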

The responsibility of estimating all deliverable work items was also passed to the team. For small tasks, 1-2 of the team members would review the Story and provide an estimate. For larger projects, the team began holding Planning Poker meetings to estimate all of the work items together.
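In Planning Poker, each estimator picks a card privately and everyone reveals at the same time; when estimates diverge, the highest and lowest estimators explain their reasoning and the team votes again. The sketch below evaluates one round under an assumed acceptance rule (highest vote no more than 1.5 times the lowest, then take the median). The rule and the `poker_round` name are illustrative choices, not the procedure the team used:

```python
from statistics import median


def poker_round(votes: dict[str, float], max_ratio: float = 1.5):
    """Evaluate one Planning Poker round (assumed acceptance rule).

    Returns (estimate, None) when the votes are close enough to accept,
    or (None, (low_voter, high_voter)) naming who should explain their
    reasoning before the team votes again.
    """
    low = min(votes, key=votes.get)
    high = max(votes, key=votes.get)
    if votes[high] <= votes[low] * max_ratio:
        return median(votes.values()), None
    return None, (low, high)


print(poker_round({"ann": 8, "bo": 10, "cy": 12}))  # close enough: accept the median
print(poker_round({"ann": 4, "bo": 10, "cy": 16}))  # too far apart: discuss and re-vote
```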

Creating a list of work items and requirements, which had previously been the most time consuming portion of the development process, was now one of the simplest. It also made estimating work more efficient and accurate, because everyone involved could easily understand the scope of a feature and what would be required to deliver it.

Agile Refined

Shortly after the team adopted Agile, it became apparent that our product was not stable. Unplanned work ("injections") arrived every day, and the team members felt the frustration. We had adopted a four-week sprint followed by a release, which was clearly too long given the amount of change happening from day to day. To address the issue, the product owners and the team decided to add a "critical bugs" section to the Scrum board. This section had a priority independent of the primary sprint, and the team was expected to manage both at the same time. The new process began to fail immediately. The team was divided, with no clear vision of the product. A change was needed, but we were unsure exactly how to make it.

Our coach recommended two things. First, shorten the sprints to allow for continuous improvement, so that User Stories identified during one sprint could be handled in the next without being treated as an "injection". Second, split the team in two. Since clients were requesting a constant stream of customizations, and the product needed a constant stream of bug fixes to stabilize, it made sense to give two teams separate, more specific goals. Within the first sprint, the teams and the product owners began to feel the success of these changes. The maintenance team could run one-week sprints, allowing for more rapid releases, while the customization team could use longer sprints to build larger features. Unexpectedly, we found that teams of 3 or 4 operated more effectively: communication improved and internal impediments were minimized. The change worked!

There's no "I" in Cayen

One of our coach's initial recommendations to management was to focus on and encourage cross-team collaboration. However, the existing work environment was divided. Cubes separated teams from each other. Management was upstairs, Client Services and QA were on the ground floor, and Engineering was in the basement.

To alleviate this challenge, management decided to move all the teams to the same floor of the building. Almost all of the cubicle walls were taken down to make room for everyone and to create an open workspace:

They also set up a dedicated Scrum room in one of the now vacant offices. All teams in the company have a board and daily or weekly Scrum meetings here to help spread visibility and interaction across the organization:

Above: IT/Admin, New Products, Client Care and Business Development.

Below: Data Services and Classic (APlus) Products.

As a result, cross-team communication has improved dramatically since the change. It's easy to stop by someone's workstation and ask a question, or pull a developer into a client call to answer a technical question. The thread of collaboration runs through the organization on a daily basis.

Results so far

- All of our internal teams / departments, including management, now utilize the Scrum framework and Agile concepts as a regular part of their day-to-day workflow.

- Releases are almost always on time, missing the target date only twice in the last two years. Even in those cases, the release was delivered within 1 day of the target.

- Delivery times are much shorter, with a release occurring every 3-5 weeks.

- Estimates are accurate, averaging a 4.4% variance. A 10 hour task now takes the team about 10.4 hours to complete.

- Bug counts are down more than 65% across all products, and they continue to fall with each release.

- Teams are averaging 96.6% satisfaction with staff interactions on Client Care surveys.

- Teams are more engaged and empowered to make decisions that benefit the organization and clients. We rarely have Support tickets that make it beyond the Client Care and QA teams.

- Teamwork and collaboration are greatly improved, giving developers better insight into client wants and needs. New features / customizations are demonstrated to internal and external stakeholders before being put into production. Clients are also given a dedicated testing site while custom features are being built for them to review and verify the changes before they are deployed to their production site.
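The estimation figures are easiest to compare as a relative overrun. Assuming "variance" here means (actual - estimated) / estimated, averaged over tasks (the article doesn't spell out its formula, and the function names below are hypothetical), a sketch:

```python
def estimate_variance(estimated_hours: float, actual_hours: float) -> float:
    """Relative overrun of actual effort versus the estimate (0.044 means 4.4%)."""
    if estimated_hours <= 0:
        raise ValueError("estimate must be positive")
    return (actual_hours - estimated_hours) / estimated_hours


def mean_variance(pairs) -> float:
    """Average relative overrun over (estimated, actual) pairs."""
    pairs = list(pairs)
    return sum(estimate_variance(e, a) for e, a in pairs) / len(pairs)


print(f"{estimate_variance(10, 21.4):.0%}")   # the old 10-hour task taking 21.4 hours
print(f"{estimate_variance(10, 10.44):.1%}")  # a 10-hour task at 4.4% variance
```

Under this definition, the old 21.4-hour average for a 10-hour estimate is a 114% overrun, while a 4.4% variance corresponds to roughly 10.4 hours.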

Agile stresses continuous improvement. We're very proud of our accomplishments, but recognize that there are plenty of things we can still do better - for our clients, our business and ourselves.

Conclusions

Some things we've learned from this experience:

- Keep the client's needs top of mind. All teams should be hearing "the voice of the customer" as often as possible. Involve clients in the development life cycle as much as they're willing to be involved.

- Collaboration is critical. At all levels of the organization and external client base, constant communication and feedback are truly valuable.

- Support from upper management was critical to our success. They desired change and helped drive it - both by investing in the process (bringing in an expert to coach the teams, Scrum boards / materials, work environment changes, etc.), and by constantly communicating their desire to make improvements in day-to-day processes.

- Having a great coach really helped us get up and running. Besides the experience, insight and excellent guidance he provided, he also mitigated internal disagreements that occurred at critical junctures of the journey simply by being a neutral third party and respected expert.

- The Product Owner role is a very important part of an efficient Agile team. Having a well-groomed Product Backlog and an up-to-date Product Roadmap provides a much higher degree of vision and flexibility to everyone involved in the project: the team, management, and the clients.

- Collect and post metrics. Add visibility to your results, good or bad. A good result is a success to share; a bad one is a common goal to improve.

- "Don't be a victim of your paradigms." A great quote from our Scrum coach. Basically, keeping an open mind to new ideas, opinions and techniques, on all levels of an organization, is a key to succeeding with Agile.

Resources

There are links throughout this article describing in detail the terms and processes that we used. We also frequently referenced materials from Mountain Goat Software, whose site offers many polished presentations that your team or group can make use of. "Overview of Scrum" and "Effective User Stories" have been particularly useful for us. Their books are also excellent sources of information.

We'd also like to share some simple tools that we've collected or created along the way (Downloadable content - 48kb):

- Roadmap.xls - Early on, we had difficulty looking beyond the current Sprint or release cycle we were in. This created a number of problems for us when trying to plan larger projects or coordinate work across teams - until we started using this tool. Now, anybody in the company can open this file and, in seconds, get a picture of what we're planning to work on months down the road.

- Product Backlog.xls - Just a simple Backlog sheet really. Our teams currently keep Stories in a custom-built ERP system, but we still use this template from time to time when "whiteboarding" a large feature or project. Note that we estimate Stories in Hours. We have a few business reasons for this; your business / team may want to use more abstract estimates.

- Sprint Backlog.xlsx - We use this common Scrum tool to help increase visibility on each Engineering team. Each day our ScrumMasters print and post new Burndowns so everyone in the organization can see the team's progress. It's great for Sprint Planning meetings as well; just bring up a new worksheet and start pasting in your Story commitments.

- User Story Template.xls - Because we accept customization requests from clients, we routinely send out quotes for development work. We needed a simple way to communicate the User Stories, acceptance criteria and work hours between the clients, developers, and business team. This template has worked really well for us; so well that we use it for all large projects, both internal and external.
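The Burndowns posted from the Sprint Backlog come down to simple arithmetic. A minimal sketch, assuming a layout with one row per task and one column of remaining hours per sprint day (the function names and layout are hypothetical, not taken from the spreadsheet):

```python
def ideal_burndown(total_hours: float, sprint_days: int) -> list[float]:
    """Straight line from total_hours on day 0 down to zero on the last day."""
    return [total_hours * (1 - day / sprint_days) for day in range(sprint_days + 1)]


def remaining_by_day(tasks_remaining: list[list[float]]) -> list[float]:
    """Sum each day's remaining-hours column across all tasks.

    tasks_remaining[t][d] is the hours left on task t at the end of day d,
    as re-estimated at the Daily Scrum.
    """
    return [sum(day) for day in zip(*tasks_remaining)]


ideal = ideal_burndown(40, 4)
actual = remaining_by_day([[10, 8, 6, 2, 0], [30, 25, 15, 9, 0]])
for day, (i, a) in enumerate(zip(ideal, actual)):
    print(f"day {day}: ideal {i:5.1f}  actual {a:5.1f}")
```

Plotting the actual series against the ideal line gives the familiar Burndown chart; points above the line mean the team is behind plan.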

Questions? Thoughts? Criticism? We'd love to hear from you: engineering@cayen.net

History

* 05/30/2012: Initial posting.

* 06/02/2012: Added a link for Downloadable content.