For a change from me bleating on about ServiceNow reporting, I thought I’d write something a lot more generic.

This is the number one recurring mistake I have seen consulting and pops its ugly head up time and again across all business sectors, reporting software, and methodologies.

It is particularly prevalent for ITIL service reporting, but appears whenever time periods are used as a basis for measuring multiple values.

The thought process tends to go something like this:

- Ok, we need to know how many Incidents were opened last week, so let’s filter the report for those records.

- Now we need to know the summary by Priority, so add a group for the Priority and count the Incidents in each group.

- What’s next? Oh yeah, Incidents which were closed last week. Easy stuff, let’s just count the Incidents with a Status of ‘Closed’.

- Next, outstanding Incidents that were not closed at the end of the week.

- Easy, just count the Incidents that do not have a Status of ‘Closed’.

- Done!

It’s not done! It’s massively wrong, misleading and hides the worst Incident resolution stats!

What this report will show is the number of Incidents opened last week and the number of those opened Incidents which were closed.

Any Incident which was opened two weeks ago and closed last week will not appear in this report, not only that, it will not appear as closed in any report… ever.

Additionally, if the report for the last week is refreshed first thing on a Monday morning, it will show one result, but if more Incidents from last week are closed after that and it is refreshed again: the results of the report will change.

This is horribly wrong for a report that should be a definitive record of the previous week’s results. And a ‘solution’ of “run it once, first thing on a Monday” is amateurish in the extreme.

And if all that is not bad enough, with the above report, it is possible for the KPIs for each week to be reached, but fail over a monthly or a yearly based report!

So What Went Wrong?

Quick answer: the date range.

Because the report developer’s focus was initially on the opened Incidents, the rest of the report metrics suffer.

To correct this, the date range filter needs to accommodate both open and closed Incidents within the last week.

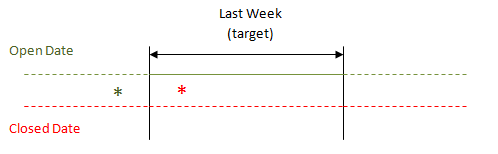

This is what the report does currently (the solid lines depict which date ranges are evaluated in the filter):

Because the closed date is not used, only Incidents opened in the last week are selected with no regard as to whether or not they are closed.

The asterisks are there to illustrate my earlier point. The green asterisk represents an Incident being opened towards the end of the week. The red asterisk is the same Incident being closed in the following week.

The closing of this Incident does not fall into the report logic and will never be displayed, even though an Incident being closed (or resolved) is the best indicator of work being done.

The obvious solution may appear to be to use the closed date, rather than status, like this:

This works for the open and closed Incidents, but does not identify ALL the outstanding ones at the week’s end.

The problem is that an Incident can be opened before last week and remains open during the entirety of last week.

To cover all possibilities, a wider approach is needed:

In short, every Incident opened before the end of last week which was not closed before the start of last week.

This logic will provide the correct bucket from which the separate metrics can be extracted.

In theory, this means scanning the entire database every week for long term open Incidents which can be a massive reporting overhead.

The way to handle this is to either make an arbitrary decision as to what age open Incidents can be ignored in this style of report (but ideally be featured in a dedicated ‘Old Incidents’ report)… or investigate the actual data and find the real cut-off date for Incidents which are not closed and report from that point.

Finally

The above is focused purely on the conflict between measuring three simple values in the one report.

With other metrics added, the issue is compounded, especially when re-opened, re-allocated or suspended Incidents are thrown into the mix.

The key thing to keep in mind: when designing any report is to base the date filters on whole of the data required for ALL the metrics being featured in the report.

And finally, whenever adding a new metric to an existing report, re-examine the date filter in light of the new requirement. It is sometimes the case that to get an accurate result for a new metric causes an impact on every other metric already in the report.