Introduction

SharpZipLib is a pretty great open source data compression library. If you need to package up files for distribution programmatically in .NET, it will probably solve 100% of your problems about 80% of the time. The main exception is files larger than 2GB: SharpZipLib won't handle those properly without a bit of extra effort.

ZipBuilder is an attempt to package up that extra effort in a reusable way so that nobody has to solve this problem again. It also offers a convenient answer to the more general need to "package up a bunch of files programmatically". With ZipBuilder, you specify which files to compress and the name of the output zip file, and that's it. The whole thing takes just two lines of code.

Background

If you have used SharpZipLib before, you are probably familiar with the typical pattern for creating a zip archive that contains a single file. In its most rudimentary form, it goes something like this:

using System.IO;

using ICSharpCode.SharpZipLib.Zip;

var inputStream = File.OpenRead("c:\\file_to_archive.txt");

var buffer = new byte[inputStream.Length];

inputStream.Read(buffer, 0, (int)inputStream.Length); // Stream.Read takes an int count, so the long length is cast down

var zipStream = new ZipOutputStream(File.Create("c:\\archive.zip"));

var zipentry = new ZipEntry(Path.GetFileName("c:\\file_to_archive.txt"));

zipStream.PutNextEntry(zipentry); // start the entry on the zip stream, then write its bytes

zipStream.Write(buffer, 0, buffer.Length);

zipStream.Finish();

zipStream.Close();

This example doesn't handle multiple files in the archive, and it doesn't record a checksum for the archived file. So with these 9 lines of code, I haven't really solved any problem of great importance. To produce a package made up of multiple files, each with a checksum, I'd have to add a lot more code.

An astute reader will spot an even bigger problem lurking here: this code's algorithm for compressing a single file is essentially:

- Read a large block of data into memory

- Write a large block of data back to disk through the compressor

This works well if your files are reasonably small, but not if they are larger than 2GB. Once you need to compress files that large, you have to break the work down into chunks that fit within the signed 32-bit count parameter of .NET's Stream.Read and Stream.Write methods.
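To make the limitation concrete, here is a small sketch that uses only System.IO. It first shows what happens when a length above 2GB is forced into an `int`, and then copies a stream one fixed-size block at a time instead. `CopyInChunks` is a hypothetical helper written for illustration, not part of SharpZipLib or ZipBuilder. In real use the destination would be a ZipOutputStream rather than a MemoryStream.

```csharp
using System;
using System.IO;

// A 3 GB length cannot be passed as the int count that Stream.Read and Stream.Write expect.
long threeGigabytes = 3L * 1024 * 1024 * 1024;
int truncated = unchecked((int)threeGigabytes);
Console.WriteLine($"{threeGigabytes} bytes cast to int: {truncated}");

// Hypothetical helper: copy one stream to another a block at a time,
// so no single Read or Write call needs a count larger than blockSize.
static long CopyInChunks(Stream source, Stream destination, int blockSize)
{
    var buffer = new byte[blockSize];
    long total = 0;
    int read;
    // Read may return fewer bytes than requested; 0 signals end of stream.
    while ((read = source.Read(buffer, 0, buffer.Length)) > 0)
    {
        destination.Write(buffer, 0, read);
        total += read;
    }
    return total;
}

// MemoryStreams stand in for the input file and the ZipOutputStream.
var input = new MemoryStream(new byte[10_000]);
var output = new MemoryStream();
long copied = CopyInChunks(input, output, blockSize: 4096);
Console.WriteLine($"Copied {copied} bytes in blocks of 4096");
```

The loop writes only `read` bytes each time rather than the whole buffer, because the final block is usually shorter than `blockSize`.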

Even if you do manage to break the input stream into chunks, you then run into a new problem if you want to provide checksum values for verifying file integrity: the CRC32 object that ships with SharpZipLib doesn't support generating a CRC over anything but a single buffer at a time. So you also need to write your own CRC32 object that can accumulate data over multiple buffer reads.
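The accumulation idea itself is small. The sketch below is a standard table-driven CRC-32 using the reflected polynomial 0xEDB88320 that zip uses. It is not the article's Crc32Accumulator, and `Crc32Update` is a hypothetical name. The point it demonstrates is that the running CRC state is carried from one call to the next, so feeding the data in pieces gives the same result as a single call over the whole buffer.

```csharp
using System;
using System.Text;

// Build the 256-entry lookup table for the reflected polynomial 0xEDB88320.
var table = new uint[256];
for (uint n = 0; n < 256; n++)
{
    uint c = n;
    for (int k = 0; k < 8; k++)
        c = (c & 1) != 0 ? 0xEDB88320u ^ (c >> 1) : c >> 1;
    table[n] = c;
}

// Hypothetical helper: fold count bytes of buffer into a running (pre-inverted) CRC state.
uint Crc32Update(uint crc, byte[] buffer, int offset, int count)
{
    for (int i = offset; i < offset + count; i++)
        crc = table[(crc ^ buffer[i]) & 0xFF] ^ (crc >> 8);
    return crc;
}

// The zip CRC starts from 0xFFFFFFFF and is inverted once, at the very end.
byte[] data = Encoding.ASCII.GetBytes("123456789");
uint oneShot = ~Crc32Update(0xFFFFFFFFu, data, 0, data.Length);

// The same data fed in three chunks of 4, 4 and 1 bytes.
uint running = 0xFFFFFFFFu;
running = Crc32Update(running, data, 0, 4);
running = Crc32Update(running, data, 4, 4);
running = Crc32Update(running, data, 8, 1);
uint chunked = ~running;

Console.WriteLine($"{oneShot:X8} {chunked:X8}"); // CBF43926 CBF43926
```

The final inversion is applied only once, after the last chunk. Inverting after every chunk would break the accumulation.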

Luckily, ZipBuilder solves both of these problems. It can take any file and process it one chunk at a time, and it has its own Crc32Accumulator that calculates a CRC over a large set of data read in chunks. Finally, ZipBuilder provides a convenient interface that lets you simply "create a zip package from a bunch of files" without having to think too hard about it.

Using the Code

To use ZipBuilder, you only need to write the following:

var zipper = new ZipBuilder();

zipper.CreatePackage("c:\\backups\\backup.zip", "c:\\data\\*.sql");

With these two lines of code, you can take all of the SQL files in the data folder and store them in a zip archive called backup.zip. Each file is stored with a proper checksum, no matter how large it is.
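The article doesn't show how CreatePackage interprets a pattern such as c:\\data\\*.sql. One plausible approach is to split the spec into a directory part and a file-name pattern and let Directory.GetFiles do the matching. This is offered as an assumption, not as ZipBuilder's actual code, and `ExpandFileSpec` is a hypothetical helper.

```csharp
using System;
using System.IO;

// Hypothetical helper: turn "folder/*.ext" into the list of matching files.
static string[] ExpandFileSpec(string fileSpec)
{
    // Directory part; fall back to the current directory for a bare pattern.
    string directory = Path.GetDirectoryName(fileSpec);
    if (string.IsNullOrEmpty(directory))
        directory = Directory.GetCurrentDirectory();

    // File-name part, which may contain * and ? wildcards.
    string pattern = Path.GetFileName(fileSpec);
    return Directory.GetFiles(directory, pattern, SearchOption.TopDirectoryOnly);
}

// Usage: a scratch folder with two .sql files and one .txt file.
string folder = Path.Combine(Path.GetTempPath(), "zipbuilder-demo-" + Guid.NewGuid().ToString("N"));
Directory.CreateDirectory(folder);
File.WriteAllText(Path.Combine(folder, "a.sql"), "select 1;");
File.WriteAllText(Path.Combine(folder, "b.sql"), "select 2;");
File.WriteAllText(Path.Combine(folder, "notes.txt"), "not included");

string[] matches = ExpandFileSpec(Path.Combine(folder, "*.sql"));
foreach (string file in matches)
    Console.WriteLine(Path.GetFileName(file));

// Clean up the scratch folder.
Directory.Delete(folder, recursive: true);
```

Directory.GetFiles does not guarantee any particular order, so a builder that needs a stable archive layout would sort the result first.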

That's it for the basic case. However, ZipBuilder supports a few other features that might be useful. First, if you want to adjust the compression level for the files in your archive, or change the amount of memory used for the chunk buffers, you can do so with code like the following:

var zipper = new ZipBuilder();

zipper.CompressionLevel = 9;

zipper.BlockSize = 1048576 * 4;

zipper.CreatePackage("c:\\backups\\backup.zip", "c:\\data\\*.sql");

The CompressionLevel and BlockSize properties tell ZipBuilder how tightly to compress the input files and how much memory to use during compression. By default, ZipBuilder uses a 64MB memory buffer. You can decrease this in more constrained environments, or increase it to speed things up when you have memory to spare; the example above sets it to 1048576 * 4 bytes, or 4MB. ZipBuilder also defaults to compression level 9. I recommend consulting SharpZipLib's documentation for a description of what each compression level implies.
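As a rough guide to the BlockSize trade-off, the buffer costs about BlockSize bytes, and a file takes roughly ceil(length / BlockSize) reads. The sketch below works through that arithmetic for a 5GB file with the 64MB default and with the 4MB value used above. It also shows the kind of range checks such properties might perform. `ReadsNeeded` and `Validate` are hypothetical names. The 0 to 9 range reflects SharpZipLib's deflate levels, where 0 stores data without compressing it and 9 compresses hardest.

```csharp
using System;

const int OneMegabyte = 1048576;

// Number of full-buffer Read calls needed to walk a file of the given length.
static long ReadsNeeded(long fileLength, int blockSize) =>
    (fileLength + blockSize - 1) / blockSize;   // ceiling division

// Hypothetical checks a builder could apply to its settings.
static void Validate(int compressionLevel, int blockSize)
{
    if (compressionLevel < 0 || compressionLevel > 9)
        throw new ArgumentOutOfRangeException(nameof(compressionLevel), "Deflate levels run from 0 to 9.");
    if (blockSize <= 0)
        throw new ArgumentOutOfRangeException(nameof(blockSize), "Block size must be positive.");
}

long fiveGigabytes = 5L * 1024 * 1024 * 1024;
int defaultBlock = 64 * OneMegabyte;   // the 64MB default described above
int smallBlock = 4 * OneMegabyte;      // 1048576 * 4, as in the example

Validate(9, defaultBlock);
Console.WriteLine($"64MB blocks: {ReadsNeeded(fiveGigabytes, defaultBlock)} reads"); // 80 reads
Console.WriteLine($"4MB blocks: {ReadsNeeded(fiveGigabytes, smallBlock)} reads");    // 1280 reads
```

Smaller blocks mean more calls but less memory. Because the data is streamed in either case, the total amount processed is the same.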

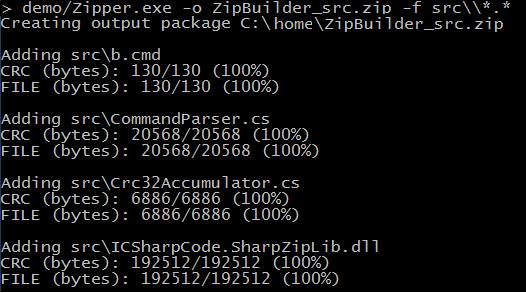

Second, you can register callbacks to monitor ZipBuilder's progress as it creates a zipped package. You can see this in action in the demo application:

var packer = new ZipBuilder();

packer.FileStarted = (string f) => {

Console.WriteLine(String.Empty);

Console.WriteLine("Adding " + f);

};

packer.FileProgress = (long p, long t) => {

float percentComplete = (float)p / (float)t * 100;

var msg = String.Format("FILE (bytes): {0:n0}/{1:n0} ({2:0}%)",

(p), (t), percentComplete);

Console.Write(String.Empty.PadLeft(70, ' ') + "\r");

Console.Write(msg + "\r");

if (p == t) {

Console.WriteLine();

}

};

This example shows how to assign callbacks that tell you when ZipBuilder starts a file and how far it has progressed through that file, in terms of bytes processed versus total bytes to process. When the two are equal, the file is finished. The Zipper.exe demo utility uses this to display the progress of the CRC calculation and file compression.
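On the producing side, callbacks like these are typically raised once per file and once per block. The sketch below assumes the callbacks are compatible with `Action<string>` and `Action<long, long>`. That matches the lambdas above, but the article doesn't confirm it. `ProcessFile` is hypothetical, not ZipBuilder's code. Because the last call reports processed bytes equal to total bytes, the demo's `p == t` check fires once per non-empty file. An empty file produces no progress calls at all in this sketch, which also avoids the 0/0 in the demo's percentage calculation.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

// Hypothetical producer: reports a start event, then cumulative progress per block.
static void ProcessFile(string name, Stream source, int blockSize,
                        Action<string> fileStarted, Action<long, long> fileProgress)
{
    fileStarted?.Invoke(name);
    long total = source.Length;
    long processed = 0;
    var buffer = new byte[blockSize];
    int read;
    while ((read = source.Read(buffer, 0, buffer.Length)) > 0)
    {
        // ...CRC update and compression of the first `read` bytes would go here...
        processed += read;
        fileProgress?.Invoke(processed, total);
    }
}

var events = new List<string>();
ProcessFile("data.sql", new MemoryStream(new byte[10_000]), 4096,
    f => events.Add("started " + f),
    (p, t) => events.Add($"{p}/{t}"));

// Prints "started data.sql", then 4096/10000, 8192/10000 and 10000/10000 on separate lines.
foreach (string e in events)
    Console.WriteLine(e);
```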

Note that these "events" are implemented as simple callbacks rather than with C#'s event mechanism. It would be easy to convert ZipBuilder to use C# events so that it supports multiple observers; I just didn't think that was necessary for this project.
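For reference, C# delegates are already multicast. Supporting several observers is therefore mostly a matter of combining handlers with `+=` rather than replacing them with `=`. The sketch below uses a plain `Action<long, long>` variable to stand in for a FileProgress-style callback, which is an assumption about its type. Declaring the member with the `event` keyword, for example `public event Action<long, long> FileProgress;`, would additionally prevent outside code from overwriting or invoking it directly.

```csharp
using System;
using System.Collections.Generic;

var received = new List<string>();

// Two independent observers of the same progress notifications.
Action<long, long> onConsole = (p, t) => received.Add($"console {p}/{t}");
Action<long, long> onLog = (p, t) => received.Add($"log {p}/{t}");

// '+=' combines delegates; '=' would replace whatever handler was there before.
Action<long, long> fileProgress = null;
fileProgress += onConsole;
fileProgress += onLog;

// Invoking the combined delegate calls every handler, in subscription order.
fileProgress?.Invoke(512, 1024);

// '-=' detaches a single handler and leaves the others attached.
fileProgress -= onLog;
fileProgress?.Invoke(1024, 1024);

foreach (string line in received)
    Console.WriteLine(line);
```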

Points of Interest

Overall, I'm fairly happy with how this project turned out. I was a little frustrated that SharpZipLib's CRC32 object didn't support accumulated CRC calculation, but writing my own implementation to work around that limitation was fun. I'm also a little concerned that I may have missed something in SharpZipLib that would have solved my problem with its CRC32 implementation. Hopefully, the SharpZipLib authors will adopt my Crc32Accumulator implementation and make its features a core part of their CRC32 object, assuming those features are actually novel and I haven't missed something obvious.

History