This article presents a new approach to creating software documentation and describes the structure, functionality and internals of the gds application, which was written to implement the concepts presented here. Please note that the application itself is not intended to be a commercial product or a company tool (although it may serve as one); it is rather a conceptual implementation of the ideas that follow.

Software documentation usually consists of one or more text and graphical documents that explain how the software is structured and how it works. There are many types of software documentation and even more methodologies for creating it (e.g. Unified Process, the agent-oriented Tropos, etc.), and most of them are standardized groups of steps, refinements and frameworks. Without diving into any specific documentation system, it is clear that each one has pros and cons depending on the task it is used for. Following a software documentation methodology means planning a number of initial steps and then proceeding to design the application's components, which will eventually be translated into code. Although modeling languages, graphs and diagrams can be used to describe the structure of an already written code project, the standard modeling techniques recommend a series of steps and practices to be followed from the start of each software project. In particular, modeling a large piece of software from the ground up is a complex task that requires deep knowledge of the underlying architecture, APIs and available features.

Perfect knowledge of a language does not mean you can always easily grasp the meaning or concepts expressed in a text (or a generic graphical representation) that uses that language, and this is not only true of programming languages. It is therefore perfectly normal to spend time on a source file to understand what the code actually does and to "mentally link" those operations into the larger picture of the whole application. Describing the behavior and structure of a piece of software should help the people who will work with your code to understand how you structured it (how many modules there are, whether you followed a pattern, etc.) and what the purpose of each part of your project is. Commenting code extensively is good programming practice, but it is not always enough to replace proper documentation. If you really want other people to understand your code, your primary goal is to give complete insight into what your application does and how it works. A software company that hires a new programmer and puts them to work on a specific part of a larger application has an interest in providing as much information as possible on how that module works, what it is supposed to do and (possibly) why it was designed that way. The sooner the programmer grasps the code and becomes familiar with it, the sooner they will be fully productive and able to modify or extend it.

The ability to explain a concept clearly and effectively is a personal skill and varies from person to person. However, there are practices and techniques that can greatly simplify understanding a concept. First of all, there is the level of complexity. If a module is very complex (i.e. it is made up of many other functions/modules or performs a great variety of tightly interconnected operations), it might be difficult to describe in formal documentation. In general, every complex element can be split into a number of smaller parts. Take, for instance, a simple program that asks for a matrix and returns the square of each of its elements:

#include <iostream>

#include <cstring> // memcpy

using namespace std;

class myMatrix

{

public:

int rows, columns;

double *values;

myMatrix(int height, int width)

{

if (height == 0 || width == 0)

throw "Matrix constructor has 0 size";

rows = height;

columns = width;

values = new double[rows*columns];

}

~myMatrix()

{

delete [] values;

}

myMatrix& operator= (myMatrix const& m)

{

if(m.rows != this->rows || m.columns != this->columns)

throw "Size mismatch";

// Copy the elements of m into this matrix (destination first, then source)

memcpy(this->values, m.values, this->rows*this->columns*sizeof(double));

return *this;

}

double& operator() (int row, int column)

{

// Valid indices run from 0 to rows-1 and from 0 to columns-1

if (row < 0 || column < 0 || row >= this->rows || column >= this->columns)

throw "Index out of range";

return values[row*columns+column];

}

};

int main(int argc, char* argv[])

{

int dimX, dimY;

cout << "Enter the matrix's X dimension: ";

cin >> dimX;

cout << "Enter the matrix's Y dimension: ";

cin >> dimY;

myMatrix m_newMatrix(dimY,dimX);

for(int j=0; j<dimY; j++)

{

for(int i=0; i<dimX; i++)

{

cout << "Enter the (" << i << ";" << j << ")th element: " << endl;

cin >> m_newMatrix(j,i);

}

}

for(int j=0; j<dimY; j++)

{

for(int i=0; i<dimX; i++)

{

m_newMatrix(j,i) = m_newMatrix(j,i)*m_newMatrix(j,i);

}

}

cout << endl;

for(int j=0; j<dimY; j++)

{

for(int i=0; i<dimX; i++)

{

cout << m_newMatrix(j,i) << "\t";

}

cout << endl;

}

return 0;

}

There are various levels of detail at which this simple program's tasks and modus operandi can be explained. Just as higher-level languages abstract more than lower-level ones, a first, high level may explain the program's purpose in a simple and concise way. A second level may expand on the first and give insight into how the program is structured. A third level may continue the work of the second and expand each description further. This process can continue until the application's code is reached, which acts as the last level and contains all the information needed. The code provides the greatest level of detail, but it is also more complex than the other levels.

The above program might have a level structure like the following

In this case three levels (plus the code level) were chosen to represent the same information at increasing levels of detail, but the number of levels could have been greater. The concept of "greater detail" is fundamental to almost every kind of software documentation and to every software design process.
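One way to picture this layering is as a tree in which each node carries a description and its children describe the same thing in greater detail. The following is only a minimal sketch of that idea; `DocNode`, `describe`, `matrixProgramDoc` and the sample texts are hypothetical names chosen for illustration and are not taken from gds.

```cpp
#include <string>
#include <vector>

// Hypothetical node of a layered documentation tree (not a gds type).
struct DocNode {
    std::string summary;            // what this block does, at this level of detail
    std::vector<DocNode> details;   // the same block explained in greater detail
};

// Collect every summary down to maxLevel (level 1 is the root), indenting
// each line by its level so that the layering stays visible.
void describe(const DocNode& node, int maxLevel, std::vector<std::string>& out, int level = 1)
{
    if (level > maxLevel)
        return;
    out.push_back(std::string(2 * (level - 1), ' ') + node.summary);
    for (const DocNode& child : node.details)
        describe(child, maxLevel, out, level + 1);
}

// Sample tree for the matrix program above; the wording is illustrative only.
DocNode matrixProgramDoc()
{
    return DocNode{"Squares every element of a user-supplied matrix", {
        DocNode{"Read the matrix from the user", {
            DocNode{"Ask for both dimensions", {}},
            DocNode{"Read each element", {}}}},
        DocNode{"Square each element", {}},
        DocNode{"Print the result", {}}}};
}
```

Calling `describe` with a maximum level of 1 yields only the one-line purpose of the program, while higher limits progressively reveal how the work is split up.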

Another concept that needs to be taken into account when getting started with new code is the context in which a chunk of code sits. Most of the time a programmer spends searching for the part of the code where the program performs certain operations goes into building a "mental map" in which each block is categorized and its role in the overall architecture is well defined.

Finally, the execution order of the program's blocks isn't always obvious, especially when dealing with heavily multi-threaded code. Sometimes only a careful reading reveals the synchronization mechanisms of the threads involved.

Using a graphical and interactive approach to software documentation is a relatively new concept. Since a concrete example is worth a thousand words, this section presents a small Qt C++ program along with its associated interactive documentation. The entire package (program sources + documentation directory) can be downloaded from the link at the top of this page.

The program we are going to examine with the help of interactive documentation is a simple one: a basic linear function plotter for a restricted Cartesian graph area

Since this is a sample (and simple) application, only a basic drawing feature has been implemented, with code that is by no means brilliant in terms of error handling and modularity. Although the code is not hard to understand by reading it in full, had the application been more complex a programmer would have spent a considerable amount of time trying to understand the structure, all the data types and their roles, the execution flow (as already mentioned, multithreading can hinder this process) and the overall cooperation between the various modules.

The following video is a showcase of how the gds software produces an interactive documentation for the simple graph application

Youtube Demo Video of the GDS Application for the sample Cartesian Graph App


GDS stands for "Graphical Documentation System". It is an experimental proof-of-concept application designed to provide an interactive and highly intuitive overview of unfamiliar code. Used properly, gds allows programmers to create highly detailed documentation of their code for others to use and understand.

The concepts presented in the previous sections guided the design and implementation of the gds app. This section briefly presents how the application is used; the application's structure and code organization are presented afterwards. Note that gds uses OpenGL rendering and requires OpenGL 3.3 or higher to run properly. It also needs the Microsoft Visual C++ 2010 Redistributable (x86) package to be installed (it is freely available from Microsoft).

The application has two main operative modes:

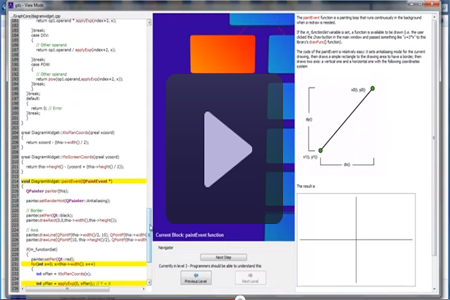

- View Mode - this mode provides a virtual tour of the three-level documentation and it's recommended for first-time code users

- Edit Mode - this mode lets you create new documentation (if the documentation directory where all the database files are stored isn't present) or edit existing documentation

The user is prompted for a choice upon the application's start

The view mode is quite intuitive: there is a code pane (not visible in level one, which everyone should be able to understand), a central diagram pane and a documentation pane on the right. There is also a navigation pane that allows three actions:

- Zoom out to the previous level (level 1 is the maximum a user can zoom out)

- Zoom in on the selected node (level 3 is the maximum a user can zoom in)

- Select next block - this is useful to navigate inside the code and get a precise idea of the order in which things happen in the code logic. The block order can be set in edit mode (as described below).
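The "Select next block" action can be thought of as stepping through the blocks of the current level in ascending order of their user-assigned index. The sketch below illustrates that idea only; `Block`, `nextBlock` and the wrap-around at the end are assumptions made for the example, not the gds implementation.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical block record: a label plus the user-assigned ordering index.
struct Block {
    std::string label;
    unsigned userIndex;
};

// Return the position of the block that follows `current` in index order:
// the block with the smallest userIndex greater than the current one.
// If there is none, wrap around to the block with the smallest index
// (the wrap-around is an assumption of this sketch).
// Precondition: current < blocks.size().
std::size_t nextBlock(const std::vector<Block>& blocks, std::size_t current)
{
    std::size_t next = current;      // best candidate with a larger index
    std::size_t lowest = current;    // fallback: smallest index overall
    bool found = false;
    for (std::size_t i = 0; i < blocks.size(); ++i) {
        if (blocks[i].userIndex < blocks[lowest].userIndex)
            lowest = i;
        if (blocks[i].userIndex > blocks[current].userIndex &&
            (!found || blocks[i].userIndex < blocks[next].userIndex)) {
            next = i;
            found = true;
        }
    }
    return found ? next : lowest;
}
```

With this kind of stepping, two blocks that share the same index cannot both be reached, which is one reason to keep the indices unique.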

The following screens show the gds app in view mode respectively in level 1, 2 and 3

The edit mode allows a programmer to create new documentation or to modify existing documentation. When there is no documentation (i.e. there is no gdsdata directory in the application's path), no graph is available and gds tries to recreate it. This can mean either that the documentation has been moved elsewhere (so gds cannot find it) or that no documentation exists yet.

The following is the gds app in edit mode with no documentation found

With the "Add Child Block" button, nodes (or root nodes, if there is no graph yet) can be added to the documentation along with a label (the block name), an index and the documentation text. The index field is used by the view mode to navigate through the blocks in the right order. If an index is duplicated or wrong, the view mode will simply navigate in the wrong order. At each level it is possible to delete an element (whatever it is: root, child or parent) or to swap its content with that of another element.

Pressing the "Next Level" button with a node selected causes gds to create a sub-level for that node, meaning that the block needs additional detail on how it works. gds automatically saves modified nodes when navigating between levels or when the application is closed.

The code pane on the left is visible only in levels 2 and 3 and lets a user select a code file and highlight lines in it

A level 2 block may have no code file associated with it, so there is a "Clear" button. Note that gds is meant to reside in a fixed location inside your code project's root directory: every path to a code file is stored relative to the gds directory. There are simple correction algorithms that retrieve the right line of code if it has been moved; however, gds should be used to document files that are "ready" and meant to be released. Naturally, a documentation file might also be deleted; the associated node would then show the "Documentation file not found" error and allow the user to define a new documentation file. As already stated, this is an experimental proof-of-concept application meant to convey a new documentation method; a commercial tool embracing this philosophy should integrate these functions into a proper version control system (which would also keep track of changed and moved files).

Since gds was conceived as an easy-to-use application, nothing more is needed to use it as a normal user.

The following sections describe the programming logic behind gds in greater detail, so they are mainly aimed at programmers or at anyone interested in modifying gds (gds is open source).

The following section requires some basic OpenGL programming knowledge - reader advised.

The QGLDiagramWidget class is the main widget of the entire gds application. It is the central pane, which renders the tree diagram as a 3D graph. Since the application uses the Qt libraries, the widget is a subclass of QGLWidget, which supports OpenGL drawing through three main virtual functions that can be reimplemented:

- paintGL() - this is the function where the openGL scene is rendered and where most of the widget's code resides

- resizeGL() - called whenever the widget is resized

- initializeGL() - sets up an openGL rendering context, called once before paintGL or resizeGL

The diagram widget also uses overpainting (see the Qt documentation for more information), which basically means that the block name is painted over the OpenGL-rendered scene. The code that requests a repaint and then performs the overpainting is the following:

void QGLDiagramWidget::paintEvent(QPaintEvent *event)

{

makeCurrent();

glPushAttrib(GL_ALL_ATTRIB_BITS);

glMatrixMode(GL_PROJECTION);

glPushMatrix();

glMatrixMode(GL_MODELVIEW);

glPushMatrix();

QGLWidget::paintEvent(event);

if(dataDisplacementComplete)

{

QPainter painter( this );

painter.setPen(QPen(Qt::white));

painter.setFont(QFont("Arial", 10, QFont::Bold));

QRectF rect(QPointF(10,this->height()-25),QPointF(this->width()-10,this->height()));

QRectF neededRect = painter.boundingRect(rect, Qt::TextWordWrap, "Current Block: " + m_selectedItem->m_label);

if(neededRect.bottom() > this->height())

{

qreal neededSpace = qAbs(neededRect.bottom() - this->height());

neededRect.setTop(neededRect.top()-neededSpace-10);

}

painter.drawText(neededRect, Qt::TextWordWrap , "Current Block: " + m_selectedItem->m_label);

painter.end();

}

swapBuffers();

glMatrixMode(GL_MODELVIEW);

glPopMatrix();

glMatrixMode(GL_PROJECTION);

glPopMatrix();

glPopAttrib();

if(!m_readyToDraw)

{

m_readyToDraw = true;

qWarning() << "GLWidget ready to paint data";

if(m_associatedWindowRepaintScheduled)

{

qWarning() << "m_associatedWindowRepaintScheduled is set";

if(m_gdsEditMode)

{

qWarning() << "Calling the deferred painting method now..";

((MainWindowEditMode*)m_referringWindow)->deferredPaintNow();

}

else

{

qWarning() << "Calling the deferred painting method now..";

((MainWindowViewMode*)m_referringWindow)->deferredPaintNow();

}

}

}

}

The code is extensively commented, but a few words are worth spending on it, since they may give useful insight into what is going on.

The QGLDiagramWidget uses double buffering, which means that the scene rendered in the OpenGL context isn't shown until swapBuffers() is called. This prevents flickering between color-picking passes (explained shortly) and animated transitions.

The initializeGL() function takes care of initializing all the resources needed by the OpenGL scene, i.e. VBOs (Vertex Buffer Objects: buffers that store the data of the elements to be drawn, such as vertices, UV texture coordinates, normals and indices), textures and shaders.

loadShadersFromResources("VertexShader1.vert", "FragmentShader1.frag", &ShaderProgramNormal);

loadShadersFromResources("VertexShader2Picking.vert", "FragmentShader2Picking.frag", &ShaderProgramPicking);

freeBlockBuffers();

initBlockBuffers();

freeBlockTextures();

initBlockTextures();

The GL widget uses a simple 3D model whose vertices, UV texture coordinates, normals and indices are stored in the "roundedRectangle.h" file. The widget renders it and applies a Phong lighting model through the compiled shader programs. There are two pairs of vertex and fragment shaders: the first pair is used to draw elements normally, the second to render the color-picking scene and to perform simple operations such as drawing connectors.

The vertex shader used to normally render objects is the following

#version 330 core

layout(location = 0) in vec3 aVertexPosition;

layout(location = 1) in vec2 aTextureCoord;

layout(location = 2) in vec3 aVertexNormal;

uniform mat4 uMVMatrix;

uniform mat4 uPMatrix;

uniform mat3 uNMatrix;

out vec2 vTextureCoord;

out vec3 vTransformedNormal;

out vec4 vPosition;

void main()

{

vPosition = uMVMatrix * vec4(aVertexPosition, 1.0);

gl_Position = uPMatrix * vPosition;

vTextureCoord = aTextureCoord;

vTransformedNormal = uNMatrix * aVertexNormal;

}

There are attributes that receive the model's vertices, UV texture coordinates and unit normals, and uniforms that receive the perspective matrix, the modelview matrix (composed of the model matrix, which is set to the element's position, and the view matrix, which points at the root element by default but can be changed with the arrow keys on the keyboard) and a normal matrix needed to preserve the direction and length of the unit normal vectors. (If you are interested in why a special normal matrix has to be passed to the shaders to get the lighting right, take a look at Eric Lengyel's "Mathematics for 3D Game Programming and Computer Graphics".) The vertex shader basically just calculates the new vertex position and passes it to the fragment shader along with the UV coordinates and the transformed normal.
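For reference, the usual way to obtain such a normal matrix is to take the inverse transpose of the upper-left 3x3 part of the modelview matrix. The article does not show how gds fills `uNMatrix`, so the following is only a sketch of that standard construction; `Mat3` and `normalMatrix` are hypothetical names.

```cpp
#include <array>
#include <cmath>

using Mat3 = std::array<std::array<double, 3>, 3>;   // row-major 3x3 matrix

// Inverse transpose of m, i.e. the cofactor matrix divided by the determinant.
// Returns false (leaving out untouched) if m is singular.
bool normalMatrix(const Mat3& m, Mat3& out)
{
    Mat3 c;   // cofactor matrix of m
    c[0][0] =  (m[1][1]*m[2][2] - m[1][2]*m[2][1]);
    c[0][1] = -(m[1][0]*m[2][2] - m[1][2]*m[2][0]);
    c[0][2] =  (m[1][0]*m[2][1] - m[1][1]*m[2][0]);
    c[1][0] = -(m[0][1]*m[2][2] - m[0][2]*m[2][1]);
    c[1][1] =  (m[0][0]*m[2][2] - m[0][2]*m[2][0]);
    c[1][2] = -(m[0][0]*m[2][1] - m[0][1]*m[2][0]);
    c[2][0] =  (m[0][1]*m[1][2] - m[0][2]*m[1][1]);
    c[2][1] = -(m[0][0]*m[1][2] - m[0][2]*m[1][0]);
    c[2][2] =  (m[0][0]*m[1][1] - m[0][1]*m[1][0]);

    // Determinant by expansion along the first row
    double det = m[0][0]*c[0][0] + m[0][1]*c[0][1] + m[0][2]*c[0][2];
    if (std::fabs(det) < 1e-12)
        return false;

    for (int r = 0; r < 3; ++r)
        for (int col = 0; col < 3; ++col)
            out[r][col] = c[r][col] / det;
    return true;
}
```

For a pure rotation the result equals the rotation itself; the inverse transpose only makes a difference when the modelview matrix contains non-uniform scaling.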

The fragment shader takes care of calculating the light direction (these shaders use per-fragment lighting from a point light) and the light weighting vector that is used to weight the light's color components. Finally, it samples the texture (using the UV texture coordinates) and modulates the result by the light weighting.

#version 330 core

in vec2 vTextureCoord;

in vec3 vTransformedNormal;

in vec4 vPosition;

uniform sampler2D myTextureSampler;

uniform vec3 uAmbientColor;

uniform vec3 uPointLightingLocation;

uniform vec3 uPointLightingColor;

// Core-profile GLSL 3.30 has no gl_FragColor: the output is declared explicitly

out vec4 outColor;

void main()

{

vec3 lightWeighting;

vec3 lightDirection = normalize(uPointLightingLocation - vPosition.xyz);

float directionalLightWeighting = max(dot(normalize(vTransformedNormal), lightDirection), 0.0);

lightWeighting = uAmbientColor + uPointLightingColor * directionalLightWeighting;

// texture() replaces texture2D(), which is not available in the core profile

vec4 fragmentColor = texture(myTextureSampler, vTextureCoord);

outColor = vec4(fragmentColor.rgb * lightWeighting, fragmentColor.a);

}

The paintGL() function is where most of the graphics work is done. After initializing the viewport and several other default values (e.g. glClearColor), the function runs in one of two modes:

- A color picking one

- The normal rendering one

Color picking is a graphics technique often used with OpenGL to identify the objects clicked in a scene. It is a more recent technique than SELECT picking and integrates cleanly with the programmable pipeline (SELECT picking, on the other hand, relies on the fixed-function pipeline).

Basically, each object is stored as a "dataToDraw" object and is given a unique color:

unsigned char dataToDraw::gColorID[3] = {0, 0, 0};

float QGLDiagramWidget::m_backgroundColor[3] = {0.2f, 0.0f, 0.6f};

dataToDraw::dataToDraw()

{

m_colorID[0] = gColorID[0];

m_colorID[1] = gColorID[1];

m_colorID[2] = gColorID[2];

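// Advance the global ID like a 3-byte counter. The components are unsigned

// chars, so incrementing 255 wraps around to 0: a component that wrapped

// carries into the next one (a "> 255" test could never be true).

gColorID[0]++;

if(gColorID[0] == 0)

{

gColorID[1]++;

if(gColorID[1] == 0)

{

gColorID[2]++;

}

}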

if(gColorID[0] == (QGLDiagramWidget::m_backgroundColor[0]*255.0f)

&& gColorID[1] == (QGLDiagramWidget::m_backgroundColor[1]*255.0f)

&& gColorID[2] == (QGLDiagramWidget::m_backgroundColor[2]*255.0f))

{

gColorID[0]++;

}

}

When the user clicks on an object, the mouse position is recorded and, since raw OpenGL has no notion of objects as entities, the entire scene is rendered with each mesh drawn in its unique color. The pixel under the mouse click is then read back from the framebuffer and its color is compared against each object's color to identify the object the user clicked on.
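The idea can be condensed into a few lines of plain C++, independent of OpenGL. The sketch below hands out one RGB triple per object and later maps a pixel read back from the framebuffer to the object that owns it. `ColorId`, `ColorIdAllocator` and `findByColor` are hypothetical names; skipping the background color mirrors the check made in the constructor above.

```cpp
#include <array>
#include <cstddef>
#include <vector>

using ColorId = std::array<unsigned char, 3>;   // one byte per RGB channel

// Hands out consecutive 24-bit colors, skipping the background color so that
// a click on empty space can never be mistaken for an object.
class ColorIdAllocator {
public:
    explicit ColorIdAllocator(ColorId background) : m_background(background) {}

    ColorId next()
    {
        if (toColor(m_counter) == m_background)
            ++m_counter;
        return toColor(m_counter++);
    }

private:
    // Split the counter into its three low-order bytes (red is the lowest).
    static ColorId toColor(unsigned long n)
    {
        return ColorId{ static_cast<unsigned char>(n & 0xFF),
                        static_cast<unsigned char>((n >> 8) & 0xFF),
                        static_cast<unsigned char>((n >> 16) & 0xFF) };
    }

    ColorId m_background;
    unsigned long m_counter = 0;
};

// Return the index of the object whose ID matches the pixel under the mouse,
// or -1 if the pixel belongs to no object (e.g. the background).
int findByColor(const std::vector<ColorId>& ids, const ColorId& pixel)
{
    for (std::size_t i = 0; i < ids.size(); ++i)
        if (ids[i] == pixel)
            return static_cast<int>(i);
    return -1;
}
```

Keeping a single integer counter and splitting it into bytes avoids handling the carry between channels by hand.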

The code snippet that performs this work is the following:

if(m_pickingRunning)

{

currentShaderProgram = ShaderProgramPicking;

if(!currentShaderProgram->bind())

{

qWarning() << "Shader Program Binding Error" << currentShaderProgram->log();

}

uMVMatrix = glGetUniformLocation(currentShaderProgram->programId(), "uMVMatrix");

uPMatrix = glGetUniformLocation(currentShaderProgram->programId(), "uPMatrix");

float gl_temp_data[16];

for(int i=0; i<16; i++)

{

gl_temp_data[i]=(float)gl_projection.data()[i];

}

glUniformMatrix4fv(uPMatrix, 1, GL_FALSE, &gl_temp_data[0]);

gl_modelView = gl_view * gl_model;

glBindBuffer(GL_ARRAY_BUFFER, blockVertexBuffer);

glEnableVertexAttribArray(0);

glVertexAttribPointer(

0,

3,

GL_FLOAT,

GL_FALSE,

sizeof (struct vertex_struct),

(void*)0

);

glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, blockIndicesBuffer);

glDisable(GL_TEXTURE_2D);

glDisable(GL_FOG);

glDisable(GL_LIGHTING);

adjustView();

postOrderDrawBlocks(m_diagramData, gl_view, gl_model, uMVMatrix, TextureID);

gl_previousUserView = gl_view;

unsigned char pixel[3];

glReadPixels(m_mouseClickPoint.x(), m_mouseClickPoint.y(), 1, 1, GL_RGB, GL_UNSIGNED_BYTE, pixel);

if(pixel[0] == (unsigned char)(m_backgroundColor[0]*255.0f)

&& pixel[1] == (unsigned char)(m_backgroundColor[1]*255.0f)

&& pixel[2] == (unsigned char)(m_backgroundColor[2]*255.0f))

{

m_goToSelectedRunning = false;

qWarning() << "background selected..";

m_pickingRunning = false;

this->setFocus();

}

else

{

QVector<dataToDraw*>::iterator itr = m_diagramDataVector.begin();

while(itr != m_diagramDataVector.end())

{

if((*itr)->m_colorID[0] == pixel[0] && (*itr)->m_colorID[1] == pixel[1] && (*itr)->m_colorID[2] == pixel[2])

{

m_selectedItem = (*itr);

this->setFocus();

m_pickingRunning = false;

The selected element's pointer is then passed back to the associated window (view mode or edit mode) to signal that the user has clicked on an element of the graph (either a different one or the one already selected).

Drawing the tree isn't trivial if you have no experience with binary and n-ary trees. The functions that compute the tree displacement (i.e. the position of each node) are the following:

void QGLDiagramWidget::calculateDisplacement()

{

long depthMax = findMaximumTreeDepth(m_diagramData);

m_depthIntervals.resize(depthMax+1);

postOrderTraversal(m_diagramData);

dataDisplacementComplete = true;

if(!m_swapInProgress)

repaint();

}

void QGLDiagramWidget::postOrderTraversal(dataToDraw *tree)

{

for(int i=0; i<tree->m_nextItems.size(); i++)

{

postOrderTraversal(tree->m_nextItems[i]);

}

if(tree->m_nextItems.size() == 0)

{

tree->m_Ydisp = - (tree->m_depth * MINSPACE_BLOCKS_Y);

tree->m_Xdisp = m_maximumXreached + MINSPACE_BLOCKS_X;

m_depthIntervals[tree->m_depth].m_allElementsForThisLevel.append(tree->m_Xdisp);

if(tree->m_Xdisp > m_maximumXreached)

m_maximumXreached = tree->m_Xdisp;

}

else

{

tree->m_Ydisp = - (tree->m_depth * MINSPACE_BLOCKS_Y);

if(tree->m_nextItems.size() == 1)

{

tree->m_Xdisp = tree->m_nextItems[0]->m_Xdisp;

}

else

{

qSort(m_depthIntervals[tree->m_depth+1].m_allElementsForThisLevel.begin(), m_depthIntervals[tree->m_depth+1].m_allElementsForThisLevel.end());

long min = m_depthIntervals[tree->m_depth+1].m_allElementsForThisLevel[0];

long max = m_depthIntervals[tree->m_depth+1].m_allElementsForThisLevel[m_depthIntervals[tree->m_depth+1].m_allElementsForThisLevel.size()-1];

tree->m_Xdisp = (max+min)/2;

}

m_depthIntervals[tree->m_depth].m_allElementsForThisLevel.append(tree->m_Xdisp);

if(tree->m_Xdisp > m_maximumXreached)

m_maximumXreached = tree->m_Xdisp;

m_depthIntervals[tree->m_depth+1].m_allElementsForThisLevel.clear();

}

m_diagramDataVector.append(tree);

}

long QGLDiagramWidget::findMaximumTreeDepth(dataToDraw *tree)

{

if(tree->m_nextItems.size() == 0)

return 0;

else

{

int maximumSubTreeDepth = 0;

for(int i=0; i<tree->m_nextItems.size(); i++)

{

long subTreeDepth = findMaximumTreeDepth(tree->m_nextItems[i]);

if(subTreeDepth > maximumSubTreeDepth)

maximumSubTreeDepth = subTreeDepth;

}

return maximumSubTreeDepth+1;

}

}

The steps are:

1. Explore the memory-stored tree in post-order (children first -> parents after)

2. Use the depth information for the Y coordinate and the children information for the X

3. The father is always centered between its children

The result is a tree displacement (screen of the application in its early stage)
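These steps can be condensed into a short, self-contained sketch. It is a simplified variant, not the gds code: leaves are placed left to right at a fixed spacing and each parent is centered between its first and last child, without the per-depth interval bookkeeping used above. `Node`, `layoutTree` and the spacing constants are hypothetical.

```cpp
#include <memory>
#include <vector>

// Hypothetical tree node with the computed layout position.
struct Node {
    std::vector<std::unique_ptr<Node>> children;
    double x = 0.0;
    double y = 0.0;
};

const double kSpacingX = 10.0;   // horizontal distance between leaves (assumed)
const double kSpacingY = 5.0;    // vertical distance between levels (assumed)

// Post-order layout: children are positioned before their parent.
// nextLeafX holds the X coordinate the next leaf will receive.
void layoutTree(Node& node, int depth, double& nextLeafX)
{
    for (auto& child : node.children)
        layoutTree(*child, depth + 1, nextLeafX);

    node.y = -depth * kSpacingY;             // deeper levels are drawn lower
    if (node.children.empty()) {
        node.x = nextLeafX;                  // leaves take the next free slot
        nextLeafX += kSpacingX;
    } else {
        // a parent sits midway between its first and last child
        node.x = (node.children.front()->x + node.children.back()->x) / 2.0;
    }
}
```

Because every leaf receives its own horizontal slot, subtrees can never overlap, at the cost of a wider drawing than a more compact layout would produce.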

There is more that could be said about the GL widget, but what has been covered so far is more than enough to understand the code.

Another big unit of the project is the edit mode window, mainly because of the number of controls and widgets it incorporates. The code is heavily commented here too, so we'll focus only on the parts that are needed to understand it fully. The view window's code is rather similar, but there are a great many small differences that would make merging the two a living hell (which is why two separate classes were created).

By default the edit mode window starts in level-one mode. Each level is identified by a value of an enum type, and each object (i.e. each block) has a dbDataStructure associated with it. The declarations of the core structures can be found in the "gdsdbreader.h" header:

#include <QDir>

#include "diagramwidget/qgldiagramwidget.h"

#define GDS_DIR "gdsdata"

enum level {LEVEL_ONE, LEVEL_TWO, LEVEL_THREE};

class dbDataStructure

{

public:

QString label;

quint32 depth;

quint32 userIndex;

QByteArray data;

quint64 uniqueID;

QVector<dbDataStructure*> nextItems;

QVector<quint32> nextItemsIndices;

dbDataStructure* father;

quint32 fatherIndex;

bool noFatherRoot;

QString fileName;

QByteArray firstLineData;

QVector<quint32> linesNumbers;

void *glPointer;

friend QDataStream& operator<<(QDataStream& stream, const dbDataStructure& myclass)

{

return stream << myclass.label << myclass.depth << myclass.userIndex << qCompress(myclass.data)

<< myclass.uniqueID << myclass.nextItemsIndices << myclass.fatherIndex << myclass.noFatherRoot

<< myclass.fileName << qCompress(myclass.firstLineData) << myclass.linesNumbers;

}

friend QDataStream& operator>>(QDataStream& stream, dbDataStructure& myclass)

{

stream >> myclass.label >> myclass.depth >> myclass.userIndex >> myclass.data

>> myclass.uniqueID >> myclass.nextItemsIndices >> myclass.fatherIndex >> myclass.noFatherRoot

>> myclass.fileName >> myclass.firstLineData >> myclass.linesNumbers;

myclass.data = qUncompress(myclass.data);

myclass.firstLineData = qUncompress(myclass.firstLineData);

return stream;

}

};

The structure provides fields to store each object's data (label, user index, unique ID used to build the documentation structure, compressed rich text data, etc.) along with data that is not meant to be stored on disk. That is why there are two stream operator overloads, which decide what is written to disk and what is not.

Three of the main functions of this unit are

void MainWindowEditMode::tryToLoadLevelDb(level lvl, bool returnToElement)

void MainWindowEditMode::saveCurrentLevelDb()

void MainWindowEditMode::saveEverythingOnThePanesToMemory()

Their code is quite long, but they perform roughly 70% of the work of the gds storage system.

The tryToLoadLevelDb() function loads the database files from the default gds directory (defined in "gdsdbreader.h") for the level we want to explore. The "returnToElement" parameter specifies whether the function should re-select the previously zoomed element when returning from a deeper level.

The saveCurrentLevelDb() and saveEverythingOnThePanesToMemory() functions save all the items' data to disk and to memory respectively (the latter by rebuilding an updated version of the dbDataStructure tree).

All the elements are stored in a dynamic QVector<dbDataStructure*> vector

QVector<dbDataStructure*> m_currentGraphElements;

dbDataStructure* m_selectedElement;

quint64 m_currentLevelOneID;

quint64 m_currentLevelTwoID;

The vector stores only the pointers to the elements; the connections between them (parent -> children) are stored in their dbDataStructure objects.

The m_currentLevelOneID and m_currentLevelTwoID variables keep track of the element on which a zoom is active in the first and second levels (the third level has no further zoom).
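Because raw pointers cannot be written to disk, a structure like this has to be re-linked after loading. The sketch below shows one way to do so, assuming that the stored child and parent indices are positions in the element vector. The article does not spell out what the indices refer to, so both that assumption and the names `Element` and `relink` are hypothetical.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical element: the index fields would be stored on disk, the pointer
// fields are rebuilt in memory.
struct Element {
    std::vector<std::size_t> childIndices;   // persistent
    std::size_t parentIndex = 0;             // persistent (ignored for roots)
    bool isRoot = true;                      // persistent
    std::vector<Element*> children;          // runtime only
    Element* parent = nullptr;               // runtime only
};

// Rebuild the parent/child pointers from the stored indices.
// Returns false if any index is out of range.
bool relink(std::vector<Element>& elements)
{
    for (Element& e : elements) {
        e.children.clear();
        e.parent = nullptr;
    }
    for (Element& e : elements) {
        for (std::size_t index : e.childIndices) {
            if (index >= elements.size())
                return false;
            e.children.push_back(&elements[index]);
        }
        if (!e.isRoot) {
            if (e.parentIndex >= elements.size())
                return false;
            e.parent = &elements[e.parentIndex];
        }
    }
    return true;
}
```

The pointers stay valid only as long as the vector is not resized, so in this sketch the linking has to happen after all elements have been loaded.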

Both edit and view windows use Qt's ui templates (similar to Visual Studio's DLGTEMPLATEEX wysiwyg editor) managed by Qt Creator.

The rich text area on the right is a textEditorWin object, which in turn is a subclass of QMainWindow. This is necessary to add toolbars, actions and complex controls to the base widget, a plain QTextEdit rich text editor. The code is rather straightforward and, apart from a number of small changes, resembles the rich text editor example shipped with the Qt SDK, so we won't describe it further.

The code area (for both edit and view windows) is a CodeEditorWidget (a QTextEdit subclass) with a CppHighlighter (a QSyntaxHighlighter subclass) attached to its document() and configured for standard C/C++ syntax highlighting. Along with the initialization settings, a system of signals and slots (a Qt-specific mechanism) provides a convenient way to link a line counter widget to the scrollbar events:

setReadOnly(true);

setAcceptRichText(false);

setLineWrapMode(QTextEdit::NoWrap);

connect(this, SIGNAL(textChanged()), this, SLOT(updateFriendLineCounter()));

QScrollBar *scroll1 = this->verticalScrollBar();

QScrollBar *scroll2 = m_lineCounter->verticalScrollBar();

connect((const QObject*)scroll1, SIGNAL(valueChanged(int)), (const QObject*)scroll2, SLOT(setValue(int)));

connect(this, SIGNAL(updateScrollBarValueChanged(int)), (const QObject*)scroll2, SLOT(setValue(int)));

The mouseReleaseEvent() override intercepts the block (the equivalent of a line in a plain-text context) on which the user clicked (in edit mode) in order to highlight a specific line of code, whose number is stored in the following vector:

QVector<quint32> m_selectedLines

Each node's associated code (if any) is stored in the following fields:

QString fileName;

QByteArray firstLineData;

QVector<quint32> linesNumbers;

Note that each code block is identified by the compressed data of its first line and by the numbers of the subsequent lines (lines after the first have their numbers stored relative to the first). This is a simple way to cope with the lack of a proper version control system, which would instead check for differences and try to merge versions. Inserting marker comments in the code could have been another solution, but since we believe the code should not be cluttered, the above approach was chosen.
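Relocating a block that has moved could then work as in the sketch below: if the remembered first line is no longer at its old position, the file is searched for the closest line with the same text, and the remaining lines follow at their stored relative offsets. This is only an illustration of the idea under those assumptions; `relocateFirstLine` and `resolveLines` are hypothetical names, and the actual gds algorithm may differ.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Find the line whose text equals firstLine and that lies closest to the old
// position. Returns -1 if no line of the file matches.
long relocateFirstLine(const std::vector<std::string>& fileLines,
                       const std::string& firstLine, std::size_t oldPosition)
{
    long best = -1;
    std::size_t bestDistance = 0;
    for (std::size_t i = 0; i < fileLines.size(); ++i) {
        if (fileLines[i] != firstLine)
            continue;
        std::size_t distance = (i > oldPosition) ? i - oldPosition : oldPosition - i;
        if (best < 0 || distance < bestDistance) {
            best = static_cast<long>(i);
            bestDistance = distance;
        }
    }
    return best;
}

// Turn the offsets stored relative to the first line into absolute line
// positions, dropping any that would fall outside the file.
std::vector<std::size_t> resolveLines(std::size_t firstLinePosition,
                                      const std::vector<std::size_t>& relativeOffsets,
                                      std::size_t lineCount)
{
    std::vector<std::size_t> result;
    if (firstLinePosition < lineCount)
        result.push_back(firstLinePosition);
    for (std::size_t offset : relativeOffsets)
        if (firstLinePosition + offset < lineCount)
            result.push_back(firstLinePosition + offset);
    return result;
}
```

A scheme like this copes with lines being inserted or removed above the block, but not with edits to the first line itself, which is where a real version control system would do better.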

A tricky part specific to the main edit window is the following:

void *MainWindowEditMode::GLWidgetNotifySelectionChanged(void *m_newSelection)

{

if(!m_lastSelectedHasBeenDeleted)

{

qWarning() << "GLWidgetNotifySelectionChanged.saveEverythingOnThePanesToMemory()";

saveEverythingOnThePanesToMemory();

}

if(m_swapRunning)

{

GLDiagramWidget->m_swapInProgress = true;

dbDataStructure *m_newSelectedElement;

long m_newSelectionIndex = -1;

long m_selectedElementIndex = -1;

int foundBoth = 0;

for(int i=0; i<m_currentGraphElements.size(); i++)

{

if(foundBoth == 2)

break;

if(m_currentGraphElements[i]->glPointer == m_newSelection)

{

m_newSelectedElement = m_currentGraphElements[i];

m_newSelectionIndex = i;

foundBoth++;

}

if(m_currentGraphElements[i]->glPointer == m_selectedElement->glPointer)

{

m_selectedElementIndex = i;

foundBoth++;

}

}

QByteArray m_temp = m_newSelectedElement->data;

m_newSelectedElement->data = m_selectedElement->data;

m_selectedElement->data = m_temp;

QString m_temp2 = m_newSelectedElement->fileName;

m_newSelectedElement->fileName = m_selectedElement->fileName;

m_selectedElement->fileName = m_temp2;

m_temp2 = m_newSelectedElement->label;

m_newSelectedElement->label = m_selectedElement->label;

m_selectedElement->label = m_temp2;

long m_temp3 = m_newSelectedElement->userIndex;

m_newSelectedElement->userIndex = m_selectedElement->userIndex;

m_selectedElement->userIndex = m_temp3;

m_temp = m_newSelectedElement->firstLineData;

m_newSelectedElement->firstLineData = m_selectedElement->firstLineData;

m_selectedElement->firstLineData = m_temp;

QVector<quint32> m_temp4 = m_newSelectedElement->linesNumbers;

m_newSelectedElement->linesNumbers = m_selectedElement->linesNumbers;

m_selectedElement->linesNumbers = m_temp4;

GLDiagramWidget->clearGraphData();

updateGLGraph();

GLDiagramWidget->calculateDisplacement();

GLDiagramWidget->m_swapInProgress = false;

}

else

{

for(int i=0; i<m_currentGraphElements.size(); i++)

{

if(m_currentGraphElements[i]->glPointer == m_newSelection)

{

m_selectedElement = m_currentGraphElements[i];

break;

}

}

}

qWarning() << "New element selected: " + m_selectedElement->label;

qWarning() << "GLWidgetNotifySelectionChanged.clearAllPanes() and loadSelectedElementDataInPanes()";

clearAllPanes();

loadSelectedElementDataInPanes();

if(m_swapRunning)

{

m_swapRunning = false;

ui->swapBtn->toggle();

return m_selectedElement->glPointer;

}

else

return NULL;

}

When an object is selected (color-picking mode - GLWidget) on the OpenGL diagram, the widget notifies its parent window (edit or view mode) that the selection has changed. The edit window, however, provides an additional functionality: element swapping. When the user presses the "Swap Element" button (which is a toggle button), the system records the currently selected item as the first swap item. Then, when the user selects another element, it is marked as the "second swap item" and the swap begins. Since the GLWidget knows nothing about this, the swap logic is handled by the edit window itself, and that is exactly what the function above does. If swap mode isn't active, the content of the panes is saved into memory, the newly selected graph element is looked up among the dbDataStructure elements, and the panes are reloaded with the newly selected element's data.
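The swap in the function above exchanges the content of the two elements while leaving their position in the graph untouched. A compact way to express the same idea is sketched below; the Element struct is a hypothetical, reduced version of dbDataStructure that uses std types, and fatherIndex is an assumed stand-in for the structural links that must not be swapped.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Hypothetical, reduced graph element: the payload fields exchanged by the
// swap, plus one structural field that has to stay where it is.
struct Element {
    std::string data;
    std::string fileName;
    std::string label;
    long userIndex = 0;
    std::string firstLineData;
    std::vector<unsigned> linesNumbers;
    int fatherIndex = -1;   // position in the tree: deliberately NOT swapped
};

// Exchanges the payload of two elements identified by their indices.
void swapPayload(std::vector<Element>& elements, std::size_t first, std::size_t second)
{
    assert(first < elements.size() && second < elements.size());
    if (first == second)
        return;   // nothing to do when the same element is picked twice

    Element& a = elements[first];
    Element& b = elements[second];
    std::swap(a.data, b.data);
    std::swap(a.fileName, b.fileName);
    std::swap(a.label, b.label);
    std::swap(a.userIndex, b.userIndex);
    std::swap(a.firstLineData, b.firstLineData);
    std::swap(a.linesNumbers, b.linesNumbers);
}
```

Checking both indices before touching any field also covers the case in which one of the two lookups fails, so no element is dereferenced unless it was actually found.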

Other tricky functions:

void MainWindowEditMode::on_deleteSelectedElementBtn_clicked()

This slot handles the "Delete Selected Element" action in three different ways:

- If the selected element is the root, the whole graph is deleted

- If the selected element isn't the root and has no children, it is simply removed

- If the selected element isn't the root but has children, the user is asked whether the system should delete the children along with their parent or assign them to their parent's parent by re-linking the pointers
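The three cases can be sketched as follows. This is only an illustration under assumptions: the Node struct, the ChildPolicy enum (which stands in for the user's answer to the prompt) and the ownership through std::unique_ptr are hypothetical and are not taken from the gds sources.

```cpp
#include <algorithm>
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical tree node: a father owns its children.
struct Node {
    std::string label;
    Node* father = nullptr;                        // non-owning back pointer
    std::vector<std::unique_ptr<Node>> children;   // owning links
};

// Stands in for the answer the user gives when the element has children.
enum class ChildPolicy { DeleteChildren, ReparentToGrandfather };

Node* addChild(Node& father, const std::string& label)
{
    std::unique_ptr<Node> child(new Node);
    child->label = label;
    child->father = &father;
    father.children.push_back(std::move(child));
    return father.children.back().get();
}

void deleteElement(std::unique_ptr<Node>& root, Node* selected, ChildPolicy policy)
{
    assert(root && selected);
    if (selected == root.get()) {   // case 1: deleting the root deletes the whole graph
        root.reset();
        return;
    }

    Node* father = selected->father;
    assert(father);                 // every non-root node has a father
    auto it = std::find_if(father->children.begin(), father->children.end(),
        [selected](const std::unique_ptr<Node>& c) { return c.get() == selected; });
    assert(it != father->children.end());

    // Case 3 (reparent): take the children away before the node is destroyed.
    std::vector<std::unique_ptr<Node>> orphans;
    if (policy == ChildPolicy::ReparentToGrandfather)
        orphans.swap(selected->children);

    // Case 2 (leaf) and case 3 (delete children): destroying the node also
    // destroys whatever children it still owns.
    father->children.erase(it);

    for (auto& orphan : orphans) {
        orphan->father = father;
        father->children.push_back(std::move(orphan));
    }
}
```

The orphans are moved out before the erase because appending to the father's list while the iterator is still in use would invalidate it.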

void MainWindowEditMode::on_addChildBlockBtn_clicked()

This slot handles the "Add Children Block" action. If the m_firstTimeGraphInCurrentLevel variable is set, the graph is empty and a root element (with no father) must be created; otherwise a child element is created and the selected element is set as its father.
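A minimal sketch of this branch is shown below. The Graph and Node structs are hypothetical, and the sketch additionally assumes that a freshly created root becomes the selected element, which the text above does not state.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical tree node: a father owns its children.
struct Node {
    std::string label;
    Node* father = nullptr;
    std::vector<std::unique_ptr<Node>> children;
};

// Hypothetical container for the state the slot works on.
struct Graph {
    std::unique_ptr<Node> root;
    Node* selected = nullptr;
    bool firstTimeGraphInCurrentLevel = true;   // mirrors m_firstTimeGraphInCurrentLevel
};

Node* addChildBlock(Graph& graph, const std::string& label)
{
    std::unique_ptr<Node> block(new Node);
    block->label = label;
    Node* raw = block.get();

    if (graph.firstTimeGraphInCurrentLevel) {
        // Empty graph: the new block is the root and has no father.
        graph.root = std::move(block);
        graph.selected = raw;                   // assumption: the new root gets selected
        graph.firstTimeGraphInCurrentLevel = false;
    } else {
        // Non-empty graph: the selected element becomes the father.
        assert(graph.selected);
        block->father = graph.selected;
        graph.selected->children.push_back(std::move(block));
    }
    return raw;
}
```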

Finally, the GLWidget provides functions to control the drawing process without the hassle of dealing with painting events:

void MainWindowViewMode::deferredPaintNow()

{

bool oldValue = GLDiagramWidget->m_swapInProgress;

GLDiagramWidget->m_swapInProgress = true;

GLDiagramWidget->clearGraphData();

updateGLGraph();

GLDiagramWidget->calculateDisplacement();

GLDiagramWidget->m_swapInProgress = oldValue;

GLDiagramWidget->changeSelectedElement(m_selectedElement->glPointer);

loadSelectedElementDataInPanes();

}

First a call to clearGraphData() is made; this clears the block vectors in the GLWidget and requests a repaint. Then a calculateDisplacement() call initializes the post-order traversal and the displacement calculation. Finally, changeSelectedElement() is called (if the element that needs to be selected is different from the root) to select another element, which in turn instructs the painting function to use a different gradient texture to render the selected element.
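The body of calculateDisplacement() is not shown in this section, so the following is only a generic sketch of how a post-order traversal can assign displacements in a tree: leaves are placed left to right and every father is centred above its first and last child. The LayoutNode struct, the function name and the spacing parameters are all hypothetical.

```cpp
#include <cassert>
#include <vector>

// Hypothetical layout node; xDisp and yDisp play the role of m_Xdisp and m_Ydisp.
struct LayoutNode {
    std::vector<LayoutNode*> children;
    float xDisp = 0.0f;
    float yDisp = 0.0f;
};

// Post-order traversal: the children are placed first, then the father.
// nextLeafX is the x coordinate handed to the next leaf that is visited.
void calculateDisplacementSketch(LayoutNode& node, int depth, float& nextLeafX,
                                 float xSpacing, float ySpacing)
{
    for (LayoutNode* child : node.children) {
        assert(child);
        calculateDisplacementSketch(*child, depth + 1, nextLeafX, xSpacing, ySpacing);
    }

    node.yDisp = -static_cast<float>(depth) * ySpacing;   // deeper levels are drawn lower
    if (node.children.empty()) {
        node.xDisp = nextLeafX;                            // leaves fill the row left to right
        nextLeafX += xSpacing;
    } else {
        // The children are already placed, so the father can be centred above them.
        node.xDisp = (node.children.front()->xDisp + node.children.back()->xDisp) / 2.0f;
    }
}
```

The post-order visit is what makes the centring possible: by the time a father is processed, the horizontal extent of its whole subtree is already known.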

All the connections between elements are created automatically in the drawConnectionLinesBetweenBlocks() function of the GLWidget, so there's no need for the main windows to call it explicitly:

void QGLDiagramWidget::drawConnectionLinesBetweenBlocks()

{

ShaderProgramPicking->bind();

GLuint uMVMatrix = glGetUniformLocation(ShaderProgramPicking->programId(), "uMVMatrix");

GLuint uPMatrix = glGetUniformLocation(ShaderProgramPicking->programId(), "uPMatrix");

float gl_temp_data[16];

for(int i=0; i<16; i++)

{

gl_temp_data[i]=(float)gl_projection.data()[i];

}

glUniformMatrix4fv(uPMatrix, 1, GL_FALSE, &gl_temp_data[0]);

for(int i=0; i<16; i++)

{

gl_temp_data[i]=(float)gl_view.data()[i];

}

glUniformMatrix4fv(uMVMatrix, 1, GL_FALSE, &gl_temp_data[0]);

GLuint uPickingColor = glGetUniformLocation(ShaderProgramPicking->programId(), "uPickingColor");

glUniform3f(uPickingColor, 1.0f,0.0f,0.0f);

if(m_diagramDataVector.size() == 0 || m_diagramDataVector.size() == 1)

return;

QVector<dataToDraw*>::iterator itr = m_diagramDataVector.begin();

struct Point

{

float x,y,z;

Point(float x,float y,float z)

: x(x), y(y), z(z)

{}

};

std::vector<Point> vertexData;

while(itr != m_diagramDataVector.end())

{

QVector3D baseOrig(0.0,0.0,0.0);

QMatrix4x4 modelOrigin = gl_model;

modelOrigin.translate((qreal)(-(*itr)->m_Xdisp),(qreal)((*itr)->m_Ydisp),0.0);

baseOrig = modelOrigin * baseOrig;

for(int i=0; i< (*itr)->m_nextItems.size(); i++)

{

dataToDraw* m_temp = (*itr)->m_nextItems[i];

QVector3D baseDest(0.0, 0.0, 0.0);

QMatrix4x4 modelDest = gl_model;

modelDest.translate((qreal)(-m_temp->m_Xdisp),(qreal)(m_temp->m_Ydisp),0.0);

baseDest = modelDest * baseDest;

vertexData.push_back( Point((float)baseOrig.x(), (float)baseOrig.y(), (float)baseOrig.z()) );

vertexData.push_back( Point((float)baseDest.x(), (float)baseDest.y(), (float)baseDest.z()) );

}

itr++;

}

GLuint vao, vbo;

glGenVertexArrays(1, &vao);

glBindVertexArray(vao);

glGenBuffers(1, &vbo);

glBindBuffer(GL_ARRAY_BUFFER, vbo);

size_t numVerts = vertexData.size();

glBufferData(GL_ARRAY_BUFFER,

sizeof(Point)*numVerts,

&vertexData[0],

GL_STATIC_DRAW);

glEnableVertexAttribArray(0);

glVertexAttribPointer(0,

3,

GL_FLOAT,

GL_FALSE,

sizeof(Point),

(char*)0 + 0*sizeof(GLfloat));

glDrawArrays(GL_LINES, 0, numVerts);

glBindVertexArray(0);

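glBindBuffer(GL_ARRAY_BUFFER, 0);

// The VAO and the VBO are created on every call, so they are released here to avoid leaking GL objects

glDeleteBuffers(1, &vbo);

glDeleteVertexArrays(1, &vao);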

}

Connectors are drawn simply by binding the basic color-picking shaders (without textures or lighting calculations) and setting up a VAO (Vertex Array Object) and a VBO (Vertex Buffer Object) to store the vertices to connect with lines. The role of the VBO is to hold the vertex data needed to perform the operation (which is carried out by the associated shaders), while the VAO specifies how the data are laid out in the VBO. These are, however, basic OpenGL operations.
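The CPU-side part of this function, building the vertex list that the VBO receives, can be isolated as in the sketch below. It assumes an identity model matrix, so the translation by (-m_Xdisp, m_Ydisp, 0) becomes the vertex position directly; the Block struct and the function name are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Tightly packed position, matching the 3 x GL_FLOAT attribute layout.
struct Point {
    float x, y, z;
};

// Hypothetical, reduced version of dataToDraw.
struct Block {
    float xDisp, yDisp;
    std::vector<const Block*> nextItems;   // blocks this one is connected to
};

// GL_LINES consumes vertices two at a time, so every connector contributes
// one (origin, destination) pair.
std::vector<Point> buildConnectorVertices(const std::vector<const Block*>& blocks)
{
    std::vector<Point> vertices;
    for (const Block* block : blocks) {
        assert(block);
        for (const Block* next : block->nextItems) {
            assert(next);
            vertices.push_back(Point{-block->xDisp, block->yDisp, 0.0f});
            vertices.push_back(Point{-next->xDisp, next->yDisp, 0.0f});
        }
    }
    return vertices;
}
```

The resulting vector is what would be handed to glBufferData (sizeof(Point) times the number of vertices) and its size is the count passed to glDrawArrays. Checking that it is not empty before taking the address of its first element avoids touching an empty buffer when no block has connections.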

This paper's goal was to present a new software documentation approach implemented through an experimental concept application - gds. Software engineering methodologies are relatively new compared to those of other engineering fields, so many improvements and changes may come in the future.

To be completely honest, this work also helped me learn OpenGL and strengthen my Qt knowledge, besides realizing an old idea I had been thinking about for a long time.