Introduction

Caching frequently used data greatly increases the scalability of your application, since you avoid repeated queries to the database, the file system or web services. When objects are cached, they can be retrieved from the cache, which is a lot faster and more scalable than loading them from a database, file or web service. However, implementing caching is tricky and monotonous when you have to do it for many classes. Your data access layer fills up with code that caches objects and collections, updates the cache when objects change or get deleted, expires collections when a contained object changes or gets deleted, and so on. The more code you write, the more maintenance overhead you add. Here I will show you how to make caching a lot easier using LINQ to SQL and my library AspectF. It’s a library that helps you get rid of thousands of lines of repeated code from a medium-sized project and eliminates plumbing code (logging, error handling, retrying, etc.) completely.

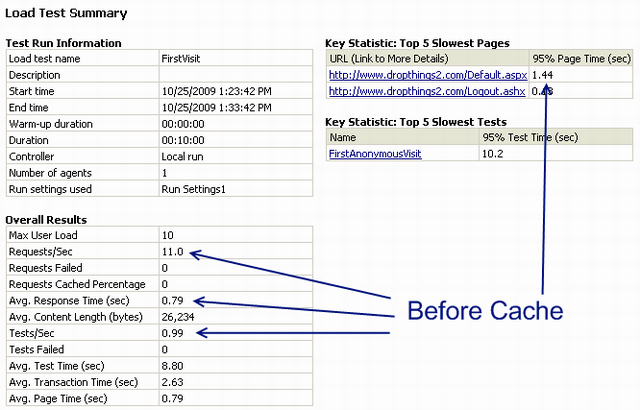

Here’s an example of how caching significantly improves the performance and scalability of an application. Dropthings, my open source Web 2.0 AJAX portal, can serve only about 11 requests/sec without caching, with 10 concurrent users on a dual core 64-bit PC. Here data is loaded from the database as well as from external sources. The average page response time is 1.44 sec.

After implementing caching, it became significantly faster, serving around 32 requests/sec. Page load time also dropped significantly, to only 0.41 sec. During the load test, CPU utilization was around 60%.

It clearly shows the difference caching can make to your application. If you are suffering from poor page load performance and high CPU or disk activity on your database and application servers, then caching the top 5 most frequently used objects in your application is likely to relieve much of that problem right away. It’s a quick win that makes your application a lot faster without complex re-engineering.

Common Approaches to Caching Objects and Collections

Sometimes caching can be simple, for example when caching a single object which does not belong to a collection and does not have child collections that are cached separately. In such a case, the logic is simple:

- Is the object being requested already in cache?
  - Yes, then serve it from cache.
  - No, then load it from database and then cache it.
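
The steps above can be sketched in a few lines. This is only an illustration under stated assumptions: `SimpleCache` and `GetOrLoad` are hypothetical names, a plain `Dictionary` stands in for a real cache, and the sketch is not thread-safe.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper (not part of AspectF): cache-aside lookup for one object.
public static class SimpleCache
{
    // A plain dictionary stands in for the real cache in this sketch.
    private static readonly Dictionary<string, object> _store =
        new Dictionary<string, object>();

    public static T GetOrLoad<T>(string key, Func<T> loadFromSource) where T : class
    {
        // Is the object already in cache? Then serve it from there.
        if (_store.TryGetValue(key, out object cached) && cached is T hit)
            return hit;

        // Otherwise load it from the source and cache it for the next call.
        T loaded = loadFromSource();
        if (loaded != null)
            _store[key] = loaded;
        return loaded;
    }
}
```

A caller would pass the cache key and a delegate that performs the database query; the delegate runs only on a cache miss.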

On the other hand, when you are dealing with a cached collection where each item in the collection is also cached separately, the caching logic is not so simple. For example, say you have cached a User collection. But each User object is also cached separately because you need to load individual User objects frequently. Then the caching logic gets more complicated:

- Is the collection being requested already in cache?
  - Yes. Get the collection. For each object in the collection:
    - Is that object individually available in cache?
      - Yes, get the individual object from cache. Update it in the collection.
      - No, discard the whole collection from cache. Go to the next step.
  - No. Load the collection from source (e.g. database) and cache each item in the collection separately. Then cache the collection.

You might wonder why we need to read each individual item from cache, and why we need to cache each item separately, when the whole collection is already in cache. There are two scenarios you need to address when you cache a collection and the individual items in that collection are also cached separately:

- An individual item has been updated and the updated item is in cache. But the collection, which contains all those individual items has not been refreshed. So, if you get the collection from cache and return as it is, you will get stale individual items inside that collection. This is why each item needs to be retrieved from cache separately.

- An item in the collection may have been force expired in cache. For example, something changed in the object or the object has been deleted. So, you expired it in cache so that on next retrieval it comes from database. If you load the collection from cache only, then the collection will contain the stale object.

If you are doing it the conventional way, you will be writing a lot of repeated code in your data access layer. For example, say you are loading a Page collection that belongs to a user. If you want to cache the collection of Page for a user as well as cache individual Page objects so that each Page can be retrieved from Cache directly, then you need to write code like this:

    public List<Page> GetPagesOfUserOldSchool(Guid userGuid)
    {
        ICache cache = Services.Get<ICache>();
        string cacheKey = CacheSetup.CacheKeys.PagesOfUser(userGuid);
        var cachedPages = cache.Get(cacheKey) as List<Page>;

        // A missing collection must count as stale, otherwise null is returned.
        bool isCacheStale = (cachedPages == null);
        if (cachedPages != null)
        {
            var resultantPages = new List<Page>();
            foreach (Page cachedPage in cachedPages)
            {
                // Prefer the individually cached copy; it may be newer.
                var individualPageInCache = cache.Get(
                    CacheSetup.CacheKeys.PageId(cachedPage.ID)) as Page;
                if (null == individualPageInCache)
                {
                    // An item was expired, so the whole collection is stale.
                    isCacheStale = true;
                }
                else
                {
                    resultantPages.Add(individualPageInCache);
                }
            }
            cachedPages = resultantPages;
        }

        if (isCacheStale)
        {
            // Reload from the database, then cache each page and the collection.
            var pagesOfUser = _database.GetList<Page, Guid>(...);
            pagesOfUser.Each(page =>
            {
                page.Detach();
                cache.Add(CacheSetup.CacheKeys.PageId(page.ID), page);
            });
            cache.Add(cacheKey, pagesOfUser);
            return pagesOfUser;
        }
        else
        {
            return cachedPages;
        }
    }

Imagine writing this kind of code over and over again for each and every entity that you want to cache. This becomes a maintenance nightmare as your project grows.

Here’s how you could do it using AspectF:

    public List<Page> GetPagesOfUser(Guid userGuid)
    {
        return AspectF.Define
            .CacheList<Page, List<Page>>(Services.Get<ICache>(),
                CacheSetup.CacheKeys.PagesOfUser(userGuid),
                page => CacheSetup.CacheKeys.PageId(page.ID))
            .Return<List<Page>>(() =>
                _database.GetList<Page, Guid>(...).Select(p => p.Detach()).ToList());
    }

Instead of 42 lines of code, you can do it in 5 lines!

Here’s how it works. The CacheList function takes three arguments: an implementation of the ICache interface, which abstracts the underlying cache; the key under which the collection is stored in cache; and a delegate that returns the cache key for each item in the collection. Here’s what it does:

- Check if the collection exists in cache.
  - If yes, then for each item in the collection:
    - Get the key for the item by calling the delegate.
    - Check if the item exists in cache.
      - If yes, keep it.
      - If no, then the collection is stale and needs to be refreshed.
  - If no (or if the collection turned out to be stale), then:
    - Execute the code in the Return function's argument. The code returns the collection from the source (database, file, web service, ...).
    - Store each item in the collection in cache separately.
    - Cache the collection.
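
To make these steps concrete, here is a minimal sketch of how such a helper could be written. It is not AspectF's actual implementation: `CacheListSketch` and `GetList` are hypothetical names, and an `IDictionary<string, object>` stands in for ICache so that the example compiles on its own.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical sketch; AspectF's real CacheList may be implemented differently.
public static class CacheListSketch
{
    public static List<T> GetList<T>(
        IDictionary<string, object> cache,   // stand-in for ICache
        string listKey,                      // key of the cached collection
        Func<T, string> itemKey,             // produces the key of each item
        Func<List<T>> loadFromSource) where T : class
    {
        if (cache.TryGetValue(listKey, out object value) && value is List<T> cachedList)
        {
            var fresh = new List<T>(cachedList.Count);
            bool stale = false;
            foreach (T item in cachedList)
            {
                // Prefer the individually cached copy; it may be newer.
                if (cache.TryGetValue(itemKey(item), out object o) && o is T cachedItem)
                    fresh.Add(cachedItem);
                else
                {
                    // An item is missing, so the collection is stale.
                    stale = true;
                    break;
                }
            }
            if (!stale) return fresh;
        }

        // Collection missing or stale: reload, cache each item, then the list.
        List<T> loaded = loadFromSource();
        foreach (T item in loaded)
            cache[itemKey(item)] = item;
        cache[listKey] = loaded;
        return loaded;
    }
}
```

Rebuilding the list from the individually cached items is what keeps an updated item from being served stale out of an older cached collection.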

Just like CacheList, there’s Cache which caches a single item in cache using the simple logic explained earlier. For example, if you want to cache a single Page, you do this:

    public Page GetPageById(int pageId)
    {
        return AspectF.Define.Cache<Page>(Services.Get<ICache>(),
                CacheSetup.CacheKeys.PageId(pageId))
            .Return<Page>(() =>
                _database.GetSingle<Page, int>(...));
    }

If the key specified here does not exist in cache, it calls the code specified in the Return function's argument and caches whatever your code returns. Any subsequent call to this method returns the item from cache (if it still exists) and does not execute the code in Return’s argument.

The ICache interface is defined as:

    public interface ICache
    {
        void Add(string key, object value);
        void Add(string key, object value, TimeSpan timeout);
        bool Contains(string key);
        void Flush();
        object Get(string key);
        void Remove(string key);
        void Set(string key, object value);
        void Set(string key, object value, TimeSpan timeout);
    }

You need to implement this interface based on whatever caching mechanism you want to use. For example, I have used it for Enterprise Library Caching Application block this way:

    using System;
    using Microsoft.Practices.EnterpriseLibrary.Caching;
    using Microsoft.Practices.EnterpriseLibrary.Caching.Expirations;

    class EntlibCacheResolver : ICache
    {
        private readonly static ICacheManager _CacheManager =
            CacheFactory.GetCacheManager("DropthingsCache");

        #region ICache Members

        public object Get(string key)
        {
            return _CacheManager.GetData(key);
        }

        public void Put(string key, object item)
        {
            _CacheManager.Add(key, item);
        }

        public void Add(string key, object value, TimeSpan timeout)
        {
            // Expire the item at an absolute time, "timeout" from now.
            _CacheManager.Add(key, value, CacheItemPriority.Normal, null,
                new AbsoluteTime(DateTime.Now.Add(timeout)));
        }

        public void Add(string key, object value)
        {
            _CacheManager.Add(key, value);
        }

        public bool Contains(string key)
        {
            return _CacheManager.Contains(key);
        }

        public void Flush()
        {
            _CacheManager.Flush();
        }

        public void Remove(string key)
        {
            _CacheManager.Remove(key);
        }

        public void Set(string key, object value, TimeSpan timeout)
        {
            // Replace any existing entry with the new value.
            if (_CacheManager.Contains(key))
                _CacheManager.Remove(key);
            this.Add(key, value, timeout);
        }

        public void Set(string key, object value)
        {
            if (_CacheManager.Contains(key))
                _CacheManager.Remove(key);
            this.Add(key, value);
        }

        #endregion
    }

This class uses the Enterprise Library Caching Application block.
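
For unit tests or small applications, the same interface could also be backed by a simple in-memory store. The following is a sketch under assumptions, not code from Dropthings: `InMemoryCache` is a hypothetical class, Add is assumed to overwrite an existing entry just like Set, and expired entries are only evicted lazily when they are read. The ICache interface is repeated so that the example compiles on its own.

```csharp
using System;
using System.Collections.Concurrent;

// Repeated from the article so that this sketch is self-contained.
public interface ICache
{
    void Add(string key, object value);
    void Add(string key, object value, TimeSpan timeout);
    bool Contains(string key);
    void Flush();
    object Get(string key);
    void Remove(string key);
    void Set(string key, object value);
    void Set(string key, object value, TimeSpan timeout);
}

// Hypothetical in-memory implementation with absolute expiration.
public class InMemoryCache : ICache
{
    // Each entry stores the value and the UTC time at which it expires.
    private readonly ConcurrentDictionary<string, Tuple<object, DateTime>> _items =
        new ConcurrentDictionary<string, Tuple<object, DateTime>>();

    // Assumption: Add overwrites an existing entry, the same as Set.
    public void Add(string key, object value) => Set(key, value);
    public void Add(string key, object value, TimeSpan timeout) => Set(key, value, timeout);

    public void Set(string key, object value) =>
        _items[key] = Tuple.Create(value, DateTime.MaxValue);

    public void Set(string key, object value, TimeSpan timeout) =>
        _items[key] = Tuple.Create(value, DateTime.UtcNow.Add(timeout));

    public bool Contains(string key) => Get(key) != null;

    public void Flush() => _items.Clear();

    public void Remove(string key) => _items.TryRemove(key, out _);

    public object Get(string key)
    {
        if (!_items.TryGetValue(key, out var entry)) return null;
        if (entry.Item2 > DateTime.UtcNow) return entry.Item1;
        _items.TryRemove(key, out _);   // expired: evict lazily on read
        return null;
    }
}
```

Because the data access code depends only on ICache, swapping this class in for EntlibCacheResolver should not require changes to the caching logic itself.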

Caching LINQ to SQL Objects

Caching LINQ to SQL entities requires some plumbing. First, you have to set SerializationMode = Unidirectional on the DataContext so that the entity classes generated by LINQ to SQL are decorated with the [DataContract] and [DataMember] attributes and can be serialized. You do this by opening the data context in the Visual Studio designer, going to the properties of the data context (not of any of the entities) and changing the SerializationMode.

Second, you have to implement a Detach method. You need to declare a partial class for each LINQ to SQL entity and create a Detach method which resets all referenced objects and collections and clears the event listeners so that change tracking is turned off. For example, I have a data context where aspnet_User references several other classes. So, I have to clear them all.

    public partial class aspnet_User
    {
        #region Methods

        // Returns the entity itself so that the call can be chained,
        // as in Select(p => p.Detach()) elsewhere in this article.
        public aspnet_User Detach()
        {
            // Drop the listeners used by the change tracker.
            this.PropertyChanged = null;
            this.PropertyChanging = null;

            // Reset every referenced entity and collection.
            this._Pages = new EntitySet<Page>(new Action<Page>(this.attach_Pages),
                new Action<Page>(this.detach_Pages));
            this._UserSetting = default(EntityRef<UserSetting>);
            this._Tokens = new EntitySet<Token>(new Action<Token>(this.attach_Tokens),
                new Action<Token>(this.detach_Tokens));
            this._aspnet_UsersInRoles = new EntitySet<aspnet_UsersInRole>(
                new Action<aspnet_UsersInRole>(this.attach_aspnet_UsersInRoles),
                new Action<aspnet_UsersInRole>(this.detach_aspnet_UsersInRoles));
            this._RoleTemplates = new EntitySet<RoleTemplate>(
                new Action<RoleTemplate>(this.attach_RoleTemplates),
                new Action<RoleTemplate>(this.detach_RoleTemplates));

            return this;
        }

        #endregion Methods
    }

Here you clear the event listeners because they are used by the object change tracker. When the aspnet_User objects are serialized for caching, they will have no reference to the change tracker. Next, you have to clear all references to other entities because those references are not serializable.

Finally, you need to turn off the deferred loading feature of DataContext, which lazily loads referenced objects and collections when you access them. This won’t work when an object has been loaded from cache and has no association with any DataContext. To disable deferred loading, create the DataContext with this option turned off:

    var context = new DropthingsDataContext(GetConnectionString());
    context.DeferredLoadingEnabled = false;

Now that you have cleared all referenced entities, they will not be stored in the cache along with the object. So, when you retrieve an object from cache, you have to load its referenced entities separately. As a result, when you get aspnet_User from cache, do not expect the aspnet_UsersInRoles collection to be already populated or to be loaded on demand by the LINQ to SQL lazy loading feature.

Handling Update and Delete Scenarios

Caching objects and collections requires careful design. When you cache an object, you need to ensure it is updated or expired when the object changes, so that future requests for the same object get the latest version. So, whenever you call update or delete on some entity, you need to deal with the cache as well.

    public void Update(UserSetting userSetting)
    {
        _database.UpdateObject<UserSetting>(...);
        RemoveUserSettingCacheForUser(userSetting);
    }

    private void RemoveUserSettingCacheForUser(UserSetting userSetting)
    {
        _cacheResolver.Remove(
            CacheSetup.CacheKeys.UserSettingByUserGuid(userSetting.UserId));
    }

Here you see that when you update an object in the database, you remove the item from cache so that the next Get call loads a fresh object from the database.

Expiring Dependent Objects and Collections in Cache

If you have objects that are also part of a cached collection, for example a Page collection where the collection is cached and individual Page objects are also cached separately, you need to handle the expiration or removal of every cached collection that contains the Page object. For example, say you cached a collection of Page for a user. Now the user adds a new Page object. The cached collection does not contain it yet. In this case, if you get the cached collection of Page for the user, you won’t get the new Page that the user just added. Similarly, if the user deletes a page, the cached collection still contains a Page that no longer exists. So, you need to expire the collection in cache.

    public void Delete(Page page)
    {
        RemovePageIdDependentItems(page.ID);
        _database.Delete<Page>(DropthingsDataContext.SubsystemEnum.Page, page);
    }

    public Page Insert(Action<Page> populate)
    {
        var newPage = _database.Insert<Page>(
            DropthingsDataContext.SubsystemEnum.Page, populate);
        RemoveUserPagesCollection(newPage.ID);
        return newPage.Detach();
    }

Here you see, when a new page is inserted or a page is deleted, any cache entry that’s referring to that page is removed from cache.
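
The bodies of the removal helpers are not shown above. As a rough illustration of the idea only, the sketch below removes both the individual item and the collection that contains it. Everything in it is hypothetical: the class `PageCacheInvalidator`, the method signatures, and the key formats are assumptions, and an `IDictionary<string, object>` stands in for ICache.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical sketch; the helpers used in the article may work differently.
public class PageCacheInvalidator
{
    private readonly IDictionary<string, object> _cache;   // stand-in for ICache

    public PageCacheInvalidator(IDictionary<string, object> cache)
    {
        _cache = cache;
    }

    // Assumed key formats, in the style of functions that build cache keys.
    public static string PageIdKey(int pageId) => "PageId." + pageId;
    public static string PagesOfUserKey(Guid userGuid) => "PagesOfUser." + userGuid;

    // A page changed or was deleted: drop the page and its owner's page list.
    public void RemovePageDependentItems(int pageId, Guid ownerGuid)
    {
        _cache.Remove(PageIdKey(pageId));
        RemoveUserPagesCollection(ownerGuid);
    }

    // A page was added: only the owner's cached page list is out of date.
    public void RemoveUserPagesCollection(Guid ownerGuid)
    {
        _cache.Remove(PagesOfUserKey(ownerGuid));
    }
}
```

Removing the collection entry forces the next read to reload the list from the database, at which point each page is cached again.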

Handling Objects that are Cached with Multiple Keys

Frequently used objects are sometimes cached more than once using different keys. For example, a User object is cached using UserGuid and UserName because you need to get a user by UserName and by UserGuid frequently. Then the challenge is to expire all such cached objects when something changes on a User.

Here’s how I do it:

    public void RemoveUserFromCache(aspnet_User user)
    {
        CacheSetup.CacheKeys.UserCacheKeys(user).Each(cacheKey =>
            Services.Get<ICache>().Remove(cacheKey));
    }

Here, the method UserCacheKeys returns all possible cache keys for the user. Each key is removed from the cache. Thus all instances of an object get removed from cache.

    public static string UserFromUserName(string userName)
    {
        return "UserFromUserName." + userName;
    }

    public static string UserFromUserGuid(Guid userGuid)
    {
        return "UserFromUserGuid." + userGuid.ToString();
    }

    public static string[] UserCacheKeys(aspnet_User user)
    {
        return new string[] {
            UserFromUserName(user.UserName),
            UserFromUserGuid(user.UserId)
        };
    }

I always use functions to produce cache keys and declare these functions in a class where I deal with all Cached object keys.

Avoid Database Query Optimizations When You Cache Sets of Data

In general, you always try to optimize database queries so that only what’s absolutely necessary is loaded from the database. For example, sometimes you load all pages of a user, and sometimes you load the pages of a user that match a certain filter. So, you create two different queries: one loads all pages of a user and the other loads only the pages that match some additional criteria. This is the best practice, since you do not want to load all pages of a user and then filter them in the business layer. That would waste database resources and data transfer over the network.

When you implement caching, however, you are caching the collection of pages of a user. So, the superset of pages is already cached. You can easily retrieve that superset, filter it and return the subset you need. There’s no need to cache “all” pages of a user and the “filtered” pages of a user separately. That would be a waste of precious cache space. Since retrieving all pages of a user from cache is a lot faster than retrieving them from the database, you can avoid caching them separately. For example:

    public List<Page> GetPagesOfUser(Guid userGuid)
    {
        return AspectF.Define
            .CacheList<Page, List<Page>>(_cacheResolver,
                CacheSetup.CacheKeys.PagesOfUser(userGuid),
                page => CacheSetup.CacheKeys.PageId(page.ID))
            .Return<List<Page>>(() =>
                _database.GetList<Page, Guid>(...)
                    .Select(p => p.Detach()).ToList());
    }

    public List<Page> GetLockedPagesOfUser(Guid userGuid, bool isDownForMaintenenceMode)
    {
        // Filter the cached superset in memory instead of querying again.
        return this.GetPagesOfUser(userGuid)
            .Where(page =>
                page.IsLocked && page.IsDownForMaintenance == isDownForMaintenenceMode)
            .ToList();
    }

Here you see that GetLockedPagesOfUser reuses the GetPagesOfUser function. Thus the pages of a user get cached, and any further filtering is done on the cached collection. If I were not caching, I would have written a separate query to load only the locked pages of a user and would not have reused GetPagesOfUser here, because it would be a waste to get all the pages and then filter them on the client side. But when the cache is in place, I can skip implementing all these filtered queries separately and end up with less code in the data access layer.

Conclusion

Caching greatly improves the performance and scalability of your application at the cost of maintainability and added complexity in design and development. You have to cache objects keeping all expiration scenarios in mind. Otherwise your app ends up showing stale data and producing incorrect results. Moreover, if you aren’t using a library like AspectF, you end up repeating code that quickly becomes a maintenance nightmare. Using AspectF, you can greatly simplify the caching implementation and make your app faster and more scalable with far fewer maintenance challenges.

History

- 1st November, 2009: Initial post